GBDT Parameter Tuning

Summary:

This post focuses on how to use GBDT and how to tune its parameters. The feature engineering is kept fairly simple.

Feature engineering:

- City这个变量已经被我舍弃了,因为有太多种类了。

- DOB转为Age|DOB,舍弃了DOB

- 创建了

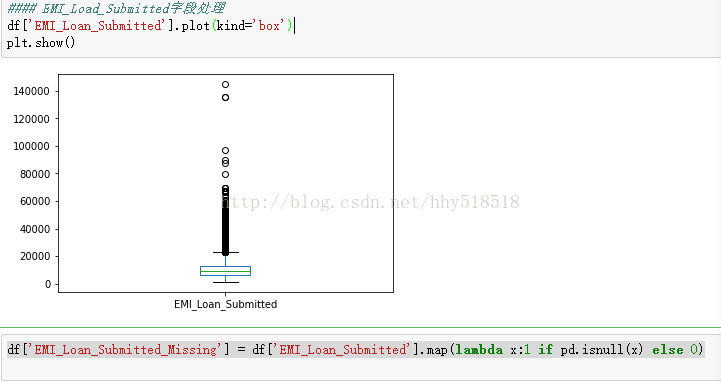

EMI_Loan_Submitted_Missing这个变量,当EMI_Loan_Submitted变量值缺失时它的值为1,否则为0。然后舍弃了EMI_Loan_Submitted。 - EmployerName的值也太多了,我把它也舍弃了

- Existing_EMI的缺失值被填补为0(中位数),因为只有111个缺失值

- 创建了

Interest_Rate_Missing变量,类似于#3,当Interest_Rate有值时它的值为0,反之为1,原来的Interest_Rate变量被舍弃了 - Lead_Creation_Date也被舍弃了,因为对结果看起来没什么影响

- 用

Loan_Amount_Applied和Loan_Tenure_Applied的中位数填补了缺失值 - 创建了

Loan_Amount_Submitted_Missing变量,当Loan_Amount_Submitted有缺失值时为1,反之为0,原本的Loan_Amount_Submitted变量被舍弃 - 创建了

Loan_Tenure_Submitted_Missing变量,当Loan_Tenure_Submitted有缺失值时为1,反之为0,原本的Loan_Tenure_Submitted变量被舍弃 - 舍弃了LoggedIn,和Salary_Account

- 创建了

Processing_Fee_Missing变量,当Processing_Fee有缺失值时为1,反之为0,原本的Processing_Fee变量被舍弃 - Source-top保留了2个,其他组合成了不同的类别

- 对一些变量采取了数值化和独热编码(One-Hot-Coding)操作
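All of the *_Missing variables above follow one pattern: add a 0/1 column recording whether the original value was missing, then drop the original column. A minimal pandas sketch of that pattern; the helper name `add_missing_flag` and the toy values are hypothetical, not taken from the original code:

```python
import numpy as np
import pandas as pd


def add_missing_flag(df: pd.DataFrame, col: str) -> pd.DataFrame:
    """Replace `col` with a 0/1 column `<col>_Missing` (1 = value was missing)."""
    out = df.copy()
    out[col + "_Missing"] = out[col].isnull().astype(int)
    return out.drop(columns=col)


# Tiny made-up frame for illustration
demo = pd.DataFrame({"Interest_Rate": [13.25, np.nan, 20.0, np.nan]})
flagged = add_missing_flag(demo, "Interest_Rate")
print(flagged["Interest_Rate_Missing"].tolist())  # [0, 1, 0, 1]
```

Vectorised `isnull()` does the same job as the row-by-row `map(lambda x: 1 if pd.isnull(x) else 0)` used in the code later in this post.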

Combining the test data with the training data:

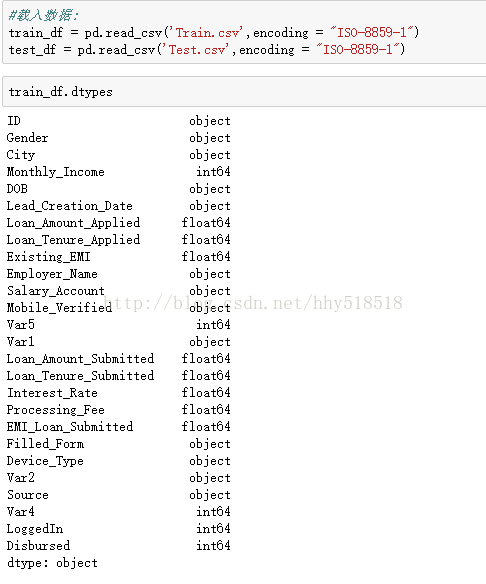

# Load the data
import pandas as pd

train_df = pd.read_csv('Train.csv', encoding="ISO-8859-1")
test_df = pd.read_csv('Test.csv', encoding="ISO-8859-1")
# train_df['source'] = 'train'
# test_df['source'] = 'test'
df = pd.concat([train_df, test_df], ignore_index=True)
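After processing the combined frame, the rows must be split back into train and test. With `ignore_index=True` the combined index runs 0..n-1, so the original `train_df.index` / `test_df.index` no longer identify the test rows. A minimal sketch that splits by position instead, using made-up toy frames in place of Train.csv and Test.csv:

```python
import pandas as pd

# Toy stand-ins for Train.csv / Test.csv (values are made up)
train_df = pd.DataFrame({"ID": ["a", "b", "c"], "Age": [30, 41, 25], "Disbursed": [0, 1, 0]})
test_df = pd.DataFrame({"ID": ["d", "e"], "Age": [37, 52]})

n_train = len(train_df)
df = pd.concat([train_df, test_df], ignore_index=True)  # index is now 0..4

# ... feature engineering on df goes here ...

# Split back by position: the test rows are at n_train..end.
# df.loc[test_df.index] would instead return the first training rows (index 0, 1).
train_part = df.iloc[:n_train].copy()
test_part = df.iloc[n_train:].drop(columns="Disbursed")  # the test set has no label
print(test_part["ID"].tolist())  # ['d', 'e']
```

Uncommenting the `source` marker lines and splitting on that column would work equally well.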

GBM parameter tuning (scikit-learn GradientBoostingClassifier)

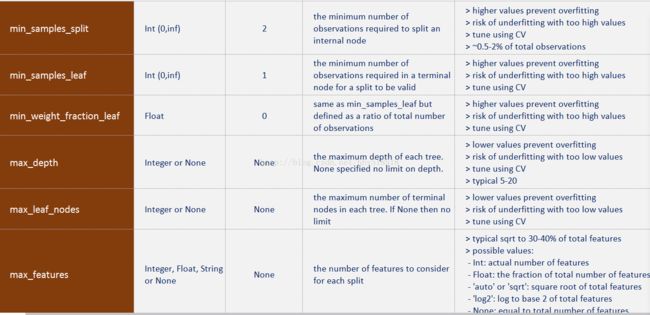

min_samples_split

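Defines the minimum number of samples a node must contain before it can be split.

It is used to control over-fitting. If the number of samples required for a split is too small, the model may only fit the particular training samples; requiring more samples avoids this problem.

If the value is set too high, however, the model may under-fit. The effect of each setting should therefore be checked with the cross-validation (CV) score.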
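The tuning code later in this post starts min_samples_split at roughly 0.5%-1% of the total number of samples. A small sketch of that arithmetic; the helper name and its default fractions are illustrative assumptions, not a scikit-learn feature:

```python
def min_samples_split_range(n_samples, low=0.005, high=0.01):
    """Rule-of-thumb starting range for min_samples_split: 0.5%-1% of the rows."""
    return round(n_samples * low), round(n_samples * high)


print(min_samples_split_range(87000))  # (435, 870)
```

With about 87,000 training rows this gives 435-870, which is why the first search below uses 500.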

min_samples_leaf

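Defines the minimum number of samples required in a terminal (leaf) node.

Like min_samples_split, it is used to control over-fitting.

For imbalanced class problems, this parameter should generally be set to a small value, because the minority class usually has few samples, so leaves dominated by it are small.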

min_weight_fraction_leaf

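Similar to min_samples_leaf above, except that it is a fraction rather than an absolute number: the minimum fraction of the total samples (strictly, of the total sample weight) required in a terminal node.

Only one of min_samples_leaf and min_weight_fraction_leaf needs to be defined.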
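Converting between the two forms is simple arithmetic. A sketch assuming unit sample weights and subsample=1.0 (with subsampling, the fraction applies to the weight of the sampled rows only); both helper names are hypothetical:

```python
import math


def leaf_fraction(min_samples_leaf, n_samples):
    """min_weight_fraction_leaf equivalent to an absolute leaf size (unit weights)."""
    return min_samples_leaf / n_samples


def leaf_count(min_weight_fraction_leaf, n_samples):
    """Smallest leaf size a given min_weight_fraction_leaf allows (unit weights)."""
    return math.ceil(min_weight_fraction_leaf * n_samples)


print(leaf_fraction(50, 87000))  # about 0.000575
print(leaf_count(0.001, 87000))  # 87
```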

max_depth

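Defines the maximum depth of a tree.

It also controls over-fitting, because the deeper a tree is, the more likely it is to over-fit.

It should also be tuned using the CV score.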

max_leaf_nodes

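Defines the maximum number of terminal nodes (leaves) in a tree.

It overlaps with max_depth above: a binary tree of depth n has at most 2^n terminal nodes.

Older scikit-learn documentation states that if max_leaf_nodes is defined, GBM ignores max_depth; check the documentation of the version you use.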
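The relation between depth and number of leaves, as a small sketch (helper names are hypothetical). The tuning code later estimates about 87000 / 500 = 174 possible splits, which needs a depth of about 8:

```python
import math


def max_leaves(depth):
    """Upper bound on the terminal nodes of a binary tree of the given depth."""
    return 2 ** depth


def min_depth_for(n_leaves):
    """Smallest depth whose binary tree can hold n_leaves terminal nodes."""
    return math.ceil(math.log2(n_leaves))


print(max_leaves(8))       # 256
print(min_depth_for(174))  # 8
```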

max_features

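Determines how many features are considered when searching for the best split; these features are chosen at random.

As a rule of thumb, the square root of the total number of features works well, but other values should still be tried, up to 30%-40% of the total number of features.

Using too many features per split can also lead to over-fitting.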
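The rule of thumb above turns directly into a search grid. A sketch, assuming a step of 2 and an upper bound of 40% (the helper name is hypothetical); for 49 features it reproduces the grid range(7, 20, 2) used later:

```python
import math


def max_features_grid(n_features, upper_frac=0.4, step=2):
    """Candidate max_features values from sqrt(n_features) up to upper_frac of the features."""
    start = int(math.sqrt(n_features))
    stop = int(n_features * upper_frac)
    return list(range(start, stop + 1, step))


print(max_features_grid(49))  # [7, 9, 11, 13, 15, 17, 19]
```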

learning_rate

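Determines the impact of each tree on the final outcome (step 2.4). GBM starts from an initial estimate, which is updated with the output of each tree; learning_rate controls the size of each update.

This value should generally be kept small: a lower learning rate makes the model robust to the specific characteristics of individual trees, so it can combine their results better. A lower learning rate does, however, need more trees.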
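In formula form (standard gradient boosting notation, not taken from this post): with $\nu$ = learning_rate, $h_m$ the $m$-th tree fitted to the negative gradient of the loss, $M$ = n_estimators, and $F_0$ the initial estimate (what `init` controls):

```latex
F_0(x) = \text{initial estimate}, \qquad
F_m(x) = F_{m-1}(x) + \nu \, h_m(x), \quad m = 1, \dots, M, \quad 0 < \nu \le 1
```

For binary classification with the default loss, $F$ is on the log-odds scale. Because every tree's contribution is scaled by $\nu$, a common heuristic (used later in this post) is to double $M$ when $\nu$ is halved.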

n_estimators

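Defines the number of sequential trees to be built (step 2).

Although GBM stays fairly robust with a large number of trees, it can still over-fit at some point, so n_estimators should also be tuned with CV for a given learning rate.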
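A cheap way to see how the score depends on the number of trees at a fixed learning rate is `staged_predict_proba`, which yields predictions after 1, 2, ..., n_estimators trees from a single fit. This is a swapped-in technique (one hold-out split rather than the k-fold GridSearchCV used in this post), shown on synthetic data:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic data stands in for the loan dataset
X, y = make_classification(n_samples=2000, n_features=20, random_state=0)
X_tr, X_val, y_tr, y_val = train_test_split(X, y, test_size=0.3, random_state=0)

clf = GradientBoostingClassifier(learning_rate=0.1, n_estimators=200, random_state=10)
clf.fit(X_tr, y_tr)

# Validation AUC after each additional tree, without refitting
val_auc = [roc_auc_score(y_val, proba[:, 1]) for proba in clf.staged_predict_proba(X_val)]
best_n = val_auc.index(max(val_auc)) + 1
print(best_n, round(max(val_auc), 4))
```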

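subsample

The fraction of the samples used to fit each individual tree; the samples are selected at random.

Values slightly less than 1 make the model more robust by reducing variance.

Typically ~0.8 works fine, though better results can be obtained by tuning it.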

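loss

The loss function to be optimized by the boosting process (each new tree is fitted to its negative gradient).

It can take different values for classification and regression. The default usually works; change it only if you understand the alternatives and their impact on the model.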

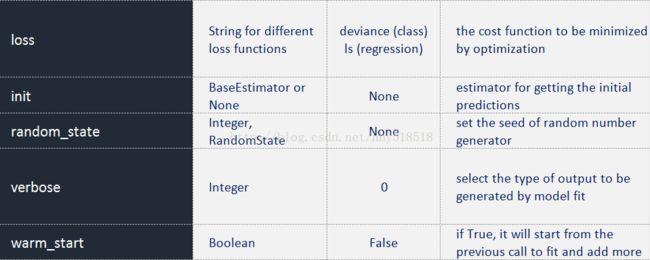

init

Affects the initialization of the output.

If we have another model whose outcome should serve as GBM's initial estimates, we can pass it via init.

random_state

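The seed used for random number generation.

Fixing the seed is important when tuning: if a different seed is used each time, the results differ even when the parameters do not change, which makes it hard to compare models.

The model can over-fit to a particular random sample. Building models with several different random samples reduces that risk, but it is computationally much more expensive, so it is rarely done.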
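A quick check of the reproducibility point, on synthetic data: with subsample < 1 and max_features='sqrt' the fit draws random numbers, yet two fits with the same random_state give identical predictions. The helper `fit_proba` is hypothetical:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier

X, y = make_classification(n_samples=500, n_features=10, random_state=0)


def fit_proba(seed):
    # subsample < 1 and max_features='sqrt' both use the random state
    clf = GradientBoostingClassifier(n_estimators=50, subsample=0.8,
                                     max_features="sqrt", random_state=seed)
    return clf.fit(X, y).predict_proba(X)[:, 1]


same = np.array_equal(fit_proba(10), fit_proba(10))
diff = np.array_equal(fit_proba(10), fit_proba(11))
print(same, diff)
```

With a different seed (11 instead of 10) the predictions will generally differ, which is exactly what makes unseeded comparisons between parameter settings unreliable.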

verbose

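Controls the output printed while the model is being fitted:

0: no output (default)

1: progress and performance printed once in a while (less often the more trees there are)

>1: progress and performance printed for every tree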

warm_start

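This parameter has an interesting effect, and using it well can save a lot of work.

It lets us fit additional trees on top of an already fitted model, which can save a great deal of time; it is worth exploring for advanced use.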
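A minimal sketch of the warm_start mechanism on synthetic data (the data and tree counts are arbitrary): raising n_estimators and calling fit again keeps the existing trees and fits only the new ones.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)

clf = GradientBoostingClassifier(learning_rate=0.1, n_estimators=50,
                                 warm_start=True, random_state=10)
clf.fit(X, y)                 # fits trees 1-50
for n in (100, 150):
    clf.set_params(n_estimators=n)
    clf.fit(X, y)             # keeps the existing trees, fits only the new ones
    print(n, clf.n_estimators_)
```

The same training data must be passed on every call, and n_estimators can only grow: lowering it below the number of trees already fitted raises an error while warm_start=True.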

presort

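Whether to presort the data so that splits are found faster.

By default this is chosen automatically ('auto'), but it can be changed. (presort was deprecated in scikit-learn 0.22 and removed in 0.24.)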

Below, the parameters are tuned one at a time:

train_x = train_df.values  # feature matrix
train_y = train_y.values   # target (Disbursed) as a NumPy array

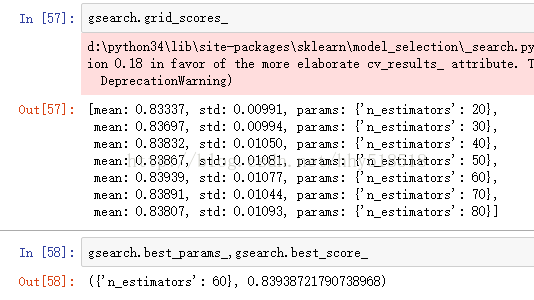

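from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import GridSearchCV

param_test1 = {'n_estimators': range(20, 81, 10)}
gsearch = GridSearchCV(estimator=GradientBoostingClassifier(learning_rate=0.1, min_samples_split=500, min_samples_leaf=50, max_depth=8,
                                                            max_features='sqrt', subsample=0.8, random_state=10),
                       param_grid=param_test1, scoring='roc_auc', n_jobs=4, cv=5)
gsearch.fit(train_x, train_y)
# grid_scores_ was removed in scikit-learn 0.20; per-candidate scores are in cv_results_
print(gsearch.cv_results_['mean_test_score'])
print(gsearch.best_params_, gsearch.best_score_)  # ({'n_estimators': 60}, 0.83938721790738968)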

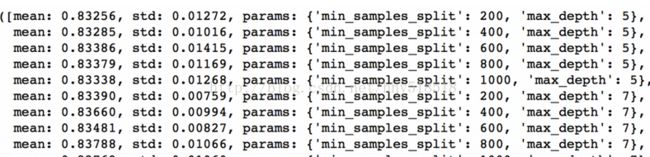

param_test2 = {'max_depth': range(5, 16, 2), 'min_samples_split': range(200, 1001, 200)}
# the iid= argument was removed in scikit-learn 0.24
gsearch2 = GridSearchCV(estimator=GradientBoostingClassifier(learning_rate=0.1, n_estimators=60, max_features='sqrt', subsample=0.8, random_state=10),
                        param_grid=param_test2, scoring='roc_auc', n_jobs=4, cv=5)
gsearch2.fit(train_x, train_y)
print(gsearch2.best_params_, gsearch2.best_score_)

Next, tune min_samples_leaf, testing the five values 30, 40, 50, 60 and 70.

# Best: max_depth=9, min_samples_split=1000
# min_samples_split hit the upper end of the grid, so the optimum may be even larger
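Spotting a best value that lands on the edge of its grid (as min_samples_split did here) can be automated. A small sketch; the helper name is hypothetical and the inputs are the grid and result reported above:

```python
def params_at_grid_edge(best_params, param_grid):
    """Names of tuned parameters whose best value is the smallest or largest in its grid."""
    edge = []
    for name, value in best_params.items():
        values = sorted(param_grid[name])
        if value in (values[0], values[-1]):
            edge.append(name)
    return edge


param_test2 = {'max_depth': range(5, 16, 2), 'min_samples_split': range(200, 1001, 200)}
best = {'max_depth': 9, 'min_samples_split': 1000}
print(params_at_grid_edge(best, param_test2))  # ['min_samples_split']
```

Any parameter it reports should get a wider search range, which is what param_test3 does by moving min_samples_split to 1000-2000.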

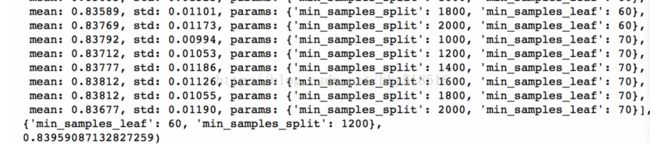

# Tune min_samples_split (on an extended range) together with the next parameter, min_samples_leaf
param_test3 = {'min_samples_split': range(1000, 2100, 200), 'min_samples_leaf': range(30, 71, 10)}
gsearch3 = GridSearchCV(
    estimator=GradientBoostingClassifier(learning_rate=0.1, n_estimators=60, max_depth=9, max_features='sqrt',
                                         subsample=0.8, random_state=10),
    param_grid=param_test3, scoring='roc_auc', n_jobs=4, cv=5)
gsearch3.fit(train_x, train_y)
print(gsearch3.best_params_, gsearch3.best_score_)

# max_features
param_test4 = {'max_features': range(7, 20, 2)}
gsearch4 = GridSearchCV(
    estimator=GradientBoostingClassifier(learning_rate=0.1, n_estimators=60, max_depth=9, min_samples_split=1200,
                                         min_samples_leaf=60, subsample=0.8, random_state=10),
    param_grid=param_test4, scoring='roc_auc', n_jobs=4, cv=5)
gsearch4.fit(train_x, train_y)
print(gsearch4.best_params_, gsearch4.best_score_)

# Next, tune subsample (the fraction of samples used for each tree), trying 0.6, 0.7, 0.75, 0.8, 0.85 and 0.9.

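# Final model: learning_rate halved to 0.05 and trees doubled to 120, with the tuned tree parameters and subsample=0.85
gbm_tuned_1 = GradientBoostingClassifier(learning_rate=0.05, n_estimators=120, max_depth=9, min_samples_split=1200,
                                         min_samples_leaf=60, subsample=0.85, random_state=10, max_features=7)
# modelfit(), train, target and IDcol are not defined in this post;
# the model_and_feature_Score() helper defined in the code below fits and reports the model instead
model_and_feature_Score(gbm_tuned_1, train_df, train_y, {})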

First, let's look at the parameter definitions:

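Having covered the tree parameters and the boosting parameters, there is a third category of parameters that affects the overall functioning of the model: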


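The tuning method is cross-validation: choose a scoring function via the scoring argument, and keep the parameter value that achieves the highest score.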

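Tuning order: first fix the boosting-specific parameters (learning_rate, n_estimators, subsample) and find n_estimators for the fixed learning rate, then tune the tree parameters, and finally tune subsample (starting from 0.8).

If the best n_estimators comes out as 20 (the lower end of the grid), we may need to lower learning_rate to 0.05 and run the search again.

Conversely, if it comes out too high, increase learning_rate.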

Tuning the tree parameters, in this order:

1. max_depth and min_samples_split

2. min_samples_leaf

3. max_features

max_depth and min_samples_split are tuned first.
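The staged order above can be written as a loop that runs one GridSearchCV per stage and freezes the winners before the next stage. A compact sketch on synthetic data; the grids are much smaller than the ones in this post and their values are arbitrary, chosen only to keep it fast:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic stand-in data
X, y = make_classification(n_samples=600, n_features=12, random_state=0)

params = dict(learning_rate=0.1, n_estimators=30, subsample=0.8, random_state=10)
stages = [
    {'n_estimators': [20, 40, 60]},                         # boosting: trees for fixed learning_rate
    {'max_depth': [3, 5], 'min_samples_split': [20, 60]},  # tree parameters, most important first
    {'min_samples_leaf': [5, 15]},
    {'max_features': [3, 6]},
    {'subsample': [0.7, 0.85]},                             # finally subsample
]

for grid in stages:
    search = GridSearchCV(GradientBoostingClassifier(**params), grid,
                          scoring='roc_auc', cv=3, n_jobs=1)
    search.fit(X, y)
    params.update(search.best_params_)  # freeze the winners before the next stage
    print(search.best_params_, round(search.best_score_, 4))

print(params)
```

Tuning stage by stage is a greedy shortcut: it is far cheaper than one joint grid, but it can miss interactions between parameters tuned in different stages.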

The CV score (AUC) has now reached 0.839.

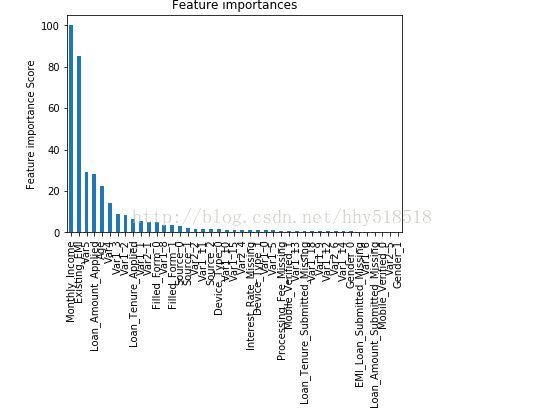

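Compared with the earlier baseline model, the current model uses more features. In the baseline, a few features had inflated importance scores and the distribution was clearly skewed; now the importance is spread much more evenly.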

Finally, tune max_features: sqrt(49) = 7, so we try values from 7 to 19.

After testing, the best subsample value is 0.85.

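Once all of the above has been tuned, every parameter is set. What remains is to lower the learning rate further and increase the number of trees accordingly. Note that the number of trees is changed passively, in proportion to the learning rate, so it may not be optimal, but it is a reasonable choice. As the number of trees grows, finding the optimal value and computing the CV score also become more expensive.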

The procedure below for tuning learning_rate and the number of trees follows the approach in http://blog.csdn.net/han_xiaoyang/article/details/52663170

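1. First halve the learning rate to 0.05 and correspondingly double the number of trees to 120.

2. Next, reduce the learning rate to one tenth of the original value, 0.01, with 600 trees.

3. Then reduce it to one twentieth, 0.005, with 1200 trees; at this point the score did not improve.

4. Since the leaderboard score dropped slightly, we stop lowering the learning rate and only increase the number of trees, trying 1500.

The leaderboard score rose from 0.844 to 0.849, which is a substantial improvement.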
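Steps 1-3 keep learning_rate × n_estimators constant at 0.1 × 60 = 6. A small sketch that generates that schedule (the helper name is hypothetical); step 4 then departs from it by adding trees only:

```python
def lr_tree_schedule(base_lr, base_trees, factors):
    """Divide the learning rate by each factor and multiply the tree count by it."""
    return [(base_lr / k, base_trees * k) for k in factors]


for lr, n in lr_tree_schedule(0.1, 60, [2, 10, 20]):
    print(f"learning_rate={lr:g}, n_estimators={n}")
# learning_rate=0.05, n_estimators=120
# learning_rate=0.01, n_estimators=600
# learning_rate=0.005, n_estimators=1200
```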

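Another trick is the warm_start option: with it, trying a different number of trees does not require starting from scratch each time.

That is the basic exploration process. The code is as follows: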

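# -*- coding: utf-8 -*-
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from sklearn import metrics
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import GridSearchCV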

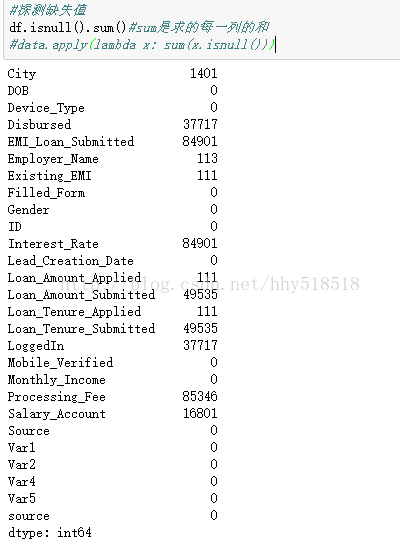

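# Check missing values: data.apply(lambda x: sum(x.isnull()))
# Check how many distinct values each column has
# Too many missing values (1401), so City is dropped directly
def processCity(df):
    df.drop('City', axis=1, inplace=True)
    return df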

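# Compute the age from the exact date of birth, then drop DOB
def processDOB(df):
    # Assumes DOB ends in a two-digit 19xx year (e.g. '23-May-78'):
    # 117 - yy = 2017 - (1900 + yy), i.e. the age in 2017
    df['Age'] = df['DOB'].map(lambda x: 117 - int(x[-2:]))
    df.drop('DOB', axis=1, inplace=True)
    return df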

# EMI_Loan_Submitted: a box plot shows many outliers and many missing values
# df['EMI_Loan_Submitted'].plot(kind='box')
def processEMI_load(df):
    # Test for missing values with pd.isnull
    df['EMI_Loan_Submitted_Missing'] = df['EMI_Loan_Submitted'].map(lambda x: 1 if pd.isnull(x) else 0)
    df.drop('EMI_Loan_Submitted', axis=1, inplace=True)
    return df

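# Number of distinct employer names: len(df['Employer_Name'].value_counts())
def proceeEmploye_Name(df):
    # Replace the high-cardinality name with the length of its string form
    # (a missing name becomes the string 'nan', length 3)
    df['Employer_Len'] = df['Employer_Name'].map(lambda x: len(str(x)))
    df.drop('Employer_Name', axis=1, inplace=True)
    return df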

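# Existing_EMI
def processExisting_EMI(df):
    # Mean imputation (not used):
    # means = df['Existing_EMI'].dropna().mean()
    # A missing value most likely means there is no existing EMI, so 0 is the better fill value.
    # Assigning the result avoids chained inplace fillna, which newer pandas versions warn about.
    df['Existing_EMI'] = df['Existing_EMI'].fillna(0)
    return df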

# Interest_Rate has too many missing values (84,000+) to impute,
# so only record whether a value is present
def processInterest_Rate(df):
    df['Interest_Rate_Missing'] = df['Interest_Rate'].map(lambda x: 1 if pd.isnull(x) else 0)
    df.drop('Interest_Rate', axis=1, inplace=True)
    return df

# Lead_Creation_Date
def processLead_Creation_Date(df):
    df.drop('Lead_Creation_Date', axis=1, inplace=True)
    return df

# Loan_Amount_Applied has few missing values,
# but its submitted counterpart has too many
def processLoan_Amount_Tenure_Applied(df):
    # Fill the applied amount and tenure with their medians
    df['Loan_Amount_Applied'] = df['Loan_Amount_Applied'].fillna(df['Loan_Amount_Applied'].median())
    df['Loan_Tenure_Applied'] = df['Loan_Tenure_Applied'].fillna(df['Loan_Tenure_Applied'].median())
    # Keep only a missing-value flag for the submitted amount and tenure
    df['Loan_Amount_Submitted_Missing'] = df['Loan_Amount_Submitted'].apply(lambda x: 1 if pd.isnull(x) else 0)
    df['Loan_Tenure_Submitted_Missing'] = df['Loan_Tenure_Submitted'].apply(lambda x: 1 if pd.isnull(x) else 0)
    df.drop(['Loan_Amount_Submitted', 'Loan_Tenure_Submitted'], axis=1, inplace=True)
    return df

def processLoggedln(df):
    df.drop('LoggedIn', axis=1, inplace=True)
    return df


def processSalary_account(df):
    # df['Salary_Account_id'] = pd.factorize(df['Salary_Account'])[0] + 1
    df.drop('Salary_Account', axis=1, inplace=True)
    return df

def processFee(df):
    df['Processing_Fee_Missing'] = df['Processing_Fee'].apply(lambda x: 1 if pd.isnull(x) else 0)
    # Drop the original column
    df.drop('Processing_Fee', axis=1, inplace=True)
    return df


def processSource(df):
    # Keep S122 and S133 and merge every other source into 'other'
    df['Source'] = df['Source'].map(lambda x: 'other' if x not in ['S122', 'S133'] else x)
    return df

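# Encode categorical attributes as integers
def processEncoder(df):
    var_to_encode = ['Device_Type', 'Filled_Form', 'Gender', 'Var1', 'Var2', 'Mobile_Verified', 'Source']
    for col in var_to_encode:
        df[col] = pd.factorize(df[col])[0] + 1
    # Then one-hot encode them. get_dummies already returns all other columns unchanged,
    # so concatenating its result with df would duplicate every column.
    df = pd.get_dummies(df, columns=var_to_encode)
    return df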

def dataprocess():
    # Load the data
    train_df = pd.read_csv('Train.csv', encoding="ISO-8859-1")
    test_df = pd.read_csv('Test.csv', encoding="ISO-8859-1")
    # train_df['source'] = 'train'
    # test_df['source'] = 'test'
    n_train = len(train_df)  # remember where the training rows end
    df = pd.concat([train_df, test_df], ignore_index=True)
    df = processCity(df)
    df = processDOB(df)
    df = processEMI_load(df)
    df = processExisting_EMI(df)
    df = proceeEmploye_Name(df)
    df = processFee(df)
    df = processInterest_Rate(df)
    df = processLead_Creation_Date(df)
    df = processLoan_Amount_Tenure_Applied(df)
    df = processLoggedln(df)
    df = processSalary_account(df)
    df = processSource(df)
    df = processEncoder(df)
    # Split back by position: after concat(..., ignore_index=True) the original
    # train_df.index / test_df.index no longer identify the rows of df
    train_df = df.iloc[:n_train].copy()
    test_df = df.iloc[n_train:].copy()
    # train_df.drop('source', axis=1, inplace=True)
    # test_df.drop(['source', 'Disbursed'], axis=1, inplace=True)
    test_df.drop('Disbursed', axis=1, inplace=True)  # the test set has no label
    train_df.to_csv('train_modified.csv', encoding='utf-8', index=False)
    test_df.to_csv('test_modified.csv', encoding='utf-8', index=False)


def turn_parameters(train_df, train_y):

    # Start with min_samples_split at about 0.5%-1% of all samples: with ~87,000 rows, below ~870
    min_samples_split = 500
    # A fairly small minimum leaf size: a split that would leave fewer samples in a leaf
    # is not made, which guards against over-fitting
    min_samples_leaf = 50
    # Maximum tree depth, also guards against over-fitting.
    # Rough estimate: 87000 / 500 = 174 possible splits, so a depth of 5-8 (depth 8 allows 256 leaves)
    max_depth = 8  # 5-8, since there are 49 columns
    max_features = 'sqrt'
    subsample = 0.8
    X = train_df.values
    y = np.asarray(train_y)  # keep the caller's train_y intact for model_and_feature_Score

    # Step 1: n_estimators at learning_rate=0.1
    param_test1 = {'n_estimators': range(20, 81, 10)}
    gsearch = GridSearchCV(
        estimator=GradientBoostingClassifier(learning_rate=0.1, min_samples_split=min_samples_split,
                                             min_samples_leaf=min_samples_leaf, max_depth=max_depth,
                                             max_features=max_features, subsample=subsample, random_state=10),
        param_grid=param_test1, scoring='roc_auc', n_jobs=4, cv=5)
    gsearch.fit(X, y)
    print(gsearch.cv_results_['mean_test_score'])
    print(gsearch.best_params_, gsearch.best_score_)  # ({'n_estimators': 60}, 0.83938721790738968)
    # If the best value is 20, lower learning_rate to 0.05 and search again.
    # If it is too high (e.g. 100), tuning the other parameters takes a long time, so raise learning_rate slightly.

    # Step 2: tree parameters, starting with max_depth and min_samples_split
    param_test2 = {'max_depth': range(5, 16, 2), 'min_samples_split': range(200, 1001, 200)}
    gsearch2 = GridSearchCV(
        estimator=GradientBoostingClassifier(learning_rate=0.1, n_estimators=60, max_features='sqrt',
                                             subsample=0.8, random_state=10),
        param_grid=param_test2, scoring='roc_auc', n_jobs=4, cv=5)
    gsearch2.fit(X, y)
    print(gsearch2.best_params_, gsearch2.best_score_)
    # max_depth: 9, min_samples_split: 1000
    # min_samples_split hit the top of the grid, so the optimum may be even larger

    # Step 3: min_samples_split (extended range) together with min_samples_leaf
    param_test3 = {'min_samples_split': range(1000, 2100, 200), 'min_samples_leaf': range(30, 71, 10)}
    gsearch3 = GridSearchCV(
        estimator=GradientBoostingClassifier(learning_rate=0.1, n_estimators=60, max_depth=9, max_features='sqrt',
                                             subsample=0.8, random_state=10),
        param_grid=param_test3, scoring='roc_auc', n_jobs=4, cv=5)
    gsearch3.fit(X, y)
    print(gsearch3.best_params_, gsearch3.best_score_)

    # Fit with the best tree parameters so far and inspect the feature importances
    est = GradientBoostingClassifier(learning_rate=0.1, subsample=0.8, random_state=10, n_estimators=60)
    best_tree_params = {'max_depth': 9, 'min_samples_split': 1200, 'min_samples_leaf': 60}
    model_and_feature_Score(est, train_df, train_y, best_tree_params)

    # Step 4: max_features
    param_test4 = {'max_features': range(7, 20, 2)}
    gsearch4 = GridSearchCV(
        estimator=GradientBoostingClassifier(learning_rate=0.1, n_estimators=60, max_depth=9, min_samples_split=1200,
                                             min_samples_leaf=60, subsample=0.8, random_state=10),
        param_grid=param_test4, scoring='roc_auc', n_jobs=4, cv=5)
    gsearch4.fit(X, y)
    print(gsearch4.best_params_, gsearch4.best_score_)
    # Best parameters: min_samples_split=1200, min_samples_leaf=60, max_depth=9, max_features=7

    # Step 5: subsample
    param_test5 = {'subsample': [0.6, 0.7, 0.75, 0.8, 0.85, 0.9]}
    gsearch5 = GridSearchCV(
        estimator=GradientBoostingClassifier(learning_rate=0.1, n_estimators=60, max_depth=9, min_samples_split=1200,
                                             min_samples_leaf=60, subsample=0.8, random_state=10, max_features=7),
        param_grid=param_test5, scoring='roc_auc', n_jobs=4, cv=5)
    gsearch5.fit(X, y)
    print(gsearch5.best_params_, gsearch5.best_score_)

def model_and_feature_Score(clf, train_df, train_y, params, showFeatureImortance=True, cv_folds=5):
    feature_list = train_df.columns.values
    train_x = train_df.values
    # Works for a pandas Series or a NumPy array (Series.as_matrix() was removed in pandas 1.0)
    train_y = np.asarray(train_y)
    clf.set_params(**params)
    clf.fit(train_x, train_y)
    # Predictions on the training set
    train_predictions = clf.predict(train_x)  # predicted labels
    # For classification: column 0 is P(class 0), column 1 is P(class 1)
    train_predprob = clf.predict_proba(train_x)[:, 1]
    # Cross-validation score (disabled)
    # if showCV:
    #     cv_score = cross_val_score(clf, train_x, train_y, cv=cv_folds, scoring='roc_auc')
    # cv_score = cross_val_score(clf, train_x, train_y, cv=cv_folds, scoring='roc_auc')
    print("\nModel Report")
    print("Accuracy: %.4g" % metrics.accuracy_score(train_y, train_predictions))
    print("AUC Score (Train): %f" % metrics.roc_auc_score(train_y, train_predprob))
    # print("CV Score : Mean - %.7g | Std - %.7g | Min - %.7g | Max - %.7g" % (np.mean(cv_score), np.std(cv_score), np.min(cv_score), np.max(cv_score)))
    fi_threshold = 18  # only plot features whose relative importance exceeds 18 (out of 100)
    if showFeatureImortance:
        feature_importance = clf.feature_importances_
        # Rescale so that the most important feature scores 100
        feature_importance = (feature_importance / feature_importance.max()) * 100.0
        import_index = np.where(feature_importance > fi_threshold)[0]
        feat_imp = pd.Series(feature_importance[import_index], feature_list[import_index]).sort_values(ascending=False)
        feat_imp.plot(kind='bar', title='Feature importances')
        plt.ylabel('Feature importance Score')
        plt.show()