Building an MLP with PyTorch to Recognize MNIST Handwritten Digits

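Basic workflow

1. Load the dataset

2. Preprocess the data (standardize it and convert it to tensors)

3. Check whether others have already tackled the problem and which model architectures they used, then define the model

4. Choose the loss function and the optimizer, then train the model

5. Test the model on data it has never seen

This article was implemented on Google Colab.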

from torchvision import datasets
import torchvision.transforms as transforms
import torch

# Number of subprocesses for data loading; 0 loads in the main process
num_workers = 0
# Number of images read per batch
batch_size = 20
# Convert images to tensors
transform = transforms.ToTensor()

# Download the data
train_data = datasets.MNIST(root='./drive/data', train=True,
                            download=True, transform=transform)
# train=False selects the 10,000-image held-out test split
test_data = datasets.MNIST(root='./drive/data', train=False,
                           download=True, transform=transform)

# Create the data loaders
train_loader = torch.utils.data.DataLoader(train_data, batch_size=batch_size,
                                           num_workers=num_workers)
test_loader = torch.utils.data.DataLoader(test_data, batch_size=batch_size,
                                          num_workers=num_workers)
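The workflow lists standardization, while the loading code applies only transforms.ToTensor(), which rescales 8-bit pixel values from [0, 255] to [0.0, 1.0]. The plain-Python sketch below shows that arithmetic, plus the optional shift-and-scale step that a transform such as transforms.Normalize would add. The helper names are made up for illustration, and the mean 0.1307 and standard deviation 0.3081 are commonly quoted MNIST statistics that should be treated as assumptions, not values taken from this article.

```python
import math

# Assumed MNIST statistics (commonly quoted values, not computed here)
MNIST_MEAN = 0.1307
MNIST_STD = 0.3081

def to_unit_range(pixel):
    """Map an 8-bit pixel value (0..255) to [0.0, 1.0], as ToTensor does."""
    return pixel / 255.0

def standardize(value, mean=MNIST_MEAN, std=MNIST_STD):
    """Shift and scale a [0, 1] value: (value - mean) / std."""
    return (value - mean) / std

# Black, mid-gray and white pixels before and after standardization
for pixel in (0, 128, 255):
    scaled = to_unit_range(pixel)
    print(pixel, round(scaled, 4), round(standardize(scaled), 4))

# 60,000 training images read 20 at a time
batches_per_epoch = math.ceil(60000 / 20)
print(batches_per_epoch)
```

With batch_size = 20, one pass over the 60,000 training images takes 3000 batches.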

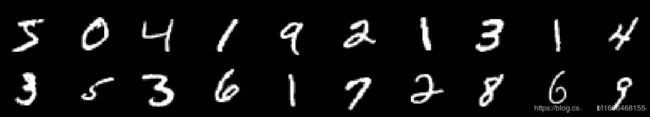

The visualization step below is optional.

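# Visualization
import numpy as np
import matplotlib.pyplot as plt
%matplotlib inline

# Fetch one batch of training images
dataiter = iter(train_loader)
images, labels = next(dataiter)
images = images.numpy()

# Plot the batch in a 2 x 10 grid, with the true label as each title
fig = plt.figure(figsize=(25, 4))
for idx in np.arange(20):  # each batch holds 20 images, so plot 20
    # add_subplot needs integer grid sizes, hence // rather than /
    ax = fig.add_subplot(2, 20 // 2, idx + 1, xticks=[], yticks=[])
    ax.imshow(np.squeeze(images[idx]), cmap='gray')
    ax.set_title(str(labels[idx].item()))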

Next, we define the model.

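# Define the MLP model
import torch.nn as nn
import torch.nn.functional as F

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Two fully connected hidden layers and one output layer.
        # Each 28*28 image is flattened into a 784-element vector, and the
        # output layer has 10 units, one per digit class.
        # The outputs are raw scores (logits); nn.CrossEntropyLoss applies
        # log-softmax internally, and the highest-scoring class is the prediction.
        hidden_1 = 512
        hidden_2 = 512
        self.fc1 = nn.Linear(28 * 28, hidden_1)
        self.fc2 = nn.Linear(hidden_1, hidden_2)
        self.fc3 = nn.Linear(hidden_2, 10)
        # Use dropout to reduce overfitting
        self.dropout = nn.Dropout(0.2)

    def forward(self, x):
        # Flatten each image into a vector
        x = x.view(-1, 28 * 28)
        x = F.relu(self.fc1(x))
        x = self.dropout(x)
        x = F.relu(self.fc2(x))
        x = self.dropout(x)
        x = self.fc3(x)
        # x = F.log_softmax(x, dim=1)
        return x

model = Net()
# Print the model to check the architecture
print(model)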
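As a quick sanity check on the architecture, the number of trainable parameters can be worked out by hand: a fully connected layer has one weight per input-output pair plus one bias per output. The sketch below does this arithmetic in plain Python; the helper name linear_params is made up for illustration.

```python
def linear_params(in_features, out_features):
    """Weights plus biases of one fully connected layer."""
    return in_features * out_features + out_features

# (inputs, outputs) of fc1, fc2 and fc3 in Net
layer_sizes = [(28 * 28, 512), (512, 512), (512, 10)]
per_layer = [linear_params(i, o) for i, o in layer_sizes]
total_params = sum(per_layer)
print(per_layer, total_params)  # [401920, 262656, 5130] 669706
```

The dropout layer has no parameters, so sum(p.numel() for p in model.parameters()) should report the same total for the model above.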

# Define the loss function and the optimizer
# criterion = nn.NLLLoss()
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(params=model.parameters(), lr=0.01)
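nn.CrossEntropyLoss expects raw scores (logits) and applies log-softmax itself, which is why forward returns the output of fc3 directly and why the log_softmax and NLLLoss lines are commented out. For a single sample the loss is the log-sum-exp of the logits minus the logit of the true class. The plain-Python sketch below works through that formula; the function name and the example logits are illustrative only.

```python
import math

def cross_entropy(logits, target):
    """Cross-entropy of one sample from raw scores: logsumexp(logits) - logits[target]."""
    peak = max(logits)  # subtract the maximum for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum_exp - logits[target]

# No preference among the 10 classes: the loss equals ln(10)
uniform_loss = cross_entropy([0.0] * 10, target=3)
# Strong preference for the true class: the loss is close to 0
confident_loss = cross_entropy([0.0] * 9 + [10.0], target=9)
print(round(uniform_loss, 4), round(confident_loss, 4))
```

An untrained 10-class model should therefore start with a loss near ln(10), roughly 2.30, which is a handy reference point when reading the first epochs of the training log.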

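# Training
n_epochs = 50
model.train()  # training mode: dropout is active
for epoch in range(n_epochs):
    train_loss = 0.0
    for data, target in train_loader:
        optimizer.zero_grad()             # clear gradients from the previous step
        output = model(data)              # forward pass: compute predictions
        loss = criterion(output, target)  # mean loss over the batch
        loss.backward()                   # backward pass: compute gradients
        optimizer.step()                  # update the parameters
        train_loss += loss.item() * data.size(0)
    # Average loss per sample over the epoch
    train_loss = train_loss / len(train_loader.dataset)
    print('Epoch: {} \tTraining Loss: {:.6f}'.format(epoch + 1, train_loss))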
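The loop multiplies loss.item() by data.size(0) because the criterion returns the mean loss over a batch; weighting each batch mean by its size and dividing by the number of samples gives the true per-sample average, even when the last batch is smaller. A plain-Python sketch with made-up numbers (the function name is illustrative):

```python
def dataset_mean_loss(batch_means, batch_sizes):
    """Combine per-batch mean losses into the mean loss per sample."""
    weighted_sum = sum(m * n for m, n in zip(batch_means, batch_sizes))
    return weighted_sum / sum(batch_sizes)

# Illustrative numbers: two full batches of 20 and a short final batch of 5
batch_means = [0.50, 0.30, 1.00]
batch_sizes = [20, 20, 5]
weighted = dataset_mean_loss(batch_means, batch_sizes)  # (10 + 6 + 5) / 45
unweighted = sum(batch_means) / len(batch_means)        # over-weights the short batch
print(round(weighted, 4), round(unweighted, 4))
```

With 60,000 images and a batch size of 20 every batch is full, so both averages agree here; the weighting matters once the dataset size is not a multiple of the batch size.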

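Now for testing: we run the trained model on the test data loaded earlier, count the correct predictions, and compute the accuracy for each digit as well as the overall accuracy.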

# Initialize accumulators for the test loss and per-class accuracy
test_loss = 0.0
class_correct = [0.0] * 10
class_total = [0.0] * 10

model.eval()  # evaluation mode: dropout is disabled
with torch.no_grad():  # no gradients are needed for evaluation
    for data, target in test_loader:
        # Forward pass: compute predicted outputs by passing inputs to the model
        output = model(data)
        # Calculate the loss
        loss = criterion(output, target)
        # Update the test loss
        test_loss += loss.item() * data.size(0)
        # Convert output scores to the predicted class
        _, pred = torch.max(output, 1)
        # Compare predictions to the true labels
        correct = pred.eq(target)
        # Tally per-class results; len(target) also handles a short final batch
        for i in range(len(target)):
            label = target[i].item()
            class_correct[label] += correct[i].item()
            class_total[label] += 1

# Calculate and print the average test loss
test_loss = test_loss / len(test_loader.dataset)
print('Test Loss: {:.6f}\n'.format(test_loss))

for i in range(10):
    if class_total[i] > 0:
        print('Test Accuracy of %5s: %2d%% (%2d/%2d)' % (
            str(i), 100 * class_correct[i] / class_total[i],
            class_correct[i], class_total[i]))
    else:
        print('Test Accuracy of %5s: N/A (no test examples)' % str(i))

print('\nTest Accuracy (Overall): %2d%% (%2d/%2d)' % (
    100. * sum(class_correct) / sum(class_total),
    sum(class_correct), sum(class_total)))
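The per-class tally in the test loop can be isolated as a small plain-Python sketch, which makes the bookkeeping easier to check by hand. The function name and the sample predictions are made up for illustration and are not results from the model.

```python
def per_class_accuracy(predictions, targets, num_classes=10):
    """Return (correct, total) counts per class."""
    correct = [0] * num_classes
    total = [0] * num_classes
    for pred, label in zip(predictions, targets):
        total[label] += 1
        if pred == label:
            correct[label] += 1
    return correct, total

# Made-up labels and predictions for a 3-class toy problem
targets     = [0, 0, 1, 1, 1, 2]
predictions = [0, 1, 1, 1, 0, 2]
correct, total = per_class_accuracy(predictions, targets, num_classes=3)
overall = sum(correct) / sum(total)
print(correct, total, round(overall, 4))  # [1, 2, 1] [2, 3, 1] 0.6667
```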

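Here are the results of my run. The per-class counts add up to 60000, so this run loaded its test data with train = True and was scored on the 60,000 training images rather than on unseen data, which flatters the accuracy.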

Out:

Test Loss: 0.003499

Test Accuracy of 0: 100% (5923/5923)

Test Accuracy of 1: 99% (6740/6742)

Test Accuracy of 2: 99% (5955/5958)

Test Accuracy of 3: 99% (6125/6131)

Test Accuracy of 4: 99% (5841/5842)

Test Accuracy of 5: 100% (5421/5421)

Test Accuracy of 6: 100% (5918/5918)

Test Accuracy of 7: 99% (6264/6265)

Test Accuracy of 8: 99% (5850/5851)

Test Accuracy of 9: 99% (5947/5949)

Test Accuracy (Overall): 99% (59984/60000)

Guarding against overfitting

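Another way to guard against overfitting is to train the model on the training set, check the current model on a validation set, and keep the test set for the final test. If validation performance was improving and then starts to get worse, the model has probably begun to overfit.

The check has to use a separate validation set rather than the test set because model selection is biased toward whichever data it is checked on: the models and hyperparameters that happen to do well on that data are the ones that get kept. If the test set were used for these checks, the final test result would be optimistically biased and would no longer reflect how well the model generalizes.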

Here is the code with a validation set added:

from torchvision import datasets
import torchvision.transforms as transforms
import torch
import numpy as np
# Sampler used to split the dataset
from torch.utils.data.sampler import SubsetRandomSampler

num_workers = 0
batch_size = 20
# Fraction of the training data held out as a validation set for detecting overfitting
valid_size = 0.2
transform = transforms.ToTensor()

train_data = datasets.MNIST(root='./drive/data', train=True,
                            download=True, transform=transform)
# train=False selects the 10,000-image held-out test split
test_data = datasets.MNIST(root='./drive/data', train=False,
                           download=True, transform=transform)

# Shuffle the training indices and split off the validation portion
num_train = len(train_data)
indices = list(range(num_train))
np.random.shuffle(indices)
split = int(np.floor(valid_size * num_train))
train_idx, valid_idx = indices[split:], indices[:split]

# Each sampler draws only from its own subset of indices
train_sampler = SubsetRandomSampler(train_idx)
valid_sampler = SubsetRandomSampler(valid_idx)

train_loader = torch.utils.data.DataLoader(train_data, batch_size=batch_size,
                                           sampler=train_sampler, num_workers=num_workers)
valid_loader = torch.utils.data.DataLoader(train_data, batch_size=batch_size,
                                           sampler=valid_sampler, num_workers=num_workers)
test_loader = torch.utils.data.DataLoader(test_data, batch_size=batch_size,
                                          num_workers=num_workers)
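The index split above can be checked in isolation. The plain-Python sketch below mirrors the shuffle-and-slice logic with the standard library instead of NumPy; the function name and the seed parameter are assumptions added for reproducibility and are not part of the original code.

```python
import random

def split_indices(num_samples, valid_fraction, seed=0):
    """Shuffle 0..num_samples-1 and split off the first valid_fraction as validation."""
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)
    split = int(valid_fraction * num_samples)
    return indices[split:], indices[:split]

train_idx, valid_idx = split_indices(60000, 0.2)
print(len(train_idx), len(valid_idx))  # 48000 12000
```

With valid_size = 0.2, the 60,000 training images are divided into 48,000 for training and 12,000 for validation, and the two index lists never overlap.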

# Define the MLP model
import torch.nn as nn
import torch.nn.functional as F

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        hidden_1 = 512
        hidden_2 = 512
        self.fc1 = nn.Linear(28 * 28, hidden_1)
        self.fc2 = nn.Linear(hidden_1, hidden_2)
        self.fc3 = nn.Linear(hidden_2, 10)
        self.dropout = nn.Dropout(0.2)

    def forward(self, x):
        x = x.view(-1, 28 * 28)
        x = F.relu(self.fc1(x))
        x = self.dropout(x)
        x = F.relu(self.fc2(x))
        x = self.dropout(x)
        x = self.fc3(x)
        # x = F.log_softmax(x, dim=1)
        return x

model = Net()
print(model)
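The nn.Dropout(0.2) layer in Net behaves differently in training and evaluation mode: during training it zeroes each activation with probability 0.2 and scales the survivors by 1 / (1 - 0.2), and in evaluation mode it passes values through unchanged. That is why model.eval() is called before testing. The plain-Python sketch below imitates this behavior; it is an illustration of the idea with a made-up function, not PyTorch's implementation.

```python
import random

def dropout(values, p=0.2, training=True, rng=None):
    """Inverted dropout: zero each value with probability p, scale survivors by 1 / (1 - p)."""
    if not training:
        return list(values)  # evaluation mode: identity
    rng = rng or random.Random()
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else v * scale for v in values]

activations = [1.0] * 10000
train_out = dropout(activations, p=0.2, training=True, rng=random.Random(0))
eval_out = dropout(activations, p=0.2, training=False)

# Roughly 80% of the values survive, and the mean stays close to the input mean
kept_fraction = sum(1 for v in train_out if v != 0.0) / len(train_out)
print(round(kept_fraction, 3), round(sum(train_out) / len(train_out), 3))
```

The rescaling keeps the expected value of each activation the same in both modes, so the layers after dropout see inputs on a similar scale during training and testing.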

# Define the loss function and the optimizer
# criterion = nn.NLLLoss()
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(params=model.parameters(), lr=0.01)

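If the training loss keeps decreasing while the validation loss starts to rise, the model is probably overfitting.

Whenever the validation loss reaches a new minimum, the model is saved for later use.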

n_epochs = 50
# Lowest validation loss seen so far
valid_loss_min = float('inf')

for epoch in range(n_epochs):
    train_loss = 0.0
    valid_loss = 0.0

    model.train()  # training mode: dropout is active
    for data, target in train_loader:
        optimizer.zero_grad()
        output = model(data)  # forward pass: compute predictions
        loss = criterion(output, target)
        loss.backward()
        optimizer.step()
        train_loss += loss.item() * data.size(0)

    # Compute the validation loss; no backpropagation is needed here
    model.eval()  # evaluation mode: dropout is disabled
    with torch.no_grad():
        for data, target in valid_loader:
            output = model(data)
            loss = criterion(output, target)
            valid_loss += loss.item() * data.size(0)

    # Average per sample; each sampler covers only its own subset of train_data
    train_loss = train_loss / len(train_loader.sampler)
    valid_loss = valid_loss / len(valid_loader.sampler)

    print('Epoch: {} \tTraining Loss: {:.6f} \tValidation Loss: {:.6f}'.format(
        epoch + 1, train_loss, valid_loss))

    # Save the model whenever the validation loss reaches a new minimum
    if valid_loss <= valid_loss_min:
        print('Validation loss decreased ({:.6f} --> {:.6f}). Saving model...'.format(
            valid_loss_min, valid_loss))
        torch.save(model.state_dict(), 'model.pt')
        valid_loss_min = valid_loss
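The checkpointing rule (valid_loss <= valid_loss_min) can be traced on a list of numbers without training anything. The sketch below uses made-up validation losses that improve at first and then rise, as they would once overfitting sets in; the function name is illustrative.

```python
def best_checkpoint_epochs(valid_losses):
    """Return the 1-based epochs at which a new lowest validation loss is reached."""
    best = float("inf")
    saved_at = []
    for epoch, loss in enumerate(valid_losses, start=1):
        if loss <= best:
            best = loss
            saved_at.append(epoch)
    return saved_at, best

# Made-up validation losses: improving, then rising again
valid_losses = [0.40, 0.25, 0.18, 0.15, 0.16, 0.19, 0.23]
saved_at, best = best_checkpoint_epochs(valid_losses)
print(saved_at, best)  # [1, 2, 3, 4] 0.15
```

In this toy run the file would be overwritten after epochs 1 to 4 and left alone afterwards, so model.pt ends up holding the weights from the epoch with the lowest validation loss.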

This step loads the model saved earlier.

# Restore the weights with the lowest validation loss
model.load_state_dict(torch.load('model.pt'))

# Initialize accumulators for the test loss and per-class accuracy
test_loss = 0.0
class_correct = [0.0] * 10
class_total = [0.0] * 10

model.eval()  # evaluation mode: dropout is disabled
with torch.no_grad():  # no gradients are needed for evaluation
    for data, target in test_loader:
        # Forward pass: compute predicted outputs by passing inputs to the model
        output = model(data)
        # Calculate the loss
        loss = criterion(output, target)
        # Update the test loss
        test_loss += loss.item() * data.size(0)
        # Convert output scores to the predicted class
        _, pred = torch.max(output, 1)
        # Compare predictions to the true labels
        correct = pred.eq(target)
        # Tally per-class results; len(target) also handles a short final batch
        for i in range(len(target)):
            label = target[i].item()
            class_correct[label] += correct[i].item()
            class_total[label] += 1

# Calculate and print the average test loss
test_loss = test_loss / len(test_loader.dataset)
print('Test Loss: {:.6f}\n'.format(test_loss))

for i in range(10):
    if class_total[i] > 0:
        print('Test Accuracy of %5s: %2d%% (%2d/%2d)' % (
            str(i), 100 * class_correct[i] / class_total[i],
            class_correct[i], class_total[i]))
    else:
        print('Test Accuracy of %5s: N/A (no test examples)' % str(i))

print('\nTest Accuracy (Overall): %2d%% (%2d/%2d)' % (
    100. * sum(class_correct) / sum(class_total),
    sum(class_correct), sum(class_total)))

Out:

Test Loss: 0.019485

Test Accuracy of 0: 99% (5914/5923)

Test Accuracy of 1: 99% (6716/6742)

Test Accuracy of 2: 99% (5929/5958)

Test Accuracy of 3: 99% (6086/6131)

Test Accuracy of 4: 99% (5822/5842)

Test Accuracy of 5: 99% (5393/5421)

Test Accuracy of 6: 99% (5902/5918)

Test Accuracy of 7: 99% (6231/6265)

Test Accuracy of 8: 99% (5822/5851)

Test Accuracy of 9: 99% (5914/5949)

Test Accuracy (Overall): 99% (59729/60000)

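The result here is actually slightly worse than that of the earlier model (59729 versus 59984 correct out of 60000). That is not a concern: the earlier model already used dropout to guard against overfitting, and this task has few features and a fairly simple model. Both sets of numbers were also measured on the 60,000 training images, of which this second model trained on only 80%, so a small drop is expected. Once a problem has many more features, the value of a validation set becomes much more apparent.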