How to Define Custom Networks and Custom Layers in Caffe (Python), Part 1


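I have long wanted to understand how detection codebases add their own lib directory and compile it into Caffe's networks. I'll start from the very beginning: defining a network.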

I assume Caffe is already installed, so let's keep it short.

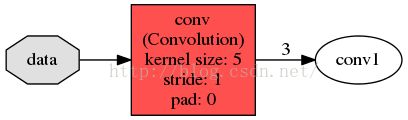

First, define the simplest possible network, conv.prototxt:

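```
name: "convolution"
# Deprecated input fields (order: num, channels, height, width);
# Caffe upgrades them to an Input layer when loading (see the log below)
input: "data"
input_dim: 1
input_dim: 1
input_dim: 100
input_dim: 100
layer {
  name: "conv"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  convolution_param {
    num_output: 3
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
```

Then use the Python interface to run the network and inspect its intermediate variables while debugging.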

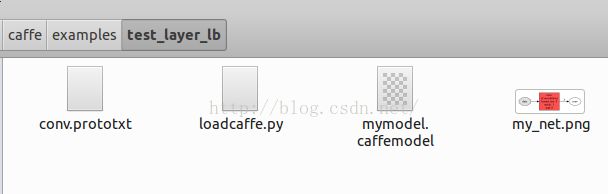

loadcaffe.py

```python
#!/usr/bin/env python
# coding=utf-8
import sys

import numpy as np
import matplotlib.pyplot as plt
from PIL import Image

caffe_root = '/home/x/git/caffe/'
sys.path.insert(0, caffe_root + 'python')  # make pycaffe importable
import caffe

# caffe.set_mode_cpu()  # use this instead of the two lines below if there is no GPU
caffe.set_device(0)
caffe.set_mode_gpu()

net = caffe.Net('/home/x/git/caffe/examples/test_layer_lb/conv.prototxt', caffe.TEST)

print('==========================1===========================')
# inspect the type
print(type(net))
# list all attributes and methods of the object
print(dir(net))
# detailed usage documentation
# help(net)
print(type(net.inputs))
# help(net.inputs)
print(type(net.blobs))

print('==========================2============================')
print(dir(net.blobs['data']))
print([(k, v) for k, v in net.blobs.items()])
print(net.blobs['data'])
print(net.blobs['data'].channels)
print(net.blobs['data'].count)
# all zeros here: no input has been fed and forward() has not run yet
print(net.blobs['data'].data)
print(net.blobs['data'].diff)
print(net.blobs['data'].num)
print(net.blobs['data'].width)
print(net.blobs['data'].data)
print(net.blobs['data'].data.shape)
print('=============================')
print(dir(net.blobs['conv1']))
print(net.blobs['conv1'].data)
print(net.blobs['conv1'].data.shape)
print([(k, v.data.shape) for k, v in net.blobs.items()])

print('==========================3=============================')
print(net.params)
print(type(net.params['conv']))
print(dir(net.params['conv']))
print(len(net.params['conv']))
print(type(net.params['conv'][0]))  # weights
print(type(net.params['conv'][1]))  # bias
print(dir(net.params['conv'][0]))
print(dir(net.params['conv'][1]))
print(net.params['conv'][0].count)
print('=============================')
print(net.params['conv'][0].data)
print('=============================')
print(net.params['conv'][0].diff)
print('=============================')
print(net.params['conv'][0].channels)
print(net.params['conv'][0].height)
print(net.params['conv'][0].width)
print([(k, v[0].data.shape, v[1].data.shape) for k, v in net.params.items()])

print('===========================4============================')
# load a grayscale image (H x W) and add batch and channel axes -> (1, 1, H, W)
im = np.array(Image.open('/home/x/git/caffe/examples/images/cat_gray.jpg'))
im_input = im[np.newaxis, np.newaxis, :, :]
# resize the input blob to the image shape, then copy the pixels in
net.blobs['data'].reshape(*im_input.shape)
net.blobs['data'].data[...] = im_input
print(net.blobs['data'].data.shape)
net.forward()
print(net.blobs['conv1'].data[0, :3])
feature = net.blobs['conv1'].data[0, 1]
print('\n')
print(net.blobs['conv1'].data.shape)
net.save('/home/x/git/caffe/examples/test_layer_lb/mymodel.caffemodel')
```

Draw the network structure with Caffe's python/draw_net.py script, run from the Caffe root directory:

```
python ./python/draw_net.py ./examples/test_layer_lb/conv.prototxt ./examples/test_layer_lb/my_net.png
```

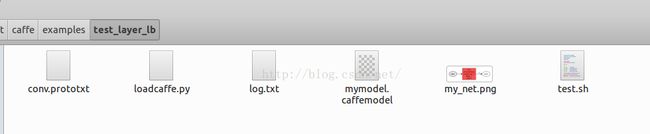

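The run script, test.sh:

```
python ./examples/test_layer_lb/loadcaffe.py 2>&1 | tee ./examples/test_layer_lb/log.txt
```

Run this .sh file from a terminal, again from the Caffe root. It writes a log file, log.txt. Because 2>&1 merges stderr into the pipe, Caffe's glog messages (which go to STDERR, as the first log line says) end up in the log together with the script's printed output: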

```
WARNING: Logging before InitGoogleLogging() is written to STDERR
I1108 14:53:34.118219 10548 upgrade_proto.cpp:66] Attempting to upgrade input file specified using deprecated input fields: /home/x/git/caffe/examples/test_layer_lb/conv.prototxt
I1108 14:53:34.118250 10548 upgrade_proto.cpp:69] Successfully upgraded file specified using deprecated input fields.
W1108 14:53:34.118253 10548 upgrade_proto.cpp:71] Note that future Caffe releases will only support input layers and not input fields.
I1108 14:53:34.118351 10548 net.cpp:49] Initializing net from parameters:
name: "convolution"
state {
  phase: TEST
}
layer {
  name: "input"
  type: "Input"
  top: "data"
  input_param {
    shape {
      dim: 1
      dim: 1
      dim: 100
      dim: 100
    }
  }
}
layer {
  name: "conv"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  convolution_param {
    num_output: 3
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
I1108 14:53:34.118379 10548 layer_factory.hpp:77] Creating layer input
I1108 14:53:34.118391 10548 net.cpp:91] Creating Layer input
I1108 14:53:34.118396 10548 net.cpp:399] input -> data
I1108 14:53:34.126137 10548 net.cpp:141] Setting up input
I1108 14:53:34.126170 10548 net.cpp:148] Top shape: 1 1 100 100 (10000)
I1108 14:53:34.126173 10548 net.cpp:156] Memory required for data: 40000
I1108 14:53:34.126178 10548 layer_factory.hpp:77] Creating layer conv
I1108 14:53:34.126194 10548 net.cpp:91] Creating Layer conv
I1108 14:53:34.126197 10548 net.cpp:425] conv <- data
I1108 14:53:34.126204 10548 net.cpp:399] conv -> conv1
I1108 14:53:34.281524 10548 net.cpp:141] Setting up conv
I1108 14:53:34.281549 10548 net.cpp:148] Top shape: 1 3 96 96 (27648)
I1108 14:53:34.281553 10548 net.cpp:156] Memory required for data: 150592
I1108 14:53:34.281570 10548 net.cpp:219] conv does not need backward computation.
I1108 14:53:34.281585 10548 net.cpp:219] input does not need backward computation.
I1108 14:53:34.281589 10548 net.cpp:261] This network produces output conv1
I1108 14:53:34.281594 10548 net.cpp:274] Network initialization done.
```
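The "Memory required for data" lines are a running total: each layer adds its top blob's element count times the element size. The sketch below reproduces both figures from the logged Top shapes, assuming 4-byte float32 elements (Caffe's default Dtype). blob_count and memory_totals are hypothetical helper names, not pycaffe functions.

```python
from functools import reduce
import operator

BYTES_PER_ELEMENT = 4  # assumption: float32 blobs


def blob_count(shape):
    """Number of elements in an N x C x H x W blob (what Blob.count reports)."""
    return reduce(operator.mul, shape, 1)


def memory_totals(top_shapes, bytes_per_element=BYTES_PER_ELEMENT):
    """Running total of bytes used by top blobs, one entry per layer."""
    total = 0
    result = []
    for name, shape in top_shapes:
        n = blob_count(shape)
        total += n * bytes_per_element
        result.append((name, n, total))
    return result


for name, count, total in memory_totals([("data", (1, 1, 100, 100)),
                                         ("conv1", (1, 3, 96, 96))]):
    print(name, count, total)
# data 10000 40000
# conv1 27648 150592
```

The counts match the numbers in parentheses after each Top shape, and the totals match the two memory lines.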

```
==========================1===========================
['__class__', '__delattr__', '__dict__', '__doc__', '__format__', '__getattribute__', '__hash__', '__init__', '__module__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', '_backward', '_batch', '_blob_loss_weights', '_blob_names', '_blobs', '_bottom_ids', '_forward', '_inputs', '_layer_names', '_outputs', '_set_input_arrays', '_top_ids', 'backward', 'blob_loss_weights', 'blobs', 'bottom_names', 'copy_from', 'forward', 'forward_all', 'forward_backward_all', 'inputs', 'layers', 'load_hdf5', 'outputs', 'params', 'reshape', 'save', 'save_hdf5', 'set_input_arrays', 'share_with', 'top_names']
==========================2============================
['__class__', '__delattr__', '__dict__', '__doc__', '__format__', '__getattribute__', '__hash__', '__init__', '__module__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'channels', 'count', 'data', 'diff', 'height', 'num', 'reshape', 'shape', 'width']
[('data', ), ('conv1', )]
1
10000
[[[[ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   ...,
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]]]]
[[[[ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   ...,
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]]]]
1
100
[[[[ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   ...,
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]]]]
(1, 1, 100, 100)
=============================
['__class__', '__delattr__', '__dict__', '__doc__', '__format__', '__getattribute__', '__hash__', '__init__', '__module__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'channels', 'count', 'data', 'diff', 'height', 'num', 'reshape', 'shape', 'width']
[[[[ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   ...,
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]]

  [[ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   ...,
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]]

  [[ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   ...,
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]
   [ 0. 0. 0. ..., 0. 0. 0.]]]]
(1, 3, 96, 96)
[('data', (1, 1, 100, 100)), ('conv1', (1, 3, 96, 96))]
```
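conv1 is 96×96 because a 5×5 kernel with stride 1 and no padding fits in 100 − 5 + 1 = 96 positions along each axis. The sketch below encodes the output-size rule Caffe's convolution layer uses, (in + 2·pad − (dilation·(k − 1) + 1)) / stride + 1 with integer division. The helper names are hypothetical; pad and dilation default to 0 and 1, which is what applies here since conv.prototxt sets neither.

```python
def conv_output_size(in_size, kernel_size, stride=1, pad=0, dilation=1):
    """Output length along one spatial axis of a Caffe-style convolution."""
    effective_kernel = dilation * (kernel_size - 1) + 1
    return (in_size + 2 * pad - effective_kernel) // stride + 1


def conv_output_shape(input_shape, num_output, kernel_size, stride=1, pad=0, dilation=1):
    """(N, C, H, W) input -> (N, num_output, H_out, W_out) output."""
    n, _, h, w = input_shape
    return (n, num_output,
            conv_output_size(h, kernel_size, stride, pad, dilation),
            conv_output_size(w, kernel_size, stride, pad, dilation))


print(conv_output_shape((1, 1, 100, 100), num_output=3, kernel_size=5))
# (1, 3, 96, 96)
print(conv_output_shape((1, 1, 360, 480), num_output=3, kernel_size=5))
# (1, 3, 356, 476)
print(conv_output_shape((1, 1, 100, 100), num_output=3, kernel_size=5, pad=2))
# (1, 3, 100, 100)
```

The second call is the cat_gray.jpg case from section 4; the third shows that pad: 2 would keep a 5×5 convolution size-preserving.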

```
==========================3=============================
OrderedDict([('conv', )])
['__class__', '__contains__', '__delattr__', '__delitem__', '__dict__', '__doc__', '__format__', '__getattribute__', '__getitem__', '__hash__', '__init__', '__instance_size__', '__iter__', '__len__', '__module__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__setitem__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'add_blob', 'append', 'extend']
2
['__class__', '__delattr__', '__dict__', '__doc__', '__format__', '__getattribute__', '__hash__', '__init__', '__module__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'channels', 'count', 'data', 'diff', 'height', 'num', 'reshape', 'shape', 'width']
['__class__', '__delattr__', '__dict__', '__doc__', '__format__', '__getattribute__', '__hash__', '__init__', '__module__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'channels', 'count', 'data', 'diff', 'height', 'num', 'reshape', 'shape', 'width']
75
=============================
[[[[-0.00704367 -0.00119209 0.00686654 0.00972978 0.00362616]
   [ 0.00425677 0.00765205 0.00991023 0.00645294 -0.00508114]
   [ 0.00014955 -0.00313787 0.00975105 0.00171034 -0.00474508]
   [ 0.00591688 0.00376475 -0.00325199 0.0108103 0.00266656]
   [ 0.00169351 0.00993805 0.01318043 0.0033258 -0.01794613]]]

 [[[ 0.00061946 -0.00263051 -0.00400451 0.00263068 -0.0146658 ]
   [ 0.00062043 0.00074114 -0.00670637 0.00151422 0.00046219]
   [-0.01326571 0.00625049 0.0023697 0.0159948 0.00615419]
   [-0.00978592 0.00256772 -0.00447202 -0.01537695 -0.01556842]
   [-0.00405405 0.00903763 0.01306344 0.01252459 0.00674845]]]

 [[[ 0.00452632 -0.01486946 0.00304247 -0.00080888 0.01048851]
   [ 0.00261175 0.00788001 -0.00522757 -0.00017629 -0.00323713]
   [ 0.00389483 0.00541457 -0.00040399 -0.0134966 0.00171397]
   [-0.0081343 -0.00347066 0.00388015 0.00704651 -0.01185634]
   [ 0.0117879 0.01379872 -0.0018975 -0.0082513 -0.01582935]]]]
=============================
[[[[ 0. 0. 0. 0. 0.]
   [ 0. 0. 0. 0. 0.]
   [ 0. 0. 0. 0. 0.]
   [ 0. 0. 0. 0. 0.]
   [ 0. 0. 0. 0. 0.]]]

 [[[ 0. 0. 0. 0. 0.]
   [ 0. 0. 0. 0. 0.]
   [ 0. 0. 0. 0. 0.]
   [ 0. 0. 0. 0. 0.]
   [ 0. 0. 0. 0. 0.]]]

 [[[ 0. 0. 0. 0. 0.]
   [ 0. 0. 0. 0. 0.]
   [ 0. 0. 0. 0. 0.]
   [ 0. 0. 0. 0. 0.]
   [ 0. 0. 0. 0. 0.]]]]
=============================
1
5
5
[('conv', (3, 1, 5, 5), (3,))]
```
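Section 3 shows the conv layer owns two parameter blobs: weights of shape (3, 1, 5, 5), hence count 75 and channels/height/width of 1/5/5, and a bias of shape (3,). The sketch below derives those shapes from the layer settings, assuming Caffe's weight layout of (num_output, input_channels / group, kernel_h, kernel_w) and a bias of (num_output,) when bias_term is on. conv_param_shapes is a hypothetical helper, and it only handles square kernels.

```python
def conv_param_shapes(num_output, in_channels, kernel_size, group=1, bias_term=True):
    """Shapes of the parameter blobs of a square-kernel Convolution layer."""
    if in_channels % group or num_output % group:
        raise ValueError("in_channels and num_output must be divisible by group")
    weight = (num_output, in_channels // group, kernel_size, kernel_size)
    shapes = [weight]
    if bias_term:
        shapes.append((num_output,))
    return shapes


def count(shape):
    """Element count of a blob shape, like Blob.count."""
    n = 1
    for d in shape:
        n *= d
    return n


shapes = conv_param_shapes(num_output=3, in_channels=1, kernel_size=5)
print(shapes)                      # [(3, 1, 5, 5), (3,)]
print([count(s) for s in shapes])  # [75, 3]
```

This is also why len(net.params['conv']) prints 2: index 0 is the weight blob and index 1 the bias blob.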

```
===========================4============================
(1, 1, 360, 480)
[[[ 1.80256486 1.85992372 1.93549395 ..., 3.61516118 3.61371922
    3.62665534]
  [ 1.89069617 1.90655768 1.90023112 ..., 3.92563343 3.86107874
    3.89744091]
  [ 1.81342971 1.87407196 1.88135409 ..., 4.24605417 4.08810377
    4.07397509]
  ...,
  [ 2.43323398 2.45806456 2.56792927 ..., 14.08971691 13.60014725
    13.05749702]
  [ 2.64301062 2.58942032 2.69755673 ..., 14.45938492 13.62996197
    13.4437809 ]
  [ 2.65608096 2.82163405 3.07213736 ..., 13.9683733 13.70003796
    13.68651295]]

 [[ -0.33850718 -0.27488053 -0.20817412 ..., -0.19259498 -0.28113356
    -0.37528431]
  [ -0.28850365 -0.28463683 -0.32789859 ..., -0.33837739 -0.2473668
    -0.34177506]
  [ -0.23893072 -0.14233448 -0.19258618 ..., -0.40048659 -0.39444199
    -0.44427004]
  ...,
  [ -0.39798406 -0.37440255 -0.30903548 ..., -1.08480096 -1.47327387
    -1.51259077]
  [ -0.119674 -0.07250091 -0.13145781 ..., -2.08813357 -2.22062492
    -2.20186663]
  [ 0.05246845 0.19791798 0.11286487 ..., -1.70923364 -1.74369955
    -2.21097374]]

 [[ -0.29370564 -0.2816526 -0.34196407 ..., -0.63975441 -0.61519194
    -0.61688489]
  [ -0.32314965 -0.20828477 -0.23015976 ..., -0.67447752 -0.65674865
    -0.7253679 ]
  [ -0.33232877 -0.32414252 -0.33327326 ..., -0.75686628 -0.70744246
    -0.73356175]
  ...,
  [ -0.62094498 -0.54891551 -0.34199172 ..., -1.91440523 -2.09178185
    -1.86249638]
  [ -0.49657905 -0.51457095 -0.34446782 ..., -0.58781362 -1.33256721
    -2.25190568]
  [ -0.87521422 -0.77985859 -0.5490675 ..., -1.67486632 -1.22804785
    -1.45197225]]]


(1, 3, 356, 476)
```

That basically wraps it up.
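Each value printed for conv1 in section 4 is a weighted sum over a 5×5 window of the input image plus that channel's bias. The plain-Python sketch below spells this out for a single-channel input like the one here. It computes a "valid" cross-correlation (no kernel flip, no padding), which is what Caffe's Convolution layer computes, but it is only an illustration, not Caffe's actual im2col-plus-GEMM implementation; conv2d_valid is a hypothetical name, and a multi-channel input would additionally sum over input channels.

```python
def conv2d_valid(image, kernels, biases, stride=1):
    """Naive single-channel 'valid' cross-correlation.

    image:   H x W list of lists of floats (one input channel)
    kernels: one K x K kernel per output channel
    biases:  one float per output channel
    Returns one output map per kernel.
    """
    h, w = len(image), len(image[0])
    outputs = []
    for kernel, bias in zip(kernels, biases):
        k = len(kernel)
        out_h = (h - k) // stride + 1
        out_w = (w - k) // stride + 1
        out = [[0.0] * out_w for _ in range(out_h)]
        for i in range(out_h):
            for j in range(out_w):
                acc = bias
                # slide the kernel over the window starting at (i*stride, j*stride)
                for di in range(k):
                    for dj in range(k):
                        acc += kernel[di][dj] * image[i * stride + di][j * stride + dj]
                out[i][j] = acc
        outputs.append(out)
    return outputs


# 6x6 input, a 5x5 kernel that only picks its centre pixel, zero bias
image = [[float(r * 6 + c) for c in range(6)] for r in range(6)]
center = [[0.0] * 5 for _ in range(5)]
center[2][2] = 1.0
maps = conv2d_valid(image, [center], [0.0])
print(maps[0])  # [[14.0, 15.0], [20.0, 21.0]]
```

With the random Gaussian weights from the weight_filler instead of this hand-made kernel, the same loop would yield values like the ones in the log, just far more slowly than Caffe.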

Did you pick all of this up? The next part goes further with more hands-on practice.