Reading notes on the book Kubernetes权威指南, part 1: setting up the basic services, creating Pods, and accessing them

Don't just walk past: a quick like leaves a lingering fragrance on your hand.

- Disable the firewall

systemctl status firewalld

systemctl disable firewalld

systemctl stop firewalld

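systemctl is a systemd tool, mainly responsible for controlling the systemd system and service manager.

systemd is a collection of system-management daemons, tools and libraries that replaces the System V init process. Its purpose is to centrally manage and configure Unix-like systems.

In the Linux ecosystem, systemd has been adopted by most standard Linux distributions; only a few have not adopted it. systemd is usually, but not always, the parent process of all other daemons.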

https://linux.cn/article-5926-1.html

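- Install etcd and Kubernetes

yum install etcd kubernetes

etcd is an open-source, distributed key-value data store that provides shared configuration as well as service registration and discovery.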

Problem:

The installation fails with:

Transaction check error:

file /usr/bin/kubectl from install of kubernetes-client-1.5.2-0.7.git269f928.el7.x86_64 conflicts with file from package kubectl-1.1

file /usr/bin/kubelet from install of kubernetes-node-1.5.2-0.7.git269f928.el7.x86_64 conflicts with file from package kubelet-1.10.

Cause: these files conflict with packages that are already installed.

Solution: uninstall the conflicting local packages with yum remove.
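When yum reports several conflicts, the names of the installed packages to remove can be pulled out of its error text mechanically. A minimal Python sketch, assuming the error lines look like the ones above; the helper `conflicting_packages` is hypothetical and not part of yum:

```python
import re

# One yum line per conflicting file, e.g.
# "file /usr/bin/kubectl from install of ... conflicts with file from package kubectl-1.1"
CONFLICT = re.compile(r"conflicts with file from package (\S+)")


def conflicting_packages(yum_output: str) -> list:
    """Return installed package names (version stripped) that yum reports as conflicting."""
    names = []
    for nevra in CONFLICT.findall(yum_output):
        # An RPM name is followed by "-<version>", so cut at the first "-<digit>"
        name = re.split(r"-\d", nevra, maxsplit=1)[0]
        if name not in names:  # keep first-seen order, drop duplicates
            names.append(name)
    return names
```

For the two conflicts above this yields `kubectl` and `kubelet`, i.e. `yum remove kubectl kubelet`, after which the original `yum install` can be rerun.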

- Start the services

systemctl start etcd

systemctl start docker

systemctl start kube-apiserver

systemctl start kube-controller-manager

systemctl start kube-scheduler

systemctl start kubelet

systemctl start kube-proxy
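The same startup sequence can be wrapped in a small Python sketch (a hypothetical helper, not from the book): it runs `systemctl start` for each unit in the order above and stops at the first failure, so a broken etcd is noticed before the components that depend on it are started. The `run` parameter exists only so the logic can be tried without systemd.

```python
import subprocess

# Same order as the manual steps: etcd first (kube-apiserver keeps its state there),
# then Docker, the control-plane components, and finally the node components.
SERVICES = [
    "etcd",
    "docker",
    "kube-apiserver",
    "kube-controller-manager",
    "kube-scheduler",
    "kubelet",
    "kube-proxy",
]


def start_services(services=SERVICES, run=subprocess.run):
    """Run `systemctl start <unit>` for each unit in order and stop at the first failure.

    `run` defaults to subprocess.run; it is a parameter only so the logic can be
    exercised without systemd. Returns the units that started successfully.
    """
    started = []
    for unit in services:
        result = run(["systemctl", "start", unit])
        if result.returncode != 0:
            print(f"failed to start {unit} (exit code {result.returncode})")
            break
        started.append(unit)
    return started
```

Called as root on the node, `start_services()` returns the units that came up; if the list is shorter than `SERVICES`, inspect the first missing unit with `systemctl status <unit>`.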

- Create an RC to start the Pod (mysql)

kubectl create -f mysql-rc.yaml

#RC template: mysql-rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: mysql
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
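A ReplicationController manages exactly the Pods whose labels match its `selector`, so `spec.template.metadata.labels` must satisfy that selector; the API server rejects an RC where they do not match. A small Python sketch of that check, with mysql-rc.yaml transcribed into a dict (kubectl also accepts manifests in JSON form); the function name is hypothetical:

```python
def selector_matches_template(rc: dict) -> bool:
    """True if every key/value in spec.selector also appears in the Pod template labels.

    An RC with no selector defaults to its template labels, so an empty
    selector counts as matching.
    """
    spec = rc["spec"]
    selector = spec.get("selector", {})
    labels = spec["template"]["metadata"].get("labels", {})
    return all(labels.get(key) == value for key, value in selector.items())


# mysql-rc.yaml from above, written as a Python dict
mysql_rc = {
    "apiVersion": "v1",
    "kind": "ReplicationController",
    "metadata": {"name": "mysql"},
    "spec": {
        "replicas": 1,
        "selector": {"app": "mysql"},
        "template": {
            "metadata": {"labels": {"app": "mysql"}},
            "spec": {
                "containers": [
                    {
                        "name": "mysql",
                        "image": "mysql",
                        "ports": [{"containerPort": 3306}],
                        "env": [{"name": "MYSQL_ROOT_PASSWORD", "value": "123456"}],
                    }
                ]
            },
        },
    },
}

print(selector_matches_template(mysql_rc))  # True
```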

Problem:

The RC is created successfully, but no Pods are generated for it.

Cause: a permissions issue.

Solution: edit /etc/kubernetes/apiserver, remove SecurityContextDeny and ServiceAccount from KUBE_ADMISSION_CONTROL, and restart the kube-apiserver.service service.
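The edit can also be scripted. A minimal Python sketch, assuming the CentOS 7 packaging where the file holds a single uncommented line of the form `KUBE_ADMISSION_CONTROL="--admission-control=A,B,C"`; the plugin list on your machine may differ, and the helper name is hypothetical:

```python
import re

# Plugins the fix above removes from the admission-control list
REMOVE = {"SecurityContextDeny", "ServiceAccount"}

# Assumed line format in /etc/kubernetes/apiserver:
# KUBE_ADMISSION_CONTROL="--admission-control=Plugin1,Plugin2,..."
LINE = re.compile(r'^(KUBE_ADMISSION_CONTROL="--admission-control=)([^"]*)(")', re.MULTILINE)


def strip_admission_plugins(config_text: str, remove=REMOVE) -> str:
    """Return config_text with the given plugins dropped from KUBE_ADMISSION_CONTROL."""
    def _filter(match):
        kept = [p for p in match.group(2).split(",") if p and p not in remove]
        return match.group(1) + ",".join(kept) + match.group(3)

    # Other lines, including commented-out ones, are returned unchanged
    return LINE.sub(_filter, config_text)
```

To apply it, read /etc/kubernetes/apiserver, keep a backup copy, write back the returned text, and then run `systemctl restart kube-apiserver`.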

Once it is created, check the status of the RC and the Pods:

[root@localhost k8s]# kubectl get rc

NAME DESIRED CURRENT READY AGE

mysql 1 1 0 22s

[root@localhost k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

mysql-10gsx 0/1 ContainerCreating 0 26s

#The Pod is stuck in this state; check its details

[root@localhost k8s]# kubectl describe pod mysql-10gsx

Name: mysql-10gsx

Namespace: default

Node: 127.0.0.1/127.0.0.1

Start Time: Sat, 20 Oct 2018 01:31:50 -0700

Labels: app=mysql

Status: Pending

IP:

Controllers: ReplicationController/mysql

Containers:

mysql:

Container ID:

Image: docker.io/mysql

Image ID:

Port: 3306/TCP

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Volume Mounts:

Environment Variables:

MYSQL_ROOT_PASSWORD: 123456

Conditions:

Type Status

Initialized True

Ready False

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations:

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

1d 1m 265 {kubelet 127.0.0.1} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

1d 9s 5786 {kubelet 127.0.0.1} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"registry.access.redhat.com/rhel7/pod-infrastructure:latest\""

The key part of the error is: details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)

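Solution:

/etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt (the path in the error message above) is a symbolic link, but the /etc/rhsm files it points to do not actually exist, so install them with yum:

yum install '*rhsm*'

After the installation finishes, run docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest.

If that still fails, try the following instead: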

wget http://mirror.centos.org/centos/7/os/x86_64/Packages/python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm

rpm2cpio python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm | cpio -iv --to-stdout ./etc/rhsm/ca/redhat-uep.pem | tee /etc/rhsm/ca/redhat-uep.pem

These two commands create the file /etc/rhsm/ca/redhat-uep.pem.
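Whichever fix you use, you can check whether the certificate path is still a dangling symlink. A Python sketch using only `os.path` (the function name is hypothetical); on the broken host it should report `dangling`, and after a successful fix `link-ok`:

```python
import os

# Path from the kubelet error message above
CERT = "/etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt"


def symlink_status(path: str) -> str:
    """Classify path as 'missing', 'dangling' (a symlink whose target is absent), 'link-ok' or 'exists'."""
    if os.path.islink(path):
        # os.path.exists() follows the link, so it is False when the target is missing
        return "link-ok" if os.path.exists(path) else "dangling"
    return "exists" if os.path.exists(path) else "missing"


if __name__ == "__main__":
    status = symlink_status(CERT)
    target = os.readlink(CERT) if os.path.islink(CERT) else "-"
    print(f"{CERT}: {status} (target: {target})")
```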

If all goes well, docker pull now succeeds:

[root@localhost]# docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest

Trying to pull repository registry.access.redhat.com/rhel7/pod-infrastructure ...

latest: Pulling from registry.access.redhat.com/rhel7/pod-infrastructure

26e5ed6899db: Pull complete

66dbe984a319: Pull complete

9138e7863e08: Pull complete

Digest: sha256:92d43c37297da3ab187fc2b9e9ebfb243c1110d446c783ae1b989088495db931

Status: Downloaded newer image for registry.access.redhat.com/rhel7/pod-infrastructure:latest

# Check the status again

[root@localhost k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

mysql-10gsx 1/1 Running 0 1d

Deleting the RC and its Pods:

[root@cent7-2 docker]# kubectl delete -f mysql-rc.yaml

replicationcontroller "mysql" deleted

[root@cent7-2 docker]# kubectl get rc

No resources found.

[root@cent7-2 docker]# kubectl get pods

NAME READY STATUS RESTARTS AGE

mysql-hgkwr 0/1 Terminating 0 17m

[root@cent7-2 docker]# kubectl delete po mysql-hgkwr

pod "mysql-hgkwr" deleted

# Force-delete Pods (--all deletes every Pod in the current namespace)

kubectl delete pods --all --grace-period=0 --force

- Create the Service (svc)

#Service template: mysql-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - port: 3306
  selector:
    app: mysql

[root@localhost k8s]# kubectl create -f mysql-svc.yaml

service "mysql" created

[root@localhost k8s]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 443/TCP 1d

mysql 10.254.248.175 3306/TCP 42s

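Note that the MySQL Service has been assigned the Cluster IP 10.254.248.175. This is a virtual address: other Pods newly created in the Kubernetes cluster can reach MySQL through the Service's Cluster IP plus port 3306.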
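A Cluster IP is implemented by kube-proxy forwarding rules rather than by a real network interface, so in the default iptables mode it typically does not answer ping; opening a TCP connection to IP:port is the more meaningful test. A minimal Python probe (hypothetical helper) to run from inside another Pod, assuming Python is available in that container:

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port is accepted within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out or name not resolved
        return False
```

From a Pod in the cluster, `can_connect("10.254.248.175", 3306)` should return True once the mysql Pod is Running.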

- Create the web containers

#docker search tomcat-app

#docker images docker.io/kubeguide/tomcat-app

#docker pull docker.io/kubeguide/tomcat-app:v1

vi myweb-rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb
spec:
  replicas: 5
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: kubeguide/tomcat-app:v1
        ports:
        - containerPort: 8080
        env:
        - name: MYSQL_SERVICE_HOST
          value: 'mysql'
        - name: MYSQL_SERVICE_PORT
          value: '3306'

#Note that the Tomcat container in the RC above references the environment variable MYSQL_SERVICE_HOST=mysql,

#and "mysql" is exactly the name of the MySQL Service we defined earlier.
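Besides the value set explicitly here, Kubernetes itself injects `<NAME>_SERVICE_HOST` and `<NAME>_SERVICE_PORT` variables into a container for every Service in the same namespace that already exists when the Pod starts, with the Service name upper-cased and `-` turned into `_`; a variable declared in the container's own `env`, as in this RC, takes precedence. A small Python sketch of the naming rule (hypothetical helper; the real injection is done by the kubelet):

```python
def service_env_vars(service_name: str, cluster_ip: str, port: int) -> dict:
    """Build the HOST/PORT pair Kubernetes injects for a Service (docker-links style names).

    Only these two variables are modelled; the kubelet also adds *_PORT_* variants.
    """
    prefix = service_name.upper().replace("-", "_")
    return {
        f"{prefix}_SERVICE_HOST": cluster_ip,
        f"{prefix}_SERVICE_PORT": str(port),
    }


# Values of the mysql Service from `kubectl get svc` above
print(service_env_vars("mysql", "10.254.248.175", 3306))
# {'MYSQL_SERVICE_HOST': '10.254.248.175', 'MYSQL_SERVICE_PORT': '3306'}
```

So if the explicit MYSQL_SERVICE_HOST entry were left out and the mysql Service existed before the myweb Pods started, the container would see the Cluster IP rather than the name `mysql`.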

Result:

[root@localhost k8s]# kubectl create -f myweb-rc.yaml

replicationcontroller "myweb" created

[root@localhost k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

mysql-10gsx 1/1 Running 0 1d

myweb-1t5h1 1/1 Running 0 33s

myweb-7wfx9 1/1 Running 0 33s

myweb-96bw7 1/1 Running 0 33s

myweb-mr3cg 1/1 Running 0 33s

myweb-v0sp8 1/1 Running 0 33s

[root@localhost k8s]# kubectl get rc

NAME DESIRED CURRENT READY AGE

mysql 1 1 1 1d

myweb 5 5 5 1m

Create the corresponding Service:

[root@localhost k8s]# vi myweb-svc.yaml

[root@localhost k8s]# cat myweb-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort
  ports:
  - port: 8080
    nodePort: 30001
  selector:
    app: myweb

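The two settings type=NodePort and nodePort=30001 mean that this Service is exposed to the outside network in NodePort mode.

A Service's virtual IP lives on an internal network that Kubernetes virtualizes, so it cannot be addressed from outside the cluster. Some services, however, must be reachable from outside, for example a web front end. That requires an extra layer of forwarding from the external network into the internal one, and Kubernetes provides three ways to do it: NodePort, LoadBalancer and Ingress.

NodePort: the earlier Guestbook example already demonstrated how NodePort is used. The principle is that Kubernetes exposes a port, the nodePort, on every Node, and clients on the external network can reach the backend Service through [NodeIP]:[NodePort] of any Node.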
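From outside the cluster the Service is therefore just `http://<any NodeIP>:30001`. A Python sketch with hypothetical helpers that builds that URL, checks the port against kube-apiserver's default NodePort range (30000-32767, set by `--service-node-port-range`), and fetches the HTTP status code:

```python
import urllib.error
import urllib.request

# Default value of kube-apiserver's --service-node-port-range (30000-32767)
NODE_PORT_RANGE = range(30000, 32768)


def nodeport_url(node_ip: str, node_port: int, path: str = "/") -> str:
    """URL of a NodePort Service as seen from outside: http://<NodeIP>:<nodePort><path>."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside the default range 30000-32767")
    return f"http://{node_ip}:{node_port}{path}"


def http_status(url: str, timeout: float = 5.0) -> int:
    """GET url and return the HTTP status code (4xx/5xx are returned, not raised)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code
```

On the node itself, `http_status(nodeport_url("127.0.0.1", 30001))` should return 200 once the myweb Pods are Running; any Node's IP works in place of 127.0.0.1.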

[root@localhost k8s]# kubectl create -f myweb-svc.yaml

service "myweb" created

[root@localhost k8s]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 443/TCP 1d

mysql 10.254.248.175 3306/TCP 12h

myweb 10.254.183.138 8080:30001/TCP 34s

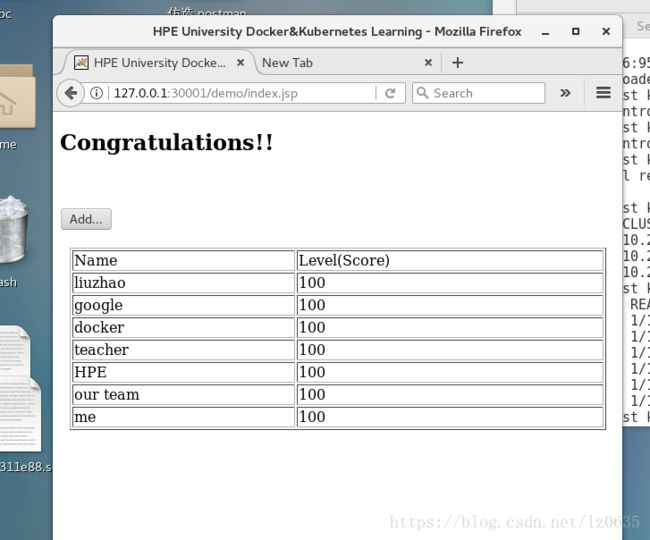

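The Tomcat page opens at <host IP>:30001 or 127.0.0.1:30001.

However, opening 127.0.0.1:30001/demo shows a JDBC database connection error: the application connects to the host named in MYSQL_SERVICE_HOST (mysql), and nothing in this setup resolves that name inside the myweb Pods. The following steps look up the MySQL endpoint and map the name by hand: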

[root@localhost ~]# kubectl get ep

NAME ENDPOINTS AGE

kubernetes 192.168.80.128:6443 9h

mysql 172.17.0.7:3306 9h

myweb 172.17.0.2:8080,172.17.0.3:8080,172.17.0.4:8080 + 2 more... 9h

[root@localhost ~]# kubectl exec -ti myweb-qrjsd -- /bin/bash

root@myweb-qrjsd:/usr/local/tomcat# echo $MYSQL_SERVICE_HOST

mysql

root@myweb-qrjsd:/usr/local/tomcat# echo "172.17.0.7 mysql" >> /etc/hosts

root@myweb-qrjsd:/usr/local/tomcat#
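`echo ... >> /etc/hosts` appends a new line every time it is run. A Python sketch of the same edit that only adds the mapping when the name is not already present (hypothetical helper; it works on the file's text). Mapping `mysql` to the Service's Cluster IP (10.254.248.175 here) rather than the Pod IP 172.17.0.7 would keep working if the MySQL Pod is recreated with a new IP, because a Service keeps its Cluster IP for its whole lifetime.

```python
def add_hosts_entry(hosts_text: str, ip: str, name: str) -> str:
    """Return hosts_text with an "ip name" line appended, unless `name` is already mapped."""
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # ignore comments
        if len(fields) >= 2 and name in fields[1:]:
            return hosts_text  # already present: don't add a duplicate line
    if hosts_text and not hosts_text.endswith("\n"):
        hosts_text += "\n"
    return hosts_text + f"{ip} {name}\n"


# Inside the Pod, assuming python3 exists in the image:
# with open("/etc/hosts") as f:
#     text = f.read()
# with open("/etc/hosts", "w") as f:
#     f.write(add_hosts_entry(text, "10.254.248.175", "mysql"))
```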

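Note that this /etc/hosts edit only applies to the one Pod you exec into (myweb-qrjsd) and is lost when that Pod is recreated; the other myweb replicas need the same change. Mission accomplished: wrap up, head home, have dinner.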
