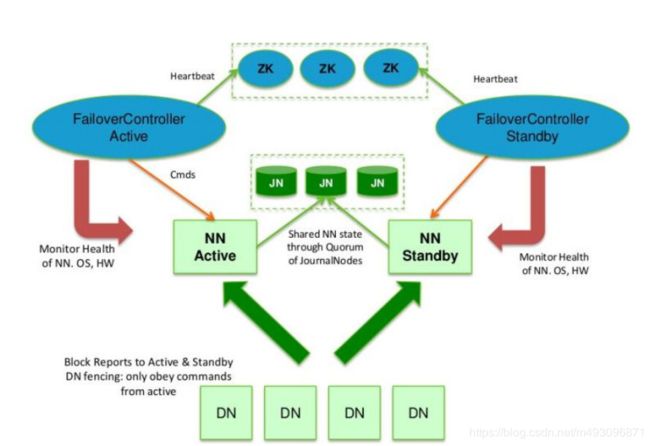

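Hadoop 3.0.3 high availability (ZK; DN)

Apache Hadoop is already set up on five hosts, server1 through server5.

nfs-utils and rpcbind: export the hadoop directory from server1 over NFS and mount it on server2 through server5

Clean up the environment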

sbin/stop-yarn.sh

sbin/stop-dfs.sh

rm -fr /tmp/*    (on every node)

Kill all Java processes
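
The cleanup above has to be repeated on each node. A minimal sketch follows, assuming passwordless SSH and host names server1 to server5 that resolve (neither is stated in these notes). `pkill -u hadoop java` is just one way to kill the Java processes. The script only prints the commands unless DRY_RUN=0 is set.

```shell
#!/usr/bin/env bash
# Sketch only: host names and passwordless SSH are assumptions.
HOSTS="${HOSTS:-server1 server2 server3 server4 server5}"

# Print the command instead of running it unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

clean_cluster() {
  local host
  # Stop YARN and HDFS once, from the Hadoop home directory.
  run sbin/stop-yarn.sh
  run sbin/stop-dfs.sh
  for host in $HOSTS; do
    # Kill leftover Java processes of the hadoop user, then wipe /tmp.
    run ssh "$host" "pkill -u hadoop java; rm -fr /tmp/*"
  done
}

clean_cluster
```

Review the printed commands first; rerun with DRY_RUN=0 only from the Hadoop home directory on a cluster you really intend to wipe.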

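server2 and server5: HA (NameNodes)

server1, server3 and server4: ZooKeeper

Run the ZooKeeper steps on server1. Hosts 1 and 2 got mixed up in the transcript below (the prompt shows server2), so follow along on server1.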

[hadoop@server2 ~]$ tar zxf zookeeper-3.4.9.tar.gz

[hadoop@server2 ~]$ cd zookeeper-3.4.9/conf/

[hadoop@server2 conf]$ cp zoo_sample.cfg zoo.cfg

[hadoop@server2 conf]$ vim zoo.cfg

server.1=172.25.11.2:2888:3888

server.2=172.25.11.3:2888:3888

server.3=172.25.11.4:2888:3888
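
For reference, a complete zoo.cfg with these three entries might look like the sketch below. The first five settings are the defaults that ship in zoo_sample.cfg; the server lines are copied from the notes. CONF_DIR is a hypothetical scratch directory so the sketch does not overwrite a real configuration.

```shell
#!/usr/bin/env bash
# Sketch: write a three-node zoo.cfg into a scratch directory.
# CONF_DIR is a hypothetical location; the real file is
# ~/zookeeper-3.4.9/conf/zoo.cfg.
CONF_DIR="${CONF_DIR:-./zk-conf-demo}"
mkdir -p "$CONF_DIR"

cat > "$CONF_DIR/zoo.cfg" <<'EOF'
# Defaults carried over from zoo_sample.cfg
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/tmp/zookeeper
clientPort=2181
# Ensemble members: server.<myid>=<host>:<peer port>:<election port>
server.1=172.25.11.2:2888:3888
server.2=172.25.11.3:2888:3888
server.3=172.25.11.4:2888:3888
EOF

# Show the ensemble entries that were written.
grep '^server\.' "$CONF_DIR/zoo.cfg"
```

The number after `server.` must match the content of the myid file under dataDir on that host.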

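On server1, server3 and server4, write 1, 2 and 3 respectively into the myid file.

Run the steps below on each of the three hosts, using the ID that matches the host (1, 2, 3).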

[hadoop@server2 conf]$ mkdir /tmp/zookeeper

[hadoop@server2 conf]$ vim /tmp/zookeeper/myid

[hadoop@server2 conf]$ cat /tmp/zookeeper/myid

1

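echo 1 > /tmp/zookeeper/myid    (keep a space between the digit and ">"; "echo 1> file" is parsed as a redirect of file descriptor 1 and writes only an empty line)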
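
The per-host ID assignment can be scripted so the same snippet runs on all three nodes. This is a sketch: the function names are made up for illustration, and the demo writes into a scratch directory (DEMO_DIR, hypothetical) rather than /tmp/zookeeper.

```shell
#!/usr/bin/env bash
# Sketch: map each ZooKeeper host to its id and write the myid file.
# The mapping follows the notes: server1, server3, server4 -> 1, 2, 3.
myid_for_host() {
  case "$1" in
    server1) echo 1 ;;
    server3) echo 2 ;;
    server4) echo 3 ;;
    *) echo "not a ZooKeeper host: $1" >&2; return 1 ;;
  esac
}

write_myid() {
  # $1 = host name, $2 = ZooKeeper dataDir (e.g. /tmp/zookeeper)
  local id
  id="$(myid_for_host "$1")" || return 1
  mkdir -p "$2"
  echo "$id" > "$2/myid"   # note the space before '>'
}

# Demo in a scratch directory instead of the real dataDir.
DEMO_DIR="${DEMO_DIR:-./zk-data-demo}"
write_myid server3 "$DEMO_DIR"
cat "$DEMO_DIR/myid"   # prints 2
```

On a real node the call would be something like `write_myid "$(hostname -s)" /tmp/zookeeper`, assuming the short host name is server1, server3 or server4.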

Start ZooKeeper on server1, server3 and server4

bin/zkServer.sh start

~/zookeeper-3.4.9/bin/zkServer.sh start

server2

vim core-site.xml

[hadoop@server2 zookeeper-3.4.9]$ vim ../hadoop/etc/hadoop/core-site.xml
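
The notes do not record what goes into core-site.xml. For an HA setup with automatic failover, the two properties that usually matter are fs.defaultFS and ha.zookeeper.quorum. The sketch below writes them to a scratch directory. The nameservice ID "masters" and OUT_DIR are hypothetical, and the quorum addresses are taken from the zoo.cfg lines above.

```shell
#!/usr/bin/env bash
# Sketch: HA-related core-site.xml properties, written to a scratch directory.
# "masters" (nameservice id) and OUT_DIR are hypothetical names.
OUT_DIR="${OUT_DIR:-./hadoop-conf-demo}"
mkdir -p "$OUT_DIR"

cat > "$OUT_DIR/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Clients address the logical nameservice, not a single NameNode -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://masters</value>
  </property>
  <!-- ZooKeeper ensemble used by the failover controllers (addresses as in zoo.cfg) -->
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>172.25.11.2:2181,172.25.11.3:2181,172.25.11.4:2181</value>
  </property>
</configuration>
EOF

# Count the properties that were written.
grep -c '<property>' "$OUT_DIR/core-site.xml"   # prints 2
```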

[hadoop@server2 zookeeper-3.4.9]$ vim ../hadoop/etc/hadoop/hdfs-site.xml

Fencing methods (dfs.ha.fencing.methods in hdfs-site.xml), one per line:

sshfence

shell(/bin/true)
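
A sketch of an hdfs-site.xml that uses these two fencing methods with a quorum journal is given below. Only the property names are standard Hadoop. The nameservice "masters", the NameNode IDs h1 and h2, RPC port 9000, the journal directory, the SSH key path and the JournalNode host names are all assumptions. The HTTP addresses match the web UI URLs used later in these notes.

```shell
#!/usr/bin/env bash
# Sketch: HA hdfs-site.xml written to a scratch directory (OUT_DIR is hypothetical).
OUT_DIR="${OUT_DIR:-./hadoop-conf-demo}"
mkdir -p "$OUT_DIR"

cat > "$OUT_DIR/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property><name>dfs.nameservices</name><value>masters</value></property>
  <property><name>dfs.ha.namenodes.masters</name><value>h1,h2</value></property>
  <!-- RPC port 9000 is an assumption; HTTP port 9870 is the Hadoop 3 default -->
  <property><name>dfs.namenode.rpc-address.masters.h1</name><value>172.25.11.2:9000</value></property>
  <property><name>dfs.namenode.http-address.masters.h1</name><value>172.25.11.2:9870</value></property>
  <property><name>dfs.namenode.rpc-address.masters.h2</name><value>172.25.11.5:9000</value></property>
  <property><name>dfs.namenode.http-address.masters.h2</name><value>172.25.11.5:9870</value></property>
  <!-- JournalNodes on server1, server3, server4 (host names assumed resolvable) -->
  <property><name>dfs.namenode.shared.edits.dir</name><value>qjournal://server1:8485;server3:8485;server4:8485/masters</value></property>
  <property><name>dfs.journalnode.edits.dir</name><value>/tmp/journaldata</value></property>
  <property><name>dfs.ha.automatic-failover.enabled</name><value>true</value></property>
  <property><name>dfs.client.failover.proxy.provider.masters</name><value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value></property>
  <!-- One fencing method per line: SSH first, then a no-op that always succeeds -->
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>
sshfence
shell(/bin/true)
    </value>
  </property>
  <property><name>dfs.ha.fencing.ssh.private-key-files</name><value>/home/hadoop/.ssh/id_rsa</value></property>
</configuration>
EOF

# Confirm both fencing methods landed in the file.
grep -F -e 'sshfence' -e 'shell(/bin/true)' "$OUT_DIR/hdfs-site.xml"
```

The `shell(/bin/true)` fallback lets failover proceed when the failed NameNode host is unreachable over SSH, at the cost of not actually fencing it.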

bin/hdfs namenode -format

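server1, server3 and server4: worker nodes (JournalNode, DataNode)

server2 and server5: HA NameNodes

On server1, server3 and server4:

sbin/hadoop-daemon.sh start journalnode    (pre-3.x form, deprecated in Hadoop 3; the hdfs --daemon form below is the current one)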

~/hadoop/bin/hdfs --daemon start journalnode

server2

bin/hdfs namenode -format

scp -r /tmp/hadoop-hadoop 172.25.11.5:/tmp

~/hadoop/bin/hdfs zkfc -formatZK    (initializes the failover controller state in ZooKeeper)

~/hadoop/sbin/start-dfs.sh
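
The order of the steps matters: ZooKeeper and the JournalNodes must be running before the NameNode is formatted. The sketch below prints the whole sequence as one dry run. It assumes passwordless SSH and resolvable host names (not stated in the notes), and the `run` and `bootstrap_ha` helpers are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch of the start-up order; prints commands unless DRY_RUN=0.
ZK_JN_HOSTS="${ZK_JN_HOSTS:-server1 server3 server4}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

bootstrap_ha() {
  local host
  # 1. ZooKeeper ensemble first: the failover controllers depend on it.
  for host in $ZK_JN_HOSTS; do
    run ssh "$host" '~/zookeeper-3.4.9/bin/zkServer.sh start'
  done
  # 2. JournalNodes must be up before the NameNode is formatted.
  for host in $ZK_JN_HOSTS; do
    run ssh "$host" '~/hadoop/bin/hdfs --daemon start journalnode'
  done
  # 3. Format on server2, then copy the metadata to the second NameNode.
  run ssh server2 '~/hadoop/bin/hdfs namenode -format'
  run ssh server2 'scp -r /tmp/hadoop-hadoop 172.25.11.5:/tmp'
  # 4. Create the HA state in ZooKeeper, then start HDFS.
  run ssh server2 '~/hadoop/bin/hdfs zkfc -formatZK'
  run ssh server2 '~/hadoop/sbin/start-dfs.sh'
}

bootstrap_ha
```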

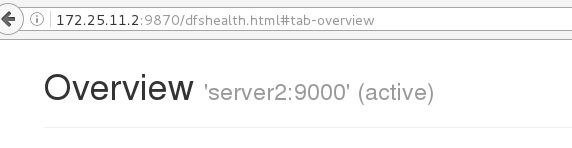

http://172.25.11.2:9870/dfshealth.html#tab-overview

http://172.25.11.5:9870/dfshealth.html#tab-overview

../hadoop/bin/hdfs zkfc

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir -p /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir input

[hadoop@server1 hadoop]$ bin/hdfs dfs -put etc/hadoop/* input

bin/hdfs --daemon start namenode

~/zookeeper-3.4.9/bin/zkCli.sh -server 127.0.0.1:218

[hadoop@server1 zookeeper-3.4.9]$ /home/hadoop/zookeeper-3.4.9/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: follower

[hadoop@server3 ~]$ /home/hadoop/zookeeper-3.4.9/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: follower

[hadoop@server4 ~]$ /home/hadoop/zookeeper-3.4.9/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: leader
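
A healthy three-node ensemble shows exactly one leader and two followers, as in the output above. A small sketch for checking this from captured status output follows; `zk_mode` and `has_single_leader` are illustrative helper names, not part of ZooKeeper.

```shell
#!/usr/bin/env bash
# Extract the "Mode:" value from `zkServer.sh status` output read on stdin.
zk_mode() {
  awk -F': ' '/^Mode:/ { print $2 }'
}

# Succeeds when exactly one of the given mode strings is "leader".
has_single_leader() {
  local leaders=0 mode
  for mode in "$@"; do
    if [ "$mode" = "leader" ]; then
      leaders=$((leaders + 1))
    fi
  done
  [ "$leaders" -eq 1 ]
}

# Demo with the status output recorded on server4.
sample='ZooKeeper JMX enabled by default
Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg
Mode: leader'
printf '%s\n' "$sample" | zk_mode   # prints leader

if has_single_leader follower follower leader; then
  echo "quorum looks healthy"
fi
```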

[hadoop@server4 ~]$ jps

13992 Jps

13498 DataNode

13403 JournalNode

13789 QuorumPeerMain

mapreduce

mapred-site.xml

yarn-site.xml
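
The contents of the two files are not recorded in the notes. A sketch of a typical ResourceManager HA configuration is shown below, written to a scratch directory. The cluster ID, the rm1/rm2 IDs and the choice of 172.25.11.5 as the second ResourceManager are assumptions; the ZooKeeper addresses are those from zoo.cfg.

```shell
#!/usr/bin/env bash
# Sketch: YARN HA configuration; OUT_DIR, RM_CLUSTER, rm1 and rm2 are hypothetical.
OUT_DIR="${OUT_DIR:-./hadoop-conf-demo}"
mkdir -p "$OUT_DIR"

cat > "$OUT_DIR/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Run MapReduce jobs on YARN -->
  <property><name>mapreduce.framework.name</name><value>yarn</value></property>
</configuration>
EOF

cat > "$OUT_DIR/yarn-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value></property>
  <property><name>yarn.resourcemanager.ha.enabled</name><value>true</value></property>
  <property><name>yarn.resourcemanager.cluster-id</name><value>RM_CLUSTER</value></property>
  <property><name>yarn.resourcemanager.ha.rm-ids</name><value>rm1,rm2</value></property>
  <property><name>yarn.resourcemanager.hostname.rm1</name><value>172.25.11.2</value></property>
  <!-- Second ResourceManager host is an assumption -->
  <property><name>yarn.resourcemanager.hostname.rm2</name><value>172.25.11.5</value></property>
  <!-- Keep ResourceManager state in ZooKeeper so the standby can take over -->
  <property><name>yarn.resourcemanager.recovery.enabled</name><value>true</value></property>
  <property><name>yarn.resourcemanager.store.class</name><value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value></property>
  <property><name>hadoop.zk.address</name><value>172.25.11.2:2181,172.25.11.3:2181,172.25.11.4:2181</value></property>
</configuration>
EOF

# List the files that were generated.
ls "$OUT_DIR"/mapred-site.xml "$OUT_DIR"/yarn-site.xml
```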

server2

sbin/start-yarn.sh

Kill the ResourceManager, then start it again to test failover:

bin/yarn --daemon start resourcemanager

[hadoop@server2 hadoop]$ jps

24800 NameNode

25998 Jps

25775 ResourceManager
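
For the kill-and-restart test, the PID can be pulled out of the jps listing rather than read by eye. A minimal sketch, using the listing above as sample input (`pid_of_daemon` is an illustrative helper name):

```shell
#!/usr/bin/env bash
# Print the PID of a daemon from jps-style output ("<pid> <name>") on stdin.
pid_of_daemon() {
  # $1 = daemon name exactly as jps prints it
  awk -v name="$1" '$2 == name { print $1 }'
}

# Sample taken from the jps output on server2.
sample_jps='24800 NameNode
25998 Jps
25775 ResourceManager'

printf '%s\n' "$sample_jps" | pid_of_daemon ResourceManager   # prints 25775
```

On a live node this would be used as `kill "$(jps | pid_of_daemon ResourceManager)"`, followed by `bin/yarn --daemon start resourcemanager` to bring the daemon back.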

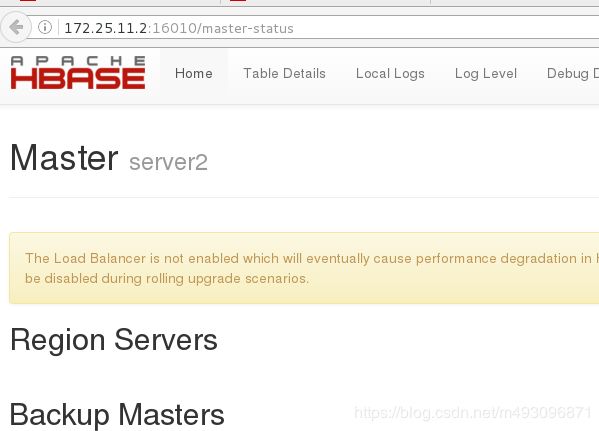

HBase distributed deployment

(Hive and HBase are two different things.)

[hadoop@server2 ~]$ tar zxf hbase-1.2.4-bin.tar.gz

[hadoop@server2 hbase]$ vim conf/hbase-env.sh

export JAVA_HOME=/home/hadoop/java/

export HADOOP_HOME=/home/hadoop/hadoop

export HBASE_MANAGES_ZK=false

ZooKeeper was already set up by hand above, so HBase's bundled ZooKeeper is switched off here.

vim conf/hbase-site.xml
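
The notes do not show what goes into hbase-site.xml. For a distributed deployment on an HA HDFS with an external ZooKeeper, a minimal version might look like the sketch below. The nameservice "masters" in hbase.rootdir and OUT_DIR are hypothetical, and the quorum hosts are those from zoo.cfg.

```shell
#!/usr/bin/env bash
# Sketch: minimal hbase-site.xml written to a scratch directory.
OUT_DIR="${OUT_DIR:-./hbase-conf-demo}"
mkdir -p "$OUT_DIR"

cat > "$OUT_DIR/hbase-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Store HBase data on the HA nameservice ("masters" is an assumed id) -->
  <property><name>hbase.rootdir</name><value>hdfs://masters/hbase</value></property>
  <!-- Run masters and region servers as separate processes on separate hosts -->
  <property><name>hbase.cluster.distributed</name><value>true</value></property>
  <!-- External ZooKeeper ensemble, since HBASE_MANAGES_ZK=false -->
  <property><name>hbase.zookeeper.quorum</name><value>172.25.11.2,172.25.11.3,172.25.11.4</value></property>
</configuration>
EOF

# Count the properties that were written.
grep -c '<property>' "$OUT_DIR/hbase-site.xml"   # prints 3
```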

[hadoop@server2 hbase]$ vim conf/regionservers

172.25.11.1

172.25.11.3

172.25.11.4

server2: master node

bin/start-hbase.sh

server5

bin/hbase-daemon.sh start master

Access the HBase web UI

172.25.11.2:16010

http://172.25.11.5:16010/master-status

http://172.25.11.2:16010/master-status

Insert data

bin/hbase shell

create "test","cf"

list 'test'
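
The notes create the table but stop before any rows are written. A sketch of the next step follows: it puts a few HBase shell commands into a script file and runs it non-interactively if the launcher is present. The row keys, column qualifiers and values are made up for illustration, and SCRIPT is a hypothetical path.

```shell
#!/usr/bin/env bash
# Sketch: write sample HBase shell commands to a file and run them if possible.
SCRIPT="${SCRIPT:-./hbase-demo.txt}"

cat > "$SCRIPT" <<'EOF'
put 'test', 'row1', 'cf:a', 'value1'
put 'test', 'row2', 'cf:b', 'value2'
scan 'test'
get 'test', 'row1'
exit
EOF

# Only invoke HBase when run from an HBase home directory.
if [ -x bin/hbase ]; then
  bin/hbase shell "$SCRIPT"
else
  echo "bin/hbase not found; commands written to $SCRIPT"
fi
```

Run it from the HBase home directory on server2 after the table "test" with column family "cf" has been created.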

bin/hbase-daemon