Andrew Ng Machine Learning ex6: Support Vector Machines

1. Support Vector Machines (SVMs)

This section applies SVMs to two-dimensional data.

1.1 Dataset 1

We use a 2D dataset. In the plot, "+" marks examples with y = 1 and "o" marks examples with y = 0.

Code:

```matlab
>> load('ex6data1.mat');
>> plotData(X, y);
```

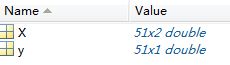

There are m = 51 examples, each with n = 2 features.

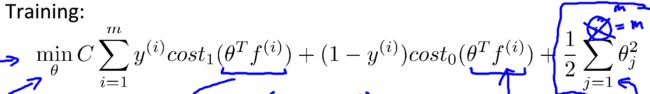

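The SVM cost function is:

$$J(\theta) = C\sum_{i=1}^{m}\left[y^{(i)}\,\mathrm{cost}_1\!\left(\theta^T f^{(i)}\right) + \left(1-y^{(i)}\right)\mathrm{cost}_0\!\left(\theta^T f^{(i)}\right)\right] + \frac{1}{2}\sum_{j=1}^{n}\theta_j^2$$

Here f denotes the features produced by the chosen kernel.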
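To make the objective concrete, here is a small Python sketch that evaluates it for a given θ and set of kernel features. It assumes the common hinge forms cost1(z) = max(0, 1 − z) and cost0(z) = max(0, 1 + z), and that each feature vector starts with the intercept term f0 = 1, which is not regularized. The function names are hypothetical and are not part of the exercise code.

```python
from typing import Sequence


def cost1(z: float) -> float:
    # Penalty for a y = 1 example: zero once theta^T f >= 1 (hinge form, assumed)
    return max(0.0, 1.0 - z)


def cost0(z: float) -> float:
    # Penalty for a y = 0 example: zero once theta^T f <= -1 (hinge form, assumed)
    return max(0.0, 1.0 + z)


def svm_cost(theta: Sequence[float], F: Sequence[Sequence[float]],
             y: Sequence[int], C: float) -> float:
    """Evaluate C * sum(data term) + 0.5 * sum_{j>=1} theta_j^2.

    Each row of F is a feature vector f^(i) whose first entry is f_0 = 1;
    theta_0 is therefore excluded from the regularization term.
    """
    data_term = 0.0
    for f, label in zip(F, y):
        z = sum(t * fi for t, fi in zip(theta, f))  # theta^T f
        data_term += label * cost1(z) + (1 - label) * cost0(z)
    reg_term = 0.5 * sum(t * t for t in theta[1:])
    return C * data_term + reg_term
```

Raising C scales up the data term relative to the θ penalty, so margin violations become more expensive. This is why a large C behaves like a small λ.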

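The parameter C plays a role similar to 1/λ in regularized linear regression:

When C is large (equivalent to a small λ), regularization is weak and the boundary tends to overfit. Training accuracy is very high, and the model has high variance and low bias.

When C is small (equivalent to a large λ), regularization is strong and the boundary tends to underfit. The model has high bias and low variance.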

Train with a linear kernel (i.e., no kernel), first with C = 1 and then with C = 100:

```matlab
>> C = 1;
>> model = svmTrain(X, y, C, @linearKernel, 1e-3, 20);
>> visualizeBoundaryLinear(X, y, model);
>> C = 100;
>> model = svmTrain(X, y, C, @linearKernel, 1e-3, 20);
>> visualizeBoundaryLinear(X, y, model);
```

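With C = 1, the model is in the high-bias, low-variance regime. The outlier in the upper left is misclassified, but the decision boundary leaves a wide gap between the two classes. With C = 100, the model is in the high-variance, low-bias regime. Every training example is classified correctly (100% training accuracy), but the decision boundary runs close to both classes.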

1.2 SVM with Gaussian Kernels

1.2.1 Gaussian Kernel

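The Gaussian kernel measures the similarity between two examples. It maps the distance between them to a value in (0, 1]: the value is 1 when the two points coincide and approaches 0 as they move apart.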

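Assuming each example has n features, the Gaussian kernel between examples $x^{(i)}$ and $x^{(j)}$ is defined as:

$$K_{\mathrm{gaussian}}\left(x^{(i)}, x^{(j)}\right) = \exp\left(-\frac{\left\|x^{(i)}-x^{(j)}\right\|^2}{2\sigma^2}\right) = \exp\left(-\frac{\sum_{k=1}^{n}\left(x_k^{(i)}-x_k^{(j)}\right)^2}{2\sigma^2}\right)$$

The parameter σ determines how quickly the similarity falls toward 0 as the two examples move apart.

The smaller σ is, the faster the similarity decays. The boundary then tends to overfit, with lower bias and higher variance.

The larger σ is, the slower the similarity decays. The boundary then tends to underfit, with lower variance and higher bias.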
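A minimal Python sketch of the formula follows. It is a stand-in for the Octave gaussianKernel, and the name gaussian_kernel is hypothetical. It reproduces the ex6.m test point and shows how, at a fixed distance, a smaller σ drives the similarity toward 0 faster.

```python
import math
from typing import Sequence


def gaussian_kernel(x1: Sequence[float], x2: Sequence[float], sigma: float) -> float:
    """exp(-||x1 - x2||^2 / (2 * sigma^2)); equals 1.0 when x1 == x2."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x1, x2))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


if __name__ == "__main__":
    # Same test point as ex6.m, where the expected value is about 0.324652
    print(round(gaussian_kernel([1, 2, 1], [0, 4, -1], 2.0), 6))
    # Fixed pair of points, varying sigma: similarity grows as sigma grows
    for s in (0.5, 1.0, 2.0, 4.0):
        print(s, gaussian_kernel([0.0, 0.0], [1.0, 1.0], s))
```

For the test point, the squared distance is 1 + 4 + 4 = 9, so the value is exp(−9/8).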

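Complete the function function sim = gaussianKernel(x1, x2, sigma):

```matlab
% x1(:) and x2(:) force column vectors, so row or column inputs both work
sim = exp(-sum((x1(:) - x2(:)).^2) / (2 * sigma^2));
```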

Check the function with the test in ex6.m:

```matlab
x1 = [1 2 1]; x2 = [0 4 -1]; sigma = 2;
sim = gaussianKernel(x1, x2, sigma);
fprintf(['Gaussian Kernel between x1 = [1; 2; 1], x2 = [0; 4; -1], sigma = %f :' ...
         '\n\t%f\n(for sigma = 2, this value should be about 0.324652)\n'], sigma, sim);
```

The output is:

```
Gaussian Kernel between x1 = [1; 2; 1], x2 = [0; 4; -1], sigma = 2.000000 :
0.324652
(for sigma = 2, this value should be about 0.324652)
```

The result matches the expected value, so the Gaussian kernel implementation is correct.

1.2.2 Dataset 2

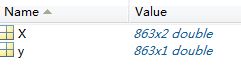

Loading dataset 2 gives m = 863 examples, each with n = 2 features.

A plot of the data:

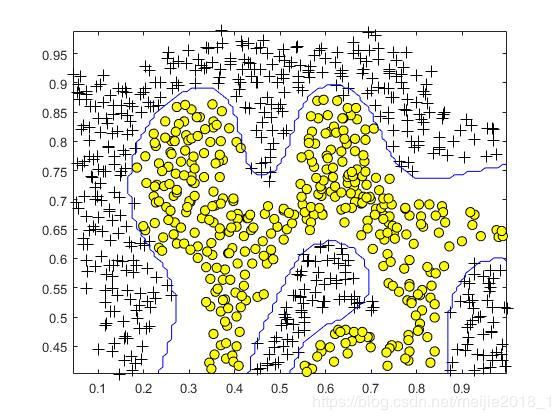

Train an SVM with a Gaussian kernel using C = 1 and sigma = 0.1:

```matlab
C = 1; sigma = 0.1;
model = svmTrain(X, y, C, @(x1, x2) gaussianKernel(x1, x2, sigma));
visualizeBoundary(X, y, model);
```
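A kernel SVM needs the similarity between every pair of training examples. The sketch below builds that m×m kernel (Gram) matrix in plain Python. It illustrates the idea only and makes no claim about how svmTrain.m organizes the computation internally. The helper names are hypothetical.

```python
import math
from typing import List, Sequence


def gaussian_kernel(x1: Sequence[float], x2: Sequence[float], sigma: float) -> float:
    sq_dist = sum((a - b) ** 2 for a, b in zip(x1, x2))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def gram_matrix(X: Sequence[Sequence[float]], sigma: float) -> List[List[float]]:
    """K[i][j] = Gaussian similarity between training examples i and j."""
    m = len(X)
    K = [[0.0] * m for _ in range(m)]
    for i in range(m):
        for j in range(i, m):  # K is symmetric, so fill both halves at once
            k = gaussian_kernel(X[i], X[j], sigma)
            K[i][j] = K[j][i] = k
    return K
```

For dataset 2 (m = 863), this matrix has 863 × 863 ≈ 745,000 entries. This size is one reason Gaussian-kernel training is noticeably slower than the linear case.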

The resulting nonlinear decision boundary is:

1.2.3 Dataset 3

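Load dataset 3, which contains 211 training examples and 200 cross-validation examples, each with 2 features.

A plot of the training examples:

The function [C, sigma] = dataset3Params(X, y, Xval, yval) selects the best C and sigma using the cross-validation set. The completed function is: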

```matlab
function [C, sigma] = dataset3Params(X, y, Xval, yval)
% Grid-search C and sigma; keep the pair with the lowest cross-validation error
para_test = [0.01; 0.03; 0.1; 0.3; 1; 3; 10; 30];
n = numel(para_test);
predictionErr = zeros(n, n);   % row i <-> C = para_test(i), column j <-> sigma = para_test(j)
for i = 1:n
  for j = 1:n
    C = para_test(i);
    sigma = para_test(j);
    model = svmTrain(X, y, C, @(x1, x2) gaussianKernel(x1, x2, sigma));
    predictions = svmPredict(model, Xval);
    predictionErr(i, j) = mean(double(predictions ~= yval));
  end
end
% Find the smallest error and convert its linear index back to (i, j)
[~, I] = min(predictionErr(:));
[i, j] = ind2sub(size(predictionErr), I);
C = para_test(i);
sigma = para_test(j);
end
```
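The same selection logic can be sketched in Python. Iterating over (C, σ) pairs directly avoids converting a linear index back into a pair, and that conversion is easy to get wrong when the best σ is the last grid value. The callback val_error is hypothetical. It stands in for "train with svmTrain, predict on Xval, compute mean(pred ~= yval)".

```python
from itertools import product
from typing import Callable, Optional, Sequence, Tuple


def select_params(values: Sequence[float],
                  val_error: Callable[[float, float], float]) -> Tuple[float, float]:
    """Return the (C, sigma) pair with the lowest validation error."""
    best: Optional[Tuple[float, float, float]] = None
    for C, sigma in product(values, values):
        err = val_error(C, sigma)
        if best is None or err < best[0]:  # strict <, so ties keep the first pair seen
            best = (err, C, sigma)
    assert best is not None, "values must be non-empty"
    return best[1], best[2]
```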

The best parameters found are:


The resulting decision boundary is:

2. Spam Classification

2.1 Email Preprocessing

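The preprocessing and normalization steps are:

- Lower-casing: convert the entire email to lower case.
- Stripping HTML: remove all HTML tags from the email.
- Normalizing URLs: replace every URL with the string "httpaddr".
- Normalizing Email Addresses: replace every email address with the string "emailaddr".
- Normalizing Dollars: replace every dollar sign "$" with the string "dollar".
- Normalizing Numbers: replace every number with the string "number".
- Word Stemming: reduce every word to its stem.
- Removal of non-words: remove non-word characters and punctuation.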
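The steps above can be sketched in Python with the standard re module. This is an approximation and not the exercise's processEmail.m. The regular expressions and the delimiter set are assumptions modeled on the listed steps, and stemming is omitted because Python's standard library has no Porter stemmer. The name preprocess_email is hypothetical.

```python
import re
from typing import List

# Token delimiters: whitespace plus punctuation (an assumed set)
_DELIMS = re.compile(r"[\s@$/#.\-:&*+=\[\]?!(){},'\">_<;%]+")


def preprocess_email(text: str) -> List[str]:
    """Normalize an email and split it into words (no stemming)."""
    text = text.lower()                                         # lower-casing
    text = re.sub(r"<[^<>]+>", " ", text)                       # strip HTML tags
    text = re.sub(r"(http|https)://[^\s]*", "httpaddr", text)   # normalize URLs
    text = re.sub(r"[^\s]+@[^\s]+", "emailaddr", text)          # normalize email addresses
    text = re.sub(r"[$]+", "dollar", text)                      # normalize dollar signs
    text = re.sub(r"[0-9]+", "number", text)                    # normalize numbers
    words = (re.sub(r"[^a-z0-9]", "", t) for t in _DELIMS.split(text))
    return [w for w in words if w]                              # drop empty tokens
```

Because stemming is skipped, "$100" comes out as "dollarnumber" and "EC2" as "ecnumber". The exercise's stemmed output has "dollarnumb" and "ecnumb" instead.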

Take emailSample1.txt as an example. Before processing, the email reads:

```
> Anyone knows how much it costs to host a web portal ?
>
Well, it depends on how many visitors you're expecting.
This can be anywhere from less than 10 bucks a month to a couple of $100.
You should checkout http://www.rackspace.com/ or perhaps Amazon EC2
if youre running something big..
To unsubscribe yourself from this mailing list, send an email to:
[email protected]
```

After processing, it becomes:

```
anyon know how much it cost to host a web portal well it depend on how mani
visitor you re expect thi can be anywher from less than number buck a month
to a coupl of dollarnumb you should checkout httpaddr or perhap amazon ecnumb
if your run someth big to unsubscrib yourself from thi mail list send an
email to emailaddr
```

Take emailSample2.txt as a second example. Before processing, the email reads:

```
Folks,
my first time posting - have a bit of Unix experience, but am new to Linux.
Just got a new PC at home - Dell box with Windows XP. Added a second hard disk
for Linux. Partitioned the disk and have installed Suse 7.2 from CD, which went
fine except it didn't pick up my monitor.
I have a Dell branded E151FPp 15" LCD flat panel monitor and a nVidia GeForce4
Ti4200 video card, both of which are probably too new to feature in Suse's default
set. I downloaded a driver from the nVidia website and installed it using RPM.
Then I ran Sax2 (as was recommended in some postings I found on the net), but
it still doesn't feature my video card in the available list. What next?
Another problem. I have a Dell branded keyboard and if I hit Caps-Lock twice,
the whole machine crashes (in Linux, not Windows) - even the on/off switch is
inactive, leaving me to reach for the power cable instead.
If anyone can help me in any way with these probs., I'd be really grateful -
I've searched the 'net but have run out of ideas.
Or should I be going for a different version of Linux such as RedHat? Opinions
welcome.
Thanks a lot,
Peter
--
Irish Linux Users' Group: [email protected]
http://www.linux.ie/mailman/listinfo/ilug for (un)subscription information.
List maintainer: [email protected]
```

After processing, it becomes:

```
folk my first time post have a bit of unix experi but am new to linux just
got a new pc at home dell box with window xp ad a second hard disk for linux
partit the disk and have instal suse number number from cd which went fine
except it didn t pick up my monitor i have a dell brand enumberfpp number lcd
flat panel monitor and a nvidia geforcenumb tinumb video card both of which
ar probabl too new to featur in suse s default set i download a driver from
the nvidia websit and instal it us rpm then i ran saxnumb as wa recommend in
some post i found on the net but it still doesn t featur my video card in the
avail list what next anoth problem i have a dell brand keyboard and if i hit
cap lock twice the whole machin crash in linux not window even the on off
switch is inact leav me to reach for the power cabl instead if anyon can help
me in ani wai with these prob i d be realli grate i ve search the net but
have run out of idea or should i be go for a differ version of linux such as
redhat opinion welcom thank a lot peter irish linux user group emailaddr
httpaddr for un subscript inform list maintain emailaddr
```

2.1.1 Vocabulary List

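After preprocessing, each email is a list of words. The next step is to choose which of these words the classifier should use.

For this exercise, the vocabulary consists of the words that appear at least 100 times in the spam corpus. There are 1899 such words, and they are stored in vocab.txt. In practice, a vocabulary of about 10,000 to 50,000 words is typical.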

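Complete the function word_indices = processEmail(email_contents) by adding the vocabulary lookup:

```matlab
% Inside processEmail's loop, str holds the current processed (stemmed) word
for i = 1:numel(vocabList)
  if strcmp(str, vocabList{i})
    word_indices = [word_indices; i];  % record the word's vocabulary index (semicolon suppresses printing)
    break;                             % vocabulary entries are unique, so stop at the first match
  end
end
```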
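A dictionary lookup can replace the linear scan over vocabList. Each token is then found in constant average time instead of being compared against up to 1899 entries. The Python sketch below assumes the vocabulary words have already been read into a list in vocab.txt order. The names build_vocab and lookup_indices are hypothetical.

```python
from typing import Dict, Iterable, List


def build_vocab(words: List[str]) -> Dict[str, int]:
    """Map each vocabulary word to its 1-based position, as in vocab.txt."""
    return {w: i for i, w in enumerate(words, start=1)}


def lookup_indices(tokens: Iterable[str], vocab: Dict[str, int]) -> List[int]:
    """Keep only in-vocabulary tokens, in the order they appear in the email."""
    return [vocab[t] for t in tokens if t in vocab]
```

A repeated word produces a repeated index, so the same index can appear several times in word_indices.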

The resulting word_indices (53 entries, listed row by row) are:

```
  86  916  794 1077  883  370 1699  790 1822 1831
 883  431 1171  794 1002 1893 1364  592 1676  238
 162   89  688  945 1663 1120 1062 1699  375 1162
 479 1893 1510  799 1182 1237  810 1895 1440 1547
 181 1699 1758 1896  688 1676  992  961 1477   71
 530 1699  531
```

2.2 Extracting Features from Emails

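Each email is mapped to a feature vector with 1899 entries. The i-th feature is 1 if the email contains the i-th word of the vocabulary list, and 0 otherwise.

```matlab
function x = emailFeatures(word_indices)
n = 1899;                    % number of words in the vocabulary
x = zeros(n, 1);
for i = 1:numel(word_indices)
  x(word_indices(i)) = 1;    % mark each vocabulary word that occurs in the email
end
end
```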
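The same binary bag-of-words mapping in Python, as a sketch. The name email_features is hypothetical. Indices stay 1-based to match vocab.txt and the Octave output, so the code subtracts 1 when indexing the list.

```python
from typing import Iterable, List


def email_features(word_indices: Iterable[int], n: int = 1899) -> List[int]:
    """Binary vector: x[k - 1] = 1 iff vocabulary word k occurs in the email."""
    x = [0] * n
    for k in word_indices:  # indices are 1-based, as in vocab.txt / Octave
        x[k - 1] = 1        # a repeated word still gives 1, not a count
    return x
```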

2.3 Training an SVM for Spam Classification

The training set spamTrain.mat contains 4000 examples, and the test set spamTest.mat contains 1000 examples.

Training with C = 0.1 gives a training accuracy of 99.85%. On the test set, the accuracy is 98.9%.

```matlab
>> load('spamTest.mat');
>> load('spamTrain.mat');
>> C = 0.1;
>> model = svmTrain(X, y, C, @linearKernel);
Training ......................................................................
...............................................................................
...............................................................................
....................... Done!
>> p = svmPredict(model, X);
>> fprintf('Training Accuracy: %f\n', mean(double(p == y)) * 100);
Training Accuracy: 99.850000
>> p = svmPredict(model, Xtest);
>> fprintf('Test Accuracy: %f\n', mean(double(p == ytest)) * 100);
Test Accuracy: 98.900000
```
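With a linear kernel, the trained model reduces to a weight vector w and a bias b. The usual decision rule predicts class 1 when w·x + b ≥ 0. The Python sketch below shows that rule together with the accuracy formula used in the fprintf calls. It assumes w and b have already been learned. The function names are hypothetical and are not the exercise's svmPredict.

```python
from typing import List, Sequence


def predict_linear(w: Sequence[float], b: float,
                   X: Sequence[Sequence[float]]) -> List[int]:
    # Class 1 on the non-negative side of the hyperplane w.x + b = 0
    return [1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else 0 for x in X]


def accuracy(pred: Sequence[int], y: Sequence[int]) -> float:
    # Percentage of matching labels, like mean(double(p == y)) * 100 in Octave
    return 100.0 * sum(p == t for p, t in zip(pred, y)) / len(y)
```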

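Next, find the words with the largest weights in the classifier, i.e., the words most indicative of spam:

```matlab
[weight, idx] = sort(model.w, 'descend');  % sort the weights (and their indices) in descending order
vocabList = getVocabList();                % load the vocabulary
for i = 1:15
  % print the 15 highest-weighted words and their weights
  fprintf(' %-15s (%f) \n', vocabList{idx(i)}, weight(i));
end
```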
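The same ranking step in Python, as a sketch. The name top_words is hypothetical, and indices here are 0-based, whereas Octave's idx is 1-based. The sort is stable, so words with equal weights keep their vocabulary order.

```python
from typing import List, Sequence, Tuple


def top_words(weights: Sequence[float], vocab: Sequence[str],
              k: int = 15) -> List[Tuple[str, float]]:
    """Return the k vocabulary words with the largest weights, largest first."""
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    return [(vocab[i], weights[i]) for i in order[:k]]


if __name__ == "__main__":
    # Toy weights and vocabulary, printed in the same format as the Octave loop
    for word, w in top_words([0.1, 0.5, -0.3, 0.4], ["a", "our", "b", "click"], k=2):
        print(f" {word:<15s} ({w:f})")
```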

The results are:

```
our             (0.501718)
click           (0.465863)
remov           (0.423458)
guarante        (0.388884)
visit           (0.367451)
basenumb        (0.343614)
dollar          (0.328021)
price           (0.266814)
will            (0.265697)
pleas           (0.262256)
lo              (0.258528)
nbsp            (0.256080)
most            (0.254478)
ga              (0.240055)
da              (0.238208)
```