[OpenCV 4.0 C++] Using Kinect Fusion

Complete engineering application: 3DFR (stars are appreciated).

Building from source:

Follow the blog post "OpenCV + OpenCV-contrib build steps". In particular, enable OPENCV_ENABLE_NONFREE in CMake; the Kinect Fusion algorithm is only available when this option is set.

Depth data (RGBD-dataset): the dataset used by the code.

When using your own dataset, set up your own cv::kinfu::Params, which holds important parameters such as frameSize. The default parameters are listed below.

KinFu Params (source code):

    Ptr<Params> Params::defaultParams()
    {
        Params p;

        p.frameSize = Size(640, 480);

        float fx, fy, cx, cy;
        fx = fy = 525.f;
        cx = p.frameSize.width/2 - 0.5f;
        cy = p.frameSize.height/2 - 0.5f;
        p.intr = Matx33f(fx,  0, cx,
                          0, fy, cy,
                          0,  0,  1);

        // 5000 for the 16-bit PNG files
        // 1 for the 32-bit float images in the ROS bag files
        p.depthFactor = 5000;

        // sigma_depth is scaled by depthFactor when calling bilateral filter
        p.bilateral_sigma_depth = 0.04f;  //meter
        p.bilateral_sigma_spatial = 4.5; //pixels
        p.bilateral_kernel_size = 7;     //pixels

        p.icpAngleThresh = (float)(30. * CV_PI / 180.); // radians
        p.icpDistThresh = 0.1f; // meters

        p.icpIterations = {10, 5, 4};
        p.pyramidLevels = (int)p.icpIterations.size();

        p.tsdf_min_camera_movement = 0.f; //meters, disabled

        p.volumeDims = Vec3i::all(512); //number of voxels

        float volSize = 3.f;
        p.voxelSize = volSize/512.f; //meters

        // default pose of volume cube
        p.volumePose = Affine3f().translate(Vec3f(-volSize/2.f, -volSize/2.f, 0.5f));
        p.tsdf_trunc_dist = 0.04f; //meters;
        p.tsdf_max_weight = 64;   //frames

        p.raycast_step_factor = 0.25f;  //in voxel sizes
        // gradient delta factor is fixed at 1.0f and is not used
        //p.gradient_delta_factor = 0.5f; //in voxel sizes

        //p.lightPose = p.volume_pose.translation()/4; //meters
        p.lightPose = Vec3f::all(0.f); //meters

        // depth truncation is not used by default
        //p.icp_truncate_depth_dist = 0.f; //meters, disabled

        return makePtr<Params>(p);
    }
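
Several of the defaults above are derived from others: the principal point from frameSize, voxelSize from the volume edge length and voxel count, and metric depth from depthFactor. The following is a minimal sketch of that arithmetic for a custom sensor, written without OpenCV so it stands alone. The names `DerivedParams`, `deriveParams`, and `rawDepthToMeters` are hypothetical helpers for illustration, not part of cv::kinfu, and placing the principal point at the image center is an assumption carried over from the defaults (a calibrated camera would supply its own cx and cy).

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper struct (not an OpenCV type): values one might copy
// into cv::kinfu::Params when adapting the defaults to another sensor.
struct DerivedParams {
    float cx;         // principal point x, pixels
    float cy;         // principal point y, pixels
    float voxelSize;  // edge length of one voxel, meters
};

// Mirrors the arithmetic in defaultParams(): principal point at the image
// center, voxel size = cube edge length / number of voxels per edge.
// Note: width / 2 is integer division, exactly as in the source above.
DerivedParams deriveParams(int width, int height,
                           float volSizeMeters, int voxelsPerEdge) {
    assert(width > 0 && height > 0 && voxelsPerEdge > 0);
    DerivedParams d;
    d.cx = width / 2 - 0.5f;
    d.cy = height / 2 - 0.5f;
    d.voxelSize = volSizeMeters / static_cast<float>(voxelsPerEdge);
    return d;
}

// Converts one raw depth sample to meters: meters = raw / depthFactor.
// With depthFactor = 5000 (16-bit PNG files), a raw value of 5000 is 1 m.
float rawDepthToMeters(std::uint16_t raw, float depthFactor) {
    assert(depthFactor > 0.f);
    return static_cast<float>(raw) / depthFactor;
}
```

For the default configuration, `deriveParams(640, 480, 3.f, 512)` gives cx = 319.5, cy = 239.5, and a voxel size of 3/512 m (about 5.86 mm). Keeping the volume at 3 m while lowering the voxel count makes each voxel coarser, which is worth checking against tsdf_trunc_dist before changing volumeDims.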

The code below is a trimmed-down version with the camera-related parts removed, since they are rarely needed.

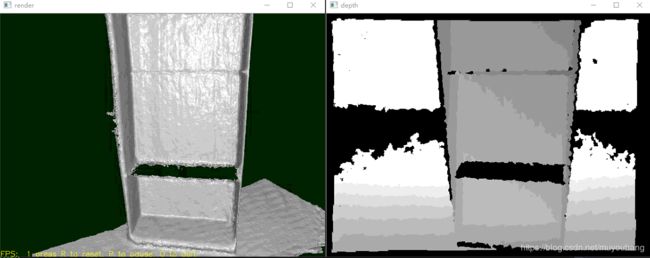

Kinect Fusion usage sample (demo code):

#include