A Detailed Walkthrough of Using the Jcseg Tokenizer

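1. Our previous projects all used the IK analyzer, but on a recent project the lead pointed out that IK apparently had not been updated for a long time and its version was too dated, so we switched to the newer Jcseg tokenizer. I looked up some material online and learned how to use it.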

2. http://www.docin.com/p-782941386.html is a developer guide for the Jcseg Chinese tokenizer that I found online.

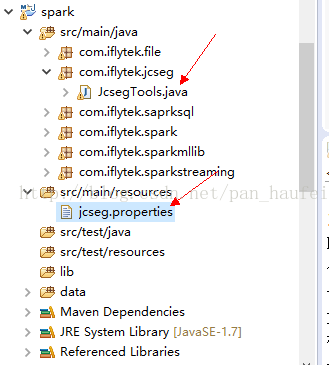

3. Implementation

Add the Jcseg dependency (jar) to the pom file:

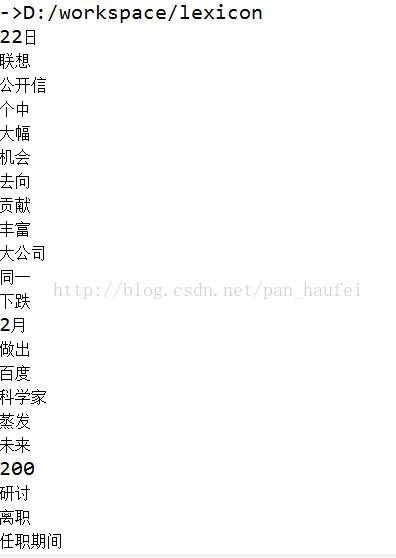

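jcseg.properties: pay attention to the lexicon directory (lexicon.path). If you do not have the lexicon files, you can download them from the official site.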

    # jcseg properties file.
    # bug report chenxin

    # Jcseg function
    # Maximum match length. (5-7)
    jcseg.maxlen=5
    # Whether to recognize Chinese names. (1 to open and 0 to close it)
    jcseg.icnname=1
    # Maximum number of Chinese characters in an English-Chinese mixed word.
    jcseg.mixcnlen=2
    # Maximum length for pair punctuation text.
    jcseg.pptmaxlen=15
    # Maximum length for chinese last name andron.
    jcseg.cnmaxlnadron=1
    # Whether to clear the stopwords. (set 1 to clear stopwords and 0 to close it)
    jcseg.clearstopword=0
    # Whether to convert Chinese numerals to Arabic numbers. (set 1 to open it and 0 to close it)
    # like '\u4E09\u4E07' to 30000.
    jcseg.cnnumtoarabic=1
    # Whether to convert Chinese fractions to Arabic fractions.
    jcseg.cnfratoarabic=1
    # Whether to keep unrecognized words. (set 1 to keep unrecognized words and 0 to clear them)
    jcseg.keepunregword=1
    # Whether to start the secondary segmentation for complex English words.
    jcseg.ensencondseg = 1
    # Minimum length of the secondary simple token. (better larger than 1)
    jcseg.stokenminlen = 2
    # Threshold for Chinese name recognition.
    # Better not change it unless you know what you are doing.
    jcseg.nsthreshold=1000000
    # The punctuation marks that will be kept in a token. (Not at the end of the token.)
    jcseg.keeppunctuations=@%.&+

    #### about the lexicon
    # Prefix of lexicon file.
    lexicon.prefix=lex
    # Suffix of lexicon file.
    lexicon.suffix=lex
    # Absolute path of the lexicon files.
    # Multiple paths are supported from jcseg 1.9.2; use ';' to separate different paths.
    # example: lexicon.path = /home/chenxin/lex1;/home/chenxin/lex2 (Linux)
    #        : lexicon.path = D:/jcseg/lexicon/1;D:/jcseg/lexicon/2 (WinNT)
    #lexicon.path=C:/Users/admin/Downloads/jcseg-1.9.2/lexicon
    lexicon.path=D:/workspace/lexicon
    # Whether to automatically load modified lexicon files.
    lexicon.autoload=0
    # Poll time for auto load. (seconds)
    lexicon.polltime=120

    #### lexicon load
    # Whether to load the part of speech of the entry.
    jcseg.loadpos=1
    # Whether to load the pinyin of the entry.
    jcseg.loadpinyin=0
    # Whether to load the synonyms of the entry.
    jcseg.loadsyn=1
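
Since a wrong lexicon.path is the most likely thing to go wrong here, it can help to check the directories before starting the tokenizer. The following is only a minimal sketch using the standard java.util.Properties class, not Jcseg's own loading code: the class name `LexiconPathCheck` and the method `lexiconPaths` are made up for illustration, and the sample paths are the ones from the comment in the file above.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical helper (not part of Jcseg): lists the lexicon.path entries.
class LexiconPathCheck {

    // Parse properties text and split lexicon.path on ';' into separate entries.
    static List<String> lexiconPaths(Reader source) throws IOException {
        Properties props = new Properties();
        props.load(source);
        String raw = props.getProperty("lexicon.path", "");
        List<String> paths = new ArrayList<>();
        for (String part : raw.split(";")) {
            String trimmed = part.trim();
            if (!trimmed.isEmpty()) {
                paths.add(trimmed);
            }
        }
        return paths;
    }

    public static void main(String[] args) throws IOException {
        // Sample line copied from the comment in jcseg.properties.
        String sample = "lexicon.path = D:/jcseg/lexicon/1;D:/jcseg/lexicon/2\n";
        for (String p : lexiconPaths(new StringReader(sample))) {
            // Report whether each configured directory exists on this machine.
            boolean found = Files.isDirectory(Paths.get(p));
            System.out.println(p + (found ? " (found)" : " (missing)"));
        }
    }
}
```

To check a real file, pass a `java.io.FileReader` for your jcseg.properties instead of the `StringReader`. Note that java.util.Properties treats a backslash as an escape character, which is one reason to write Windows paths with forward slashes as in the examples above.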

Code of JcsegTools.java:

    package com.iflytek.jcseg;

    import java.io.IOException;
    import java.io.Serializable;
    import java.io.StringReader;
    import java.util.ArrayList;
    import java.util.HashSet;
    import java.util.List;
    import java.util.Set;

    import org.lionsoul.jcseg.core.ADictionary;
    import org.lionsoul.jcseg.core.DictionaryFactory;
    import org.lionsoul.jcseg.core.ISegment;
    import org.lionsoul.jcseg.core.IWord;
    import org.lionsoul.jcseg.core.JcsegException;
    import org.lionsoul.jcseg.core.JcsegTaskConfig;
    import org.lionsoul.jcseg.core.SegmentFactory;

    public class JcsegTools implements Serializable {

        private static final long serialVersionUID = 1L;

        ISegment seg = null;
        private boolean inited;

        // Build the segmenter once; later calls return immediately.
        synchronized private void init() throws JcsegException {
            if (inited) {
                return;
            }
            // Load the settings from jcseg.properties.
            JcsegTaskConfig config = new JcsegTaskConfig();
            ADictionary dic = DictionaryFactory.createDefaultDictionary(config);
            // Print the lexicon directories that are in use.
            for (String s : config.getLexiconPath()) {
                System.out.println("->" + s);
            }
            seg = SegmentFactory.createJcseg(JcsegTaskConfig.COMPLEX_MODE,
                    new Object[] { config, dic });
            inited = true;
        }

        // The method name is missing from the source text; "segment" is a placeholder.
        public List<String> segment(String fullText) throws IOException,
                JcsegException {
            if (!inited) {
                init();
            }
            IWord word = null;
            seg.reset(new StringReader(fullText));
            List<String> ret = new ArrayList<String>();
            Set<String> ml = new HashSet<String>(); // a set, so duplicate words are dropped
            while ((word = seg.next()) != null) {
                if (word.getLength() < 2) {
                    continue; // skip words shorter than 2 characters
                }
                ml.add(word.getValue());
            }
            ret.addAll(ml);
            return ret;
        }

        public static void main(String[] args) throws JcsegException, IOException {
            String str = "3月22日,百度首席科学家吴恩达在多个社交平台发布公开信,宣布自己将从百度离职,对于未来的去向,他在推特上表示:未来将会推动大公司使用人工智能,并创造丰富的创业机会及进一步的人工智能研究。对于吴恩达的离职,百度官方也给予了祝福,并感谢其在百度任职期间做出的贡献。巧合的是,百度股价曾在2月的同一天大幅下跌,市值蒸发超200亿人民币,联想到今天的离职事件,个中缘由耐人寻味。";
            JcsegTools demo = new JcsegTools();
            try {
                List<String> list = demo.segment(str);
                // Print every extracted word, then the total count.
                for (String word : list) {
                    System.out.println(word);
                }
                System.out.println(list.size());
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }
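
The filtering step in JcsegTools (skip words shorter than 2 characters, collect the rest into a set) can be tried without Jcseg on the classpath. The sketch below is a stand-alone illustration and not the code used in the project: the class `TokenFilter`, the method `filterTokens`, and the hand-written token list are all assumptions made for the example. It uses a LinkedHashSet so that words come out in the order they first appear in the text, whereas a plain HashSet (which the imports above suggest) gives no ordering guarantee.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical stand-alone helper mirroring the filter loop in JcsegTools.
class TokenFilter {

    // Drop tokens shorter than minLength and remove duplicates,
    // keeping the order in which each token was first seen.
    static List<String> filterTokens(List<String> tokens, int minLength) {
        Set<String> unique = new LinkedHashSet<>();
        for (String token : tokens) {
            if (token.length() < minLength) {
                continue; // too short, e.g. single-character particles
            }
            unique.add(token);
        }
        return new ArrayList<>(unique);
    }

    public static void main(String[] args) {
        // Hand-written tokens standing in for segmenter output.
        List<String> tokens = Arrays.asList("百度", "的", "科学家", "百度", "离职");
        System.out.println(filterTokens(tokens, 2));
    }
}
```

If the order of the words does not matter for your use case, the original HashSet approach works just as well.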

Screenshot of the result: