Spring Data Redis: a custom annotation for per-method cache expiry in Spring Boot 2.x

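While looking into Redis caching recently, I found that although Spring lets you set a cache expiry time, the setting is global, which is not flexible enough. So I decided to write a custom annotation that sets the cache expiry time on individual methods. The code is written in Kotlin.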

Custom annotation:

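    import org.springframework.cache.annotation.Cacheable
    import java.lang.annotation.Inherited

    @Target(AnnotationTarget.FUNCTION) // method level
    @Retention(AnnotationRetention.RUNTIME)
    @Inherited // for extensibility
    @Cacheable // meta-annotation
    annotation class RedisCacheable(
        val cacheNames: Array<String> = [], // ends up as "caches" in the Cacheable builder
        // val key: String = "",
        val expireTime: Long = 0 // expiry time in minutes
    )

If you want to extend Spring's @Cacheable and set cacheNames, your custom annotation must declare cacheNames itself. Only then can Spring Boot automatically assign your cache name to caches when it builds the cache operation: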

    2018-11-332 12:43:32.935 [restartedMain] DEBUG o.s.c.a.AnnotationCacheOperationSource - Adding cacheable method 'findByName' with attribute: [Builder[public final spring.data.redis.entity.Person com.sun.proxy.$Proxy105.findByName(java.lang.String)] caches=[nameForPerson] | key='' | keyGenerator='' | cacheManager='' | cacheResolver='' | condition='' | unless='' | sync='false']

Declaring a custom value attribute with @AliasFor("cacheNames") turned out not to work, so skip that pitfall. The other attributes (e.g. key) can be declared directly and are assigned correctly. On this topic, AnnotatedElementUtils.findAllMergedAnnotations() is worth reading up on.
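To make the meta-annotation idea concrete, here is a minimal sketch using only JVM reflection, not Spring's own lookup. `BaseCacheable`, `TimedCacheable` and `PersonLookup` are hypothetical stand-ins invented for this illustration; Spring's merged-annotation machinery does considerably more (attribute overrides, aliases, deeper hierarchies).

```kotlin
import java.lang.reflect.Method

// Hypothetical stand-in for Spring's @Cacheable (NOT the real annotation).
@Target(AnnotationTarget.ANNOTATION_CLASS, AnnotationTarget.FUNCTION)
@Retention(AnnotationRetention.RUNTIME)
annotation class BaseCacheable

// Hypothetical custom annotation that carries the stand-in as a meta-annotation.
@Target(AnnotationTarget.FUNCTION)
@Retention(AnnotationRetention.RUNTIME)
@BaseCacheable
annotation class TimedCacheable(
    val cacheNames: Array<String> = [],
    val expireTime: Long = 0
)

class PersonLookup {
    @TimedCacheable(cacheNames = ["nameForPerson"], expireTime = 1)
    fun findByName(name: String): String = name
}

// True when any annotation on the method is itself annotated with @BaseCacheable.
fun isMetaAnnotated(method: Method): Boolean =
    method.annotations.any {
        it.annotationClass.java.isAnnotationPresent(BaseCacheable::class.java)
    }

fun main() {
    val method = PersonLookup::class.java.getMethod("findByName", String::class.java)
    println(isMetaAnnotated(method))
    println(method.getAnnotation(TimedCacheable::class.java).cacheNames.toList())
}
```

The point of the sketch is only that the framework can reach the base annotation by walking from the method's annotations to the annotations on those annotation types.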

Using the annotation:

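    interface PersonRedisCacheRepository : BaseRedisCacheRepository {
        @RedisCacheable(cacheNames = ["nameForPerson"], expireTime = 1)
        fun findByName(name: String): Person?
    }

One thing to note here: the custom @RedisCacheable above does not specify a key. By default, Spring's @Cacheable takes the method's parameter values and combines them with cacheNames to form the key in Redis. The example above is therefore very likely to produce duplicate keys.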

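Solutions: 1. use a parameter that uniquely identifies the entity (its ID); 2. use a key generation strategy; 3. use a Redis list if your use case calls for it.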
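The collision and the first two solutions can be sketched in plain Kotlin. This assumes the default Spring Data Redis key layout of `<cacheName>::<key>`; the helper names `redisKey` and `qualifiedKey` are hypothetical and exist only for this illustration.

```kotlin
// Assumption: keys are laid out as "<cacheName>::<key>" (the default prefix format).
fun redisKey(cacheName: String, key: Any): String = cacheName + "::" + key

// Hypothetical key strategy: qualify the key with the class and method
// so that different methods sharing a cache name cannot collide.
fun qualifiedKey(className: String, methodName: String, vararg params: Any?): String =
    className + "." + methodName + "(" + params.joinToString(",") + ")"

fun main() {
    // Two different people who share a name map to the same Redis key.
    println(redisKey("nameForPerson", "asd") == redisKey("nameForPerson", "asd"))

    // Solution 1: key on something unique, such as the ID.
    println(redisKey("nameForPerson", "id-1") == redisKey("nameForPerson", "id-2"))

    // Solution 2: a generated key that includes the method identity.
    println(redisKey("nameForPerson", qualifiedKey("PersonRepo", "findByName", "asd")))
}
```

In a real application the second approach would typically be wired in through Spring's key generator hook rather than called by hand.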

Entity class used for testing:

    package spring.data.redis.entity

    import org.springframework.data.annotation.Id
    import java.io.Serializable
    import javax.persistence.Entity
    import javax.persistence.EnumType
    import javax.persistence.Enumerated
    import javax.persistence.Table

    // Gender is an enum that is not shown in this post.
    @Table(name = "kotlin_person")
    @Entity
    data class Person(
        @get:Id
        @javax.persistence.Id
        open var id: String = "",
        open var name: String = "",
        open var age: Int = 0,
        @get:Enumerated(EnumType.STRING)
        open var sex: Gender = Gender.MALE
    ) : Serializable {
        override fun toString(): String {
            return "Person(id='$id', name='$name', age=$age, sex=$sex)"
        }
    }

Annotation processor:

    package spring.data.redis.annotation

    import org.slf4j.LoggerFactory
    import org.springframework.beans.BeansException
    import org.springframework.beans.factory.InitializingBean
    import org.springframework.cache.annotation.CacheConfig
    import org.springframework.context.ApplicationContext
    import org.springframework.context.ApplicationContextAware
    import org.springframework.core.annotation.AnnotationUtils
    import org.springframework.data.redis.cache.RedisCacheConfiguration
    import org.springframework.data.redis.cache.RedisCacheManager
    import org.springframework.data.redis.cache.RedisCacheWriter
    import org.springframework.data.redis.serializer.GenericJackson2JsonRedisSerializer
    import org.springframework.data.redis.serializer.RedisSerializationContext
    import org.springframework.data.redis.serializer.StringRedisSerializer
    import org.springframework.util.ReflectionUtils
    import java.lang.reflect.Method
    import java.time.Duration

    class AnnotationProcessor(
        cacheWriter: RedisCacheWriter,
        defaultCacheConfiguration: RedisCacheConfiguration
    ) : RedisCacheManager(cacheWriter, defaultCacheConfiguration), ApplicationContextAware, InitializingBean {

        private val log = LoggerFactory.getLogger(AnnotationProcessor::class.java)

        private var applicationContext: ApplicationContext? = null

        // cache name -> configuration carrying that cache's expiry time
        private val initialCacheConfiguration = LinkedHashMap<String, RedisCacheConfiguration>()

        @Throws(BeansException::class)
        override fun setApplicationContext(applicationContext: ApplicationContext) {
            this.applicationContext = applicationContext
        }

        override fun afterPropertiesSet() {
            // Collect the per-cache configurations before the parent initializes the caches.
            parseCacheDuration(this.applicationContext)
            super.afterPropertiesSet()
        }

        // Create one cache per collected configuration.
        override fun loadCaches() =
            initialCacheConfiguration.map { createRedisCache(it.key, it.value) }.toMutableList()

        private fun parseCacheDuration(applicationContext: ApplicationContext?) {
            val context = applicationContext ?: return
            context.getBeanNamesForType(Any::class.java).forEach { beanName ->
                // getType returns null when the bean's type cannot be determined.
                val clazz = context.getType(beanName) ?: return@forEach
                addRedisCacheExpire(clazz)
            }
        }

        private fun addRedisCacheExpire(clazz: Class<*>) {
            ReflectionUtils.doWithMethods(clazz, { method ->
                ReflectionUtils.makeAccessible(method)
                val redisCache = findRedisCache(method)
                if (redisCache != null) {
                    val cacheConfig = AnnotationUtils.findAnnotation(clazz, CacheConfig::class.java)
                    findCacheNames(cacheConfig, redisCache).forEach { cacheName ->
                        val config = RedisCacheConfiguration.defaultCacheConfig()
                            .entryTtl(Duration.ofMinutes(redisCache.expireTime)) // expiry in minutes; 0 means no expiry
                            .disableCachingNullValues() // do not cache null values
                            .serializeKeysWith(RedisSerializationContext.SerializationPair.fromSerializer(StringRedisSerializer()))
                            .serializeValuesWith(RedisSerializationContext.SerializationPair.fromSerializer(GenericJackson2JsonRedisSerializer())) // human-readable JSON
                        log.debug("Cache '{}' expires after {} min", cacheName, redisCache.expireTime)
                        initialCacheConfiguration[cacheName] = config
                    }
                }
            }, { method ->
                findRedisCache(method) != null // only visit methods annotated with @RedisCacheable
            })
        }

        private fun findRedisCache(method: Method): RedisCacheable? =
            AnnotationUtils.findAnnotation(method, RedisCacheable::class.java)

        // Fall back to the class-level @CacheConfig names when the annotation declares none.
        private fun findCacheNames(cacheConfig: CacheConfig?, cache: RedisCacheable?): Array<String> =
            if (cache == null || cache.cacheNames.isEmpty()) cacheConfig?.cacheNames ?: emptyArray()
            else cache.cacheNames
    }
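The core of the processor, scanning methods for the annotation and collecting one expiry time per cache name, can be tried out without Spring. The sketch below uses plain JVM reflection; `ExpiringCacheable`, `DemoRepository` and `collectTtls` are hypothetical names for this illustration and are not part of the code above.

```kotlin
import java.time.Duration

// Hypothetical annotation mirroring the shape of @RedisCacheable.
@Target(AnnotationTarget.FUNCTION)
@Retention(AnnotationRetention.RUNTIME)
annotation class ExpiringCacheable(
    val cacheNames: Array<String> = [],
    val expireTime: Long = 0 // minutes
)

interface DemoRepository {
    @ExpiringCacheable(cacheNames = ["nameForPerson"], expireTime = 1)
    fun findByName(name: String): String?

    @ExpiringCacheable(cacheNames = ["ageForPerson", "agesIndex"], expireTime = 30)
    fun findByAge(age: Int): String?

    fun count(): Long // not annotated, so it contributes nothing
}

// Walk the declared methods and map every cache name to its expiry time.
fun collectTtls(type: Class<*>): Map<String, Duration> {
    val ttls = LinkedHashMap<String, Duration>()
    for (method in type.declaredMethods) {
        val annotation = method.getAnnotation(ExpiringCacheable::class.java) ?: continue
        for (name in annotation.cacheNames) {
            ttls[name] = Duration.ofMinutes(annotation.expireTime)
        }
    }
    return ttls
}

fun main() {
    collectTtls(DemoRepository::class.java).forEach { (name, ttl) -> println(name + " -> " + ttl) }
}
```

In the real processor each collected duration is then turned into a `RedisCacheConfiguration` through `entryTtl`, which is what gives every cache its own expiry.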

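If you are working in Java, skip this part. With a Kotlin data class, deserialization throws "LinkedHashMap cannot be cast to Person". This happens because, when Jackson deserializes the Kotlin object, the target type is Any::class.java, which it cannot turn back into a Person. To fix this, add the following to build.gradle: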

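    allOpen {
        annotation("javax.persistence.Entity")
        annotation("javax.persistence.MappedSuperclass")
        annotation("javax.persistence.Embeddable")
    }

Note that the entity class has to import these annotations individually; a wildcard import of javax.persistence.* cannot be used instead.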

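If you get a "Person cannot be cast to Person" exception, you have most likely enabled hot reloading: compile("org.springframework.boot:spring-boot-devtools")

There are several ways to fix this. Here is a fairly simple one: configure the bean in your application class as follows: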

    fun cacheManager() = AnnotationProcessor(
        RedisCacheWriter.nonLockingRedisCacheWriter(redisConnectionFactory),
        RedisCacheConfiguration.defaultCacheConfig(Thread.currentThread().contextClassLoader)
    )

The exception occurs because the JVM's class loader and the class loader Spring uses are not the same one.

Application class:

    @Bean
    fun cacheManager() = AnnotationProcessor(
        RedisCacheWriter.nonLockingRedisCacheWriter(redisConnectionFactory),
        RedisCacheConfiguration.defaultCacheConfig()
    )

Remember to enable Spring caching with @EnableCaching(proxyTargetClass = true).

Controller:

    @RequestMapping("person/cache/byName")
    fun getCacheByName() = personRedisCacheRepository.findByName("asd")

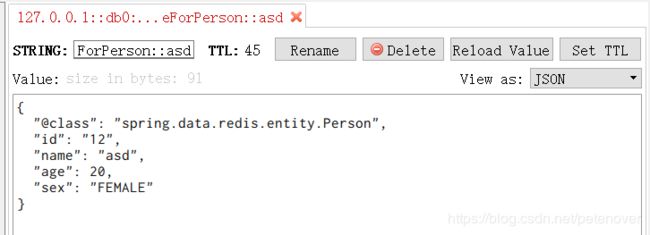

Start Spring Boot and open: http://localhost:8080/person/cache/byName

Log output:

    2018-11-332 13:50:19.498 [lettuce-nioEventLoop-4-1] DEBUG i.l.core.protocol.RedisStateMachine - Decode AsyncCommand [type=GET, output=ValueOutput [output=null, error='null'], commandType=io.lettuce.core.protocol.Command]
    2018-11-332 13:50:19.499 [lettuce-nioEventLoop-4-1] DEBUG i.l.core.protocol.RedisStateMachine - Decoded AsyncCommand [type=GET, output=ValueOutput [output=null, error='null'], commandType=io.lettuce.core.protocol.Command], empty stack: true
    Hibernate: select person0_.id as id1_2_, person0_.age as age2_2_, person0_.name as name3_2_, person0_.sex as sex4_2_ from kotlin_person person0_ where person0_.name=?
    2018-11-332 13:50:19.725 [http-nio-8080-exec-1] DEBUG io.lettuce.core.RedisChannelHandler - dispatching command AsyncCommand [type=SET, output=StatusOutput [output=null, error='null'], commandType=io.lettuce.core.protocol.Command]
    2018-11-332 13:50:19.725 [http-nio-8080-exec-1] DEBUG i.l.core.protocol.DefaultEndpoint - [channel=0x63db3443, /127.0.0.1:53613 -> /127.0.0.1:6379, epid=0x1] write() writeAndFlush command AsyncCommand [type=SET, output=StatusOutput [output=null, error='null'], commandType=io.lettuce.core.protocol.Command]
    2018-11-332 13:50:19.725 [http-nio-8080-exec-1] DEBUG i.l.core.protocol.DefaultEndpoint - [channel=0x63db3443, /127.0.0.1:53613 -> /127.0.0.1:6379, epid=0x1] write() done

The first query goes straight to the database and the result is cached in Redis; the second query reads the data directly from Redis.
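The read path described here (miss, load from the database, store with an expiry time, then hit) can be sketched with a small in-memory stand-in. `TtlCache` is a hypothetical class for illustration only; it is not how Spring or Redis implement caching, and the clock is injectable purely so that expiry can be demonstrated without waiting.

```kotlin
import java.time.Duration

// Hypothetical in-memory stand-in for a cache whose entries expire after a fixed time.
class TtlCache<K, V>(
    private val ttl: Duration,
    private val now: () -> Long = System::currentTimeMillis // injectable clock (ms)
) {
    private class Entry<T>(val value: T, val expiresAt: Long)

    private val entries = HashMap<K, Entry<V>>()

    // Return the cached value while it is fresh; otherwise call the loader and store the result.
    fun getOrLoad(key: K, loader: (K) -> V): V {
        val cached = entries[key]
        if (cached != null && now() < cached.expiresAt) return cached.value
        val loaded = loader(key)
        entries[key] = Entry(loaded, now() + ttl.toMillis())
        return loaded
    }
}

fun main() {
    var databaseQueries = 0
    val cache = TtlCache<String, String>(Duration.ofMinutes(1))
    val findByName = { name: String -> databaseQueries++; "Person(name=" + name + ")" }

    cache.getOrLoad("asd", findByName) // first call: misses and queries the "database"
    cache.getOrLoad("asd", findByName) // second call: served from the cache
    println(databaseQueries)
}
```

Once the expiry time has passed, the next call behaves like the first one again and reloads the value.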

Visualization:

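If we do not use the allOpen plugin, "@class" is not stored in Redis, and Spring's deserializer then fails with a conversion error. One more remark: in Kotlin all functions and classes are final by default, whereas the Spring libraries need classes to be public/open, so you see.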
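The "final by default" point is easy to verify with plain reflection. In the sketch below, `FinalByDefault` and `ExplicitlyOpen` are hypothetical classes; the second one is written with `open` by hand, which is roughly the effect the allOpen plugin has on the annotated entity classes. My understanding, stated as an assumption, is that in the versions this post targets the JSON serializer only writes type information for non-final types, which would explain the missing "@class".

```kotlin
import java.lang.reflect.Modifier

// A regular Kotlin class: final unless declared otherwise.
class FinalByDefault(val name: String = "")

// Declared open by hand, similar to what allOpen does for annotated classes.
open class ExplicitlyOpen(val name: String = "")

fun isFinal(type: Class<*>): Boolean = Modifier.isFinal(type.modifiers)

fun main() {
    println(isFinal(FinalByDefault::class.java))
    println(isFinal(ExplicitlyOpen::class.java))
}
```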

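Addendum: some readers asked why I only wrote a function-level annotation and not a class-level one. In an earlier article I used the @RedisHash annotation, which already lets you set the cache name (value) and the expiry time (timeToLive), so there is no point in duplicating that work.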