Facial keypoint detection with Keras

Reposted from: http://www.cnblogs.com/ansang/p/8583122.html

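The previous version of this code could only load and train on small datasets. With a large dataset it ran short of memory, so I reworked it following the official Keras documentation to feed the data through a generator.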
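Here is a rough estimate of the memory involved. The sketch below computes how much RAM the whole training set would need if it were loaded at once as `float32`. It assumes 40,000 RGB images of 178×178, which are the sample count and image size used later in this post. A generator only has to hold one batch in memory at a time.

```python
# Rough memory estimate for loading the entire training set at once.
# Assumptions: 40,000 RGB images, 178x178 pixels, stored as float32 (4 bytes).
num_images = 40000
height, width, channels = 178, 178, 3
bytes_per_value = 4  # float32

bytes_per_image = height * width * channels * bytes_per_value
total_bytes = num_images * bytes_per_image
batch_bytes = 32 * bytes_per_image  # one batch of 32 images

print(f"per image: {bytes_per_image} bytes")
print(f"full set:  {total_bytes / 1e9:.1f} GB")
print(f"one batch: {batch_bytes / 1e6:.1f} MB")
```

That comes to about 15 GB for the full set versus about 12 MB per batch, before counting labels or framework overhead.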


Dataset: https://pan.baidu.com/s/1cnAxJJmN9nQUVYj8w0WocA

Step 1: Install the required libraries

- tensorflow 1.4.0

- h5py 2.7.0

- hdf5 1.8.15.1

- Keras 2.0.8

- opencv-python 3.3.0

- numpy 1.13.3+mkl

Step 2: Prepare the dataset

I cropped every image to a 178*178 square and refined the original labels accordingly.
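The post does not show the cropping code. Here is a hedged sketch of one way to do it. The leftover comment `#218*178*3` in the full code suggests the original images were 218 pixels tall and 178 wide. If so, a square crop removes rows, and every keypoint's y coordinate must shift by the same number of rows. The helper name `crop_to_square` and the choice of a center crop are assumptions, not the author's method.

```python
import numpy as np

def crop_to_square(image, keypoints, size=178):
    """Center-crop an H x W x C image to size x size and shift keypoints to match.

    Hypothetical helper (not from the original post).
    keypoints: flat sequence [x1, y1, x2, y2, ...] in original pixel coordinates.
    """
    h, w = image.shape[:2]
    top = (h - size) // 2
    left = (w - size) // 2
    cropped = image[top:top + size, left:left + size]
    pts = np.array(keypoints, dtype=np.float32).reshape(-1, 2)  # copy, caller's data untouched
    pts[:, 0] -= left  # x shifts by the columns removed on the left
    pts[:, 1] -= top   # y shifts by the rows removed at the top
    return cropped, pts.reshape(-1)

# Example: a blank 218 x 178 image with one keypoint at (89, 120)
img = np.zeros((218, 178, 3), dtype=np.uint8)
cropped, pts = crop_to_square(img, [89, 120])
print(cropped.shape, pts.tolist())  # (178, 178, 3) [89.0, 100.0]
```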

Step 3: Convert the images and labels to NumPy arrays

Parameters:

```python
trainpath = 'E:/pycode/facial-keypoints-master/data/50000train/'
testpath = 'E:/pycode/facial-keypoints-master/data/50000test/'
imgsize = 178          # images are cropped to 178 x 178
train_samples = 40000  # number of training images
test_samples = 200     # number of validation images
batch_size = 32
```
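These parameters set how many generator steps Keras runs per epoch. The integer-division arithmetic is shown below, with variable names mirroring the parameters above. Because 200 is not a multiple of 32, a few validation images are skipped each epoch. These counts only match the sample totals if every generator step yields a full batch of 32. A generator that yields one image per step would see only 1,250 images per epoch.

```python
train_samples = 40000
test_samples = 200
batch_size = 32

steps_per_epoch = train_samples // batch_size   # batches drawn from the training generator per epoch
validation_steps = test_samples // batch_size   # batches drawn from the validation generator
unused_val = test_samples - validation_steps * batch_size

print(steps_per_epoch, validation_steps, unused_val)  # 1250 6 8
```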


```python
import numpy as np
from keras.preprocessing.image import load_img, img_to_array

def __data_label__(path):
    # Each line of lable-40.txt: "<image name>,x1,y1,...,x5,y5" (10 coordinates)
    with open(path + "lable-40.txt", "r") as f:
        lines = [line.strip() for line in f if line.strip()]
    # Keras expects the generator to loop forever, so iterate over the cached lines repeatedly.
    # (Calling f.readlines() again inside `while True` returns [] after the first pass.)
    while True:
        for start in range(0, len(lines) - batch_size + 1, batch_size):
            images, labels = [], []
            for line in lines[start:start + batch_size]:
                b = line.split(",")
                image = img_to_array(load_img(path + b[0])).astype('float32')
                # Optional image normalization (training also works without it)
                # image /= 255.0
                images.append(image)
                # Labels are read as strings; convert them to float for the MSE loss.
                # Optional label normalization: divide the coordinates by imgsize (not 255).
                labels.append([float(v) for v in b[1:]])
            # A generator: yields one batch at a time, shapes (batch_size, 178, 178, 3) and (batch_size, 10)
            yield np.array(images), np.array(labels, dtype='float32')
```
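Two details are easy to get wrong when writing this kind of generator. The short demonstration below reproduces both with an in-memory file: `io.StringIO` stands in for `lable-40.txt`, and the label values are made up. First, `readlines()` returns an empty list once the file has been read. A `while True` loop that keeps calling it therefore never yields again after the first pass. Second, `np.array` on the split fields produces a string array, which must be converted to float before it can serve as a regression target.

```python
import io
import numpy as np

# Stand-in for lable-40.txt: "<image name>,x1,y1,...,x5,y5" (values are invented)
f = io.StringIO("000001.jpg,69,109,106,113,77,142,73,152,108,154\n")

first_pass = f.readlines()
second_pass = f.readlines()   # the file pointer is already at EOF
print(len(first_pass), len(second_pass))  # 1 0

fields = first_pass[0].strip().split(",")
raw = np.array(fields[1:])                     # dtype is a Unicode string type
label = raw.astype(np.float32).reshape(1, 10)  # numeric (1, 10) target
print(raw.dtype.kind, label.dtype, label.shape)  # U float32 (1, 10)
```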

Step 4: Build the network

A very simple network is used here:


```python
def __CNN__(self):
    model = Sequential()  # input: 178*178*3
    model.add(Conv2D(32, (3, 3), input_shape=(imgsize, imgsize, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))

    model.add(Conv2D(32, (3, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))

    model.add(Conv2D(64, (3, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))

    model.add(Flatten())
    model.add(Dense(64))
    model.add(Activation('relu'))
    model.add(Dropout(0.5))
    model.add(Dense(10))  # 10 outputs: (x, y) for 5 keypoints
    # No softmax on the output, because this is a regression problem
    return model
```
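The output layer is a plain `Dense(10)` with no activation, so the network emits ten unbounded real numbers, one per coordinate. A softmax would force the ten outputs to be positive and sum to 1, which cannot represent pixel coordinates. Training uses mean squared error (`loss='mse'`). For a batch of $N$ images with targets $y$ and predictions $\hat{y}$, Keras' MSE averages the squared error over the ten coordinates and then over the batch:

$$
\mathcal{L} = \frac{1}{N}\sum_{n=1}^{N}\frac{1}{10}\sum_{i=1}^{10}\left(y_{n,i}-\hat{y}_{n,i}\right)^{2}
$$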

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 176, 176, 32)      896
_________________________________________________________________
activation_1 (Activation)    (None, 176, 176, 32)      0
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 88, 88, 32)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 86, 86, 32)        9248
_________________________________________________________________
activation_2 (Activation)    (None, 86, 86, 32)        0
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 43, 43, 32)        0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 41, 41, 64)        18496
_________________________________________________________________
activation_3 (Activation)    (None, 41, 41, 64)        0
_________________________________________________________________
max_pooling2d_3 (MaxPooling2 (None, 20, 20, 64)        0
_________________________________________________________________
flatten_1 (Flatten)          (None, 25600)             0
_________________________________________________________________
dense_1 (Dense)              (None, 64)                1638464
_________________________________________________________________
activation_4 (Activation)    (None, 64)                0
_________________________________________________________________
dropout_1 (Dropout)          (None, 64)                0
_________________________________________________________________
dense_2 (Dense)              (None, 10)                650
=================================================================
Total params: 1,667,754
Trainable params: 1,667,754
Non-trainable params: 0
_________________________________________________________________
```
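The shapes and parameter counts in this summary can be checked by hand. A 3×3 `Conv2D` with no padding shrinks each side by 2 and has $3 \cdot 3 \cdot C_{in} \cdot C_{out} + C_{out}$ parameters. A 2×2 max-pool halves each side, rounding down. A `Dense` layer has $n_{in} \cdot n_{out} + n_{out}$ parameters. The plain-Python sketch below redoes that arithmetic.

```python
def conv3x3(side, c_in, c_out):
    """Valid 3x3 convolution: output side length and parameter count (weights + biases)."""
    return side - 2, 3 * 3 * c_in * c_out + c_out

def pool2x2(side):
    """2x2 max-pooling with stride 2 and 'valid' padding rounds down."""
    return side // 2

side, channels, total = 178, 3, 0
for c_out in (32, 32, 64):
    side, params = conv3x3(side, channels, c_out)
    total += params
    side = pool2x2(side)
    channels = c_out

flat = side * side * channels   # 20 * 20 * 64
total += flat * 64 + 64         # dense_1
total += 64 * 10 + 10           # dense_2 (10 keypoint coordinates)
print(side, flat, total)        # 20 25600 1667754
```

Almost all of the parameters (1,638,464 of 1,667,754) sit in the first `Dense` layer, because it connects to the full 25,600-value flattened feature map.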

Step 5: Train the network


```python
def train(model):
    model.compile(loss='mse', optimizer='adam')
    # optimizer = SGD(lr=0.03, momentum=0.9, nesterov=True)
    # model.compile(loss='mse', optimizer=optimizer)
    epoch_num = 14
    # Linearly decay the learning rate from 0.03 to 0.01 over the epochs
    learning_rate = np.linspace(0.03, 0.01, epoch_num)
    change_lr = LearningRateScheduler(lambda epoch: float(learning_rate[epoch]))
    # Note: with patience=20 and only 14 epochs, early stopping can never trigger
    early_stop = EarlyStopping(monitor='val_loss', patience=20, verbose=1, mode='auto')
    # Save the full model whenever val_loss improves
    check_point = ModelCheckpoint('CNN_model_final.h5', monitor='val_loss', verbose=0,
                                  save_best_only=True, save_weights_only=False,
                                  mode='auto', period=1)

    # Keras 2 takes steps_per_epoch (number of batches), not Keras 1's samples_per_epoch
    model.fit_generator(__data_label__(trainpath),
                        steps_per_epoch=train_samples // batch_size,
                        epochs=epoch_num,
                        callbacks=[check_point, early_stop, change_lr],
                        validation_data=__data_label__(testpath),
                        validation_steps=test_samples // batch_size)

    # model.fit(traindata, trainlabel, batch_size=32, epochs=50,
    #           validation_data=(testdata, testlabel))
    model.evaluate_generator(__data_label__(testpath), steps=test_samples // batch_size)


def save(model, file_path=FILE_PATH):
    print('Model Saved.')
    model.save_weights(file_path)


def predict(model, image):
    # Predict keypoints for one BGR image read with cv2.imread
    image = cv2.resize(image, (imgsize, imgsize))
    # OpenCV loads BGR, while load_img (used in training) loads RGB.
    # astype returns a new array, so the result must be assigned.
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB).astype('float32')
    # Only divide by 255 if the training images were normalized the same way
    # image /= 255.0
    image = np.expand_dims(image, axis=0)  # add the batch dimension: (1, 178, 178, 3)
    # Labels were not normalized, so the output is already in 178x178 pixel coordinates
    result = model.predict(image)
    print(result)
    return result
```
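On normalization: the training generator leaves both images and labels unnormalized, because its normalization lines are commented out. Whatever preprocessing `predict` applies has to match what training used. If you do normalize the keypoints, one natural choice is to divide the coordinates by the image size (178) rather than by 255, since they are pixel positions, not pixel intensities. The network's output then has to be multiplied back by the same factor. Here is a hedged sketch; the helper names are hypothetical.

```python
import numpy as np

IMG_SIZE = 178  # images are cropped to 178 x 178

def normalize_keypoints(coords, size=IMG_SIZE):
    """Map pixel coordinates in [0, size] to [0, 1] (hypothetical helper)."""
    return np.asarray(coords, dtype=np.float32) / size

def denormalize_keypoints(pred, size=IMG_SIZE):
    """Invert normalize_keypoints on a model prediction (hypothetical helper)."""
    return np.asarray(pred, dtype=np.float32) * size

coords = [89, 100, 44.5, 133.5]
restored = denormalize_keypoints(normalize_keypoints(coords))
print(np.allclose(restored, coords))  # True
```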

Training uses `fit_generator` with three callbacks: `LearningRateScheduler` (driven by the `learning_rate` array), `EarlyStopping` and `ModelCheckpoint`.
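The learning-rate schedule is a straight line. `np.linspace(0.03, 0.01, epoch_num)` gives one value per epoch, and `LearningRateScheduler` looks up the value for the current (0-based) epoch index. With `epoch_num = 14`, the rate drops by 0.02 / 13 ≈ 0.00154 each epoch:

```python
import numpy as np

epoch_num = 14
learning_rate = np.linspace(0.03, 0.01, epoch_num)

def schedule(epoch):
    # Same lookup as the lambda passed to LearningRateScheduler in the training code
    return float(learning_rate[epoch])

print(len(learning_rate), schedule(0), schedule(epoch_num - 1))  # 14 0.03 0.01
step = learning_rate[0] - learning_rate[1]
print(round(float(step), 5))  # 0.00154
```

The starting rate of 0.03 is 30 times Adam's default of 0.001. If the loss diverges, a smaller range may be worth trying.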

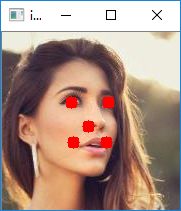

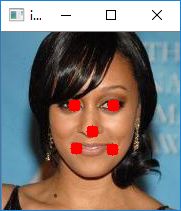
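`EarlyStopping(monitor='val_loss', patience=20)` stops training only after `val_loss` has failed to improve for about 20 consecutive epochs. Training runs for just 14 epochs (10 in the full code), so it cannot fire here. The pure-Python sketch below shows the patience-counting idea. It is simplified and is not Keras' actual implementation, which has more options such as `min_delta` and `mode` and may count off by one epoch differently.

```python
def early_stop_epoch(val_losses, patience):
    """Return the 0-based epoch at which training would stop, or None if it never stops.

    Simplified sketch of patience-based early stopping on a metric to minimize.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None

losses = [5.0, 4.0, 3.5, 3.6, 3.7, 3.8]           # invented validation losses
print(early_stop_epoch(losses, patience=3))       # 5
print(early_stop_epoch(losses * 3, patience=20))  # None: 18 epochs are fewer than the patience
```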

Step 6: Verify on an image


```python
import tes_main  # the complete code below, saved as tes_main.py
import cv2

FILE_PATH = 'E:\\pycode\\facial-keypoints-master\\code\\CNN_model_final.h5'
imgsize = 178

def point(img, x, y):
    # cv2.circle needs integer pixel coordinates
    cv2.circle(img, (int(x), int(y)), 1, (0, 0, 255), 10)

Model = tes_main.Model()
model = Model.__CNN__()
Model.load(model, FILE_PATH)

path = r"E:\pycode\facial-keypoints-master\data\50000test\049971.jpg"
imgs = cv2.imread(path)
# Keypoints are predicted in the 178x178 frame, so draw them on a resized copy
image = cv2.resize(imgs, (imgsize, imgsize))
rects = Model.predict(model, imgs)

# rects has shape (1, 10): x1, y1, x2, y2, ..., x5, y5
for x, y in rects[0].reshape(5, 2):
    point(image, x, y)

cv2.imshow('img', image)
cv2.waitKey(0)
cv2.destroyAllWindows()
```
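The prediction is a `(1, 10)` array in the 178×178 coordinate frame, which is why the script draws on the resized `image` rather than on the original `imgs`. To draw on the original image instead, scale each x by `original_width / 178` and each y by `original_height / 178`. OpenCV's drawing functions also expect integer pixel coordinates. The sketch below assumes the ten values are ordered x1, y1, ..., x5, y5, the same assumption the drawing loop makes. `to_original_points` is a hypothetical helper.

```python
import numpy as np

def to_original_points(pred, orig_w, orig_h, size=178):
    """Convert a (1, 10) prediction in the size x size frame into 5 integer (x, y) points
    in the original image's frame. Hypothetical helper; assumes x1, y1, ..., x5, y5 order."""
    pts = np.array(pred, dtype=np.float32).reshape(5, 2)  # copy, so the prediction is untouched
    pts[:, 0] *= orig_w / size
    pts[:, 1] *= orig_h / size
    return [(int(round(x)), int(round(y))) for x, y in pts]

pred = np.array([[89, 89, 0, 0, 178, 178, 0, 178, 178, 0]])
print(to_original_points(pred, orig_w=178, orig_h=218))
```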

The complete code is as follows:


```python
from keras.models import Sequential
from keras.layers.core import Dense, Dropout, Activation, Flatten
from keras.layers.convolutional import Conv2D, MaxPooling2D
from keras.preprocessing.image import load_img, img_to_array
from keras.optimizers import SGD  # only used by the commented-out SGD alternative
from keras.callbacks import LearningRateScheduler, EarlyStopping, ModelCheckpoint
import numpy as np
import cv2

FILE_PATH = 'E:\\pycode\\facial-keypoints-master\\code\\CNN_model_final.h5'
trainpath = 'E:/pycode/facial-keypoints-master/data/50000train/'
testpath = 'E:/pycode/facial-keypoints-master/data/50000test/'
imgsize = 178
train_samples = 40000
test_samples = 200
batch_size = 32


def __data_label__(path):
    # Each line of lable-40.txt: "<image name>,x1,y1,...,x5,y5"
    with open(path + "lable-40.txt", "r") as f:
        lines = [line.strip() for line in f if line.strip()]
    while True:  # Keras generators must loop forever
        for start in range(0, len(lines) - batch_size + 1, batch_size):
            images, labels = [], []
            for line in lines[start:start + batch_size]:
                b = line.split(",")
                image = img_to_array(load_img(path + b[0])).astype('float32')
                # image /= 255.0  # optional image normalization
                images.append(image)
                labels.append([float(v) for v in b[1:]])  # strings -> floats
            yield np.array(images), np.array(labels, dtype='float32')


###############
# Build the CNN model
###############
class Model(object):
    def __CNN__(self):
        model = Sequential()  # input: 178*178*3
        model.add(Conv2D(32, (3, 3), input_shape=(imgsize, imgsize, 3)))
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))

        model.add(Conv2D(32, (3, 3)))
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))

        model.add(Conv2D(64, (3, 3)))
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))

        model.add(Flatten())
        model.add(Dense(64))
        model.add(Activation('relu'))
        model.add(Dropout(0.5))
        model.add(Dense(10))  # regression output for 5 (x, y) keypoints, no softmax
        model.summary()
        return model

    def train(self, model):
        # 'accuracy' is meaningless for regression, so only the MSE loss is tracked
        model.compile(loss='mse', optimizer='adam')
        # optimizer = SGD(lr=0.03, momentum=0.9, nesterov=True)
        # model.compile(loss='mse', optimizer=optimizer)
        epoch_num = 10
        learning_rate = np.linspace(0.03, 0.01, epoch_num)
        change_lr = LearningRateScheduler(lambda epoch: float(learning_rate[epoch]))
        early_stop = EarlyStopping(monitor='val_loss', patience=20, verbose=1, mode='auto')
        check_point = ModelCheckpoint('CNN_model_final.h5', monitor='val_loss', verbose=0,
                                      save_best_only=True, save_weights_only=False,
                                      mode='auto', period=1)

        model.fit_generator(__data_label__(trainpath),
                            steps_per_epoch=train_samples // batch_size,
                            epochs=epoch_num,
                            callbacks=[check_point, early_stop, change_lr],
                            validation_data=__data_label__(testpath),
                            validation_steps=test_samples // batch_size)

        # model.fit(traindata, trainlabel, batch_size=32, epochs=50,
        #           validation_data=(testdata, testlabel))
        # Evaluate on the test set for a fixed number of batches
        print(model.evaluate_generator(__data_label__(testpath),
                                       steps=test_samples // batch_size))

    def save(self, model, file_path=FILE_PATH):
        print('Model Saved.')
        model.save_weights(file_path)

    def load(self, model, file_path=FILE_PATH):
        print('Model Loaded.')
        model.load_weights(file_path)

    def predict(self, model, image):
        # Predict keypoints for one BGR image from cv2.imread
        print(image.shape)
        image = cv2.resize(image, (imgsize, imgsize))
        # Training used load_img (RGB); convert from OpenCV's BGR to match
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB).astype('float32')
        image = np.expand_dims(image, axis=0)  # (1, 178, 178, 3)
        result = model.predict(image)
        print(result)
        return result


if __name__ == '__main__':
    # Train only when run directly, not when imported by the verification script
    m = Model()
    m.train(m.__CNN__())
```