Ubuntu 16.04: Building and Installing GPU TensorFlow from Source (Part 2)

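As described in the previous post, I installed the TensorFlow Object Detection API on TensorFlow 1.4.0, and the verification test failed with a serialized_options=None error. A newer TensorFlow is required. The latest release at the time of writing is 1.10.0; tempting as it is to try, I was wary of running into too many pitfalls, so the steps below install 1.8 instead.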

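Important: please read the whole post before following it. It has not been tidied up and is essentially a log of the installation process, including some back-and-forth, so read it to the end first!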

On matching versions, https://tensorflow.google.cn/install/install_linux#NVIDIARequirements says the following:

The following NVIDIA® hardware must be installed on your system:

- GPU card with CUDA Compute Capability 3.5 or higher. See NVIDIA documentation for a list of supported GPU cards.

The following NVIDIA® software must be installed on your system:

- GPU drivers. CUDA 9.0 requires 384.x or higher.

- CUDA Toolkit 9.0.

- cuDNN SDK (>= 7.2).

- CUPTI ships with the CUDA Toolkit, but you also need to append its path to the LD_LIBRARY_PATH environment variable: export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/cuda/extras/CUPTI/lib64

- OPTIONAL: NCCL 2.2 to use TensorFlow with multiple GPUs.

- OPTIONAL: TensorRT which can improve latency and throughput for inference for some models.

1. Packages before and after the upgrade

| Software | Version before | Version after | Upgrade package | Notes |
| --- | --- | --- | --- | --- |
| Nvidia Driver | 390.87 | 390.87 | NVIDIA-Linux-x86_64-390.87.run | Already the latest version |
| CUDA | 9.0.176 | 9.2.148.1 | 1) cuda_9.2.148_396.37_linux.run 2) cuda_9.2.148.1_linux.run | 1) is the Base Installer; 2) is Patch 1 (released Aug 6, 2018) |
| CUDNN | 7.0.3 | 7.2.1.38 | cudnn-9.2-linux-x64-v7.2.1.38.tgz | Choose the cuDNN package that matches the CUDA version when downloading |
| TensorFlow | 1.4.0-rc1 | 1.8.0 | | |
| bazel | 0.12.0 | 0.16.1 | | |

CUDA download: https://developer.nvidia.com/cuda-downloads

cuDNN download: https://developer.nvidia.com/rdp/cudnn-download

bazel download: https://github.com/bazelbuild/bazel/releases

This is described as an upgrade, but in practice it differs little from a reinstall. Is there a more convenient way to upgrade?

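2. Upgrading CUDA

(1) Uninstall the old CUDA version

Uninstall CUDA 9.0.176 with the command below. The GPU driver is already a recent version and does not need to be uninstalled.

$ sudo /usr/local/cuda-9.0/bin/uninstall_cuda_9.0.pl

When it finishes, it prints "Not removing directory, it is not empty: /usr/local/cuda-9.0". The cuda-9.0 directory still contains the cuDNN files, so it is not empty and has to be deleted by hand:

$ sudo rm -R /usr/local/cuda-9.0

(2) Install CUDA 9.2.148

First install the CUDA 9.2.148 base package, cuda_9.2.148_396.37_linux.run. During installation, choose not to install the NVIDIA driver, because the graphics driver has already been installed separately.

$ sudo sh cuda_9.2.148_396.37_linux.run

Then install the patch package, cuda_9.2.148.1_linux.run:

$ sudo sh cuda_9.2.148.1_linux.run

(3) Update the environment variables

Open the .bashrc file in your home directory and edit it to match the actual installation path, as follows: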

export PATH=/usr/local/cuda/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:/usr/local/cuda/extras/CUPTI/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

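export CUDA_HOME=/usr/local/cuda

Make sure /usr/local contains the following directory and link: cuda-9.2 is the actual CUDA installation directory, and cuda is a symbolic link to it. A default installation creates both.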

lrwxrwxrwx 1 root root 19 9月 12 22:17 cuda -> /usr/local/cuda-9.2

drwxr-xr-x 18 root root 4096 9月 12 22:17 cuda-9.2
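The two conditions above (the exports in .bashrc and the cuda symbolic link) can also be checked from a script. The following is a minimal Python sketch, assuming the default /usr/local/cuda layout shown in the listing; the helper names are made up for illustration and are not part of any CUDA tooling.

```python
import os


def path_entries(value):
    """Split a colon-separated PATH-style string into its non-empty entries."""
    return [entry for entry in value.split(":") if entry]


def missing_entries(value, required):
    """Return the required directories that are absent from a PATH-style string."""
    present = set(path_entries(value))
    return [directory for directory in required if directory not in present]


def describe_link(link):
    """Report where a symbolic link points, or say that it is not a link."""
    if not os.path.islink(link):
        return "{} is not a symbolic link".format(link)
    return "{} -> {}".format(link, os.path.realpath(link))


if __name__ == "__main__":
    # Paths below are the ones used in this post; adjust them for other layouts.
    print(describe_link("/usr/local/cuda"))
    print("PATH is missing:",
          missing_entries(os.environ.get("PATH", ""), ["/usr/local/cuda/bin"]))
    print("LD_LIBRARY_PATH is missing:",
          missing_entries(os.environ.get("LD_LIBRARY_PATH", ""),
                          ["/usr/local/cuda/lib64",
                           "/usr/local/cuda/extras/CUPTI/lib64"]))
```

An empty list in the last two lines of output means the corresponding variable already contains every directory the post adds to it.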

(4) Check the CUDA version

$ cd

$ source .bashrc

$ nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2018 NVIDIA Corporation

Built on Tue_Jun_12_23:07:04_CDT_2018

Cuda compilation tools, release 9.2, V9.2.148
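If the version needs to be compared in a script rather than read by eye, the last line of this output is the part that matters. A small Python sketch, assuming the output format stays as shown above (the function names are illustrative):

```python
import re
import subprocess


def parse_nvcc_version(output):
    """Extract (release, full_version) from `nvcc --version` output, or None."""
    match = re.search(r"release\s+(\d+\.\d+),\s+V(\d+(?:\.\d+)*)", output)
    if match is None:
        return None
    return match.group(1), match.group(2)


def nvcc_version_from_system():
    """Run nvcc on this machine and parse its output (requires nvcc on PATH)."""
    output = subprocess.check_output(["nvcc", "--version"], universal_newlines=True)
    return parse_nvcc_version(output)


# The output recorded in this post, used here as sample input.
SAMPLE = """nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2018 NVIDIA Corporation
Built on Tue_Jun_12_23:07:04_CDT_2018
Cuda compilation tools, release 9.2, V9.2.148
"""

print(parse_nvcc_version(SAMPLE))  # ('9.2', '9.2.148')
```

Note that nvcc reports the toolkit version only; it says nothing about the installed GPU driver.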

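3. Upgrading cuDNN

The cuDNN upgrade is similar to the CUDA upgrade.

(1) Uninstall the old cuDNN version

The CUDA uninstall step already removed both /usr/local/cuda and /usr/local/cuda-9.0, which removed the old cuDNN along with them, so nothing else is needed.

(2) Install cuDNN 7.2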

$ tar xvf cudnn-9.2-linux-x64-v7.2.1.38.tgz

$ cd cuda

$ sudo cp include/cudnn.h /usr/local/cuda/include/

$ sudo cp lib64/lib* /usr/local/cuda/lib64/

(3) Create the symbolic links

Open a terminal in /usr/local/cuda/lib64 and run the following commands:

$ cd /usr/local/cuda/lib64

$ sudo chmod +r libcudnn.so.7.2.1

$ sudo ln -sf libcudnn.so.7.2.1 libcudnn.so.7

$ sudo ln -sf libcudnn.so.7 libcudnn.so

$ sudo ldconfig

(4) Check the current cuDNN version

$ cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR -A 2

#define CUDNN_MAJOR 7

#define CUDNN_MINOR 2

#define CUDNN_PATCHLEVEL 1

--

#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)

#include "driver_types.h"

The new version is 7.2.1.
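The same three defines can be read programmatically. The sketch below is one way to do it in Python, assuming the header keeps the `#define CUDNN_MAJOR/MINOR/PATCHLEVEL` layout shown in the grep output; the function names are hypothetical. It also evaluates the CUDNN_VERSION macro by hand.

```python
import re


def parse_cudnn_version(header_text):
    """Return (major, minor, patch) from cudnn.h text, or None if a define is missing."""
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        pattern = r"^[ \t]*#define[ \t]+" + name + r"[ \t]+(\d+)[ \t]*$"
        match = re.search(pattern, header_text, re.MULTILINE)
        if match is None:
            return None
        parts.append(int(match.group(1)))
    return tuple(parts)


def cudnn_version_number(version):
    """Mirror the CUDNN_VERSION macro: MAJOR * 1000 + MINOR * 100 + PATCHLEVEL."""
    major, minor, patch = version
    return major * 1000 + minor * 100 + patch


# The grep output recorded in this post, used as sample input.
SAMPLE = """#define CUDNN_MAJOR 7
#define CUDNN_MINOR 2
#define CUDNN_PATCHLEVEL 1
--
#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)
"""

version = parse_cudnn_version(SAMPLE)
print(version, cudnn_version_number(version))  # (7, 2, 1) 7201
```

To check a real installation, pass the contents of /usr/local/cuda/include/cudnn.h instead of the sample string.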

4. Upgrading bazel

Downloading the installer from https://github.com/bazelbuild/bazel/releases was far too slow, so I upgraded with the following command instead:

$ sudo apt-get upgrade bazel

$ bazel version

Extracting Bazel installation...

Starting local Bazel server and connecting to it...

Build label: 0.16.1

Build target: bazel-out/k8-opt/bin/src/main/java/com/google/devtools/build/lib/bazel/BazelServer_deploy.jar

Build time: Mon Aug 13 13:43:36 2018 (1534167816)

Build timestamp: 1534167816

Build timestamp as int: 1534167816

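5. Upgrading TensorFlow

Build and install TensorFlow 1.8 from source, with GPU support.

(1) Download the TensorFlow source

Cloning the TensorFlow source directly with the command below is extremely slow. As a workaround, add the following two lines to /etc/hosts:

151.101.72.249 global-ssl.fastly.net

192.30.253.112 github.com

Then restart networking:

$ sudo /etc/init.d/networking restart

Run the clone again and the download is much faster:

$ git clone https://github.com/tensorflow/tensorflow

$ cd tensorflow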

$ git checkout r1.8

Branch r1.8 set up to track remote branch r1.8 from origin.

Switched to a new branch 'r1.8'

(2) Configure the TensorFlow build environment

$ ./configure

WARNING: --batch mode is deprecated. Please instead explicitly shut down your Bazel server using the command "bazel shutdown".

You have bazel 0.16.1 installed.

Please specify the location of python. [Default is /home/kou/anaconda3/bin/python]:

Found possible Python library paths:

/home/kou/anaconda3/lib/python3.6/site-packages

/home/kou/tensorflow/models/research

/home/kou/tensorflow/models/research/slim

Please input the desired Python library path to use. Default is [/home/kou/anaconda3/lib/python3.6/site-packages]

Do you wish to build TensorFlow with jemalloc as malloc support? [Y/n]: n

No jemalloc as malloc support will be enabled for TensorFlow.

Do you wish to build TensorFlow with Google Cloud Platform support? [Y/n]: n

No Google Cloud Platform support will be enabled for TensorFlow.

Do you wish to build TensorFlow with Hadoop File System support? [Y/n]: n

No Hadoop File System support will be enabled for TensorFlow.

Do you wish to build TensorFlow with Amazon S3 File System support? [Y/n]: n

No Amazon S3 File System support will be enabled for TensorFlow.

Do you wish to build TensorFlow with Apache Kafka Platform support? [Y/n]: n

No Apache Kafka Platform support will be enabled for TensorFlow.

Do you wish to build TensorFlow with XLA JIT support? [y/N]: n

No XLA JIT support will be enabled for TensorFlow.

Do you wish to build TensorFlow with GDR support? [y/N]: n

No GDR support will be enabled for TensorFlow.

Do you wish to build TensorFlow with VERBS support? [y/N]: n

No VERBS support will be enabled for TensorFlow.

Do you wish to build TensorFlow with OpenCL SYCL support? [y/N]: n

No OpenCL SYCL support will be enabled for TensorFlow.

Do you wish to build TensorFlow with CUDA support? [y/N]: y

CUDA support will be enabled for TensorFlow.

Please specify the CUDA SDK version you want to use, e.g. 7.0. [Leave empty to default to CUDA 9.0]: 9.2

Please specify the location where CUDA 9.2 toolkit is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:

Please specify the cuDNN version you want to use. [Leave empty to default to cuDNN 7.0]: 7.2

Please specify the location where cuDNN 7 library is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:

Do you wish to build TensorFlow with TensorRT support? [y/N]: n

No TensorRT support will be enabled for TensorFlow.

Please specify the NCCL version you want to use. [Leave empty to default to NCCL 1.3]:

Please specify a list of comma-separated Cuda compute capabilities you want to build with.

You can find the compute capability of your device at: https://developer.nvidia.com/cuda-gpus.

Please note that each additional compute capability significantly increases your build time and binary size. [Default is: 3.5,5.2]6.1

Do you want to use clang as CUDA compiler? [y/N]: n

nvcc will be used as CUDA compiler.

Please specify which gcc should be used by nvcc as the host compiler. [Default is /usr/bin/gcc]:

Do you wish to build TensorFlow with MPI support? [y/N]: n

No MPI support will be enabled for TensorFlow.

Please specify optimization flags to use during compilation when bazel option "--config=opt" is specified [Default is -march=native]:

Would you like to interactively configure ./WORKSPACE for Android builds? [y/N]: n

Not configuring the WORKSPACE for Android builds.

Preconfigured Bazel build configs. You can use any of the below by adding "--config=<>" to your build command. See tools/bazel.rc for more details.

--config=mkl # Build with MKL support.

--config=monolithic # Config for mostly static monolithic build.

Configuration finished

kou@aikou:~/Downloads/tensorflow$

(3) Build TensorFlow from source

$ bazel build -c opt --copt=-msse4.1 --copt=-msse4.2 --copt=-mavx --config=cuda --verbose_failures //tensorflow/tools/pip_package:build_pip_package

The build stalled at the output below, with the disk activity LED solidly lit:

[1,925 / 3,713] 16 actions running

Compiling tensorflow/contrib/seq2seq/kernels/beam_search_ops_gpu.cu.cc [for host]; 988s local

Compiling tensorflow/contrib/data/kernels/prefetching_kernels.cc [for host]; 987s local

Compiling tensorflow/contrib/fused_conv/kernels/fused_conv2d_bias_activation_op.cc [for host]; 985s local

Compiling tensorflow/contrib/nccl/kernels/nccl_manager.cc [for host]; 985s local

Compiling tensorflow/contrib/nccl/kernels/nccl_ops.cc [for host]; 984s local

Compiling tensorflow/contrib/resampler/kernels/resampler_ops_gpu.cu.cc [for host]; 984s local

Compiling tensorflow/contrib/resampler/ops/resampler_ops.cc [for host]; 984s local

Compiling tensorflow/contrib/resampler/kernels/resampler_ops.cc [for host]; 983s local ...

About an hour later, the following error appeared:

Server terminated abruptly (error code: 14, error message: '', log file: '/home/kou/.cache/bazel/_bazel_kou/e9328cc7a7c353977c81adfdbba54a6e/server/jvm.out')

The contents of that jvm.out file were:

$ more jvm.out

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by io.netty.util.internal.ReflectionUtil (file:/home/ko

u/.cache/bazel/_bazel_kou/install/ca1b8b7c3e5200be14b7f27896826862/_embedded_binaries/A-se

rver.jar) to field sun.nio.ch.SelectorImpl.selectedKeys

WARNING: Please consider reporting this to the maintainers of io.netty.util.internal.Refle

ctionUtil

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access

operations

WARNING: All illegal access operations will be denied in a future release

This suggested that the swap partition might be too small. Check it with the following command:

$ free -g

total used free shared buff/cache available

Mem: 7 1 5 0 0 5

Swap: 0 0 0

I added a 64 GB disk as a swap partition (the details are omitted here). Afterwards:

kou@aikou:/$ free -g

total used free shared buff/cache available

Mem: 7 2 1 0 3 4

Swap: 63 0 63
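The before and after readings can be compared in a script as well. This is a rough Python sketch that parses `free -g` output in the layout shown above; it assumes the first three numeric columns are total, used, and free, and the function name is made up for illustration.

```python
def parse_free(output):
    """Parse `free` output into {"Mem": {...}, "Swap": {...}} using the first three columns."""
    table = {}
    for line in output.splitlines():
        label, sep, rest = line.partition(":")
        if not sep:
            continue  # the header row has no "label:" prefix
        numbers = rest.split()
        if len(numbers) >= 3 and all(n.isdigit() for n in numbers[:3]):
            table[label.strip()] = {
                "total": int(numbers[0]),
                "used": int(numbers[1]),
                "free": int(numbers[2]),
            }
    return table


# The two readings recorded in this post (values are in GiB because of -g).
BEFORE = """total used free shared buff/cache available
Mem: 7 1 5 0 0 5
Swap: 0 0 0
"""
AFTER = """total used free shared buff/cache available
Mem: 7 2 1 0 3 4
Swap: 63 0 63
"""

print(parse_free(BEFORE)["Swap"]["total"])  # 0
print(parse_free(AFTER)["Swap"]["total"])   # 63
```

The post does not establish how much swap the build actually needs; 64 GB is simply what was added here, so treat any threshold built on this as an assumption.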

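Running the build command again, the build proceeded normally with no errors (though with plenty of warnings). It takes a very long time, however: 11,643 actions in total, worked through one after another, so be patient. According to the output below, it took about 6 hours in all.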

Target //tensorflow/tools/pip_package:build_pip_package up-to-date:

bazel-bin/tensorflow/tools/pip_package/build_pip_package

INFO: Elapsed time: 21605.219s, Critical Path: 21060.97s

INFO: 8722 processes: 8722 local.

INFO: Build completed successfully, 11643 total actions
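The "about 6 hours" figure follows directly from the Elapsed time line. As a quick arithmetic check in Python (the helper name is illustrative):

```python
def format_elapsed(seconds):
    """Convert a duration in seconds to an 'Hh MMm SSs' string, dropping fractions."""
    hours, remainder = divmod(int(seconds), 3600)
    minutes, secs = divmod(remainder, 60)
    return "{}h {:02d}m {:02d}s".format(hours, minutes, secs)


# Figures copied from the bazel summary above.
print(format_elapsed(21605.219))  # 6h 00m 05s  (elapsed time)
print(format_elapsed(21060.97))   # 5h 51m 00s  (critical path)
```

The critical path is nearly as long as the total elapsed time, which is consistent with the observation that the actions were processed more or less one after another.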

(4) Generate the pip package

Run the following command:

$ bazel-bin/tensorflow/tools/pip_package/build_pip_package /tmp/tensorflow_pkg

2018年 09月 16日 星期日 20:09:46 CST : === Using tmpdir: /tmp/tmp.T3qmbD3fJE

~/Downloads/tensorflow/bazel-bin/tensorflow/tools/pip_package/build_pip_package.runfiles ~/Downloads/tensorflow

~/Downloads/tensorflow

/tmp/tmp.T3qmbD3fJE ~/Downloads/tensorflow

2018年 09月 16日 星期日 20:09:49 CST : === Building wheel

warning: no files found matching '*.dll' under directory '*'

warning: no files found matching '*.lib' under directory '*'

warning: no files found matching '*' under directory 'tensorflow/aux-bin'

warning: no files found matching '*.h' under directory 'tensorflow/include/tensorflow'

warning: no files found matching '*' under directory 'tensorflow/include/Eigen'

warning: no files found matching '*' under directory 'tensorflow/include/external'

warning: no files found matching '*.h' under directory 'tensorflow/include/google'

warning: no files found matching '*' under directory 'tensorflow/include/third_party'

warning: no files found matching '*' under directory 'tensorflow/include/unsupported'

~/Downloads/tensorflow

2018年 09月 16日 星期日 20:10:15 CST : === Output wheel file is in: /tmp/tensorflow_pkg

The package generated in /tmp/tensorflow_pkg is tensorflow-1.8.0-cp36-cp36m-linux_x86_64.whl:

kou@aikou:~/Downloads/tensorflow$ ls -al /tmp/tensorflow_pkg

total 81432

drwxrwxr-x 2 kou kou 4096 9月 16 20:10 .

drwxrwxrwt 19 root root 28672 9月 16 20:10 ..

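-rw-rw-r-- 1 kou kou 83352985 9月 16 20:10 tensorflow-1.8.0-cp36-cp36m-linux_x86_64.whl

(5) Install

Before installing, uninstall the existing 1.4 version of TensorFlow:

$ sudo pip uninstall tensorflow==1.4

Note that the install log below still reports an existing tensorflow 1.4.0rc1 and removes it, so this command apparently did not affect the Anaconda environment. Then install with the following command: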

kou@aikou:~/Downloads/tensorflow$ pip install /tmp/tensorflow_pkg/tensorflow-1.8.0-cp36-cp36m-linux_x86_64.whl

Processing /tmp/tensorflow_pkg/tensorflow-1.8.0-cp36-cp36m-linux_x86_64.whl

Collecting tensorboard<1.9.0,>=1.8.0 (from tensorflow==1.8.0)

Using cached https://files.pythonhosted.org/packages/59/a6/0ae6092b7542cfedba6b2a1c9b8dceaf278238c39484f3ba03b03f07803c/tensorboard-1.8.0-py3-none-any.whl

Collecting grpcio>=1.8.6 (from tensorflow==1.8.0)

Downloading https://files.pythonhosted.org/packages/a7/9c/523fec4e50cd4de5effeade9fab6c1da32e7e1d72372e8e514274ffb6509/grpcio-1.15.0-cp36-cp36m-manylinux1_x86_64.whl (9.5MB)

100% |████████████████████████████████| 9.5MB 245kB/s

Collecting absl-py>=0.1.6 (from tensorflow==1.8.0)

Downloading https://files.pythonhosted.org/packages/a7/86/67f55488ec68982270142c340cd23cd2408835dc4b24bd1d1f1e114f24c3/absl-py-0.4.1.tar.gz (88kB)

100% |████████████████████████████████| 92kB 29kB/s

Requirement already satisfied: numpy>=1.13.3 in /home/kou/anaconda3/lib/python3.6/site-packages (from tensorflow==1.8.0) (1.13.3)

Requirement already satisfied: six>=1.10.0 in /home/kou/anaconda3/lib/python3.6/site-packages (from tensorflow==1.8.0) (1.11.0)

Collecting termcolor>=1.1.0 (from tensorflow==1.8.0)

Using cached https://files.pythonhosted.org/packages/8a/48/a76be51647d0eb9f10e2a4511bf3ffb8cc1e6b14e9e4fab46173aa79f981/termcolor-1.1.0.tar.gz

Requirement already satisfied: wheel>=0.26 in /home/kou/anaconda3/lib/python3.6/site-packages (from tensorflow==1.8.0) (0.29.0)

Requirement already satisfied: protobuf>=3.4.0 in /home/kou/anaconda3/lib/python3.6/site-packages (from tensorflow==1.8.0) (3.5.0.post1)

Collecting astor>=0.6.0 (from tensorflow==1.8.0)

Downloading https://files.pythonhosted.org/packages/35/6b/11530768cac581a12952a2aad00e1526b89d242d0b9f59534ef6e6a1752f/astor-0.7.1-py2.py3-none-any.whl

Collecting gast>=0.2.0 (from tensorflow==1.8.0)

Using cached https://files.pythonhosted.org/packages/5c/78/ff794fcae2ce8aa6323e789d1f8b3b7765f601e7702726f430e814822b96/gast-0.2.0.tar.gz

Requirement already satisfied: werkzeug>=0.11.10 in /home/kou/anaconda3/lib/python3.6/site-packages (from tensorboard<1.9.0,>=1.8.0->tensorflow==1.8.0) (0.12.2)

Requirement already satisfied: markdown>=2.6.8 in /home/kou/anaconda3/lib/python3.6/site-packages (from tensorboard<1.9.0,>=1.8.0->tensorflow==1.8.0) (2.6.10)

Requirement already satisfied: bleach==1.5.0 in /home/kou/anaconda3/lib/python3.6/site-packages (from tensorboard<1.9.0,>=1.8.0->tensorflow==1.8.0) (1.5.0)

Requirement already satisfied: html5lib==0.9999999 in /home/kou/anaconda3/lib/python3.6/site-packages (from tensorboard<1.9.0,>=1.8.0->tensorflow==1.8.0) (0.9999999)

Requirement already satisfied: setuptools in /home/kou/anaconda3/lib/python3.6/site-packages (from protobuf>=3.4.0->tensorflow==1.8.0) (36.5.0.post20170921)

Building wheels for collected packages: absl-py, termcolor, gast

Running setup.py bdist_wheel for absl-py ... done

Stored in directory: /home/kou/.cache/pip/wheels/78/a3/a3/689120b95c26b9a21be6584b4b482b0fda0a1b60efd30af558

Running setup.py bdist_wheel for termcolor ... done

Stored in directory: /home/kou/.cache/pip/wheels/7c/06/54/bc84598ba1daf8f970247f550b175aaaee85f68b4b0c5ab2c6

Running setup.py bdist_wheel for gast ... done

Stored in directory: /home/kou/.cache/pip/wheels/9a/1f/0e/3cde98113222b853e98fc0a8e9924480a3e25f1b4008cedb4f

Successfully built absl-py termcolor gast

Installing collected packages: tensorboard, grpcio, absl-py, termcolor, astor, gast, tensorflow

Found existing installation: tensorflow 1.4.0rc1

Uninstalling tensorflow-1.4.0rc1:

Successfully uninstalled tensorflow-1.4.0rc1

Successfully installed absl-py-0.4.1 astor-0.7.1 gast-0.2.0 grpcio-1.15.0 tensorboard-1.8.0 tensorflow-1.8.0 termcolor-1.1.0

kou@aikou:~/Downloads/tensorflow$
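The wheel filename used in the install command encodes which interpreter it was built for: cp36 means CPython 3.6, matching the Anaconda Python selected during configure. The Python sketch below splits a wheel filename into its dash-separated fields, following the standard wheel naming convention; the function names are hypothetical.

```python
import sys


def parse_wheel_filename(filename):
    """Split a wheel filename into name, version, optional build, and the three tags."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file: " + filename)
    parts = filename[:-len(".whl")].split("-")
    if len(parts) == 5:
        name, version, python_tag, abi_tag, platform_tag = parts
        build = None
    elif len(parts) == 6:
        name, version, build, python_tag, abi_tag, platform_tag = parts
    else:
        raise ValueError("unexpected wheel filename: " + filename)
    return {
        "name": name,
        "version": version,
        "build": build,
        "python_tag": python_tag,
        "abi_tag": abi_tag,
        "platform_tag": platform_tag,
    }


def cpython_tag(version_info):
    """Build the CPython tag (for example 'cp36') for a version tuple."""
    return "cp{}{}".format(version_info[0], version_info[1])


info = parse_wheel_filename("tensorflow-1.8.0-cp36-cp36m-linux_x86_64.whl")
print(info["version"], info["python_tag"], info["platform_tag"])  # 1.8.0 cp36 linux_x86_64
print("matches this interpreter:", info["python_tag"] == cpython_tag(sys.version_info))
```

If the last line prints False, pip run from that interpreter would be expected to reject the wheel as unsupported on the platform.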

(6) Verify

kou@aikou:~$ python

Python 3.6.3 |Anaconda custom (64-bit)| (default, Oct 13 2017, 12:02:49)

[GCC 7.2.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorflow as tf

>>> print(tf.__version__)

1.8.0

>>> hello = tf.constant("Hello, Tensorflow")

>>> seee = tf.Session()

2018-09-16 20:31:57.727331: I tensorflow/core/platform/cpu_feature_guard.cc:140] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2018-09-16 20:31:58.617543: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:898] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2018-09-16 20:31:58.618193: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1356] Found device 0 with properties:

name: GeForce GTX 1070 Ti major: 6 minor: 1 memoryClockRate(GHz): 1.683

pciBusID: 0000:26:00.0

totalMemory: 7.93GiB freeMemory: 7.63GiB

2018-09-16 20:31:58.618218: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1435] Adding visible gpu devices: 0

2018-09-16 20:31:58.618390: E tensorflow/core/common_runtime/direct_session.cc:154] Internal: cudaGetDevice() failed. Status: CUDA driver version is insufficient for CUDA runtime version

Traceback (most recent call last):

File "

File "/home/kou/anaconda3/lib/python3.6/site-packages/tensorflow/python/client/session.py", line 1560, in __init__

super(Session, self).__init__(target, graph, config=config)

File "/home/kou/anaconda3/lib/python3.6/site-packages/tensorflow/python/client/session.py", line 633, in __init__

self._session = tf_session.TF_NewSession(self._graph._c_graph, opts)

tensorflow.python.framework.errors_impl.InternalError: Failed to create session.

>>>

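The error "CUDA driver version is insufficient for CUDA runtime version" means that the CUDA driver is older than the CUDA runtime requires. From the installation above, the CUDA driver version (that is, the GPU driver version) is 390.87, and the CUDA runtime version is 9.2.148.1.

The problem is that this GPU driver was already the newest one available for download from the NVIDIA website; no newer version could be found, as shown below: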

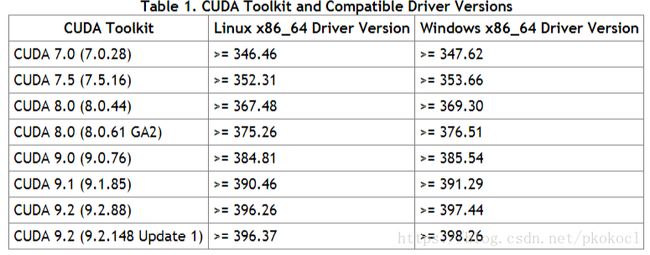

NVIDIA documents the pairing between CUDA driver and CUDA runtime versions at https://docs.nvidia.com/cuda/cuda-toolkit-release-notes/index.html

The installed CUDA toolkit (that is, the CUDA runtime) is 9.2.148 Update 1, which requires CUDA driver 396.37 or later.
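The mismatch is a plain version comparison. Here is a minimal Python sketch of that check; the lookup table contains only the two minimum driver versions quoted in this post, and the names are made up for illustration. Consult NVIDIA's release notes for any other CUDA release.

```python
# Minimum driver versions quoted in this post; other CUDA releases are not listed here.
MIN_DRIVER_FOR_CUDA = {
    "9.0": "384",         # "CUDA 9.0 requires 384.x or higher"
    "9.2.148": "396.37",  # CUDA 9.2.148 Update 1
}


def version_tuple(text):
    """Turn a dotted version such as '396.37' into a tuple of ints for comparison."""
    return tuple(int(part) for part in text.split("."))


def driver_is_sufficient(driver_version, cuda_version):
    """Return True if the driver meets the minimum listed for the given CUDA version."""
    required = MIN_DRIVER_FOR_CUDA[cuda_version]
    return version_tuple(driver_version) >= version_tuple(required)


print(driver_is_sufficient("390.87", "9.2.148"))  # False: the failing combination here
print(driver_is_sufficient("396.37", "9.2.148"))  # True
print(driver_is_sufficient("390.87", "9.0"))      # True: why the old setup worked
```

Comparing tuples of integers rather than strings avoids mistakes such as treating "396.9" as newer than "396.37".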

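Given the situation, there are two possible fixes:

Option 1: replace GPU driver 390.87 with 396.37 or later.

Option 2: keep GPU driver 390.87 and switch to the CUDA toolkit version that pairs with it according to NVIDIA's compatibility table, namely CUDA 9.1.85.

However, the CUDA version is tied to the cuDNN version, and both were specified when running configure for the TensorFlow source. Option 2 would therefore mean rebuilding TensorFlow, so it is not practical.

Yet version 396.37 was nowhere to be found among the latest drivers on the official site. Thinking back, the CUDA toolkit installer is named cuda_9.2.148_396.37_linux.run: it already bundles driver 396.37, and I had simply answered no during installation. This is the pitfall: the driver offered on NVIDIA's dedicated GPU driver download page was older than the one bundled in this installer.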

6. Uninstall the GPU driver and reinstall CUDA

(1) Uninstall the GPU driver

Press Ctrl+Alt+F1 to switch to a text console.

$ sudo service lightdm stop

$ sudo ./NVIDIA-Linux-x86_64-390.87.run --uninstall

Verifying archive integrity... OK

Uncompressing NVIDIA Accelerated Graphics Driver for Linux-x86_64 390.87...

(2) Uninstall CUDA

$ sudo /usr/local/cuda-9.2/bin/uninstall_cuda_9.2.pl

$ sudo rm -rf /usr/local/cuda-9.2

(3) Install CUDA

$ sudo sh cuda_9.2.148_396.37_linux.run

...

Do you accept the previously read EULA?

accept/decline/quit: accept

Install NVIDIA Accelerated Graphics Driver for Linux-x86_64 396.37?

(y)es/(n)o/(q)uit: y

Do you want to install the OpenGL libraries?

(y)es/(n)o/(q)uit [ default is yes ]: n

Do you want to run nvidia-xconfig?

This will update the system X configuration file so that the NVIDIA X driver

is used. The pre-existing X configuration file will be backed up.

This option should not be used on systems that require a custom

X configuration, such as systems with multiple GPU vendors.

(y)es/(n)o/(q)uit [ default is no ]: y

Install the CUDA 9.2 Toolkit?

(y)es/(n)o/(q)uit: y

Enter Toolkit Location

[ default is /usr/local/cuda-9.2 ]:

Do you want to install a symbolic link at /usr/local/cuda?

(y)es/(n)o/(q)uit: y

Install the CUDA 9.2 Samples?

(y)es/(n)o/(q)uit: y

Enter CUDA Samples Location

[ default is /home/kou ]:

Installing the NVIDIA display driver...

Answer y to the following prompt so that the GPU driver is installed:

Install NVIDIA Accelerated Graphics Driver for Linux-x86_64 396.37?

(y)es/(n)o/(q)uit: y

Apply the patch and reinstall cuDNN; for both steps, follow sections 2 and 3 above.

Verify the versions:

$ sudo service lightdm start

$ nvidia-smi

Sun Sep 16 22:22:45 2018

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 396.37 Driver Version: 396.37 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 GeForce GTX 107... Off | 00000000:26:00.0 On | N/A |

| 0% 47C P0 37W / 180W | 59MiB / 8118MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| 0 16758 G /usr/lib/xorg/Xorg 57MiB |

+-----------------------------------------------------------------------------+

$ nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2018 NVIDIA Corporation

Built on Tue_Jun_12_23:07:04_CDT_2018

Cuda compilation tools, release 9.2, V9.2.148

$ cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR -A 2

#define CUDNN_MAJOR 7

#define CUDNN_MINOR 2

#define CUDNN_PATCHLEVEL 1

--

#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)

#include "driver_types.h"
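Of the three checks above, the nvidia-smi header is the one that reports the driver version, which is the value that was wrong earlier. A short Python sketch for pulling it out of the output, assuming the "Driver Version:" label shown above (the function name is illustrative):

```python
import re


def parse_driver_version(nvidia_smi_output):
    """Return the driver version string from nvidia-smi output, or None if absent."""
    match = re.search(r"Driver Version:\s*([\d.]+)", nvidia_smi_output)
    return match.group(1) if match else None


# Header line as recorded in this post.
SAMPLE = "| NVIDIA-SMI 396.37 Driver Version: 396.37 |"

print(parse_driver_version(SAMPLE))  # 396.37
```

On a live system the input would come from running nvidia-smi, for example through subprocess.check_output.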

7. Verify TensorFlow again

~$ python

Python 3.6.3 |Anaconda custom (64-bit)| (default, Oct 13 2017, 12:02:49)

[GCC 7.2.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorflow as tf

>>> print(tf.__version__)

1.8.0

>>> hello = tf.constant("Hello Tensorflow!")

>>> sess = tf.Session()

2018-09-16 22:27:46.669398: I tensorflow/core/platform/cpu_feature_guard.cc:140] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2018-09-16 22:27:46.890977: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:898] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2018-09-16 22:27:46.891498: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1356] Found device 0 with properties:

name: GeForce GTX 1070 Ti major: 6 minor: 1 memoryClockRate(GHz): 1.683

pciBusID: 0000:26:00.0

totalMemory: 7.93GiB freeMemory: 7.74GiB

2018-09-16 22:27:46.891516: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1435] Adding visible gpu devices: 0

2018-09-16 22:27:48.519109: I tensorflow/core/common_runtime/gpu/gpu_device.cc:923] Device interconnect StreamExecutor with strength 1 edge matrix:

2018-09-16 22:27:48.519159: I tensorflow/core/common_runtime/gpu/gpu_device.cc:929] 0

2018-09-16 22:27:48.519169: I tensorflow/core/common_runtime/gpu/gpu_device.cc:942] 0: N

2018-09-16 22:27:48.522931: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1053] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 7477 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1070 Ti, pci bus id: 0000:26:00.0, compute capability: 6.1)

>>> sess.run(hello)

b'Hello Tensorflow!'

>>>

TensorFlow is now at version 1.8 and everything runs correctly.
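Beyond the interactive session, the visible devices can be listed from a script. The sketch below is one possible approach for TensorFlow 1.x using device_lib.list_local_devices(); the filtering helper is kept separate so it can be exercised without TensorFlow installed, and both function names are made up for illustration.

```python
from collections import namedtuple


def gpu_device_names(devices):
    """Return the names of all devices whose device_type is 'GPU'."""
    return [device.name for device in devices if device.device_type == "GPU"]


def list_local_gpus():
    """Ask TensorFlow 1.x for its local devices and keep only the GPUs."""
    # Imported lazily so gpu_device_names() stays usable without TensorFlow.
    from tensorflow.python.client import device_lib
    return gpu_device_names(device_lib.list_local_devices())


# Stand-in objects with the two attributes the helper reads, for a dry run.
FakeDevice = namedtuple("FakeDevice", ["name", "device_type"])
sample = [FakeDevice("/device:CPU:0", "CPU"), FakeDevice("/device:GPU:0", "GPU")]
print(gpu_device_names(sample))  # ['/device:GPU:0']
```

On the machine from this post, calling list_local_gpus() would be expected to return a single GPU entry; an empty list would suggest TensorFlow fell back to CPU only.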

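There is one notice, though: "this TensorFlow binary was not compiled to use: AVX2 FMA". The CPU, an AMD 1700X, supports AVX2, but AVX2 support was not enabled when building the TensorFlow source in step 5 (3).

Adding it requires a rebuild, repeating steps 5 (3), 5 (4), and 5 (5). The build takes so long that this will have to wait until there is spare time.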
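For that rebuild, one likely approach is to add the matching compiler flags to the bazel command from step 5 (3). The Python sketch below reads the CPU flags in the /proc/cpuinfo format and derives the extra --copt options; -mavx2 and -mfma are GCC option names, but using them here is an assumption and was not tested in this post. The configure prompt also notes that -march=native applies only with --config=opt, whereas the command above used -c opt.

```python
# The build command from step 5 (3) of this post, split into arguments.
BASE_COMMAND = [
    "bazel", "build", "-c", "opt",
    "--copt=-msse4.1", "--copt=-msse4.2", "--copt=-mavx",
    "--config=cuda", "--verbose_failures",
    "//tensorflow/tools/pip_package:build_pip_package",
]

# CPU flag name in /proc/cpuinfo -> GCC option (assumed mapping, not from the post).
FLAG_TO_COPT = {"avx2": "-mavx2", "fma": "-mfma"}


def cpu_flags(cpuinfo_text):
    """Return the set of CPU feature flags from /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def extra_copts(flags):
    """Return --copt options for the AVX2/FMA features the CPU reports."""
    return ["--copt=" + FLAG_TO_COPT[name] for name in ("avx2", "fma") if name in flags]


def build_command(extra):
    """Insert extra options just before the build target."""
    return BASE_COMMAND[:-1] + list(extra) + BASE_COMMAND[-1:]


# Shortened, made-up cpuinfo excerpt for illustration.
sample_cpuinfo = "processor : 0\nflags : fpu sse4_1 sse4_2 avx avx2 fma\n"
print(" ".join(build_command(extra_copts(cpu_flags(sample_cpuinfo)))))
```

On a real machine, pass the contents of /proc/cpuinfo to cpu_flags() instead of the sample string.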

When installing from scratch or upgrading, work out the version compatibility of all the pieces first (GPU driver, CUDA, cuDNN, TensorFlow, bazel, and so on).