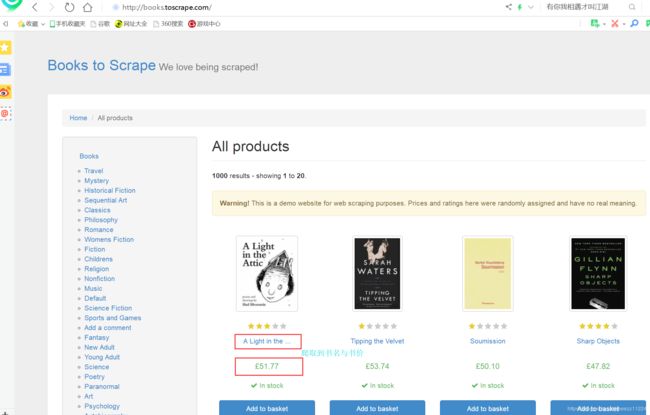

爬取静态页面内容加分页内容

实验网站http://books.toscrape.com/爬取

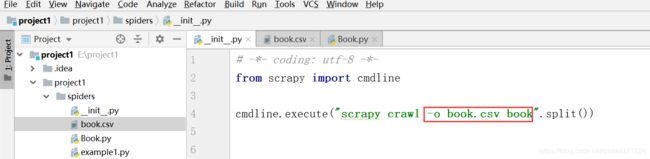

# -*- coding: utf-8 -*-

import scrapy

class Book(scrapy.Spider):

name = 'book' #爬虫标识名称

# allowed_domains = ['example.com']

start_urls = ['http://books.toscrape.com/'] #开始爬取的位置

def parse(self, response):

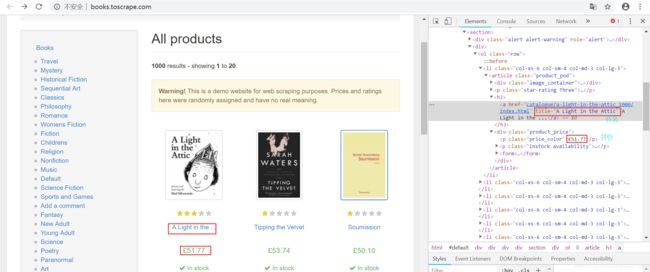

for book in response.xpath("//article[@class='product_pod']"):

book_name = book.xpath("./h3/a/@title").extract()

book_price = book.xpath("./div[@class='product_price']/p[@class='price_color']/text()").extract()

yield{

'name':book_name,

'price':book_price,

}

#获取下一页代码实现所有页数的数据的爬取

url = response.xpath("//li[@class='next']/a/@href").extract_first()

url2 = response.urljoin(url)

yield scrapy.Request(url2,callback=self.parse)

这个地址不是全部内容所以要拼截url2 = response.urljoin(url)

最后通过yield获取全部路径

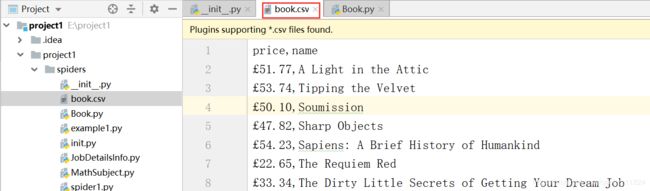

到此显示book网页的对书名与书价的静态数据爬取与分页的显示所有内容完成

例子2:

爬取51cto中的静态数据数据中输入关键字python,爬取,职位名,公司名,工作地点,薪资,发布时间

# -*- coding:utf-8 -*-

import scrapy

class Job51(scrapy.Spider):

name = 'job51' #爬虫名称

start_urls = ['https://search.51job.com/list/150200,000000,0000,00,9,99,python,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99°reefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=']

def parse(self, response):

for jobinfo in response.xpath("//div[@class='dw_wp']/div[@class='dw_table']/div[@class='el']"):

jobname = jobinfo.xpath("./p/span/a/@title").extract()

companyname = jobinfo.xpath("./span[@class='t2']/a/@title").extract()

place = jobinfo.xpath("./span[@class='t3']/text()").extract()

salary = jobinfo.xpath("./span[@class='t4']/text()").extract()

time = jobinfo.xpath("./span[@class='t5']/text()").extract()

yield{

'jobname':jobname,

' companyname':companyname,

'place':place,

'salary':salary,

'time':time,

}

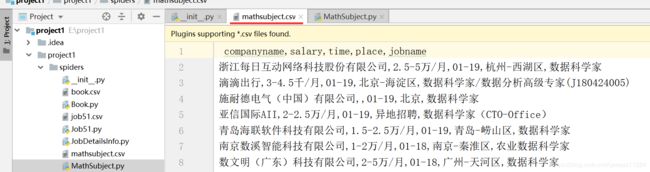

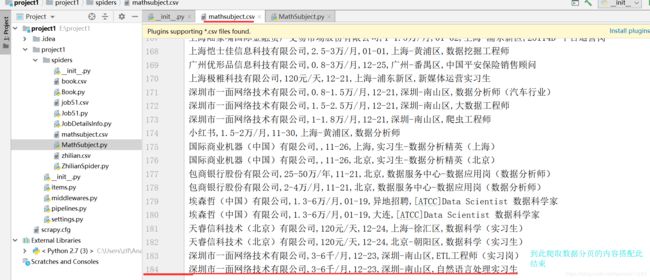

第三部分例子

爬取51job中的分页内容 我们输入关键字数据科学家

共有4页将爬取所有的数据

# -*- coding:utf-8 -*-

import scrapy

class MathSubject(scrapy.Spider):

name = 'mathsubject' #爬虫名称

start_urls = ['https://search.51job.com/list/000000,000000,0000,00,9,99,%25E6%2595%25B0%25E6%258D%25AE%25E7%25A7%2591%25E5%25AD%25A6%25E5%25AE%25B6,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99°reefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=']

def parse(self, response):

for jobinfo in response.xpath("//div[@class='dw_wp']/div[@class='dw_table']/div[@class='el']"):

jobname = jobinfo.xpath("./p/span/a/@title").extract()

companyname = jobinfo.xpath("./span[@class='t2']/a/@title").extract()

place = jobinfo.xpath("./span[@class='t3']/text()").extract()

salary = jobinfo.xpath("./span[@class='t4']/text()").extract()

time = jobinfo.xpath("./span[@class='t5']/text()").extract()

yield{

'jobname':jobname,

' companyname':companyname,

'place':place,

'salary':salary,

'time':time,

}

#上面是获取一页中的内容

#下面是获取下一页的网页地址路径

url = response.xpath("//li[@class='bk']")[1].xpath("./a/@href").extract_first()

yield scrapy.Request(url,callback=self.parse)

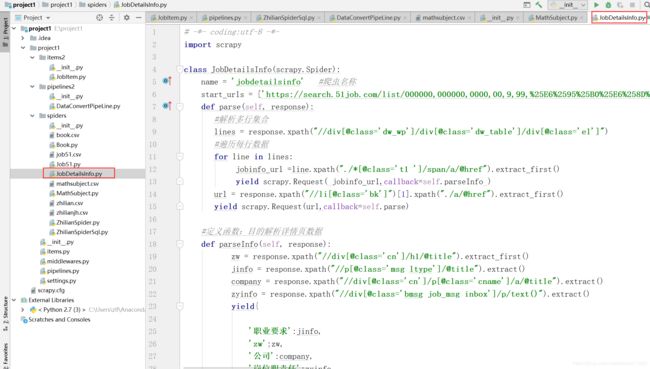

# -*- coding:utf-8 -*-

import scrapy

class JobDetailsInfo(scrapy.Spider):

name = 'jobdetailsinfo' #爬虫名称

start_urls = ['https://search.51job.com/list/000000,000000,0000,00,9,99,%25E6%2595%25B0%25E6%258D%25AE%25E7%25A7%2591%25E5%25AD%25A6%25E5%25AE%25B6,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99°reefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=']

def parse(self, response):

#解析多行集合

lines = response.xpath("//div[@class='dw_wp']/div[@class='dw_table']/div[@class='el']")

#遍历每行数据

for line in lines:

jobinfo_url =line.xpath("./*[@class='t1 ']/span/a/@href").extract_first()

yield scrapy.Request( jobinfo_url,callback=self.parseInfo )

url = response.xpath("//li[@class='bk']")[1].xpath("./a/@href").extract_first()

yield scrapy.Request(url,callback=self.parse)

#定义函数:目的解析详情页数据

def parseInfo(self, response):

zw = response.xpath("//div[@class='cn']/h1/@title").extract_first()

jinfo = response.xpath("//p[@class='msg ltype']/@title").extract()

company = response.xpath("//div[@class='cn']/p[@class='cname']/a/@title").extract()

zyinfo = response.xpath("//div[@class='bmsg job_msg inbox']/p/text()").extract()

yield{

'职业要求':jinfo,

'zw':zw,

'公司':company,

'岗位职责任':zyinfo

}

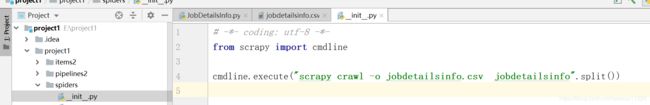

启动验证结果