Installing Caffe on Windows 10 with VS2015: A Detailed Guide

My installation environment:

1, Windows 10

2, VS2015. You can download the official Community edition, which is free to use.

3, Python 2.7. See http://blog.csdn.net/qq_14845119/article/details/52354394 for reference.

4, The official BVLC version of Caffe (windows branch): https://github.com/BVLC/caffe/tree/windows

5, CUDA 8.0, from the official site: https://developer.nvidia.com/cuda-toolkit

6, cuDNN v5.1, from the official site: https://developer.nvidia.com/cudnn

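Installation steps:

1, Installing CUDA and cuDNN

Install CUDA by clicking Next through the installer. After downloading cuDNN, extract it and copy the contents of its bin, include, and lib folders into the matching folders of your CUDA installation. On my machine the CUDA directory is C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v8.0, so the extracted files go into the bin, include, and lib folders under that directory.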
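A quick way to confirm the copy step worked is to check that the cuDNN files can be found under the CUDA directory. The following is a minimal sketch, not part of the original setup. The helper names (file_exists, missing_cudnn_files) are hypothetical, and the three relative file paths are assumptions about how the cuDNN v5.1 Windows package is laid out; adjust them to match what you actually extracted.

```cpp
#include <fstream>
#include <string>
#include <vector>

// Returns true if the file at `path` can be opened for reading.
bool file_exists(const std::string& path) {
    std::ifstream f(path.c_str(), std::ios::binary);
    return f.good();
}

// Lists the cuDNN files that cannot be found under `cuda_root`.
// ASSUMPTION: these relative paths reflect the cuDNN v5.1 Windows package.
std::vector<std::string> missing_cudnn_files(const std::string& cuda_root) {
    const char* expected[] = {
        "bin/cudnn64_5.dll",
        "include/cudnn.h",
        "lib/x64/cudnn.lib",
    };
    std::vector<std::string> missing;
    for (const char* rel : expected) {
        const std::string full = cuda_root + "/" + rel;
        if (!file_exists(full)) missing.push_back(full);
    }
    return missing;
}
```

Calling missing_cudnn_files("C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v8.0") and printing the returned paths shows which files, if any, still need to be copied. An empty result means all three were found.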

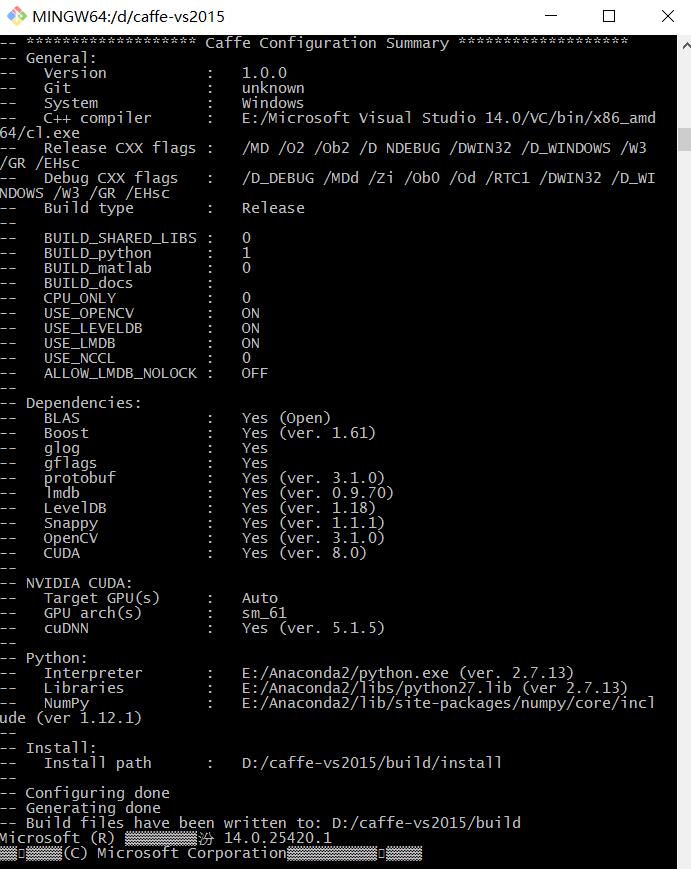

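2, Installing Caffe

Assume the extracted Caffe directory (cafferoot) is D:\caffe-vs2015. First edit D:\caffe-vs2015\scripts\build_win.cmd as follows.

Change line 8 to: if NOT DEFINED WITH_NINJA set WITH_NINJA=0, so that the build uses the Visual Studio generator (with the cl compiler) instead of Ninja.

Change line 9 to: if NOT DEFINED CPU_ONLY set CPU_ONLY=0, which builds the GPU version of Caffe.

Change line 74 to: if NOT DEFINED WITH_NINJA set WITH_NINJA=0, for the same reason as line 8.

After making these changes, go back to the parent directory (D:\caffe-vs2015) and run the following command in cmd.

scripts\build_win.cmd

CMake then automatically downloads the required dependencies, by default into C:\Users\<username>\.caffe\dependencies. Once the download finishes, CMake configures the project and generates the .sln solution that VS2015 needs.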

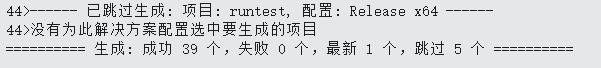

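When it finishes, Caffe.sln can be found under D:\caffe-vs2015\build. Open it with VS2015 and build the whole solution; this produces the required library files.

If you have made it this far, congratulations: Caffe has been built successfully. To confirm that the build actually works, let's run a simple test.

First, the downloaded dependency package needs a few small fixes:

1, In C:\Users\<username>\.caffe\dependencies\libraries_v140_x64_py27_1.1.0\libraries\include, move the boost folder out of the boost-1_61 folder so that it sits alongside boost-1_61. This lets VS find the Boost headers.

2, Copy libboost_date_time-vc140-mt-1_61.lib and libboost_filesystem-vc140-mt-1_61.lib into C:\Users\<username>\.caffe\dependencies\libraries_v140_x64_py27_1.1.0\libraries\lib. They were probably left out of the downloaded package. Both files can be downloaded here: http://download.csdn.net/download/qq_14845119/9962236

Now we can start on the test program. Let's get going.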

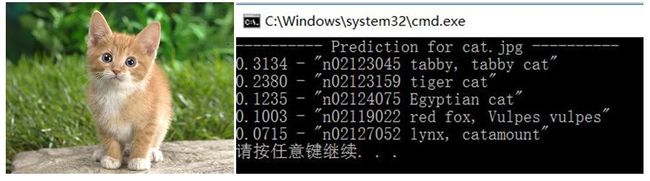

This example is based on D:\caffe-vs2015\examples\cpp_classification\classification.cpp, with a few simple modifications.

The main code is as follows:

Head.h

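#include "caffe/common.hpp"

#include "caffe/layers/input_layer.hpp"

#include "caffe/layers/inner_product_layer.hpp"

#include "caffe/layers/dropout_layer.hpp"

#include "caffe/layers/conv_layer.hpp"

#include "caffe/layers/relu_layer.hpp"

#include "caffe/layers/pooling_layer.hpp"

#include "caffe/layers/lrn_layer.hpp"

#include "caffe/layers/softmax_layer.hpp"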

namespace caffe

{

extern INSTANTIATE_CLASS(InputLayer);

extern INSTANTIATE_CLASS(InnerProductLayer);

extern INSTANTIATE_CLASS(DropoutLayer);

extern INSTANTIATE_CLASS(ConvolutionLayer);

extern INSTANTIATE_CLASS(ReLULayer);

extern INSTANTIATE_CLASS(PoolingLayer);

extern INSTANTIATE_CLASS(LRNLayer);

extern INSTANTIATE_CLASS(SoftmaxLayer);

}
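The post does not say why this header is needed, so what follows is an assumption. Caffe registers its layer types through objects constructed at program start-up, and when caffe.lib is linked as a static library the linker may discard object files that nothing references. The network then fails at run time with an unknown layer type. Referencing the layer classes explicitly, as Head.h does, keeps them in the executable. The sketch below illustrates the registration mechanism with a hypothetical miniature registry; Layer, Registrar, registry, and create_layer are illustrative names here, not Caffe's actual API.

```cpp
#include <functional>
#include <map>
#include <string>

// Hypothetical miniature of a layer registry; not Caffe's real classes.
struct Layer {
    virtual ~Layer() {}
    virtual std::string type() const = 0;
};

typedef std::function<Layer*()> Creator;

// A function-local static avoids static-initialization-order problems.
std::map<std::string, Creator>& registry() {
    static std::map<std::string, Creator> r;
    return r;
}

// Constructing a Registrar at namespace scope adds a creator before main() runs.
struct Registrar {
    Registrar(const std::string& name, Creator c) { registry()[name] = c; }
};

struct ReLULayer : Layer {
    std::string type() const { return "ReLU"; }
};

// If the linker drops the object file holding this variable, the
// registration never runs and "ReLU" cannot be created by name.
static Registrar g_relu_registrar("ReLU", []() -> Layer* { return new ReLULayer; });

// Looks up a layer by name; returns nullptr for an unregistered type.
Layer* create_layer(const std::string& name) {
    std::map<std::string, Creator>::const_iterator it = registry().find(name);
    return it == registry().end() ? nullptr : it->second();
}
```

In this single-file sketch the registrar is always linked, so create_layer("ReLU") succeeds. The failure mode only appears when the registrar lives in a static library and nothing in the application refers to its object file.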

Classification.cpp

#define USE_OPENCV 1

//#define CPU_ONLY 1

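#include <caffe/caffe.hpp>

#ifdef USE_OPENCV

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#endif // USE_OPENCV

#include <algorithm>

#include <iosfwd>

#include <memory>

#include <string>

#include <utility>

#include <vector>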

#include "head.h"

#ifdef USE_OPENCV

using namespace cv;

using namespace caffe; // NOLINT(build/namespaces)

using std::string;

// A prediction is a (label, confidence) pair.

typedef std::pair<string, float> Prediction;

class Classifier {

public:

Classifier(const string& model_file, const string& trained_file, const string& mean_file, const string& label_file);

std::vector<Prediction> Classify(const cv::Mat& img, int N = 5);

private:

void SetMean(const string& mean_file);

std::vector<float> Predict(const cv::Mat& img);

void WrapInputLayer(std::vector<cv::Mat>* input_channels);

void Preprocess(const cv::Mat& img, std::vector<cv::Mat>* input_channels);

private:

shared_ptr<Net<float> > net_;

cv::Size input_geometry_;

int num_channels_;

cv::Mat mean_;

std::vector<string> labels_;

};

Classifier::Classifier(const string& model_file,

const string& trained_file,

const string& mean_file,

const string& label_file) {

#ifdef CPU_ONLY

Caffe::set_mode(Caffe::CPU);

#else

Caffe::set_mode(Caffe::GPU);

#endif

net_.reset(new Net<float>(model_file, TEST));

net_->CopyTrainedLayersFrom(trained_file);

CHECK_EQ(net_->num_inputs(), 1) << "Network should have exactly one input.";

CHECK_EQ(net_->num_outputs(), 1) << "Network should have exactly one output.";

Blob<float>* input_layer = net_->input_blobs()[0];

num_channels_ = input_layer->channels();

CHECK(num_channels_ == 3 || num_channels_ == 1) << "Input layer should have 1 or 3 channels.";

input_geometry_ = cv::Size(input_layer->width(), input_layer->height());

SetMean(mean_file);

std::ifstream labels(label_file.c_str());

CHECK(labels) << "Unable to open labels file " << label_file;

string line;

while (std::getline(labels, line))

labels_.push_back(string(line));

Blob<float>* output_layer = net_->output_blobs()[0];

CHECK_EQ(labels_.size(), output_layer->channels()) << "Number of labels is different from the output layer dimension.";

}

static bool PairCompare(const std::pair<float, int>& lhs, const std::pair<float, int>& rhs) {

return lhs.first > rhs.first;

}

// Return the indices of the top N values of vector v.

static std::vector<int> Argmax(const std::vector<float>& v, int N) {

std::vector<std::pair<float, int> > pairs;

for (size_t i = 0; i < v.size(); ++i)

pairs.push_back(std::make_pair(v[i], static_cast<int>(i)));

std::partial_sort(pairs.begin(), pairs.begin() + N, pairs.end(), PairCompare);

std::vector<int> result;

for (int i = 0; i < N; ++i)

result.push_back(pairs[i].second);

return result;

}

std::vector<Prediction> Classifier::Classify(const cv::Mat& img, int N) {

std::vector<float> output = Predict(img);

N = std::min<int>(labels_.size(), N);

std::vector<int> maxN = Argmax(output, N);

std::vector<Prediction> predictions;

for (int i = 0; i < N; ++i) {

int idx = maxN[i];

predictions.push_back(std::make_pair(labels_[idx], output[idx]));

}

return predictions;

}

void Classifier::SetMean(const string& mean_file) {

BlobProto blob_proto;

ReadProtoFromBinaryFileOrDie(mean_file.c_str(), &blob_proto);

Blob<float> mean_blob;

mean_blob.FromProto(blob_proto);

CHECK_EQ(mean_blob.channels(), num_channels_) << "Number of channels of mean file doesn't match input layer.";

std::vector<cv::Mat> channels;

float* data = mean_blob.mutable_cpu_data();

for (int i = 0; i < num_channels_; ++i) {

cv::Mat channel(mean_blob.height(), mean_blob.width(), CV_32FC1, data);

channels.push_back(channel);

data += mean_blob.height() * mean_blob.width();

}

cv::Mat mean;

cv::merge(channels, mean);

cv::Scalar channel_mean = cv::mean(mean);

mean_ = cv::Mat(input_geometry_, mean.type(), channel_mean);

}

std::vector<float> Classifier::Predict(const cv::Mat& img) {

Blob<float>* input_layer = net_->input_blobs()[0];

input_layer->Reshape(1, num_channels_, input_geometry_.height, input_geometry_.width);

net_->Reshape();

std::vector<cv::Mat> input_channels;

WrapInputLayer(&input_channels);

Preprocess(img, &input_channels);

net_->Forward();

Blob<float>* output_layer = net_->output_blobs()[0];

const float* begin = output_layer->cpu_data();

const float* end = begin + output_layer->channels();

return std::vector<float>(begin, end);

}

void Classifier::WrapInputLayer(std::vector<cv::Mat>* input_channels) {

Blob<float>* input_layer = net_->input_blobs()[0];

int width = input_layer->width();

int height = input_layer->height();

float* input_data = input_layer->mutable_cpu_data();

for (int i = 0; i < input_layer->channels(); ++i) {

cv::Mat channel(height, width, CV_32FC1, input_data);

input_channels->push_back(channel);

input_data += width * height;

}

}

void Classifier::Preprocess(const cv::Mat& img, std::vector<cv::Mat>* input_channels) {

cv::Mat sample;

if (img.channels() == 3 && num_channels_ == 1)

cv::cvtColor(img, sample, cv::COLOR_BGR2GRAY);

else if (img.channels() == 4 && num_channels_ == 1)

cv::cvtColor(img, sample, cv::COLOR_BGRA2GRAY);

else if (img.channels() == 4 && num_channels_ == 3)

cv::cvtColor(img, sample, cv::COLOR_BGRA2BGR);

else if (img.channels() == 1 && num_channels_ == 3)

cv::cvtColor(img, sample, cv::COLOR_GRAY2BGR);

else

sample = img;

cv::Mat sample_resized;

if (sample.size() != input_geometry_)

cv::resize(sample, sample_resized, input_geometry_);

else

sample_resized = sample;

cv::Mat sample_float;

if (num_channels_ == 3)

sample_resized.convertTo(sample_float, CV_32FC3);

else

sample_resized.convertTo(sample_float, CV_32FC1);

cv::Mat sample_normalized;

cv::subtract(sample_float, mean_, sample_normalized);

cv::split(sample_normalized, *input_channels);

CHECK(reinterpret_cast<float*>(input_channels->at(0).data) == net_->input_blobs()[0]->cpu_data())

<< "Input channels are not wrapping the input layer of the network.";

}

int main(int argc, char** argv) {

::google::InitGoogleLogging(argv[0]);

string model_file = "deploy.prototxt";

string trained_file = "bvlc_reference_caffenet.caffemodel";

string mean_file = "imagenet_mean.binaryproto";

string label_file = "synset_words.txt";

Classifier classifier(model_file, trained_file, mean_file, label_file);

string file = "cat.jpg";

std::cout << "---------- Prediction for " << file << " ----------" << std::endl;

cv::Mat img = cv::imread(file,1);

CHECK(!img.empty()) << "Unable to decode image " << file;

std::vector<Prediction> predictions = classifier.Classify(img);

for (size_t i = 0; i < predictions.size(); ++i) {

Prediction p = predictions[i];

std::cout << std::fixed << std::setprecision(4) << p.second << " - \"" << p.first << "\"" << std::endl;

}

}

#else

int main(int argc, char** argv) {

LOG(FATAL) << "This example requires OpenCV; compile with USE_OPENCV.";

}

#endif // USE_OPENCV
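Two numeric steps in the listing above can be tried without Caffe or OpenCV: subtracting a per-channel mean from the input (what SetMean and Preprocess achieve together) and picking the N highest scores (what Argmax does). The sketch below is a simplified stand-in under stated assumptions, not the program's own code. It works on plain std::vector buffers in planar (channel-major) layout, and the function names subtract_channel_means and top_n_indices are hypothetical. Unlike Argmax in the listing, it also clamps n so that partial_sort cannot run past the end of the vector.

```cpp
#include <algorithm>
#include <cstddef>
#include <utility>
#include <vector>

// Subtracts one mean value per channel from a planar (channel-major) image.
// `image` holds channels * plane_size floats; `channel_means` has one value per channel.
void subtract_channel_means(std::vector<float>& image,
                            const std::vector<float>& channel_means) {
    if (channel_means.empty()) return;
    const std::size_t plane_size = image.size() / channel_means.size();
    for (std::size_t c = 0; c < channel_means.size(); ++c)
        for (std::size_t i = 0; i < plane_size; ++i)
            image[c * plane_size + i] -= channel_means[c];
}

// Returns the indices of the n largest scores, highest score first.
std::vector<int> top_n_indices(const std::vector<float>& scores, int n) {
    std::vector<std::pair<float, int> > pairs;
    for (std::size_t i = 0; i < scores.size(); ++i)
        pairs.push_back(std::make_pair(scores[i], static_cast<int>(i)));
    // Clamp n to the valid range [0, number of scores].
    n = std::max(0, std::min(n, static_cast<int>(pairs.size())));
    // Only the first n elements need to be in sorted order.
    std::partial_sort(pairs.begin(), pairs.begin() + n, pairs.end(),
                      [](const std::pair<float, int>& a,
                         const std::pair<float, int>& b) { return a.first > b.first; });
    std::vector<int> result;
    for (int i = 0; i < n; ++i) result.push_back(pairs[i].second);
    return result;
}
```

For scores {0.1, 0.7, 0.05, 0.15}, top_n_indices(scores, 2) selects index 1 first and index 3 second. In the real program those indices are then used to look up labels in labels_.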

Create a console application in VS and add the cpp file to it.

Configure the project as follows, targeting x64 in Release mode.

Include directories:

D:\caffe-vs2015\include

D:\caffe-vs2015\build\include

C:\Users\<username>\.caffe\dependencies\libraries_v140_x64_py27_1.1.0\libraries\include

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v8.0\include

Library directories:

C:\Users\<username>\.caffe\dependencies\libraries_v140_x64_py27_1.1.0\libraries\lib

D:\caffe-vs2015\build\lib\Release

C:\Users\<username>\.caffe\dependencies\libraries_v140_x64_py27_1.1.0\libraries\x64\vc14\lib

E:\Anaconda2\libs

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v8.0\lib\x64

Linker > Input:

opencv_core310.lib

opencv_highgui310.lib

opencv_imgproc310.lib

opencv_imgcodecs310.lib

caffe.lib

caffeproto.lib

caffehdf5.lib

caffehdf5_hl.lib

gflags.lib

glog.lib

leveldb.lib

libprotobuf.lib

libopenblas.dll.a

lmdb.lib

boost_python-vc140-mt-1_61.lib

boost_thread-vc140-mt-1_61.lib

cublas.lib

cuda.lib

cudart.lib

curand.lib

cudnn.lib

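Run the program. It prints the top predictions for cat.jpg along with their scores.

Happy now? You can finally go out and play.

P.S.

In an impatient world, don't give up just because a day or a week of effort has not paid off.

A word of encouragement. As one master put it: among people of the same level of civilization and the same ethnic background, the sum of every normal person's subconscious and conscious mind is essentially equal in terms of mental energy.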