HBase Notes 08: HBase error org.apache.hadoop.hbase.RegionTooBusyException

I ran a spark-submit job that writes to HBase, and it reported org.apache.hadoop.hbase.RegionTooBusyException. The full error is:

17/12/07 11:49:41 INFO client.AsyncProcess: #70, table=www:person_dist, attempt=10/35 failed=826ops, last exception: org.apache.hadoop.hbase.RegionTooBusyException: org.apache.hadoop.hbase.RegionTooBusyException: Above memstore limit, regionName=www:person_dist,,1512617928531.a5bc9f2b05e93ca73beb7b1057d83e29., server=bigdata01.hzjs.co,16020,1510713472687, memstoreSize=216421096, blockingMemStoreSize=209715200

at org.apache.hadoop.hbase.regionserver.HRegion.checkResources(HRegion.java:3647)

at org.apache.hadoop.hbase.regionserver.HRegion.batchMutate(HRegion.java:2872)

at org.apache.hadoop.hbase.regionserver.HRegion.batchMutate(HRegion.java:2823)

at org.apache.hadoop.hbase.regionserver.RSRpcServices.doBatchOp(RSRpcServices.java:758)

at org.apache.hadoop.hbase.regionserver.RSRpcServices.doNonAtomicRegionMutation(RSRpcServices.java:720)

at org.apache.hadoop.hbase.regionserver.RSRpcServices.multi(RSRpcServices.java:2168)

at org.apache.hadoop.hbase.protobuf.generated.ClientProtos$ClientService$2.callBlockingMethod(ClientProtos.java:33656)

at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2188)

at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:112)

at org.apache.hadoop.hbase.ipc.RpcExecutor.consumerLoop(RpcExecutor.java:133)

at org.apache.hadoop.hbase.ipc.RpcExecutor$1.run(RpcExecutor.java:108)

at java.lang.Thread.run(Thread.java:745)

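Further down in the log, however, the same batches are reported as succeeded (note that #70, which failed above, succeeds on a later attempt):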

17/12/07 12:05:26 INFO client.AsyncProcess: #66, table=www:person_dist, attempt=15/35 succeeded on bigdata01.hzjs.co,16020,1510713472687, tracking started Thu Dec 07 12:03:36 CST 2017

17/12/07 12:05:26 INFO client.AsyncProcess: #70, table=www:person_dist, attempt=15/35 succeeded on bigdata01.hzjs.co,16020,1510713472687, tracking started Thu Dec 07 12:03:36 CST 2017

17/12/07 12:05:26 INFO client.AsyncProcess: #68, table=www:person_dist, attempt=15/35 succeeded on bigdata01.hzjs.co,16020,1510713472687, tracking started Thu Dec 07 12:03:36 CST 2017

17/12/07 12:05:26 INFO client.AsyncProcess: #64, table=www:person_dist, attempt=15/35 succeeded on bigdata01.hzjs.co,16020,1510713472687, tracking started Thu Dec 07 12:03:36 CST 2017

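The cause is a write hotspot. When a region cannot keep up with incoming writes, its memstore grows past the blocking limit (memstoreSize=216421096 against blockingMemStoreSize=209715200 in the log above) and the region stops accepting writes. The client waits and retries until the region can take the request again, which is why the later attempts succeed. See the screenshot: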

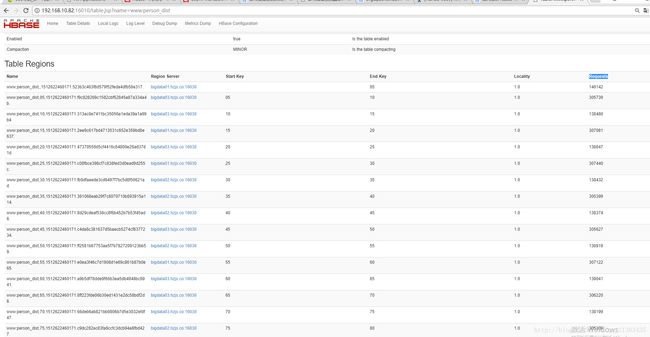

The table has only one region, and its Requests count is very high.
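The numbers in the exception message can be checked directly. The sketch below parses the two sizes out of the message copied from the log above and converts them to MiB. In HBase the blocking limit is the product of `hbase.hregion.memstore.flush.size` and `hbase.hregion.memstore.block.multiplier`; the values configured on this cluster are not shown in the log, so the code only works with the resulting limit. The helper name `parse_memstore_sizes` is made up for illustration.

```python
import re

MIB = 1024 * 1024

# Exception message copied from the AsyncProcess log line above.
message = (
    "Above memstore limit, "
    "regionName=www:person_dist,,1512617928531.a5bc9f2b05e93ca73beb7b1057d83e29., "
    "server=bigdata01.hzjs.co,16020,1510713472687, "
    "memstoreSize=216421096, blockingMemStoreSize=209715200"
)


def parse_memstore_sizes(text):
    """Return (memstore_size, blocking_size) in bytes (hypothetical helper)."""
    memstore = int(re.search(r"memstoreSize=(\d+)", text).group(1))
    blocking = int(re.search(r"blockingMemStoreSize=(\d+)", text).group(1))
    return memstore, blocking


memstore_size, blocking_size = parse_memstore_sizes(message)
overshoot = memstore_size - blocking_size

print(f"blocking limit: {blocking_size / MIB:.1f} MiB")
print(f"memstore size:  {memstore_size / MIB:.1f} MiB")
print(f"over the limit by {overshoot} bytes")
```

The region was holding a little more than its 200 MiB blocking limit, so every write sent to it was rejected until the memstore shrank again.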

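Solutions:

1. Pre-split the HBase table.

2. Alternatively, reformat the rowkey and reverse it, although this treats the symptom rather than the cause.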
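As a rough illustration of the second option, the sketch below reverses a rowkey so that its fastest-changing characters come first. The post does not show what the real rowkeys of `www:person_dist` look like, so the timestamp-plus-sequence format here is purely an assumption, and `reverse_rowkey` is a made-up helper name.

```python
def reverse_rowkey(rowkey: str) -> str:
    """Reverse the rowkey so the low-order characters lead (hypothetical helper)."""
    return rowkey[::-1]


# Assumed format: date followed by a sequence number. Consecutive keys share
# a long common prefix, so they all sort next to each other.
sequential_ids = ["20171207000001", "20171207000002", "20171207000003"]

reversed_keys = [reverse_rowkey(key) for key in sequential_ids]

for original, flipped in zip(sequential_ids, reversed_keys):
    print(original, "->", flipped)
```

After reversal, consecutive keys start with different characters and no longer sort side by side. This only spreads writes if the table has more than one region to spread them over, and it makes range scans in the original key order harder.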

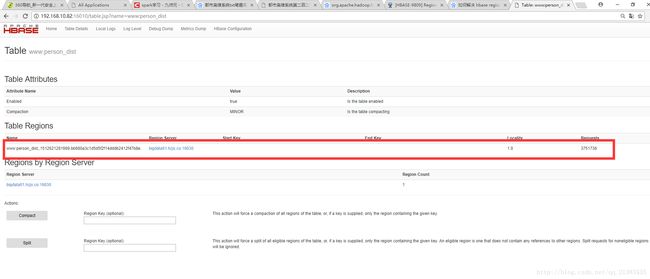

Pre-split the table:

create 'www:person_dist',{NAME=>'base',VERSIONS => 1}, SPLITS => ['05', '10','15', '20', '25', '30', '35', '40', '45', '50', '55', '60', '65', '70', '75', '80', '85', '90', '95']
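Pre-splitting only helps if the rowkeys being written actually fall into the different split ranges. One way to arrange that, sketched here under assumptions, is to prefix each rowkey with a two-digit salt derived from a hash of the key. The split points are the ones from the `create` command above; the `|` separator, the CRC32 hash and the sample `user-N` keys are illustrative choices, not something the original job is known to use.

```python
import bisect
import zlib

# The 19 split points from the create command: '05', '10', ..., '95'.
SPLITS = [f"{i:02d}" for i in range(5, 100, 5)]


def salted_rowkey(rowkey: str) -> str:
    """Prefix the key with a deterministic two-digit bucket in 00..99."""
    bucket = zlib.crc32(rowkey.encode("utf-8")) % 100
    return f"{bucket:02d}|{rowkey}"


def region_index(key: str) -> int:
    """Index of the region (0..19) whose [start, end) key range contains key."""
    return bisect.bisect_right(SPLITS, key)


# Count how many sample keys land in each of the 20 regions.
counts = [0] * (len(SPLITS) + 1)
for n in range(1000):
    counts[region_index(salted_rowkey(f"user-{n}"))] += 1

print(counts)
```

The trade-off is on the read side: a reader must recompute the same salt to fetch a row by its original key, and a scan over original key order has to visit every salt bucket.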

Run the program again.

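Note: in my case pre-splitting also only treated the symptom, and RegionTooBusyException was still reported. I have not yet worked out a proper fix, since it comes down to performance tuning. If you know one, please leave a comment.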