用TensorFlowObjectDetectionAPIModel 采用ssd_mobilenet_v1模型训练自己的数据:

1.下载 https://github.com/tensorflow/models.git 安装请自行百度,官网上也提供说明 我的路径 /home/sy/models/

2.制作数据集:

由于我之前用yolo训练过,识别率也可以,但是在移植转化的时候出里点问题,所哟用ssd尝试下,我现有的数据:

- 将标注文件.txt->.xml

import os

import xml.etree.ElementTree as ET

from PIL import Image

import numpy as np

def change_txt_xml(img_dir, labels_dir, annotations_dir, class_file):

labels = os.listdir(labels_dir)

with open(class_file, 'r')as f:

classes = f.readlines()

classes = [cls.strip('\n') for cls in classes]

def write_xml(imgname, filepath, labeldicts):

root = ET.Element('Annotation')

ET.SubElement(root, 'folder').text = 'image'

ET.SubElement(root, 'filename').text = str(imgname)

ET.SubElement(root,'path').text=str(img_dir)+str(imgname)

source = ET.SubElement(root, 'source')

ET.SubElement(source, 'databashe').text = 'Unknown'

size = ET.SubElement(root, 'size')

ET.SubElement(size, 'width').text = '1280'

ET.SubElement(size, 'height').text = '1024'

ET.SubElement(size, 'depth').text = '3'

# segmented = ET.SubElement(size, 'segmented')

ET.SubElement(root,'segmented').text = '0'

for labeldict in labeldicts:

objects = ET.SubElement(root, 'object')

ET.SubElement(objects, 'name').text = labeldict['name']

ET.SubElement(objects, 'pose').text = 'Unspecified'

ET.SubElement(objects, 'truncated').text = '0'

ET.SubElement(objects, 'difficult').text = '0'

bndbox = ET.SubElement(objects, 'bndbox')

ET.SubElement(bndbox, 'xmin').text = str(int(labeldict['xmin']))

ET.SubElement(bndbox, 'ymin').text = str(int(labeldict['ymin']))

ET.SubElement(bndbox, 'xmax').text = str(int(labeldict['xmax']))

ET.SubElement(bndbox, 'ymax').text = str(int(labeldict['ymax']))

tree = ET.ElementTree(root)

tree.write(filepath, encoding='utf-8')

for label in labels:

with open(labels_dir + label, 'r')as f:

img_id = os.path.splitext(label)[0]+'.jpg'

contents = f.readlines()

labeldicts = []

for content in contents:

img = np.array(Image.open(img_dir + label.strip('.txt') + '.jpg'))

sh, sw = img.shape[0], img.shape[1]

content = content.strip('\n').split()

x = float(content[1]) * sw

y = float(content[2]) * sh

w = float(content[3]) * sw

h = float(content[4]) * sh

new_dict = {'name': classes[int(content[0])],

'difficult': '0',

'xmin': x + 1 - w / 2,

'ymin': y + 1 - h / 2,

'xmax': x + 1 + w / 2,

'ymax': y + 1 + h / 2}

labeldicts.append(new_dict)

write_xml(img_id, annotations_dir + label.strip('.txt') + '.xml', labeldicts)

if __name__ == '__main__':

img_dir = '/home/sy/data/image_jpg/'

labels_dir = '/home/sy/data/label-txt/'

annotations_dir = '/home/sy/data/xml_jpg/'

class_file = '/home/sy/data/class/classes.txt'

change_txt_xml(img_dir,labels_dir,annotations_dir,class_file)

- 将.xml->.csv

import os

import glob

import pandas as pd

import xml.etree.ElementTree as ET

def xml_to_csv(path):

xml_list = []

for xml_file in glob.glob(path + '/*.xml'):

tree = ET.parse(xml_file)

root = tree.getroot()

for member in root.findall('object'):

value = (root.find('filename').text,

int(root.find('size')[0].text),

int(root.find('size')[1].text),

member[0].text,

int(member[4][0].text),

int(member[4][1].text),

int(member[4][2].text),

int(member[4][3].text)

)

xml_list.append(value)

column_name = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']

xml_df = pd.DataFrame(xml_list, columns=column_name)

return xml_df

if __name__=="__main__":

image_path = '/home/sy/data/test_xml_jpg'

xml_df = xml_to_csv(image_path)

xml_df.to_csv('/home/sy/data/pupils/data/test.csv', index=None)

print('Successfully converted xml to csv.')

- 将.csv->.record(注意train 和 test 分开) 把生成的.record放在一个文件夹下

/home/sy/data/pupils/data/

import os

import io

import pandas as pd

import tensorflow as tf

from PIL import Image

from object_detection.utils import dataset_util

from collections import namedtuple, OrderedDict

os.chdir('/home/sy/models/research/object_detection/')

flags = tf.app.flags

flags.DEFINE_string('csv_input', '', 'Path to the CSV input')

flags.DEFINE_string('output_path', '', 'Path to output TFRecord')

FLAGS = flags.FLAGS

# TO-DO replace this with label map

def class_text_to_int(row_label):

if row_label == 'pupils':

return 1

else:

None

def split(df, group):

data = namedtuple('data', ['filename', 'object'])

gb = df.groupby(group)

return [data(filename, gb.get_group(x)) for filename, x in zip(gb.groups.keys(), gb.groups)]

def create_tf_example(group, path):

with tf.gfile.GFile(os.path.join(path, '{}'.format(group.filename)), 'rb') as fid:

encoded_jpg = fid.read()

encoded_jpg_io = io.BytesIO(encoded_jpg)

image = Image.open(encoded_jpg_io)

width, height = image.size

filename = group.filename.encode('utf8')

image_format = b'jpg'

xmins = []

xmaxs = []

ymins = []

ymaxs = []

classes_text = []

classes = []

for index, row in group.object.iterrows():

xmins.append(row['xmin'] / width)

xmaxs.append(row['xmax'] / width)

ymins.append(row['ymin'] / height)

ymaxs.append(row['ymax'] / height)

classes_text.append(row['class'].encode('utf8'))

classes.append(class_text_to_int(row['class']))

tf_example = tf.train.Example(features=tf.train.Features(feature={

'image/height': dataset_util.int64_feature(height),

'image/width': dataset_util.int64_feature(width),

'image/filename': dataset_util.bytes_feature(filename),

'image/source_id': dataset_util.bytes_feature(filename),

'image/encoded': dataset_util.bytes_feature(encoded_jpg),

'image/format': dataset_util.bytes_feature(image_format),

'image/object/bbox/xmin': dataset_util.float_list_feature(xmins),

'image/object/bbox/xmax': dataset_util.float_list_feature(xmaxs),

'image/object/bbox/ymin': dataset_util.float_list_feature(ymins),

'image/object/bbox/ymax': dataset_util.float_list_feature(ymaxs),

'image/object/class/text': dataset_util.bytes_list_feature(classes_text),

'image/object/class/label': dataset_util.int64_list_feature(classes),

}))

return tf_example

def main(_):

writer = tf.python_io.TFRecordWriter(FLAGS.output_path)

path = os.path.join(os.getcwd(), '/home/sy/data/image_jpg')

examples = pd.read_csv(FLAGS.csv_input)

grouped = split(examples, 'filename')

for group in grouped:

tf_example = create_tf_example(group, path)

writer.write(tf_example.SerializeToString())

writer.close()

output_path = os.path.join(os.getcwd(), FLAGS.output_path)

print('Successfully created the TFRecords: {}'.format(output_path))

if __name__ == '__main__':

tf.app.run()- 生成.pbtxt文件 也放在/home/sy/data/pupils/data

item{

id:1

name:'pupils'

}- 修改config 文件

把/home/sy/models/research/object_detection/samples/configs/ssd_mobilenet_v1_coco.config文件 复制到 /home/sy/data/pupils/data 做如下修改

- num_classes: 1

- fine_tune_checkpoint: "/home/sy/data/pupils/data/ssd_mobilenet_v1_coco_2017_11_17/model.ckpt"

(需要下载预训练模型,如果你想从头开始,可以注释掉这句和下句)

3. 修改传入路径和传出路径

(官网上提供一条很长的指令,

sed -i "s|PATH_TO_BE_CONFIGURED|/home/user/model/reaearch/objectdetect/data|g" ssd_mobilenet_v2_coco.config)

也是在做这个工作,只不过你的数据需要在它指定的路径下,还是手动比较保险)

4.如果电脑显存不够的话,把batch_size设置的小点

batch_size: 2(我的GTX2080,大的也许可以)

3.开始训练

Pythonobject_detection/model_main.py --pipeline_config_path=/home/sy/data/pupils/data/ssd_mobilenet_v1_coco.config --model_dir=/home/sy/data/pupils/result

--num_train_steps=50000

--num_eval_steps=2000

--alsologtostderr

(在这个阶段遇到的问题)

TypeError: can't pickle dict_values objects

Ans:

If you're using python3 , add list() to category_index.values() in model_lib.py about line 381 as this list(category_index.values()).

https://github.com/tensorflow/models/issues/4780

4.用官网工具转换成.pb 文件

Ckpt->.pb

Python object_detection/export_inference_graph.py

--input_type=image_tensor

--pipeline_config_path=/home/sy/data/pupils/data/ssd_mobilenet_v1_coco.config

--trained_checkpoint_prefix=/home/sy/data/pupils/result/model.ckpt-17402

--output_directory=/home/sy/data/pupils/result/log/pb_model

5. 替换官网demo中模型路径和label文件(需要下载github上的tensorflow,命令行安装的不包含example)

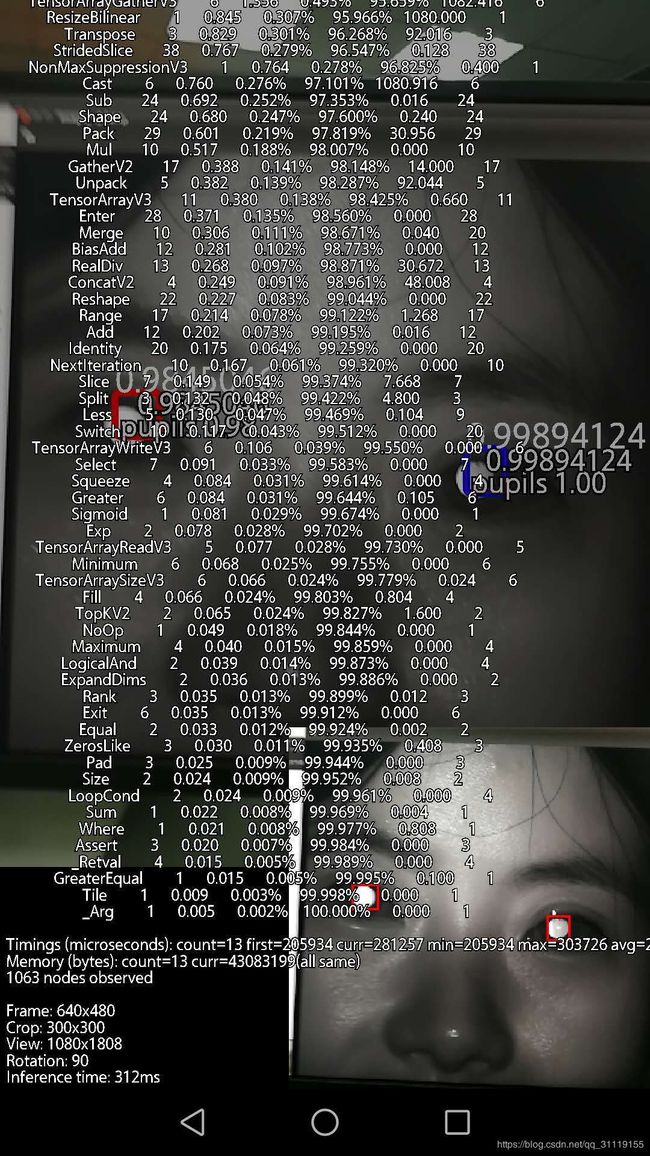

6.最后运行效果

当我启动截屏键出现了右图,很神奇有没有 很酷有没有 exceting(层,图片,时间什么都有了)