Perspective Transformation: Bird's-Eye View

Principles

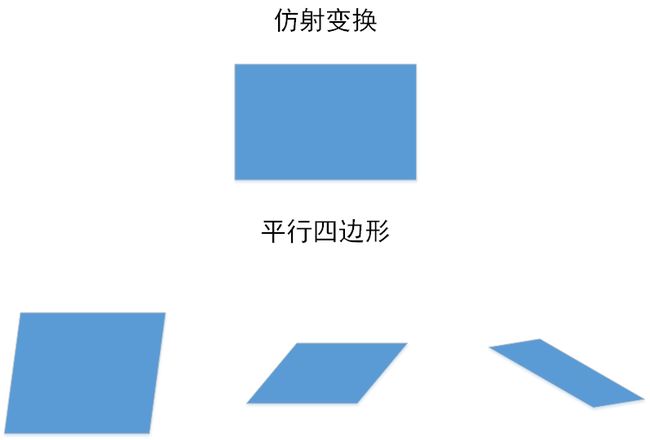

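Affine transformation

An affine transformation is a commonly used geometric image transformation. It maps a parallelogram ABCD to another parallelogram A′B′C′D′.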

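Its mathematical expression is

$$
x' = m_{11} x + m_{12} y + m_{13}, \qquad y' = m_{21} x + m_{22} y + m_{23}
$$

Let

$$
M = \begin{bmatrix} m_{11} & m_{12} & m_{13} \\ m_{21} & m_{22} & m_{23} \end{bmatrix}, \qquad \begin{bmatrix} x' \\ y' \end{bmatrix} = M \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
$$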

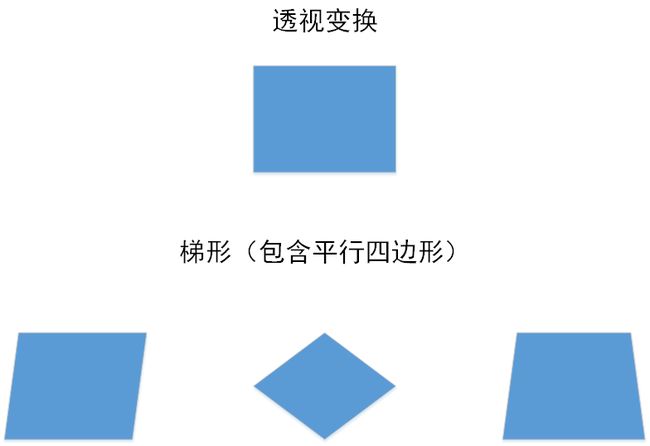
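As a minimal sketch of the affine mapping described above, the following applies a 2×3 matrix to a single point using only the C++ standard library. The type names `Point2` and `Affine2x3`, the function `applyAffine`, and the row-major element order m11, m12, m13, m21, m22, m23 are assumptions made for this illustration, not part of OpenCV.

```cpp
#include <array>

// Hypothetical helper type for this sketch: a 2D point.
struct Point2 {
    double x;
    double y;
};

// Hypothetical row-major 2x3 affine matrix: {m11, m12, m13, m21, m22, m23}.
using Affine2x3 = std::array<double, 6>;

// Computes x' = m11*x + m12*y + m13 and y' = m21*x + m22*y + m23.
Point2 applyAffine(const Affine2x3& M, const Point2& p) {
    return Point2{M[0] * p.x + M[1] * p.y + M[2],
                  M[3] * p.x + M[4] * p.y + M[5]};
}
```

Because the same linear part is applied to every point, parallel lines stay parallel, which is why a parallelogram always maps to a parallelogram.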

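Perspective transformation

A perspective transformation, also called a projective mapping, projects an image onto another plane, and the projection plane is not required to be parallel to the image plane. As a result, the projected image is in general no longer a parallelogram and may, for example, become a trapezoid.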

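Its mathematical expression is:

$$
\begin{bmatrix} \tilde{x} \\ \tilde{y} \\ w \end{bmatrix} = \begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
$$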

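Here the transformation matrix can be divided into four parts: $\begin{bmatrix} h_{11} & h_{12} \\ h_{21} & h_{22} \end{bmatrix}$ represents the linear transformation (for example scaling, shearing, and rotation); $\begin{bmatrix} h_{13} & h_{23} \end{bmatrix}^T$ represents translation; $\begin{bmatrix} h_{31} & h_{32} \end{bmatrix}$ produces the perspective effect; and $h_{33}$ is an overall scale factor.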

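The actual coordinates in the transformed image are given by the following system of equations:

$$
x' = \frac{h_{11} x + h_{12} y + h_{13}}{h_{31} x + h_{32} y + h_{33}}, \qquad y' = \frac{h_{21} x + h_{22} y + h_{23}}{h_{31} x + h_{32} y + h_{33}}
$$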
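The division by the third homogeneous coordinate can be sketched in a few lines of standard C++. The names `Point2`, `Homography`, and `applyHomography`, and the row-major storage order h11 through h33, are assumptions made for this illustration.

```cpp
#include <array>
#include <cassert>

// Hypothetical helper type for this sketch: a 2D point.
struct Point2 {
    double x;
    double y;
};

// Hypothetical row-major 3x3 matrix: {h11, h12, h13, h21, h22, h23, h31, h32, h33}.
using Homography = std::array<double, 9>;

// Maps (x, y) through H and divides by the third homogeneous coordinate.
Point2 applyHomography(const Homography& H, const Point2& p) {
    const double xs = H[0] * p.x + H[1] * p.y + H[2];
    const double ys = H[3] * p.x + H[4] * p.y + H[5];
    const double w  = H[6] * p.x + H[7] * p.y + H[8];
    assert(w != 0.0);  // a point with w == 0 is mapped to infinity
    return Point2{xs / w, ys / w};
}
```

With h31 = h32 = 0 the denominator is the constant h33, so the mapping reduces to an affine one scaled by 1/h33. Nonzero h31 or h32 makes the denominator depend on position, which is what turns a rectangle into a trapezoid.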

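Scaling the matrix by a nonzero constant does not change the mapping, so it has only 8 independent unknowns, and each point correspondence supplies two equations. It follows that a perspective transformation needs four pairs of corresponding points (no three of them collinear) to solve for its transformation matrix.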
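To make the counting argument concrete, here is a sketch that builds the 8×8 linear system from four point pairs and solves it by Gauss-Jordan elimination. It fixes h33 = 1, which is an assumption that fails when the true h33 is zero. The names `Point2`, `Homography`, and `solveHomography` are hypothetical, and this is not how OpenCV necessarily implements `getPerspectiveTransform`.

```cpp
#include <array>
#include <cmath>
#include <stdexcept>
#include <utility>

// Hypothetical helper types for this sketch.
struct Point2 {
    double x;
    double y;
};
using Homography = std::array<double, 9>;  // row-major h11..h33

// Solves for H with h33 fixed to 1 so that H maps src[i] to dst[i].
Homography solveHomography(const std::array<Point2, 4>& src,
                           const std::array<Point2, 4>& dst) {
    // Augmented 8x9 system for the unknowns (h11, h12, h13, h21, h22, h23, h31, h32).
    double a[8][9] = {};
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y;
        const double u = dst[i].x, v = dst[i].y;
        double* r0 = a[2 * i];      // h11*x + h12*y + h13 - h31*x*u - h32*y*u = u
        double* r1 = a[2 * i + 1];  // h21*x + h22*y + h23 - h31*x*v - h32*y*v = v
        r0[0] = x; r0[1] = y; r0[2] = 1.0; r0[6] = -x * u; r0[7] = -y * u; r0[8] = u;
        r1[3] = x; r1[4] = y; r1[5] = 1.0; r1[6] = -x * v; r1[7] = -y * v; r1[8] = v;
    }
    // Gauss-Jordan elimination with partial pivoting.
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r) {
            if (std::fabs(a[r][col]) > std::fabs(a[pivot][col])) pivot = r;
        }
        if (std::fabs(a[pivot][col]) < 1e-12) {
            throw std::runtime_error("degenerate point configuration");
        }
        for (int c = 0; c < 9; ++c) std::swap(a[col][c], a[pivot][c]);
        for (int r = 0; r < 8; ++r) {
            if (r == col) continue;
            const double f = a[r][col] / a[col][col];
            for (int c = col; c < 9; ++c) a[r][c] -= f * a[col][c];
        }
    }
    Homography H{};
    for (int i = 0; i < 8; ++i) H[i] = a[i][8] / a[i][i];
    H[8] = 1.0;
    return H;
}
```

Each pair contributes two rows, so four pairs give exactly the eight equations needed for the eight unknowns.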

OpenCV functions

Affine transformation

void cv::warpAffine(InputArray src, OutputArray dst, InputArray M, Size dsize, int flags = INTER_LINEAR, int borderMode = BORDER_CONSTANT, const Scalar& borderValue = Scalar())

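This function applies an affine transformation to an image.

Key parameters

M: the 2×3 transformation matrix

dsize: the size of the output image

flags: the interpolation method

The three main flag values are: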

| Flag | Meaning |
|---|---|
| INTER_NEAREST | nearest neighbor interpolation |
| INTER_LINEAR | bilinear interpolation |
| WARP_INVERSE_MAP | flag, inverse transformation |

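The third flag, WARP_INVERSE_MAP, indicates that M is already the inverse mapping (from destination coordinates to source coordinates) and is used as given. When the flag is not set, OpenCV first inverts M to obtain $M^{-1}$ and samples the source through that. Writing (u, v) for the result of applying M to (x, y):

Flag not set: dst(u,v) = src(x,y)

Flag set: dst(x,y) = src(u,v)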
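The inversion step mentioned for this flag can be sketched in plain C++. OpenCV provides `cv::invertAffineTransform` for this purpose; the version below is only an illustration of the arithmetic, and the names `Affine2x3` and `invertAffine` are hypothetical.

```cpp
#include <array>
#include <cmath>
#include <stdexcept>

// Hypothetical row-major 2x3 affine matrix: {m11, m12, m13, m21, m22, m23}.
using Affine2x3 = std::array<double, 6>;

// Returns the affine matrix that undoes M: if M maps p to q, the result maps q to p.
Affine2x3 invertAffine(const Affine2x3& M) {
    const double det = M[0] * M[4] - M[1] * M[3];
    if (std::fabs(det) < 1e-12) {
        throw std::invalid_argument("affine matrix is not invertible");
    }
    // Inverse of the 2x2 linear part.
    const double i11 =  M[4] / det;
    const double i12 = -M[1] / det;
    const double i21 = -M[3] / det;
    const double i22 =  M[0] / det;
    // New translation is minus the inverse linear part applied to the old translation.
    return Affine2x3{{i11, i12, -(i11 * M[2] + i12 * M[5]),
                      i21, i22, -(i21 * M[2] + i22 * M[5])}};
}
```

Warping by sampling the source for every destination pixel avoids holes in the output, which is why the inverse mapping is the one actually evaluated.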

Mat cv::getAffineTransform(const Point2f src[], const Point2f dst[])

Given three pairs of corresponding points, this function computes the corresponding affine transformation matrix.

Perspective transformation

void cv::warpPerspective(InputArray src, OutputArray dst, InputArray M, Size dsize, int flags = INTER_LINEAR, int borderMode = BORDER_CONSTANT, const Scalar& borderValue = Scalar())

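This function applies a perspective transformation to an image.

Its parameters have essentially the same meaning as those of warpAffine, except that M is a 3×3 matrix.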

Mat cv::getPerspectiveTransform(const Point2f src[], const Point2f dst[])

Given four pairs of corresponding points, this function computes the matrix needed for the perspective transformation.

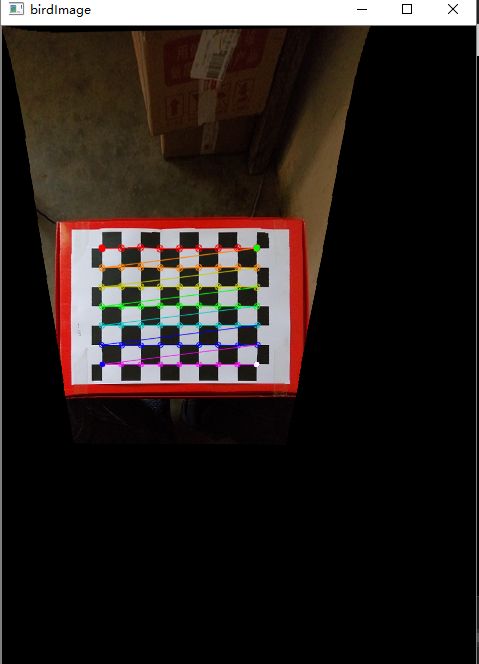

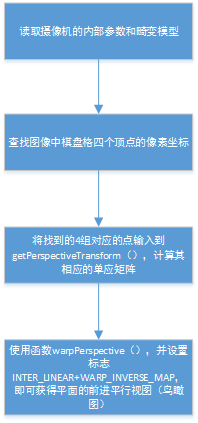

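Bird's-eye view

A common task in robot navigation is to convert the camera's view of the scene into a top-down view, that is, a bird's-eye view.

The basic flow of the algorithm is as follows.

The following OpenCV 3 code is adapted from the source code in the book Learning OpenCV: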

//@vs2013

//opencv 3.00

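// Performs the bird's-eye-view transformation, but there is a problem: not every image can be converted to a top-down view, and the cause is not yet fully understood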

//

#include …

M.at<float>(0, 0) = 1; M.at<float>(0, 1) = 0; M.at<float>(0, 2) = 0;

M.at<float>(1, 0) = 0; M.at<float>(1, 1) = 1; M.at<float>(1, 2) = 50;

cout << "M" << M << endl;

Mat bird = birdImage.clone();

warpAffine(bird, birdImage, M, bird.size());*/

/*warpPerspective(srcImage, birdImage, H, srcImage.size());*/

/*Mat tempBirdImage = birdImage.clone();

Mat M = Mat::ones(Size(3, 2), CV_32FC1);

M.at<float>(0, 2) = 100;

cout << M;

warpAffine(tempBirdImage, birdImage, M, tempBirdImage.size());*/

namedWindow("birdImage");

imshow("birdImage", birdImage);

imshow("The undistort image", srcImage);

waitKey(0);

return 0;

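}

Notes

This program has a problem: for some images the resulting bird's-eye view is not large enough, even after adjusting the h33 parameter appropriately.

For example:

Undistorted image

Bird's-eye view (obtained with h33 = 1)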
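The source does not establish the cause. One thing that can be checked is where the four image corners land after the transformation, since anything outside the output canvas passed as dsize is cut off. The sketch below computes that bounding box in plain C++. It assumes H maps source pixels to output pixels (the direction used when WARP_INVERSE_MAP is not set) and that the third homogeneous coordinate is nonzero and of the same sign at all four corners. The names `Point2`, `Homography`, `Bounds`, and `warpedBounds` are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>

// Hypothetical helper types for this sketch.
struct Point2 {
    double x;
    double y;
};
using Homography = std::array<double, 9>;  // row-major h11..h33
struct Bounds {
    double minX;
    double minY;
    double maxX;
    double maxY;
};

// Projects the corners of a width x height image through H and returns their bounding box.
Bounds warpedBounds(const Homography& H, double width, double height) {
    const std::array<Point2, 4> corners = {{
        {0.0, 0.0}, {width, 0.0}, {width, height}, {0.0, height}}};
    Bounds b{0.0, 0.0, 0.0, 0.0};
    for (std::size_t i = 0; i < corners.size(); ++i) {
        const Point2& p = corners[i];
        const double w = H[6] * p.x + H[7] * p.y + H[8];  // assumed nonzero
        const double u = (H[0] * p.x + H[1] * p.y + H[2]) / w;
        const double v = (H[3] * p.x + H[4] * p.y + H[5]) / w;
        if (i == 0) {
            b = Bounds{u, v, u, v};
            continue;
        }
        b.minX = std::min(b.minX, u);
        b.minY = std::min(b.minY, v);
        b.maxX = std::max(b.maxX, u);
        b.maxY = std::max(b.maxY, v);
    }
    return b;
}
```

If the box extends to negative coordinates or beyond the chosen output size, a translation or a larger dsize may be worth trying. This is only a diagnostic idea, not a confirmed fix for the problem described above.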