Linux安装Hadoop集群(CentOS 7.4+hadoop-2.8.0)

引言

公司服务器安装Hadoop集群(CentOS 7.4+hadoop-2.8.0)

配置3台linux机器实现ssh免密登陆

机器环境

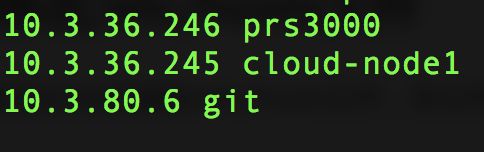

| ip | hostname | 系统 | 类型 |

|---|---|---|---|

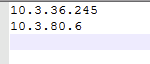

| 10.3.36.246 | prs3000 | CentOS 7.4 | master |

| 10.3.36.245 | cloud-node1 | CentOS 7.4 | slave |

| 10.3.80.6 | git | CentOS 7.4 | slave |

修改/etc/hosts文件

vi /etc/hosts

设置好hosts后,在1台机器上执行以下命令看能否ping通另外2台机器,以prs3000机器为例

ping -c 3 cloud-node1

ping -c 3 git

给3个机器生成秘钥文件

以prs3000机器为例,输入命令如下:

ssh-keygen -t rsa -P ''

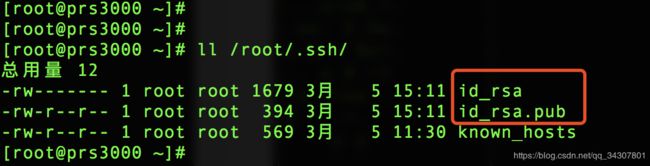

查看生成的秘钥

ll /root/.ssh/

使用同样的方法为cloud-node1和git生成秘钥(命令完全相同,不用做如何修改)。

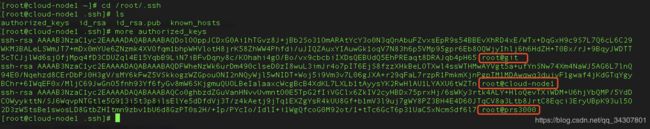

在master节点上创建authorized_keys文件

使用ssh-copy-id命令把公钥拷贝到master节点

在cloud-node1机器上执行:

ssh-copy-id -i ~/.ssh/id_rsa.pub [email protected]

在git机器上执行

ssh-copy-id -i ~/.ssh/id_rsa.pub [email protected]

在prs3000机器上执行

ssh-copy-id -i ~/.ssh/id_rsa.pub [email protected]

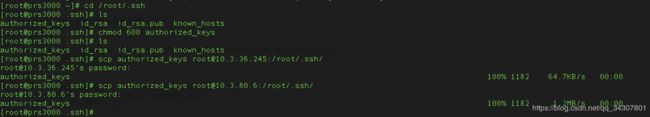

这时可以看到prs3000机器上/root/.ssh目录下生成了authorized_keys文件,赋予权限

chmod 600 authorized_keys

将authorized_keys文件复制到其他机器

把prs3000机器上authorized_keys文件拷贝到另外两台机器,scp命令拷贝

命令:

scp authorized_keys [email protected]:/root/.ssh/

scp authorized_keys [email protected]:/root/.ssh/

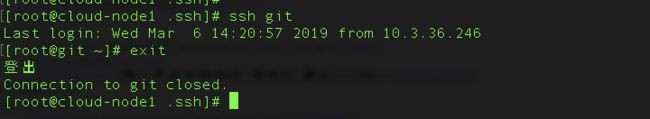

测试使用ssh进行无密码登录

试验免密ssh登陆,第一次登陆要输入密码,cloud-node1配置一样

每次ssh完成后,都要执行exit,否则你的后续命令是在另外一台机器上执行的。同理在cloud-node1和git上试验登陆

安装jdk和hadoop

下载hadoop

下载地址:

http://archive.apache.org/dist/hadoop/common/hadoop-2.8.0/

hadoop安装

安装文件拷贝和解压

把hadoop-2.8.0.tar.gz分别拷贝到prs3000,cloud-node1,git机器的/opt/hadoop目录(hadoop目录需要创建)并解压。

执行解压缩命令,以下操作3台机器一样

cd /opt/hadoop

tar -xzvf hadoop-2.8.0.tar.gz

创建文件夹

在/root目录下新建几个目录,复制粘贴执行下面的命令:

mkdir /root/hadoop

mkdir /root/hadoop/tmp

mkdir /root/hadoop/var

mkdir /root/hadoop/dfs

mkdir /root/hadoop/dfs/name

mkdir /root/hadoop/dfs/data

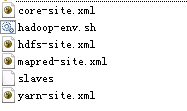

修改etc/hadoop中的一系列配置文件

需要修改的文件路径在/opt/hadoop/hadoop-2.8.0/etc/hadoop/

修改文件列表如下:

修改后文件拷贝进3台机器/opt/hadoop/hadoop-2.8.0/etc/hadoop/,注意3台机器java安装路径,在hadoop-env.sh中需要注意

core-site.xml

hadoop.tmp.dir

/root/hadoop/tmp

Abase for other temporary directories.

fs.default.name

hdfs://10.3.36.246:9000

hadoop-env.sh

hdfs-site.xml

dfs.name.dir

/root/hadoop/dfs/name

Path on the local filesystem where theNameNode stores the namespace and transactions logs persistently.

dfs.data.dir

/root/hadoop/dfs/data

Comma separated list of paths on the localfilesystem of a DataNode where it should store its blocks.

dfs.replication

2

dfs.permissions

false

need not permissions

mapred-site.xml

mapred.job.tracker

10.3.36.246:9001

mapred.local.dir

/root/hadoop/var

mapreduce.framework.name

yarn

slaves

yarn-site.xml

最重要的一个文件,我把地址直接配置进yarn

yarn.resourcemanager.hostname

prs3000

The address of the applications manager interface in the RM.

yarn.resourcemanager.address

10.3.36.246:8032

The address of the scheduler interface.

yarn.resourcemanager.scheduler.address

10.3.36.246:8030

The http address of the RM web application.

yarn.resourcemanager.webapp.address

10.3.36.246:8088

The https adddress of the RM web application.

yarn.resourcemanager.webapp.https.address

10.3.36.246:8090

yarn.resourcemanager.resource-tracker.address

10.3.36.246:8031

The address of the RM admin interface.

yarn.resourcemanager.admin.address

10.3.36.246:8033

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.scheduler.maximum-allocation-mb

4096

每个节点可用内存,单位MB,默认8182MB

yarn.nodemanager.vmem-pmem-ratio

2.1

yarn.nodemanager.resource.memory-mb

4096

yarn.nodemanager.vmem-check-enabled

false

启动hadoop

在namenode上执行格式化

在prs3000进入/opt/hadoop/hadoop-2.8.0/bin,执行格式化脚本

./hadoop namenode -format

格式化成功后,可以在看到在/root/hadoop/dfs/name/目录多了一个current目录,而且该目录内有一系列文件

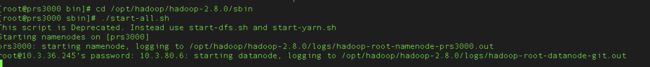

在namenode上执行启动命令

cd /opt/hadoop/hadoop-2.8.0/sbin

./start-all.sh

接着启动yarn

./start-yarn.sh

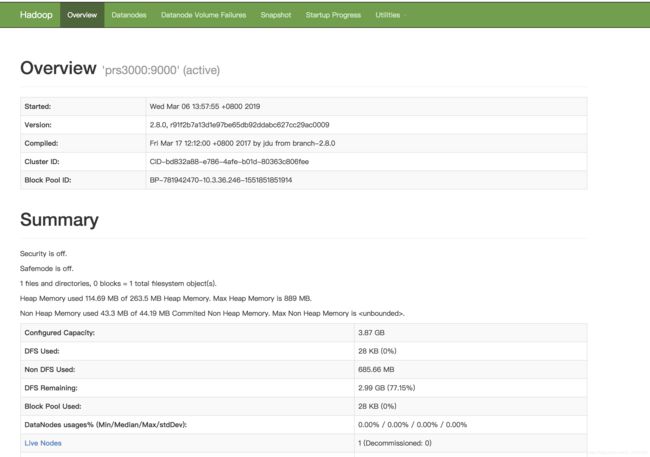

访问http://10.3.36.246:50070,跳转到了overview页面

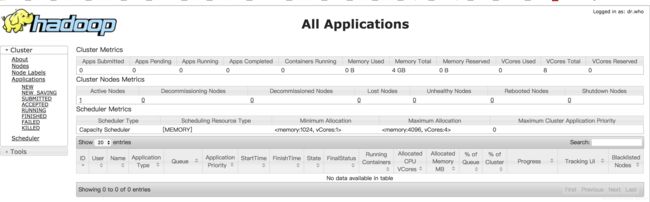

访问http://10.3.36.246:8088,集群页面

注意3台机器关闭防火墙

systemctl stop firewalld.service

感谢

参考了以下资料,对各位表示感谢

https://blog.csdn.net/pucao_cug/article/details/71698903

https://www.cnblogs.com/K-artorias/p/7144904.html

https://www.cnblogs.com/ivan0626/p/4144277.html

https://ask.helplib.com/hadoop/post_5408454