python爬虫之虎扑步行街主题帖

前言

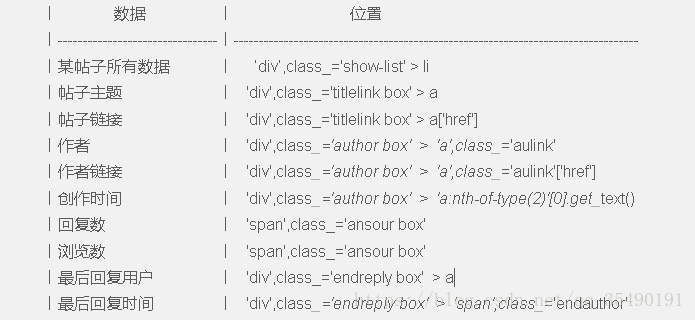

python爬虫的盛行让数据变得不在是哪么的难以获取。现在呢,我们可以根据我们的需求去寻找我们需要的数据,我们下来就利用python来写一个虎扑步行街主题帖的基本信息,主要包括:帖子主题(title)、帖子链接(post_link)、作者(author)、作者链接(author_link)、创作时间(start_date)、回复数(reply)、浏览数(view)、最后回复用户(last_reply)和最后回复时间(date_time)。接下来我们需要把获取到的信息存到数据库里面进行保存,以便于我们来使用。

import requests

from bs4 import BeautifulSoup

import datetime

#获取网站HTML数据

def get_page(link):

headers = {'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64;x64;rv:59.0) Gecko/20100101 Firefox/59.0'}

r = requests.get(link,headers=headers)

html = r.content

html = html.decode('UTF-8')

soup = BeautifulSoup(html,'lxml')

return soup

#对返回的HTML信息进行处理的到虎扑步行街list信息

def get_data(post_list):

data_list=[]

for post in post_list:

title_id = post.find('div',class_='titlelink box')

title = title_id.a.text.strip()

post_link = title_id.a['href']

post_link = 'https://bbs.hupu.com'+post_link

#作者、作者链接、创建时间

author_div = post.find('div',class_='author box')

author = author_div.find('a',class_='aulink').text.strip()

author_page = author_div.find('a',class_='aulink')['href']

start_data = author_div.select('a:nth-of-type(2)')[0].get_text()

#回复人数和浏览次数

reply_view = post.find('span',class_='ansour box').text.strip()

reply = reply_view.split('/')[0].strip()

view = reply_view.split('/')[1].strip()

#最后回复用户和最后回复时间

reply_div = post.find('div',class_='endreply box')

reply_time = reply_div.a.text.strip()

last_reply = reply_div.find('span',class_='endauthor').text.strip()

if ':' in reply_time:

date_time = str(datetime.date.today())+' '+ reply_time

date_time = datetime.datetime.strptime(date_time,'%Y-%m-%d %H:%M')

else:

date_time = datetime.datetime.strptime(reply_time,'%Y-%m-%d').date()

data_list.append([title,post_link,author,author_page,start_data,reply,view,last_reply,date_time])

return data_list

link = 'http://bbs.hupu.com/bxj-'+str(i)

print('开始第%s页数据爬取...' %i)

soup = get_page(link)

soup = soup.find('div',class_='show-list')

post_list = soup.find_all('li')

data_list = get_data(post_list)

hupu_post = MysqlAPI('localhost','root','mysql','scraping','utf8')

for each in data_list:

print(each)

print('第%s页爬取完成!' %i)

time.sleep(3)这段代码完成的是对我们分析获取到数据之后进行打印输出到控制台

3.存入到MySQL数据库中

首先我们需要进行数据库处理进行类的封装。之后数据库的连接需要我们提供数据库ip地址、用户名、密码、数据库名和字符型;其次,编写插入数据库的sql语句以及需要传入参数的设置。

import MySQLdb

class MysqlAPI(object):

def __init__(self,db_ip,db_name,db_password,table_name,db_charset):

self.db_ip = db_ip

self.db_name = db_name

self.db_password = db_password

self.table_name = table_name

self.db_charset = db_charset

self.conn = MySQLdb.connect(host=self.db_ip,user=self.db_name,

password=self.db_password,db=self.table_name,charset=self.db_charset)

self.cur = self.conn.cursor()

def add(self,title,post_link,author,author_page,start_data,reply,view,last_reply,date_time):

sql = "INSERT INTO hupu(title,post_link,author,author_page,start_data,reply,view,last_reply,date_time)"\

"VALUES (\'%s\',\'%s\',\'%s\',\'%s\',\'%s\',\'%s\',\'%s\',\'%s\',\'%s\')"

self.cur.execute(sql %(title,post_link,author,author_page,start_data,reply,view,last_reply,date_time))

self.conn.commit()*注意:在执行完sql语句之后必须需要self.conn,commit(),不然就会出现存不进去数据问题,这个问题困扰了我好久。在sql语句的编写中我们很容易编写错误,所以用的引号劲量用转义字符进行转义,这样防止出现一些不必要的错误。

附录:

import requests

from bs4 import BeautifulSoup

import datetime

import MySQLdb

import time

def get_page(link):

headers = {'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64;x64;rv:59.0) Gecko/20100101 Firefox/59.0'}

r = requests.get(link,headers=headers)

html = r.content

html = html.decode('UTF-8')

soup = BeautifulSoup(html,'lxml')

return soup

def get_data(post_list):

data_list=[]

for post in post_list:

title_id = post.find('div',class_='titlelink box')

title = title_id.a.text.strip()

post_link = title_id.a['href']

post_link = 'https://bbs.hupu.com'+post_link

#作者、作者链接、创建时间

author_div = post.find('div',class_='author box')

author = author_div.find('a',class_='aulink').text.strip()

author_page = author_div.find('a',class_='aulink')['href']

start_data = author_div.select('a:nth-of-type(2)')[0].get_text()

#回复人数和浏览次数

reply_view = post.find('span',class_='ansour box').text.strip()

reply = reply_view.split('/')[0].strip()

view = reply_view.split('/')[1].strip()

#最后回复用户和最后回复时间

reply_div = post.find('div',class_='endreply box')

reply_time = reply_div.a.text.strip()

last_reply = reply_div.find('span',class_='endauthor').text.strip()

if ':' in reply_time:

date_time = str(datetime.date.today())+' '+ reply_time

date_time = datetime.datetime.strptime(date_time,'%Y-%m-%d %H:%M')

else:

date_time = datetime.datetime.strptime(reply_time,'%Y-%m-%d').date()

data_list.append([title,post_link,author,author_page,start_data,reply,view,last_reply,date_time])

return data_list

class MysqlAPI(object):

def __init__(self,db_ip,db_name,db_password,table_name,db_charset):

self.db_ip = db_ip

self.db_name = db_name

self.db_password = db_password

self.table_name = table_name

self.db_charset = db_charset

self.conn = MySQLdb.connect(host=self.db_ip,user=self.db_name,

password=self.db_password,db=self.table_name,charset=self.db_charset)

self.cur = self.conn.cursor()

def add(self,title,post_link,author,author_page,start_data,reply,view,last_reply,date_time):

sql = "INSERT INTO hupu(title,post_link,author,author_page,start_data,reply,view,last_reply,date_time)"\

"VALUES (\'%s\',\'%s\',\'%s\',\'%s\',\'%s\',\'%s\',\'%s\',\'%s\',\'%s\')"

self.cur.execute(sql %(title,post_link,author,author_page,start_data,reply,view,last_reply,date_time))

self.conn.commit()

for i in range(1,3):

link = 'http://bbs.hupu.com/bxj-'+str(i)

print('开始第%s页数据爬取...' %i)

soup = get_page(link)

soup = soup.find('div',class_='show-list')

post_list = soup.find_all('li')

data_list = get_data(post_list)

hupu_post = MysqlAPI('localhost','root','mysql','scraping','utf8')

for each in data_list:

hupu_post.add(each[0],each[1],each[2],each[3],each[4],each[5],each[6],each[7],each[8])

print('第%s页爬取完成!' %i)

time.sleep(3)