Ceph Cluster Deployment Guide (4 Nodes)


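I. Background

Ceph is a distributed storage system that provides object, block, and file storage; its object and block storage integrate well with the major cloud platforms. A Ceph cluster consists of Monitor nodes, MDS nodes (optional, needed only for file storage), and at least two OSD daemons.

Ceph OSD: The OSD daemon stores data and handles data replication, recovery, backfilling, and rebalancing. It also reports some monitoring information to the Monitors through heartbeats. A Ceph cluster needs at least two OSD daemons.

Monitor: Maintains the maps that describe the cluster state, including the monitor map, the OSD map, and the Placement Group (PG) map. It also keeps a history of state changes for the Monitors, OSDs, and PGs.

MDS: Stores the metadata of the Ceph file system.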

II. Environment Planning

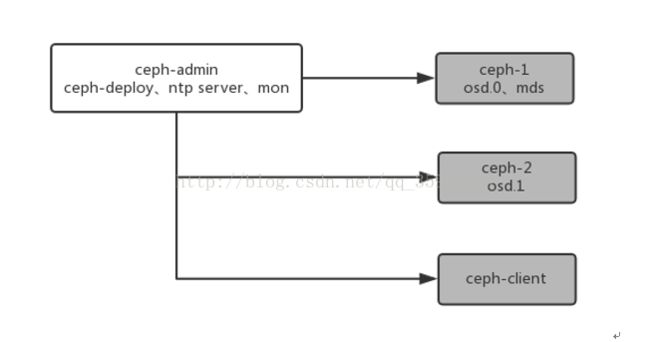

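1. Four CentOS 7 hosts. ceph-admin is the admin node and the monitor node. ceph-1 and ceph-2 are OSD nodes, each with three 2 TB disks (sdb, sdc, and sdd). ceph-client is the client, used later for storage tests. All nodes run CentOS 7.

2. The cluster is laid out as follows: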

| Host | IP | Role |
| --- | --- | --- |
| ceph-admin | 192.168.56.100 | ceph-deploy, mon, NTP server |
| ceph-1 | 192.168.56.101 | osd.0, mds |
| ceph-2 | 192.168.56.102 | osd.1 |
| ceph-client | 192.168.56.103 | Client; mounts the storage provided by the Ceph cluster for testing |

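3. Node relationship diagram

III. Preparation Before Installing the ceph-deploy Tool

All commands are run as the root user on every node.

1. Set the hostname on each node (ceph-admin, ceph-1, ceph-2, or ceph-client as appropriate), then reboot.

# hostnamectl set-hostname ceph-admin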

2. Open the required firewall ports

Run the following commands on every node:

# firewall-cmd --zone=public --add-port=6789/tcp --permanent

# firewall-cmd --zone=public --add-port=6800-7100/tcp --permanent

# firewall-cmd --reload

# firewall-cmd --zone=public --list-all
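The firewall step follows a fixed pattern: one permanent rule per port or port range, then a reload so the rules take effect. A minimal sketch of that pattern is shown here; the function name `firewall_cmds` is made up for illustration, and it only prints the commands so they can be reviewed before being run.

```shell
# Print the firewall-cmd invocations for a list of TCP ports or port ranges.
# Nothing is executed here; the output can be reviewed and then piped to sh.
firewall_cmds() {
    for port in "$@"; do
        echo "firewall-cmd --zone=public --add-port=${port}/tcp --permanent"
    done
    # Permanent rules only become active after a reload.
    echo "firewall-cmd --reload"
}

# Ports used in this guide: 6789 (monitor) and the 6800-7100 range.
firewall_cmds 6789 6800-7100
```

To apply the rules on a node, the output could be piped to a shell, for example `firewall_cmds 6789 6800-7100 | sh`.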

3. Disable SELinux

Run the following commands on every node:

# setenforce 0

# vim /etc/selinux/config

Set SELINUX to disabled.
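When the same change has to be made on four nodes, editing the file by hand is easy to get wrong. The sketch below makes the edit non-interactively; `set_selinux_disabled` is a hypothetical helper name, and the file path is passed as a parameter so the function can be tried on a copy first.

```shell
# Set SELINUX=disabled in the given config file without opening an editor.
set_selinux_disabled() {
    conf="$1"
    # Rewrite only the SELINUX= line; SELINUXTYPE= and comments are untouched.
    # The result is written back with cat so the original file is kept in place.
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$conf" > "$conf.tmp" &&
        cat "$conf.tmp" > "$conf" &&
        rm -f "$conf.tmp"
}
```

On a real node this would be called as `set_selinux_disabled /etc/selinux/config`.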

4. Configure the hosts file on the ceph-admin node

# vi /etc/hosts

192.168.56.100 ceph-admin

192.168.56.101 ceph-1

192.168.56.102 ceph-2

192.168.56.103 ceph-client
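Because the four addresses are consecutive, the entries above can also be generated rather than typed. This is only a sketch: `hosts_entries` is a hypothetical helper, and it assumes the nodes are numbered upward from a single starting address, as in the environment plan.

```shell
# Print /etc/hosts entries for consecutive addresses.
# Arguments: network prefix, first host octet, then the hostnames in order.
hosts_entries() {
    prefix="$1"
    octet="$2"
    shift 2
    for name in "$@"; do
        echo "${prefix}.${octet} ${name}"
        octet=$((octet + 1))
    done
}

hosts_entries 192.168.56 100 ceph-admin ceph-1 ceph-2 ceph-client
```

Redirecting the output with `>> /etc/hosts` would append the entries to the hosts file.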

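IV. Configure Passwordless Login from the ceph-admin Deployment Node to Every Ceph Node

1. Install an SSH server on every node

Run the following command on every node:

# yum install openssh-server -y

2. Generate an SSH key pair on the ceph-admin node for passwordless SSH access to every Ceph node

# ssh-keygen

3. Copy the ceph-admin node's public key to every Ceph node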

# ssh-copy-id root@ceph-1

# ssh-copy-id root@ceph-2

# ssh-copy-id root@ceph-client

4. Check that each node can be reached without a password

# ssh root@ceph-1

# ssh root@ceph-2

# ssh root@ceph-client

5. Edit ~/.ssh/config on the ceph-admin node

# vi ~/.ssh/config

Host ceph-admin

Hostname ceph-admin

User root

Host ceph-1

Hostname ceph-1

User root

Host ceph-2

Hostname ceph-2

User root

Host ceph-client

Hostname ceph-client

User root
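The four stanzas above differ only in the host name, so they can be produced by a small loop. A sketch, with `ssh_config_stanzas` as a made-up function name; it assumes every node is reached as root under its own hostname, as in this guide.

```shell
# Print one ssh config stanza per host name given as an argument.
ssh_config_stanzas() {
    for host in "$@"; do
        printf 'Host %s\n    Hostname %s\n    User root\n\n' "$host" "$host"
    done
}

ssh_config_stanzas ceph-admin ceph-1 ceph-2 ceph-client
```

The output could be appended to the configuration with `>> ~/.ssh/config`.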

V. Configure the yum Repositories and Install Ceph

Run the following commands on every node (ceph-admin, ceph-1, ceph-2, and ceph-client):

# yum clean all

# rm -rf /etc/yum.repos.d/*.repo

# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

# sed -i '/aliyuncs/d' /etc/yum.repos.d/CentOS-Base.repo

# sed -i '/aliyuncs/d' /etc/yum.repos.d/epel.repo

# sed -i 's/$releasever/7/g' /etc/yum.repos.d/CentOS-Base.repo

1. Add the Ceph repository

# vim /etc/yum.repos.d/ceph.repo

Add the following content:

[ceph]

name=ceph

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/x86_64/

gpgcheck=0

[ceph-noarch]

name=cephnoarch

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch/

gpgcheck=0

2. Install the Ceph packages

# yum makecache

# yum install ceph ceph-radosgw rdate -y

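VI. Configure NTP

The NTP server runs on the ceph-admin node, and the other three nodes (ceph-1, ceph-2, and ceph-client) are NTP clients. Having every node follow one local time source keeps the cluster clocks in sync.

1. On the ceph-admin node

a. Edit /etc/ntp.conf: comment out the four default server lines and add the three configuration lines shown below: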

# vim /etc/ntp.conf

###comment following lines:

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

###add following lines:

server 127.127.1.0 minpoll 4

fudge 127.127.1.0 stratum 0

# Set the following line according to the clients' IP range.

restrict 192.168.56.0 mask 255.255.255.0 nomodify notrap

b. Edit /etc/ntp/step-tickers as follows:

# vim /etc/ntp/step-tickers

# List of NTP servers used by the ntpdate service.

# 0.centos.pool.ntp.org

127.127.1.0

c. Restart the ntp service and check that the server is running properly. It is working when the last line of the ntpq -p output starts with *:

# systemctl enable ntpd

# systemctl restart ntpd

# ntpq -p

remote refid st t when poll reach delay offset jitter

*LOCAL(0) .LOCL. 0 l - 16 1 0.000 0.000 0.000

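The NTP server is now configured. Next, configure the clients.

2. On the ceph-1, ceph-2, and ceph-client nodes

a. Edit /etc/ntp.conf: comment out the four server lines and add one server line that points to ceph-admin:

# vim /etc/ntp.conf

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server 192.168.56.100

b. Restart the ntp service and check that the client has connected to the server. As before, the connection is good when the last line of the ntpq -p output starts with *: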

# systemctl enable ntpd

# systemctl restart ntpd

# ntpq -p

remote refid st t when poll reach delay offset jitter

*ceph-admin .LOCL. 1 u 1 64 1 0.329 0.023 0.000
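The check described above, a peer line that starts with `*`, can be scripted so it does not have to be read by eye on every node. A minimal sketch; `ntp_has_selected_peer` is a hypothetical name, and the function reads `ntpq -p` output from standard input.

```shell
# Succeed when the ntpq -p output on stdin contains a selected peer,
# that is, a line that starts with an asterisk.
ntp_has_selected_peer() {
    grep -q '^\*'
}
```

Typical use would be `ntpq -p | ntp_has_selected_peer && echo "time source selected"`. A freshly restarted ntpd may need some time before a peer is marked with `*`.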

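VII. Deploy Ceph

Install ceph-deploy on the deployment node (ceph-admin). From here on, "deployment node" always means ceph-admin.

1. On the ceph-admin node, install the Ceph deployment tool and check the versions

# yum -y install ceph-deploy

# ceph-deploy --version

# ceph -v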

2. Create the deployment directory on the deployment node (ceph-admin)

# mkdir cluster

# cd cluster

3. Create a cluster with ceph-admin as the monitor node

# ceph-deploy new ceph-admin

4. When the command finishes, list the directory contents

# ls

ceph.conf ceph-deploy-ceph.log ceph.mon.keyring

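5. Edit the Ceph configuration file on the admin node (ceph-admin) and add the following setting to ceph.conf

# vim ceph.conf

osd pool default size = 2  # set according to the actual number of OSDs; 2 here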
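The same setting can be added without an editor. The sketch below appends it only if it is not already present; `set_pool_default_size` is a made-up helper, and it assumes the ceph.conf written by ceph-deploy contains only a `[global]` section, so that a line appended at the end of the file lands in that section.

```shell
# Add "osd pool default size" to a ceph.conf unless it is already set.
# Assumption: the file has only a [global] section.
set_pool_default_size() {
    conf="$1"
    size="$2"
    # Ceph accepts spaces or underscores in option names, so match both.
    if grep -q '^[[:space:]]*osd[ _]pool[ _]default[ _]size' "$conf"; then
        echo "osd pool default size is already set in $conf" >&2
        return 0
    fi
    echo "osd pool default size = ${size}" >> "$conf"
}
```

From the deployment directory this would be called as `set_pool_default_size ceph.conf 2`.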

6. Initialize the mon node and gather the keyrings

# ceph-deploy mon create-initial

…….

…….

# ls

ceph.bootstrap-mds.keyring ceph.bootstrap-rgw.keyring ceph.conf ceph.mon.keyring

ceph.bootstrap-osd.keyring ceph.client.admin.keyring ceph-deploy-ceph.log

7. Push the configuration file and keyring from ceph-admin to the other nodes:

# ceph-deploy admin ceph-admin ceph-1 ceph-2 ceph-client

8. Check the cluster status

# ceph -s

cluster 19f4be8e-20ef-4ebb-964c-7c31e8df0059

health HEALTH_ERR

monmap e1: 1 mons at {ceph-admin=192.168.56.100:6789/0}

election epoch 4, quorum 0,1,2 ceph-1,ceph-2,ceph-client

osdmap e37: 0 osds: 0 up, 0 in

pgmap v499: 64 pgs, 1 pools, 606 MB data, 160 objects

6950 MB used, 8030 MB / 15805 MB avail

64 creating

9. Deploy the OSDs

# ceph-deploy --overwrite-conf osd prepare ceph-1:/dev/sdb ceph-1:/dev/sdc ceph-1:/dev/sdd ceph-2:/dev/sdb ceph-2:/dev/sdc ceph-2:/dev/sdd --zap-disk

# ceph-deploy --overwrite-conf osd activate ceph-1:/dev/sdb ceph-1:/dev/sdc ceph-1:/dev/sdd ceph-2:/dev/sdb ceph-2:/dev/sdc ceph-2:/dev/sdd
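The host:disk list passed to ceph-deploy is long and easy to mistype. As a sketch, it can be built from the OSD hosts and disk names in the environment plan (ceph-1 and ceph-2, each with sdb, sdc, and sdd); `osd_targets` is a hypothetical helper name.

```shell
# Build the space-separated host:/dev/disk list for ceph-deploy.
# Arguments: a quoted list of hosts and a quoted list of disk names.
osd_targets() {
    hosts="$1"
    disks="$2"
    targets=""
    for host in $hosts; do
        for disk in $disks; do
            # Add a separating space only when the list is not empty.
            targets="${targets}${targets:+ }${host}:/dev/${disk}"
        done
    done
    echo "$targets"
}

osd_targets "ceph-1 ceph-2" "sdb sdc sdd"
```

The result could then be substituted into the command, for example `ceph-deploy --overwrite-conf osd prepare $(osd_targets "ceph-1 ceph-2" "sdb sdc sdd") --zap-disk`.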

10. Check the cluster status

# ceph -s

cluster 19f4be8e-20ef-4ebb-964c-7c31e8df0059

health HEALTH_WARN

monmap e1: 1 mons at {ceph-admin=192.168.56.100:6789/0}

election epoch 4, quorum 0,1,2 ceph-1,ceph-2,ceph-client

osdmap e37: 6 osds: 6 up, 6 in

pgmap v499: 64 pgs, 1 pools, 606 MB data, 160 objects

6950 MB used, 8030 MB / 15805 MB avail

64 active+clean
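When the status is checked repeatedly during deployment, only the health line usually matters. The sketch below pulls that value out of `ceph -s` output read from standard input; `cluster_health` is a made-up name. The `ceph health` command prints the same value directly, so this helper is mainly useful on saved output.

```shell
# Print the HEALTH_* value from "ceph -s" output read on stdin.
# Both "health HEALTH_OK" and "health: HEALTH_OK" layouts are accepted.
cluster_health() {
    awk '$1 == "health" || $1 == "health:" { print $2; exit }'
}
```

For example, `ceph -s | cluster_health` would print `HEALTH_WARN` for the status shown above.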

11. Increase the number of PGs in the rbd pool to clear the warning

# ceph osd pool set rbd pg_num 128

# ceph osd pool set rbd pgp_num 128

# ceph -s

cluster 19f4be8e-20ef-4ebb-964c-7c31e8df0059

health HEALTH_OK

monmap e1: 1 mons at {ceph-admin=192.168.56.100:6789/0}

election epoch 4, quorum 0,1,2 ceph-1,ceph-2,ceph-client

osdmap e37: 6 osds: 6 up, 6 in

pgmap v499: 64 pgs, 1 pools, 606 MB data, 160 objects

6950 MB used, 8030 MB / 15805 MB avail

128 active+clean
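This guide does not say how the value 128 was chosen. One common rule of thumb, which is an assumption here and not taken from this guide, is roughly 100 PGs per OSD divided by the replica count, rounded up to the next power of two. A sketch of that calculation, with `suggest_pg_num` as a hypothetical helper:

```shell
# Suggest a pg_num from the rule of thumb described above.
# Arguments: number of OSDs, replica count (osd pool default size).
suggest_pg_num() {
    osds="$1"
    replicas="$2"
    # Ceiling of (osds * 100) / replicas using integer arithmetic.
    target=$(( (osds * 100 + replicas - 1) / replicas ))
    pg=1
    # Round up to the next power of two.
    while [ "$pg" -lt "$target" ]; do
        pg=$((pg * 2))
    done
    echo "$pg"
}

suggest_pg_num 2 2
```

With two OSDs and two replicas the rule gives 128. Larger OSD counts give larger values, so treat the result as a starting point and check it against the Ceph documentation for the release in use.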

12. Add a metadata server

# ceph-deploy mds create ceph-1

13. Check the cluster status again

# ceph -s

cluster 19f4be8e-20ef-4ebb-964c-7c31e8df0059

health HEALTH_OK

monmap e1: 1 mons at {ceph-admin=192.168.56.100:6789/0}

election epoch 4, quorum 0,1,2 ceph-1,ceph-2,ceph-3

mdsmap e4: 1/1/1 up {0=ceph-1=up:active}

osdmap e37: 6 osds: 6 up, 6 in

pgmap v499: 64 pgs, 1 pools, 606 MB data, 160 objects

6950 MB used, 8030 MB / 15805 MB avail

128 active+clean

VIII. Verification

Use Ceph block storage to verify that the cluster works.

1. From ceph-admin, install Ceph on the client node

# ceph-deploy install ceph-client

2. Create a block device image on ceph-client

# rbd create test --size 4096 --image-format 2 --image-feature layering

3. List the created images

# rbd ls

4. Map the block device provided by Ceph on ceph-client

# rbd map test --pool rbd --name client.admin

5. Create a file system

# mkfs.xfs /dev/rbd/rbd/test

6. Mount the file system

# mkdir /test

# mount /dev/rbd/rbd/test /test

7. Test with dd

# cd /test

# dd if=/dev/zero of=ceshi bs=1M count=10

8. On any OSD node, check whether data is being written

# ceph -w