Basic information

Controller node

|--server_name:ops-ctr

|--manage_ip:192.168.122.11

|--external_ip:192.168.0.111

|--service

|--NTP

|--memcached

|--port:11211

|--etcd

|--port:2379/2380

|--placement

|--service_name:placement

|--service_user:placement

|--db_name:placement

|--db_user:plcm_db

|--port:8778

|--keystone

|--service_name:keystone

|--service_user:keystone

|--db_name:keystone

|--db_user:kst_db

|--port:5000

|--glance

|--service_name:glance

|--service_user:glance

|--db_name:glance

|--db_user:glc_db

|--port:9292

|--nova

|--service_name:nova

|--service_user:nova

|--db_name:nova/nova_api/nova_cell0

|--db_user:nva_db

|--port:8774/6080


|--neutron

|--service_name:neutron

|--service_user:neutron

|--db_name:neutron

|--db_user:ntr_db

|--port:9696

|--horizon

|--mariadb

|--port:3306

|--cinder

|--service_name:cinder

|--service_user:cinder

|--db_user:cid_db

|--db_name:cinder

|--port:8776

Compute node

|--server_name:ops-cmp

|--manage_ip:192.168.122.12

|--external_ip:192.168.0.112

|--service

|--NTP

|--nova

|--service_name:nova

|--service_user:nova

|--db_name:nova/nova_api/nova_cell0

|--db_user:nva_db

|--port:8774

|--neutron

|--service_name:neutron

|--service_user:neutron

|--db_name:neutron

|--db_user:ntr_db

Block storage node

|--server_name:ops-cid

|--manage_ip:192.168.122.13

|--external_ip:192.168.0.113

|--volume_group:cinder

Installation

- Install the OpenStack Stein repository

yum install centos-release-openstack-stein -y

- Install the NTP service (chrony)

yum install chrony -y

- Install the OpenStack client

yum install python-openstackclient openstack-selinux -y

Install the database [1]

- Install mariadb-server and python2-pymysql

yum install mariadb mariadb-server python2-pymysql -y

- Configure the database

vi /etc/my.cnf.d/mariadb-server.cnf

# modify

[mysqld]

bind-address=192.168.122.11

default-storage-engine=innodb

innodb_file_per_table=on

max_connections=4096

collation-server=utf8_general_ci

character-set-server=utf8
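Instead of editing the file in vi, the same [mysqld] settings can be written non-interactively. A minimal sketch, assuming a POSIX shell; write_mariadb_conf is a hypothetical helper. Because it overwrites its target, it is meant for a separate drop-in such as /etc/my.cnf.d/openstack.cnf (an assumed file name; CentOS's /etc/my.cnf includes every .cnf file in /etc/my.cnf.d/) rather than the packaged mariadb-server.cnf.

```shell
#!/bin/sh
# Hypothetical helper: write the [mysqld] settings used in this guide.
# $1: destination file (overwritten), e.g. /etc/my.cnf.d/openstack.cnf
# $2: address MariaDB binds to (the controller's management IP)
write_mariadb_conf() {
  dest=$1
  bind_ip=$2
  cat > "$dest" <<EOF
[mysqld]
bind-address=$bind_ip
default-storage-engine=innodb
innodb_file_per_table=on
max_connections=4096
collation-server=utf8_general_ci
character-set-server=utf8
EOF
}
```

Usage: write_mariadb_conf /etc/my.cnf.d/openstack.cnf 192.168.122.11, then (re)start mariadb.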

- Start the service and run the security setup script

systemctl start mariadb

systemctl enable mariadb

mysql_secure_installation

- Open the firewall port

firewall-cmd --zone=internal --add-port=3306/tcp --permanent

# --permanent rules only take effect after a reload

firewall-cmd --reload

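Install the message queue service RabbitMQ [2]

- Install

yum install rabbitmq-server -y

- Start the service

systemctl start rabbitmq-server

systemctl enable rabbitmq-server

- Create the messaging user (its password must match the one used in every transport_url below)

rabbitmqctl add_user rbtmq passwd

- Grant the user configure, write, and read permissions

rabbitmqctl set_permissions rbtmq ".*" ".*" ".*"

- Open the firewall port

firewall-cmd --zone=internal --add-port=5672/tcp --permanent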
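Every service below reaches RabbitMQ through a transport_url built from the user created here, and a typo in the password only shows up later as authentication errors in the service logs. A small sketch that builds the URL from one set of variables, assuming the user rbtmq and password passwd used above; rabbit_transport_url is a hypothetical helper. Passwords containing @, : or / would additionally need URL-encoding, which this sketch does not do.

```shell
#!/bin/sh
# Hypothetical helper: build the transport_url value in the form used in
# this guide (rabbit://USER:PASS@HOST, default port and vhost).
# $1: RabbitMQ user, $2: password, $3: RabbitMQ host
rabbit_transport_url() {
  printf 'rabbit://%s:%s@%s\n' "$1" "$2" "$3"
}

RABBIT_USER=rbtmq
RABBIT_PASS=passwd
echo "transport_url=$(rabbit_transport_url "$RABBIT_USER" "$RABBIT_PASS" ops-ctr)"
```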

Install the authentication cache memcached [3]

- Install

yum install memcached python-memcached -y

- Configure

vi /etc/sysconfig/memcached

## modify

OPTIONS="-l 127.0.0.1,::1,ops-ctr"

- Start the service

systemctl start memcached

systemctl enable memcached

# add firewall rule

firewall-cmd --zone=internal --add-port=11211/tcp --permanent

Install the etcd service [4]

- Install

yum install etcd -y

- Configure

vi /etc/etcd/etcd.conf

# modify

#[Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://192.168.122.11:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.122.11:2379"

ETCD_NAME="controller"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.122.11:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.122.11:2379"

ETCD_INITIAL_CLUSTER="controller=http://192.168.122.11:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER_STATE="new"
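All URLs in this file are derived from the controller's management IP, and ETCD_INITIAL_CLUSTER must repeat the ETCD_NAME value. A small sketch that prints the same single-member configuration from those two inputs, assuming a POSIX shell; etcd_conf is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: print an etcd.conf for a single-member cluster.
# $1: member name (must match the name inside ETCD_INITIAL_CLUSTER)
# $2: the node's management IP
etcd_conf() {
  name=$1
  ip=$2
  cat <<EOF
#[Member]
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="http://$ip:2379"
ETCD_NAME="$name"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://$ip:2379"
ETCD_INITIAL_CLUSTER="$name=http://$ip:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

etcd_conf controller 192.168.122.11
```

To use it, back up the packaged file and run etcd_conf controller 192.168.122.11 > /etc/etcd/etcd.conf; the output sets exactly the variables listed above and nothing else.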

- Start the service

systemctl start etcd

systemctl enable etcd

- Open the firewall ports

firewall-cmd --zone=internal --add-port=2379/tcp --permanent

firewall-cmd --zone=internal --add-port=2380/tcp --permanent

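Install the placement service [10]

Placement is registered in the Identity service, so the openstack commands below require Keystone (installed in the following section) to be running and the admin environment variables to be loaded.

- Database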

create database placement;

grant all on placement.* to 'plcm_db'@'localhost' identified by 'passwd';

grant all on placement.* to 'plcm_db'@'%' identified by 'passwd';

- Create the user

openstack user create --domain default \

--password-prompt placement

openstack role add --project service --user placement admin

- Create the service entity

openstack service create --name placement \

--description "OpenStack Placement" placement

- Create the endpoints

openstack endpoint create --region RegionOne \

placement public http://ops-ctr:8778

# create the internal and admin endpoints the same way as public
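The public, internal, and admin endpoints differ only in the interface name, so they can be created in a loop. A minimal sketch, assuming a POSIX shell; run and create_endpoints are hypothetical helpers, and the script only prints the commands unless DRY_RUN=0 is set (with the admin credentials loaded).

```shell
#!/bin/sh
# Dry run by default: print each command; set DRY_RUN=0 to execute it.
# The echo-based dry run does not re-quote arguments, so it is only
# meant for arguments without spaces or shell metacharacters.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: create the public, internal and admin endpoints
# of one service, all with the same URL.
# $1: service type (e.g. placement, image, compute), $2: endpoint URL
create_endpoints() {
  for iface in public internal admin; do
    run openstack endpoint create --region RegionOne "$1" "$iface" "$2"
  done
}

create_endpoints placement http://ops-ctr:8778
```

The same helper covers the other services in this guide, for example create_endpoints image http://ops-ctr:9292 and create_endpoints compute http://ops-ctr:8774/v2.1.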

- Install the components

yum install openstack-placement-api -y

- Configure

vi /etc/placement/placement.conf

[placement_database]

connection=mysql+pymysql://plcm_db:passwd@ops-ctr/placement

[api]

auth_strategy=keystone

[keystone_authtoken]

auth_url=http://ops-ctr:5000/v3

memcached_servers=ops-ctr:11211

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=placement

password=passwd

vi /etc/httpd/conf.d/00-placement-api.conf

# add

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

- Sync the database

su -s /bin/sh -c "placement-manage db sync" placement

- Restart the httpd service

systemctl restart httpd

Install the OpenStack services

Install the Identity service Keystone [5]

- Database

# create database

create database keystone;

# set permission

grant all on keystone.* to 'kst_db'@'localhost' identified by 'passwd';

grant all on keystone.* to 'kst_db'@'%' identified by 'passwd';

- Install the Keystone components

yum install openstack-keystone httpd mod_wsgi -y

- Configure

vi /etc/keystone/keystone.conf

[database]

connection=mysql+pymysql://kst_db:passwd@ops-ctr/keystone

[token]

provider=fernet

[signing]

enable=true

certfile=/etc/pki/tls/private/pub.pem

keyfile=/etc/pki/tls/private/key.pem

ca_certs=/etc/pki/tls/certs/cert.pem

cert_required=true

- Sync the database

su -s /bin/sh -c "keystone-manage db_sync" keystone

- Initialize the Fernet key repositories

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

- Bootstrap the Identity service

keystone-manage bootstrap --bootstrap-password passwd \

--bootstrap-admin-url http://ops-ctr:5000/v3 \

--bootstrap-internal-url http://ops-ctr:5000/v3 \

--bootstrap-public-url http://ops-ctr:5000/v3 \

--bootstrap-region-id RegionOne

- Configure the Apache HTTP server

vi /etc/httpd/conf/httpd.conf

# add

ServerName ops-ctr

# content of /usr/share/keystone/wsgi-keystone.conf (linked into /etc/httpd/conf.d/ below)

Listen 5000

<VirtualHost *:5000>

# SSLEngine on

# SSLCertificateKeyFile /etc/pki/tls/private/key.pem

# SSLCertificateFile /etc/pki/tls/private/cert.pem

WSGIDaemonProcess keystone-public processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

WSGIProcessGroup keystone-public

WSGIScriptAlias / /usr/bin/keystone-wsgi-public

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

ErrorLogFormat "%{cu}t %M"

ErrorLog /var/log/httpd/keystone.log

CustomLog /var/log/httpd/keystone_access.log combined

<Directory /usr/bin>

Require all granted

</Directory>

</VirtualHost>

# create link

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

# start Apache

systemctl enable httpd

systemctl start httpd

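To enable SSL-encrypted connections, see Apache enable ssl on centos. The https:// auth URLs in the next steps assume SSL is enabled; without it, use http:// as in the openrc scripts below.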

Set the environment variables

export OS_USERNAME=admin

export OS_PASSWORD=passwd

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_DOMAIN_NAME=default

export OS_AUTH_URL=https://ops-ctr:5000/v3

export OS_IDENTITY_API_VERSION=3

- Create the domain, projects, users, and roles

# create a domain if needed

openstack domain create --description "mystack" mystack

# create project

openstack project create --domain default \

--description "Service Project" service

openstack project create --domain default \

--description "Demo Project" demo

# create user

openstack user create --domain default \

--password-prompt demo

# create role

openstack role create demo

# set role for user

openstack role add --project demo --user demo demo

- Verify operation

# unset the temporary OS_AUTH_URL and OS_PASSWORD variables

unset OS_AUTH_URL OS_PASSWORD

# request new auth token

openstack --os-auth-url https://ops-ctr:5000/v3 \

--os-project-domain-name default \

--os-user-domain-name default \

--os-project-name admin \

--os-username admin token issue

openstack --os-auth-url https://ops-ctr:5000/v3 \

--os-project-domain-name default \

--os-user-domain-name default \

--os-project-name demo \

--os-username demo token issue

- Create client environment scripts for the admin and demo users

admin-openrc

---

export OS_USERNAME=admin

export OS_PASSWORD=passwd

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_DOMAIN_NAME=default

export OS_AUTH_URL=http://ops-ctr:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

demo-openrc

---

export OS_USERNAME=demo

export OS_PASSWORD=passwd

export OS_PROJECT_NAME=demo

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_DOMAIN_NAME=default

export OS_AUTH_URL=http://ops-ctr:5000/v3

export OS_IDENTITY_API_VERSION=3
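The two scripts differ only in user, password, and project, so they can be generated from one template. A minimal sketch, assuming a POSIX shell and the plain-http auth URL used in admin-openrc above; write_openrc is a hypothetical helper. It sets the file mode to 600 because the file contains a password, and it also writes OS_IMAGE_API_VERSION=2 for the demo user.

```shell
#!/bin/sh
# Hypothetical helper: write a client environment script like admin-openrc.
# $1: output file, $2: user name, $3: password, $4: project name
write_openrc() {
  cat > "$1" <<EOF
export OS_USERNAME=$2
export OS_PASSWORD=$3
export OS_PROJECT_NAME=$4
export OS_USER_DOMAIN_NAME=default
export OS_PROJECT_DOMAIN_NAME=default
export OS_AUTH_URL=http://ops-ctr:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
  # the file holds a password, so keep it private
  chmod 600 "$1"
}
```

Usage: write_openrc admin-openrc admin passwd admin and write_openrc demo-openrc demo passwd demo recreate both files; load one with . admin-openrc.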

Install the Image service Glance [6]

- Database

create database glance;

# grant privileges to glc_db on glance the same way as for keystone
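Every service database in this guide follows the same pattern: create the database, then grant the service's database user access from localhost and from any host ('%'), the latter being needed by nodes that connect remotely. A minimal sketch that prints those statements, assuming a POSIX shell; service_db_sql is a hypothetical helper whose output is meant to be piped into the mysql client.

```shell
#!/bin/sh
# Hypothetical helper: print the SQL that creates a service database and
# grants a user full access from localhost and from any host ('%').
# $1: database name, $2: database user, $3: password
service_db_sql() {
  printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$1"
  for host in localhost '%'; do
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
      "$1" "$2" "$host" "$3"
  done
}
```

For example: service_db_sql glance glc_db passwd | mysql -u root -p. For Nova, call it once per database: for db in nova nova_api nova_cell0; do service_db_sql $db nva_db passwd; done | mysql -u root -p. IF NOT EXISTS makes reruns harmless.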

- Create the user

openstack user create --domain default \

--password-prompt glance

openstack role add --project service --user glance admin

- Create the service entity

openstack service create \

--name glance \

--description "OpenStack Image" image

- Create the service endpoints

openstack endpoint create --region RegionOne \

image public http://ops-ctr:9292

# create the admin and internal endpoints the same way as public

# open port 9292 with firewall-cmd

- Install the Glance components

yum install openstack-glance -y

- Configure

vi /etc/glance/glance-api.conf

[database]

connection=mysql+pymysql://glc_db:passwd@ops-ctr/glance

[keystone_authtoken]

www_authenticate_uri=http://ops-ctr:5000

auth_url=http://ops-ctr:5000

memcached_servers=ops-ctr:11211

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=glance

password=passwd

[paste_deploy]

flavor=keystone

[glance_store]

stores=file,http

default_store=file

filesystem_store_datadir=/var/lib/glance/images/

vi /etc/glance/glance-registry.conf

[database]

connection=mysql+pymysql://glc_db:passwd@ops-ctr/glance

[keystone_authtoken]

www_authenticate_uri=http://ops-ctr:5000

auth_url=http://ops-ctr:5000

memcached_servers=ops-ctr:11211

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=glance

password=passwd

[paste_deploy]

flavor=keystone

- Sync the database

su -s /bin/sh -c "glance-manage db_sync" glance

- Start the services

systemctl start openstack-glance-api openstack-glance-registry

systemctl enable openstack-glance-api openstack-glance-registry

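If the glance-api service fails to start, try changing the owner and group of /var/lib/glance/images and /var/log/glance/api.log to glance:glance, for example: chown -R glance:glance /var/lib/glance/images /var/log/glance/api.log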

- Verify operation

. admin-openrc

# create an image from the CirrOS disk image

openstack image create "cirros" \

--file /home/user/cirros-0.4.0-x86_64-disk.img \

--disk-format qcow2 \

--container-format bare \

--public

# show image

openstack image list

Install the Compute service Nova [8]

Install Nova on the controller node

- Database

# create database

create database nova;

create database nova_api;

create database nova_cell0;

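# grant privileges on all three databases

grant all on nova.* to 'nva_db'@'localhost' identified by 'passwd';

grant all on nova.* to 'nva_db'@'%' identified by 'passwd';

grant all on nova_api.* to 'nva_db'@'localhost' identified by 'passwd';

grant all on nova_api.* to 'nva_db'@'%' identified by 'passwd';

grant all on nova_cell0.* to 'nva_db'@'localhost' identified by 'passwd';

grant all on nova_cell0.* to 'nva_db'@'%' identified by 'passwd';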

- Create the user

openstack user create --domain default \

--password-prompt nova

openstack role add --project service --user nova admin

- Create the service entity

openstack service create --name nova \

--description "OpenStack Compute" compute

- Create the endpoints

openstack endpoint create --region RegionOne \

compute public http://ops-ctr:8774/v2.1

# create the internal and admin endpoints the same way as public

# open the Nova ports (8774 and 6080, as listed at the top) with firewall-cmd

- Install the Nova components

yum install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-console -y

- Configure the components

vi /etc/nova/nova.conf

[DEFAULT]

enabled_apis=osapi_compute,metadata

transport_url=rabbit://rbtmq:passwd@ops-ctr

my_ip=192.168.122.11

use_neutron=true

firewall_driver=nova.virt.firewall.NoopFirewallDriver

[database]

connection=mysql+pymysql://nva_db:passwd@ops-ctr/nova

[api_database]

connection=mysql+pymysql://nva_db:passwd@ops-ctr/nova_api

[api]

auth_strategy=keystone

[keystone_authtoken]

auth_url=http://ops-ctr:5000/v3

memcached_servers=ops-ctr:11211

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=nova

password=passwd

[vnc]

enabled=true

server_listen=$my_ip

server_proxyclient_address=$my_ip

[glance]

api_servers=http://ops-ctr:9292

[oslo_concurrency]

lock_path=/var/lib/nova/tmp

[placement]

region_name=RegionOne

auth_url=http://ops-ctr:5000/v3

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=placement

password=passwd

[scheduler]

discover_hosts_in_cells_interval=300

- Sync the databases

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name cell1 --verbose" nova

su -s /bin/sh -c "nova-manage db sync" nova

# list the cells

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

- Start the services

systemctl start openstack-nova-api openstack-nova-consoleauth openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy

systemctl enable openstack-nova-api openstack-nova-consoleauth openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy

- Add the compute node to the cell database (run this after Nova is installed on the compute node below)

# list the compute services

openstack compute service list

# discover compute nodes

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

- Verify operation

# show endpoint

openstack catalog list

# show image

openstack image list

nova-status upgrade check

Install Nova on the compute node [9]

- Install the components

yum install openstack-nova-compute -y

- Configure

vi /etc/nova/nova.conf

[DEFAULT]

enabled_apis=osapi_compute,metadata

transport_url=rabbit://rbtmq:passwd@ops-ctr

my_ip=192.168.122.12

use_neutron=true

firewall_driver=nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy=keystone

[keystone_authtoken]

auth_url=http://ops-ctr:5000/v3

memcached_servers=ops-ctr:11211

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=nova

password=passwd

[vnc]

enabled=true

server_listen=0.0.0.0

server_proxyclient_address=$my_ip

novncproxy_base_url=http://ops-ctr:6080/vnc_auto.html

[glance]

api_servers=http://ops-ctr:9292

[oslo_concurrency]

lock_path=/var/lib/nova/tmp

[placement]

region_name=RegionOne

auth_url=http://ops-ctr:5000/v3

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=placement

password=passwd

[libvirt]

virt_type=qemu

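- Check for hardware virtualization support

egrep -c '(vmx|svm)' /proc/cpuinfo

If the result is 0, the CPU does not support hardware acceleration, so keep virt_type=qemu in [libvirt] as above; if it is 1 or more, virt_type=kvm can be used instead.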
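The rule above can be automated when preparing nova.conf on the compute node. A small sketch, assuming a POSIX shell with grep -E; choose_virt_type is a hypothetical helper that takes an optional cpuinfo path so it can be tried against sample input.

```shell
#!/bin/sh
# Decide the [libvirt] virt_type from the CPU flags:
# no vmx/svm flag -> qemu, otherwise kvm.
# $1: cpuinfo file to inspect (defaults to /proc/cpuinfo)
choose_virt_type() {
  # grep -c prints the number of matching lines; if the file is missing
  # it prints nothing, and the default below treats that as 0
  count=$(grep -E -c '(vmx|svm)' "${1:-/proc/cpuinfo}" 2>/dev/null)
  if [ "${count:-0}" -eq 0 ]; then
    echo qemu
  else
    echo kvm
  fi
}

echo "virt_type=$(choose_virt_type)"
```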

- Start the services

systemctl start libvirtd openstack-nova-compute

systemctl enable libvirtd openstack-nova-compute

Install the Networking service Neutron

Controller node

- Database

create database neutron;

grant all on neutron.* to 'ntr_db'@'localhost' identified by 'passwd';

grant all on neutron.* to 'ntr_db'@'%' identified by 'passwd';

- Create the user

openstack user create --domain default \

--password-prompt neutron

openstack role add --project service --user neutron admin

- Create the service entity and endpoints (same pattern as the other services)

openstack service create --name neutron \

--description "OpenStack Networking" network

openstack endpoint create --region RegionOne \

network public http://ops-ctr:9696

# create the internal and admin endpoints the same way as public

- Configure networking

- Self-service (private) networks

- Install the components

yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

- Configure

vi /etc/neutron/neutron.conf

[DEFAULT]

core_plugin=ml2

service_plugins=router

allow_overlapping_ips=true

transport_url=rabbit://rbtmq:passwd@ops-ctr

auth_strategy=keystone

notify_nova_on_port_status_changes=true

notify_nova_on_port_data_changes=true

[database]

connection=mysql+pymysql://ntr_db:passwd@ops-ctr/neutron

[keystone_authtoken]

www_authenticate_uri=http://ops-ctr:5000

auth_url=http://ops-ctr:5000

memcached_servers=ops-ctr:11211

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=neutron

password=passwd

[nova]

# credentials of the nova service user

auth_url=http://ops-ctr:5000

auth_type=password

project_domain_name=default

user_domain_name=default

region_name=RegionOne

project_name=service

username=nova

password=passwd

[oslo_concurrency]

lock_path=/var/lib/neutron/tmp

vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers=flat,vlan,vxlan

tenant_network_types=vxlan

mechanism_drivers=linuxbridge,l2population

extension_drivers=port_security

[ml2_type_flat]

flat_networks=provider

[ml2_type_vxlan]

vni_ranges=1:1000

[securitygroup]

enable_ipset=true

vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings=provider:eth1

[vxlan]

enable_vxlan=true

local_ip=192.168.122.11

l2_population=true

[securitygroup]

enable_security_group=true

firewall_driver=neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

vi /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver=linuxbridge

vi /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver=linuxbridge

dhcp_driver=neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata=true

- Configure the metadata agent

vi /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host=ops-ctr

metadata_proxy_shared_secret=passwd

- Configure Nova to use Neutron

vi /etc/nova/nova.conf

[neutron]

url=http://ops-ctr:9696

auth_url=http://ops-ctr:5000

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=neutron

password=passwd

service_metadata_proxy=true

metadata_proxy_shared_secret=passwd

- Sync the database

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

- Start the services

systemctl restart openstack-nova-api

systemctl start neutron-server neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent neutron-l3-agent

systemctl enable neutron-server neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent neutron-l3-agent

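- Verify operation

openstack network agent list

Once the compute node below is configured too, the list should show 1 agent on the compute node and 4 agents on the controller node (Linux bridge, DHCP, metadata, and L3).

Compute node

- Install the components

yum install openstack-neutron-linuxbridge ebtables ipset -y

- Configure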

vi /etc/neutron/neutron.conf

[DEFAULT]

transport_url=rabbit://rbtmq:passwd@ops-ctr

auth_strategy=keystone

[keystone_authtoken]

www_authenticate_uri=http://ops-ctr:5000

auth_url=http://ops-ctr:5000

memcached_servers=ops-ctr:11211

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=neutron

password=passwd

[oslo_concurrency]

lock_path=/var/lib/neutron/tmp

vi /etc/nova/nova.conf

[neutron]

url=http://ops-ctr:9696

auth_url=http://ops-ctr:5000

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=neutron

password=passwd

vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings=provider:eth1

[vxlan]

enable_vxlan=true

local_ip=192.168.122.12

l2_population=true

[securitygroup]

enable_security_group=true

firewall_driver=neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

- Start the services

systemctl restart openstack-nova-compute

systemctl start neutron-linuxbridge-agent

systemctl enable neutron-linuxbridge-agent

Install the dashboard Horizon [12]

- Install the components

yum install openstack-dashboard -y

- Configure

vi /etc/openstack-dashboard/local_settings

OPENSTACK_HOST="ops-ctr"

ALLOWED_HOSTS=['*', ]

SESSION_ENGINE='django.contrib.sessions.backends.cache'

CACHES={

'default':{

'BACKEND':'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION':'ops-ctr:11211',

}

}

OPENSTACK_KEYSTONE_URL="http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT=True

# True must be capitalized (this file is Python); lowercase true causes an error

OPENSTACK_VERSIONS={

"identity":3,

"image":2,

"volume":3,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN="default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE="demo"

TIME_ZONE="Asia/Shanghai"
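Because local_settings is a Python file, mistakes such as a mismatched quote or a lowercase true only surface when Horizon loads it. A minimal sketch of a pre-restart check, assuming a POSIX shell and a Python interpreter (python3 by default, overridable through the PYTHON variable); check_local_settings is a hypothetical helper. It parses the file with the standard ast module without executing it, and additionally flags bare true, false, or none names, which parse fine but fail at runtime.

```shell
#!/bin/sh
# Hypothetical helper: syntax-check a Horizon local_settings file.
# $1: settings file (defaults to /etc/openstack-dashboard/local_settings)
# Exit status is non-zero if the file needs fixing.
check_local_settings() {
  "${PYTHON:-python3}" - "${1:-/etc/openstack-dashboard/local_settings}" <<'EOF'
import ast
import sys

path = sys.argv[1]
# ast.parse raises SyntaxError on e.g. mismatched quotes
tree = ast.parse(open(path).read(), path)
# lowercase true/false/none parse as plain names, so flag them explicitly
bad = [n.id for n in ast.walk(tree)
       if isinstance(n, ast.Name) and n.id in ("true", "false", "none")]
if bad:
    sys.exit("%s: lowercase literal(s): %s" % (path, ", ".join(bad)))
EOF
}
```

Run check_local_settings before restarting httpd; a non-zero exit status means the file still contains an error.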

vi /etc/httpd/conf.d/openstack-dashboard.conf

# add

WSGIApplicationGroup %{GLOBAL}

- Restart the services

systemctl restart httpd memcached

Add a block storage node

Controller node [13]

- Database

create database cinder;

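grant all on cinder.* to 'cid_db'@'localhost' identified by 'passwd';

# the storage node connects to this database remotely, so the '%' grant is also required

grant all on cinder.* to 'cid_db'@'%' identified by 'passwd';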

- Create the cinder user, its admin role assignment, the endpoints, and two services:

- cinderv2 and cinderv3, of type volumev2 and volumev3 respectively

- v2 endpoint URL: http://ops-ctr:8776/v2/%(project_id)s

- v3 endpoint URL: http://ops-ctr:8776/v3/%(project_id)s
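These steps follow the same pattern as the other services, with one extra detail: the endpoint URLs contain %(project_id)s, whose parentheses must be quoted in the shell. A minimal sketch, assuming a POSIX shell and the admin credentials from admin-openrc; run, quote_arg, and cinder_identity_setup are hypothetical helpers. By default it only prints the commands, quoting any argument that contains spaces or shell metacharacters; set DRY_RUN=0 to execute them.

```shell
#!/bin/sh
# Print an argument, wrapped in single quotes if it contains anything
# outside [A-Za-z0-9_./:=-] (assumes no argument contains a single quote).
quote_arg() {
  case $1 in
    *[!A-Za-z0-9_./:=-]*) printf "'%s'" "$1" ;;
    *) printf '%s' "$1" ;;
  esac
}

# Dry run by default: print each command; set DRY_RUN=0 to execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    sep=''
    for arg in "$@"; do
      printf '%s' "$sep"
      quote_arg "$arg"
      sep=' '
    done
    echo
  else
    "$@"
  fi
}

cinder_identity_setup() {
  run openstack user create --domain default --password-prompt cinder
  run openstack role add --project service --user cinder admin
  run openstack service create --name cinderv2 \
    --description "OpenStack Block Storage" volumev2
  run openstack service create --name cinderv3 \
    --description "OpenStack Block Storage" volumev3
  for ver in v2 v3; do
    for iface in public internal admin; do
      run openstack endpoint create --region RegionOne \
        "volume$ver" "$iface" "http://ops-ctr:8776/$ver/%(project_id)s"
    done
  done
}

cinder_identity_setup
```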

- Install the components

yum install openstack-cinder -y

- Configure

vi /etc/cinder/cinder.conf

[DEFAULT]

transport_url=rabbit://rbtmq:passwd@ops-ctr

auth_strategy=keystone

my_ip=192.168.122.11

[database]

connection=mysql+pymysql://cid_db:passwd@ops-ctr/cinder

[keystone_authtoken]

www_authenticate_uri=http://ops-ctr:5000

auth_url=http://ops-ctr:5000

memcached_servers=ops-ctr:11211

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=cinder

password=passwd

[oslo_concurrency]

lock_path=/var/lib/cinder/tmp

- Sync the database

su -s /bin/sh -c "cinder-manage db sync" cinder

- Configure Compute to use Block Storage

vi /etc/nova/nova.conf

# add

[cinder]

os_region_name=RegionOne

- Start the services

systemctl restart openstack-nova-api

systemctl start openstack-cinder-api openstack-cinder-scheduler

systemctl enable openstack-cinder-api openstack-cinder-scheduler

- Verify operation

openstack volume service list

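Storage node

- Install the components

yum install lvm2 device-mapper-persistent-data -y

- Create the LVM physical volume

pvcreate /dev/vdb

- Create the volume group

vgcreate cinder /dev/vdb

- Add a device filter

vi /etc/lvm/lvm.conf

# in the devices section: accept /dev/vda and /dev/vdb, reject all other devices

filter=["a/dev/vda/","a/dev/vdb/","r/.*/"]

- Install the cinder components

yum install openstack-cinder targetcli python-keystone -y

- Configure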

vi /etc/cinder/cinder.conf

[DEFAULT]

transport_url=rabbit://rbtmq:passwd@ops-ctr

auth_strategy=keystone

my_ip=192.168.122.13

enabled_backends=lvm

glance_api_servers=http://ops-ctr:9292

[database]

connection=mysql+pymysql://cid_db:passwd@ops-ctr/cinder

[keystone_authtoken]

www_authenticate_uri=http://ops-ctr:5000

auth_url=http://ops-ctr:5000

memcached_servers=ops-ctr:11211

auth_type=password

project_domain_name=default

user_domain_name=default

project_name=service

username=cinder

password=passwd

[lvm]

volume_driver=cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group=cinder

target_protocol=iscsi

target_helper=lioadm

[oslo_concurrency]

lock_path=/var/lib/cinder/tmp

- Start the services

systemctl start openstack-cinder-volume target

systemctl enable openstack-cinder-volume target

Launch an instance

Create the provider (public) network [15]

- Create the network

. admin-openrc

# create a flat network named provider on the physical network provider

openstack network create \

--share --external \

--provider-physical-network provider \

--provider-network-type flat provider

- Create the subnet

Create a subnet on the provider network with the allocation pool 192.168.0.200-192.168.0.240

openstack subnet create \

--network provider \

--allocation-pool start=192.168.0.200,end=192.168.0.240 \

--dns-nameserver 192.168.0.1 \

--gateway 192.168.0.1 \

--subnet-range 192.168.0.0/24 provider
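Before creating the subnet, the allocation pool can be sanity-checked: it must lie inside 192.168.0.0/24 (excluding the network and broadcast addresses) and must not contain the gateway 192.168.0.1. A small sketch of that arithmetic, assuming a POSIX shell with 64-bit arithmetic; ip_to_int and check_pool are hypothetical helpers.

```shell
#!/bin/sh
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# $1: pool start, $2: pool end, $3: gateway,
# $4: network address, $5: prefix length
check_pool() {
  start=$(ip_to_int "$1")
  end=$(ip_to_int "$2")
  gw=$(ip_to_int "$3")
  net=$(ip_to_int "$4")
  size=$(( 1 << (32 - $5) ))
  first=$(( net + 1 ))        # first usable host address
  last=$(( net + size - 2 ))  # last usable host address (before broadcast)
  if [ "$start" -gt "$end" ] || [ "$start" -lt "$first" ] || [ "$end" -gt "$last" ]; then
    echo "pool is outside the subnet"
    return 1
  fi
  if [ "$gw" -ge "$start" ] && [ "$gw" -le "$end" ]; then
    echo "gateway is inside the pool"
    return 1
  fi
  echo "$(( end - start + 1 )) addresses"
}

check_pool 192.168.0.200 192.168.0.240 192.168.0.1 192.168.0.0 24
```

For the pool above it prints 41 addresses (192.168.0.200 to 192.168.0.240 inclusive). Since the provider network shares the physical 192.168.0.0/24 LAN, the pool should presumably also stay clear of addresses handed out by that LAN's own DHCP server.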

Create the self-service (private) network [16]

- Create the network

. demo-openrc

openstack network create selfservice

- Create the subnet

openstack subnet create \

--network selfservice \

--dns-nameserver 192.168.0.1 \

--gateway 192.168.100.1 \

--subnet-range 192.168.100.0/24 selfservice

- Create the router

openstack router create self-router

- Add the selfservice subnet to the router

openstack router add subnet self-router selfservice

- Set the external (provider) gateway on the router

openstack router set self-router --external-gateway provider

- Verify operation

. admin-openrc

ip netns

openstack port list --router self-router

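Create the instance

- Create the smallest flavor, m1.nano, with 64 MB of RAM and a 1 GB disk

openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

- Add a key pair (if ~/.ssh/id_rsa.pub does not exist, generate it first with ssh-keygen -q -N "")

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

- Add rules to the default security group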

# allow ping

openstack security group rule create --proto icmp default

# allow ssh

openstack security group rule create --proto tcp --dst-port 22 default

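Create the server

- Server on the self-service network [17]

. demo-openrc

# the net-id below is the ID of the selfservice network; look it up with: openstack network show selfservice -f value -c id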

openstack server create --flavor m1.nano \

--image cirros \

--nic net-id=c34add94-6f4d-4312-92f9-ac4ad426bce7 \

--security-group default \

--key-name mykey self-host

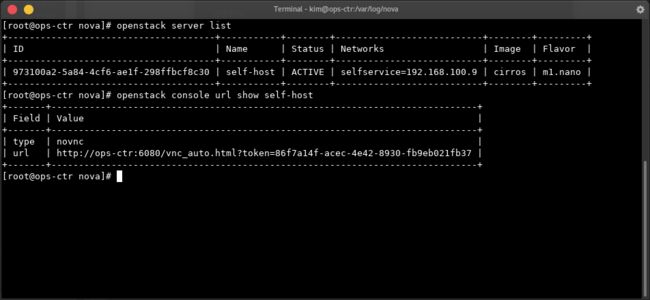

- List the created servers

openstack server list

- Access the server through the virtual console

openstack console url show self-host

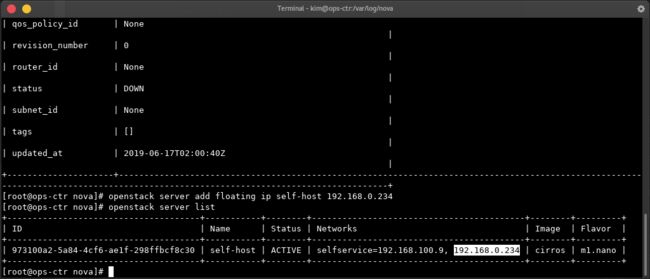

- Access the server remotely

# create a floating IP

openstack floating ip create provider

# associate the floating IP returned above (here 192.168.0.234) with self-host

openstack server add floating ip self-host 192.168.0.234

# show server list

openstack server list

References

- [1] Install database on CentOS

- [2] Install rabbitmq-server on CentOS

- [3] Install memcached on CentOS

- [4] Install etcd on CentOS

- [5] Install keystone on CentOS

- [6] Install glance on CentOS

- [7] Enable SSL on keystone

- [8] Install nova on CentOS

- [9] Compute server: install nova

- [10] Install placement on CentOS

- [11] Install neutron on CentOS

- [12] Install horizon on CentOS

- [13] Install cinder on CentOS for the controller

- [14] Install cinder on CentOS for storage

- [15] Create the provider network

- [16] Create the self-service network

- [17] Create self-host in selfservice