概述

在业务上了kubernetes集群以后,容器编排的功能之一是能提供业务服务弹性伸缩能力,但是我们应该保持多大的节点规模来满足应用需求呢?这个时候可以通过cluster-autoscaler实现节点级别的动态添加与删除,动态调整容器资源池,应对峰值流量。在Kubernetes中共有三种不同的弹性伸缩策略,分别是HPA(HorizontalPodAutoscaling)、VPA(VerticalPodAutoscaling)与CA(ClusterAutoscaler)。其中HPA和VPA主要扩缩容的对象是容器,而CA的扩缩容对象是节点。

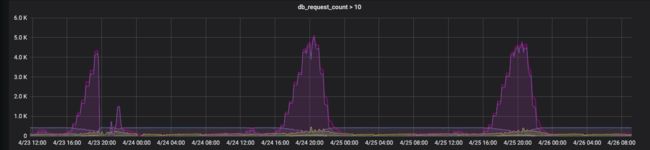

最近有转码业务从每天下午4点开始,负载开始飙高,在晚上10点左右负载会回落;目前是采用静态容量规划导致服务器资源不能合理利用别浪费,所以记录下cluster-autoscaler来动态伸缩pod资源所需的容器资源池

应用aliyun-cluster-autoscaler

前置条件:

- aliyun-cloud-provider

1. 权限认证

由于autoscaler会调用阿里云ESS api来触发集群规模调整,因此需要配置api 访问的AK。

自定义权限策略如下:

{

"Version": "1",

"Statement": [

{

"Action": [

"ess:Describe*",

"ess:CreateScalingRule",

"ess:ModifyScalingGroup",

"ess:RemoveInstances",

"ess:ExecuteScalingRule",

"ess:ModifyScalingRule",

"ess:DeleteScalingRule",

"ess:DetachInstances",

"ecs:DescribeInstanceTypes"

],

"Resource": [

"*"

],

"Effect": "Allow"

}

]

}

创建一个k8s-cluster-autoscaler的编程访问用户,将其自定义权限策略应用到此用户,并创建AK.

2.ASG Setup

- 创建一个伸缩组ESS https://essnew.console.aliyun.com

自动扩展kubernetes集群需要阿里云ESS(弹性伸缩组)的支持,因此需要先创建一个ESS。

进入ESS控制台. 选择北京Region(和kubernetes集群所在region保持一致),点击【创建伸缩组】,在弹出的对话框中填写相应信息,注意网络类型选择专有网络,并且专有网络选择前置条件1中的Kubernetes集群所在的vpc网络名,然后选择vswitch(和kubernetes节点所在的vswitch),然后提交。如下图:

其中伸缩配置需要单独创建,选择实例规格(建议选择多种资源一致的实例规格,避免实力规格不足导致伸缩失败)、安全组(和kubernetes node所在同个安全组)、带宽峰值选择0(不分配公网IP),设置用户数据等等。注意用户数据取使用文本形式,同时将获取kubernetes集群的添加节点命令粘贴到该文本框中,并在之前添加#!/bin/bash,下面是将此节点注册到集群的实例示例:

#!/bin/bash

curl https://file.xx.com/kubernetes-stage/attach_node.sh | bash -s -- --kubeconfig [kubectl.kubeconfig | base64] --cluster-dns 172.19.0.10 --docker-version 18.06.2-ce-3 --labels type=autoscaler

然后完成创建,启用配置

3.部署Autoscaler到kubernetes集群中

需要手动指定上面刚刚创建伸缩组ID以及伸缩最小和最大的机器数量,示例:--nodes=1:3:asg-2ze9hse7u4udb6y4kd25

---

apiVersion: v1

kind: ConfigMap

metadata:

name: cloud-autoscaler-config

namespace: kube-system

data:

access-key-id: "xxxx"

access-key-secret: "xxxxx"

region-id: "cn-beijing"

---

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-addon: cluster-autoscaler.addons.k8s.io

k8s-app: cluster-autoscaler

name: cluster-autoscaler

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRole

metadata:

name: cluster-autoscaler

labels:

k8s-addon: cluster-autoscaler.addons.k8s.io

k8s-app: cluster-autoscaler

rules:

- apiGroups: [""]

resources: ["events","endpoints"]

verbs: ["create", "patch"]

- apiGroups: [""]

resources: ["pods/eviction"]

verbs: ["create"]

- apiGroups: [""]

resources: ["pods/status"]

verbs: ["update"]

- apiGroups: [""]

resources: ["endpoints"]

resourceNames: ["cluster-autoscaler"]

verbs: ["get","update"]

- apiGroups: [""]

resources: ["nodes"]

verbs: ["watch","list","get","update"]

- apiGroups: [""]

resources: ["pods","services","replicationcontrollers","persistentvolumeclaims","persistentvolumes"]

verbs: ["watch","list","get"]

- apiGroups: ["extensions"]

resources: ["replicasets","daemonsets"]

verbs: ["watch","list","get"]

- apiGroups: ["policy"]

resources: ["poddisruptionbudgets"]

verbs: ["watch","list"]

- apiGroups: ["apps"]

resources: ["statefulsets"]

verbs: ["watch","list","get"]

- apiGroups: ["storage.k8s.io"]

resources: ["storageclasses"]

verbs: ["watch","list","get"]

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: Role

metadata:

name: cluster-autoscaler

namespace: kube-system

labels:

k8s-addon: cluster-autoscaler.addons.k8s.io

k8s-app: cluster-autoscaler

rules:

- apiGroups: [""]

resources: ["configmaps"]

verbs: ["create","list","watch"]

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["cluster-autoscaler-status", "cluster-autoscaler-priority-expander"]

verbs: ["delete","get","update","watch"]

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: cluster-autoscaler

labels:

k8s-addon: cluster-autoscaler.addons.k8s.io

k8s-app: cluster-autoscaler

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-autoscaler

subjects:

- kind: ServiceAccount

name: cluster-autoscaler

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: RoleBinding

metadata:

name: cluster-autoscaler

namespace: kube-system

labels:

k8s-addon: cluster-autoscaler.addons.k8s.io

k8s-app: cluster-autoscaler

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: cluster-autoscaler

subjects:

- kind: ServiceAccount

name: cluster-autoscaler

namespace: kube-system

---

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: cluster-autoscaler

namespace: kube-system

labels:

app: cluster-autoscaler

spec:

replicas: 1

selector:

matchLabels:

app: cluster-autoscaler

template:

metadata:

labels:

app: cluster-autoscaler

spec:

priorityClassName: system-cluster-critical

serviceAccountName: cluster-autoscaler

containers:

- image: registry.cn-hangzhou.aliyuncs.com/acs/autoscaler:v1.3.1-567fb17

name: cluster-autoscaler

resources:

limits:

cpu: 100m

memory: 300Mi

requests:

cpu: 100m

memory: 300Mi

command:

- ./cluster-autoscaler

- --v=4

- --stderrthreshold=info

- --cloud-provider=alicloud

- --nodes={MIN_NODE}:{MAX_NODE}:{ASG_ID}

- --skip-nodes-with-system-pods=false

- --skip-nodes-with-local-storage=false

imagePullPolicy: "Always"

env:

- name: ACCESS_KEY_ID

valueFrom:

configMapKeyRef:

name: cloud-autoscaler-config

key: access-key-id

- name: ACCESS_KEY_SECRET

valueFrom:

configMapKeyRef:

name: cloud-autoscaler-config

key: access-key-secret

- name: REGION_ID

valueFrom:

configMapKeyRef:

name: cloud-autoscaler-config

key: region-id

测试自动扩展节点效果

Autoscaler根据用户应用的资源静态请求量来决定是否扩展集群大小,因此需要设置好应用的资源请求量。

测试前节点数量如下,配置均为2核4G ECS,其中两个节点可调度。

[root@iZ2ze190o505f86pvk8oisZ cluster-autoscaler]# kubectl get node

NAME STATUS ROLES AGE VERSION

cn-beijing.i-2ze190o505f86pvk8ois Ready master,node 46h v1.12.3

cn-beijing.i-2zeef9b1nhauqusbmn4z Ready node 46h v1.12.3

接下来我们创建一个副本nginx deployment, 指定每个nginx副本需要消耗2G内存。

[root@iZ2ze190o505f86pvk8oisZ cluster-autoscaler]# cat <看到由于有足够的cpu内存资源,所以pod能够正常调度。接下来我们使用kubectl scale 命令来扩展副本数量到4个。

[root@iZ2ze190o505f86pvk8oisZ cluster-autoscaler]# kubectl scale deploy nginx-example --replicas 3

deployment.extensions/nginx-example scaled

[root@iZ2ze190o505f86pvk8oisZ ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-example-584bdb467-2s226 1/1 Running 0 13m

nginx-example-584bdb467-lz2jt 0/1 Pending 0 4s

nginx-example-584bdb467-r7fcc 1/1 Running 0 4s

[root@iZ2ze190o505f86pvk8oisZ cluster-autoscaler]# kubectl describe pod nginx-example-584bdb467-lz2jt | grep -A 4 Event

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 1s (x5 over 19s) default-scheduler 0/2 nodes are available: 2 Insufficient memory.

发现由于没有足够的cpu内存资源,该pod无法被调度(pod 处于pending状态)。这时候autoscaler会介入,尝试创建一个新的节点来让pod可以被调度。看下伸缩组状态,已经创建了一台机器

接下来我们执行一个watch kubectl get no 的命令来监视node的添加。大约几分钟后,就有新的节点添加进来了。

[root@iZ2ze190o505f86pvk8oisZ ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

cn-beijing.i-2ze190o505f86pvk8ois Ready master,node 17m v1.12.3

cn-beijing.i-2zedqvw2bewvk0l2mk9x Ready autoscaler,node 2m30s v1.12.3

cn-beijing.i-2zeef9b1nhauqusbmn4z Ready node 2d17h v1.12.3

[root@iZ2ze190o505f86pvk8oisZ ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-example-584bdb467-2s226 1/1 Running 0 19m

nginx-example-584bdb467-lz2jt 1/1 Running 0 5m47s

nginx-example-584bdb467-r7fcc 1/1 Running 0 5m47s

可以观察到比测试前新增了一个节点,并且pod也正常调度了。

测试自动收缩节点数量

当Autoscaler发现通过调整Pod分布时可以空闲出多余的node的时候,会执行节点移除操作。这个操作不会立即执行,通常设置了一个冷却时间,300s左右才会执行scale down。

通过kubectl scale 来调整nginx副本数量到1个,观察集群节点的变化。

[root@iZ2ze190o505f86pvk8oisZ cluster-autoscaler]# kubectl scale deploy nginx-example --replicas 1

deployment.extensions/nginx-example scaled

[root@iZ2ze190o505f86pvk8oisZ cluster-autoscaler]# kubectl get node

NAME STATUS ROLES AGE VERSION

cn-beijing.i-2ze190o505f86pvk8ois Ready master,node 46h v1.12.3

cn-beijing.i-2zeef9b1nhauqusbmn4z Ready node 46h v1.12.3

TODO:

- 模糊调度

创建多个伸缩组,每个伸缩组对应不同实例规格机器比如高IO\高内存的,不同应用弹性伸缩对应类型的伸缩组 - cronHPA + autoscaler

由于node弹性伸缩存在一定的时延,这个时延主要包含:采集时延(分钟级) + 判断时延(分钟级) + 伸缩时延(分钟级)结合业务,根据时间段,自动伸缩业务(CronHPA)来处理高峰数据,底层自动弹性伸缩kubernetes node增大容器资源池

参考地址:

https://github.com/kubernetes/autoscaler/blob/master/cluster-autoscaler/FAQ.md

https://github.com/kubernetes/autoscaler/tree/master/cluster-autoscaler/cloudprovider/alicloud