- AWS GCR EKS Resource: A Powerful Tool for Building Efficient, Elastic Cloud-Native Applications

杨女嫚

eks-workshop-greater-china: AWS Workshop for Learning EKS for Greater China. Project URL: https://gitcode.com/gh_mirrors/ek/eks-workshop-greater-china. In the wave of cloud computing, AWS (Amazon Web Services) has always been at the innovation…

- High Pressure in the AI Industry and Talent Well-being: Remembering Felix Hill and Exploring the Value of AI Code Generation Tools

Frontend

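Today, with deep sorrow, we mourn Felix Hill, a research scientist at Google DeepMind and an outstanding AI scholar, who passed away at the age of 41. His death prompts us to reflect on the high-pressure environment of the AI industry and the health of the people who work in it. Felix had spoken publicly about the unprecedented pressure in the AI industry, which leads us to consider how technology, such as AI code generators, can improve developers' working conditions, raise their efficiency, and protect their health. Felix Hill achieved remarkable…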

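- DeepSeek's New Model Tops the Leaderboard, Codes on Par with OpenAI o1, and Is Confirmed Open Source; Netizens: "This Year, Programming Is Just Pressing Tab"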

QbitAI

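There is news about DeepSeek's answer to o1. Before its official release, it already ranks in the top three on the code benchmark LiveCodeBench, performing on par with OpenAI o1 at its medium reasoning setting. Note that this is not DeepSeek-R1-Lite-Preview (the lightweight preview), which can already be tried in the official DeepSeek app. This model has dropped the "Lite" label and is called DeepSeek-R1-Preview, which means the base model has been replaced with a larger one. Live…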

- Search Tips for the find Command in deepin

deepin

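The find command is a very powerful file search tool in deepin that helps users quickly locate files and directories. Mastering it makes many tasks far easier. This article covers various find techniques, including basic usage, advanced tips, and practical use cases. 1. Basic usage. 1.1 Command format: the basic format of find is `find [path] [expression]`. • Path: the directory to search; one or more paths may be given. • Expression: the conditions files must match and the actions to take on them. The expression is fi…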
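To make the `find [path] [expression]` structure concrete, here is a rough Python analogue of one common invocation, `find PATH -name PATTERN -type f|d`. It is an illustrative sketch, not how find is implemented: it supports only the name and type tests, matches names case-sensitively like `-name`, and ignores find's symlink handling.

```python
import fnmatch
import os


def find(path, name_pattern="*", file_type=None):
    """Yield paths under `path` (including `path` itself, as find does)
    whose base name matches the shell-style `name_pattern`.

    file_type: None for any entry, "f" for non-directories, "d" for directories.
    """
    def matches(entry, is_dir):
        if file_type == "f" and is_dir:
            return False
        if file_type == "d" and not is_dir:
            return False
        # fnmatchcase is case-sensitive, like find's -name test
        return fnmatch.fnmatchcase(os.path.basename(entry), name_pattern)

    if matches(path, os.path.isdir(path)):
        yield path
    for root, dirs, files in os.walk(path):
        for d in sorted(dirs):
            full = os.path.join(root, d)
            if matches(full, True):
                yield full
        for f in sorted(files):
            full = os.path.join(root, f)
            if matches(full, False):
                yield full
```

For example, `list(find("/home/user", "*.txt", "f"))` corresponds roughly to `find /home/user -name "*.txt" -type f`.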

- Strategies for Dealing with Desktop Freezes in deepin

deepin

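Abstract: deepin, a Linux-based operating system developed in China, is popular for its attractive interface and stable performance. However, users may run into desktop freezes. This article presents some common desktop-freeze situations and their solutions to help users quickly restore normal operation. Introduction: while delivering high performance, deepin may still experience desktop freezes, which can be caused by faults in the desktop environment, the Xorg service, or specific processes. This article provides detailed…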

- Guide to Installing and Running Windows Software with Native Wine in deepin

deepin

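1. Preface: deepin, a well-regarded Linux distribution developed in China, attracts many users with its excellent performance and ease of use. However, we may need software that only supports Windows. With the Wine compatibility layer, we can install and run such Windows software on deepin. This article walks through the whole process of installing and running Windows software on deepin with native Wine, using the 32-bit 7-Zip installer as an example, to help you easily master the relevant…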

- Tutorial: Downloading MySQL on deepin

deepin, mysql

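Official download (MySQL 8.2 is supported): here. Extract it to the folder you want (recommended: /opt/mysql/mysql8). Create the mysql user and group: `groupadd mysql && useradd -r -g mysql mysql`. Create symbolic links into the system, which later service and configuration steps rely on: `cd /usr/local && sudo ln -s /opt/mysql/mysql8 mysql8`, `cd /usr/bin && sudo ln -s /opt/mysql/m…`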

- Differences Between the apt and dpkg Package Management Tools in deepin

deepin

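On Linux systems, especially Debian-based distributions such as Ubuntu and deepin, apt and dpkg are two commonly used package management tools. They differ notably in functionality and use cases. This article explains the main differences between them and their common commands. 1. Main differences. 1.1 dpkg • Function: dpkg focuses on managing local packages. It is mainly used to install, remove, and query local .deb files. • Dependency management: dpkg does not resolve dependencies automatically. If a package has dependencies, you must install them manually…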
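The practical consequence of "dpkg does not resolve dependencies" is that someone has to work out an installation order in which every dependency comes first; apt does this for you. The sketch below shows only the core idea, a depth-first topological sort over a made-up dependency map. The package names are hypothetical, and real apt resolution also handles versions, alternatives, conflicts, and Recommends, none of which is modeled here.

```python
def install_order(package, depends):
    """Return an install order in which every dependency precedes its dependents.

    `depends` maps a package name to the names it directly depends on.
    Raises ValueError if the dependency graph contains a cycle.
    """
    order, state = [], {}  # state: 1 = being visited, 2 = finished

    def visit(name):
        if state.get(name) == 2:
            return  # already placed in `order`
        if state.get(name) == 1:
            raise ValueError(f"dependency cycle at {name}")
        state[name] = 1
        for dep in depends.get(name, []):
            visit(dep)  # dependencies are placed before the package itself
        state[name] = 2
        order.append(name)

    visit(package)
    return order
```

With `{"app": ["libfoo", "libbar"], "libfoo": ["libc"], "libbar": ["libc"]}`, installing `app` yields the order libc, libfoo, libbar, app, which is what you would otherwise have to feed to dpkg by hand.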

- deepin: How to Install the DDE Desktop Environment on Arch Linux

deepin

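Arch Linux is an independently developed, general-purpose x86-64 GNU/Linux distribution, versatile enough to suit any role. Development focuses on simplicity, minimalism, and code elegance. Arch is installed as a minimal base system and configured by the user, who assembles their own ideal environment by installing only what is required or desired for their unique purposes. No official GUI configuration utilities are provided; most system configuration is done from the shell by editing simple text files. Arch strives to stay bleeding-edge and typically offers the latest stable versions of most software…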

- deepin Wallpaper Management: Operations and Command-Line Guide

deepin

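Abstract: As an important part of an operating system's visual presentation, wallpaper not only beautifies the desktop but also reflects users' desire for personalization. deepin provides rich wallpaper management features, including setting a wallpaper per monitor, custom wallpapers, and managing the wallpaper library. This article explains how to manage deepin wallpapers from the command line, including setting a wallpaper, listing wallpapers, deleting wallpapers, and getting the path of the current wallpaper. Introduction: deepin lets users manage wallpapers in several ways, including the graphical interface and the command line. The command line gives users…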

- Fixing the Missing Network Module After a deepin System Upgrade

deepin

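Abstract: During a deepin upgrade, users may find that the network module has disappeared, which is usually related to how the upgrade command handles recommended dependencies. This article examines the cause and provides a recommended upgrade method and solutions so that the upgrade completes fully and all functionality remains intact. Introduction: if an inappropriate command is used during a deepin upgrade, some functional modules, such as the network module, may be lost, which can disrupt normal use. This article provides solutions to help users restore the missing network module. Problem analysis. 2.1…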

- Troubleshooting Guide for an Incorrect Taskbar Network Icon in deepin

deepin

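Abstract: When using deepin, users may find that the taskbar network icon shows the wrong state: even when the connection works, the icon may wrongly indicate that the Internet is unreachable. This article examines the cause and offers a series of solutions for fixing the icon's state. Introduction: the taskbar network icon sometimes shows an incorrect state, possibly because the connectivity check misjudges the network or because a configuration file is wrong. This article provides detailed solutions so that the icon accurately reflects the connection state.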

- deepin Login Problems: Comprehensive Analysis and Solutions

deepin

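Abstract: deepin, a Linux-based operating system developed in China, is popular for its attractive interface and stable performance. However, users may find themselves unable to log in. This article analyzes deepin login failures in detail and provides corresponding solutions. Introduction: deepin may fail to log in after an upgrade or during normal use, possibly because of the upgrade itself, a wrong configuration file, or software conflicts. This article aims to help users resolve these issues and keep the system usable.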

- Handling Guide for Desktop Freezes in deepin

deepin

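Abstract: deepin is favored for its elegant interface and smooth user experience. However, users may occasionally encounter desktop freezes, which can have many causes. This article presents some common desktop-freeze situations and their solutions to help users quickly restore normal operation. Introduction: while delivering high performance, deepin may still experience desktop freezes, which can be caused by faults in the desktop environment, the Xorg service, or specific processes. This article provides detailed solutions for these situations. Common desktop freezes…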

- How to Search a deepin Folder for Word Documents Containing Specific Content or Keywords

deepin

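Searching for Word documents that contain specific content or keywords is a common need on deepin. Below is a detailed step-by-step guide to doing this efficiently within a folder. 1. Install the required tools: to search Word documents, you first need a few tools: catdoc, docx2txt, iconv, and grep. They handle the different Word formats, character-encoding conversion, and text search, respectively. Run the following command in a terminal to install them: `sudo apt install catd…`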
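The guide relies on catdoc and docx2txt. For the .docx case alone, the same search can be sketched with only the Python standard library, because a .docx file is a ZIP archive whose body text lives in `word/document.xml` inside `<w:t>` elements. This is an alternative technique, not the article's method: legacy .doc files are not handled, and text runs are joined without separators, so a keyword that spans two paragraphs could produce a false match.

```python
import os
import zipfile
from xml.etree import ElementTree

# WordprocessingML namespace used by the <w:t> text elements
W_NS = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


def docx_text(path):
    """Concatenate the text of all <w:t> elements in word/document.xml."""
    with zipfile.ZipFile(path) as zf:
        root = ElementTree.fromstring(zf.read("word/document.xml"))
    return "".join(node.text or "" for node in root.iter(W_NS + "t"))


def search_docx(folder, keyword):
    """Yield paths of .docx files under `folder` whose text contains `keyword`."""
    for dirpath, _dirs, files in os.walk(folder):
        for name in sorted(files):
            if not name.lower().endswith(".docx"):
                continue
            path = os.path.join(dirpath, name)
            try:
                if keyword in docx_text(path):
                    yield path
            except (zipfile.BadZipFile, KeyError, ElementTree.ParseError):
                continue  # not a readable .docx file; skip it
```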

- How to Install and Run .exe Programs with deepin-wine6

deepin

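1. Set up the deepin-wine6-stable environment: on a freshly installed system, first install an app from the App Store that runs on deepin-wine6-stable, such as the Wine version of WeChat or QQ, and run it once. The system then automatically creates the deepin-wine6-stable environment, laying the groundwork for installing other .exe programs. 2. Install the .exe program: take the 32-bit 7-Zip installer 7z2107.exe (version 21.7.0.0) as an example; this e…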

- Guide to Viewing Network Information in deepin

deepin

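On Linux operating systems such as deepin and Ubuntu, we can view network information and status with various shell commands. This article introduces these commands to help you better understand and monitor your network environment. 1. The ifconfig command: ifconfig shows information about all network interfaces, but it is deprecated and the ip command is recommended instead: `ifconfig`. 2. The ip command: ip shows information about all network interfaces. # Show information for all interfaces: `ip addr show` # Show…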

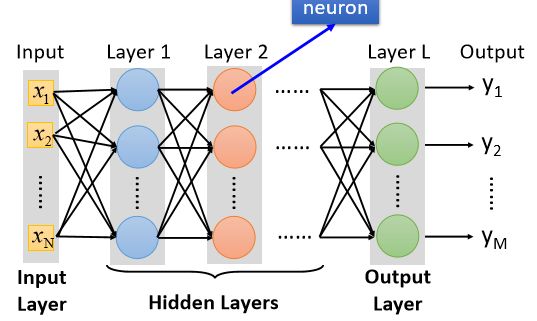

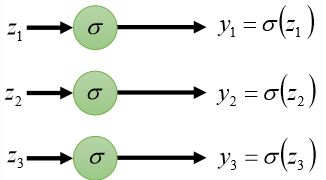

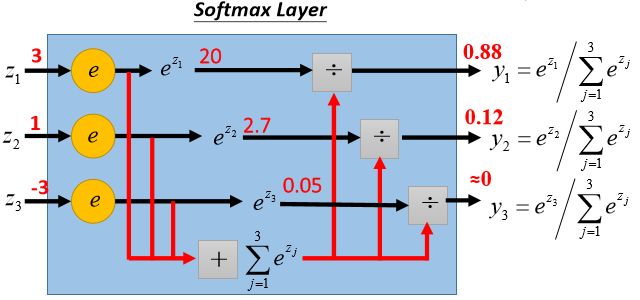

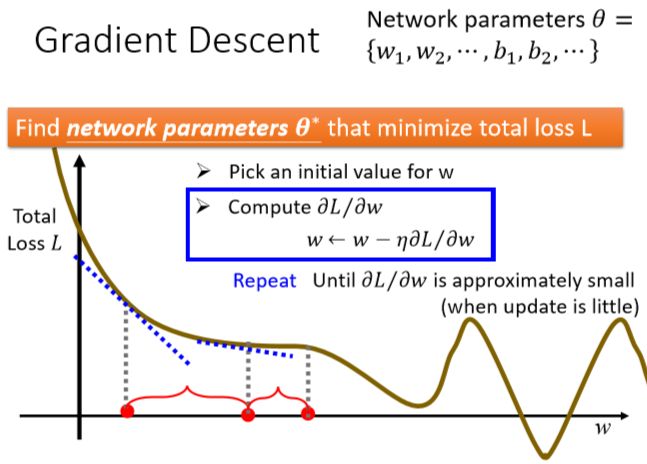

- What Is Multimodal Machine Learning: The Intelligent Frontier of Cross-Sensory Fusion

非凡暖阳

Artificial intelligence, Neural networks

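In the broad field of artificial intelligence, multimodal machine learning is a cutting-edge technology that is gradually opening new possibilities in human-computer interaction and information understanding. It goes beyond the limits of single-sense input by integrating vision, audio, text, and other data types into a richer, multi-dimensional cognitive model, giving machines comprehensive perception and understanding closer to that of humans. This article explores the definition, core principles, key techniques, challenges, and future applications of multimodal machine learning, aiming to give readers an outline of this…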

- Python | Imitation Writing of Text Content with the DeepSeek Large Model (8)

写python的鑫哥

AI large models in practice, Artificial intelligence, python, Large models, DeepSeek, Kimi, Text imitation

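Preface: This is the 8th article in this column; I will keep sharing practical knowledge about large AI models, so remember to follow. When working on text-data projects, we sometimes face requirements such as: given some sample text templates, imitate them or generate data in the style of the "template"; or, given keywords of a certain type, generate text material related to those keywords' domain. Assigning this purely to people certainly works, but it costs a lot of labor. Today, such needs can very well be handled by large models. In this article, the author will, based on…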

- Pixel-Space Text-to-Image Generation: Imagen Explained

funNLPer

AI, Algorithms, Imagen, stable diffusion, AIGC

Paper: Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding. Project page: https://imagen.research.google/ Code (unofficial): https://github.com/deep-floyd/IF Model weights: https://huggingface.co/DeepFloyd/IF-I-XL-v1.0

- Understanding ::v-deep

记得早睡~

vue.js, Frontend, javascript

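Vue style penetration: when I first started using the element-ui component library, I wanted to override its internal styles, but my changes never took effect. After some research I learned about deep selectors. If you want a selector in scoped styles to reach "deeper", for example to affect child components, you can use the >>> combinator: `.a >>> .b { width: 100%; height: 100%; background: red; }` However, preprocessors such as scss cannot parse >>>, so we use the following instead: `.a { /dee…`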

- Lanqiao Cup Past Problem: Common Factor Matching (Solution)

ExRoc

Lanqiao Cup, Algorithms, c++

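Problem link: https://www.lanqiao.cn/problems/3525/learning/ Personal rating: difficulty 2 stars (out of 5). Prerequisite: the harmonic series. Overall idea: the problem statement is not rigorous and does not say what to output when there is no solution (for example, $n$ ones), so we first assume the data guarantee a solution. Enumerate $x$ from $2$ to $10^6$ as a divisor, and for each divisor $x$ scan all multiples of $x$; the total work is $\frac{n}{2}+\frac{n}{3}+\frac{n}{4}+\cdots+\frac{n}{n}\approx n\ln n$…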
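A minimal Python sketch of this enumeration, assuming the task is to output the lexicographically smallest 1-based index pair $(i, j)$, $i<j$, whose values share a factor greater than $1$ (this reading of the problem is an assumption, since the statement is only summarized here). For a fixed divisor $x$, only the two smallest indices among values divisible by $x$ matter, so storing at most two indices per value keeps each step of the harmonic-series scan cheap.

```python
def common_factor_pair(a):
    """Smallest 1-based pair (i, j), i < j, with gcd(a[i], a[j]) > 1, or None.

    Scanning the multiples of every x in 2..M costs about M/2 + M/3 + ... + M/M,
    i.e. O(M log M) for M = max(a). Values are assumed to be positive integers.
    """
    if not a:
        return None
    m = max(a)
    # first_two[v]: up to two smallest indices whose value is exactly v
    first_two = [[] for _ in range(m + 1)]
    for idx, v in enumerate(a, start=1):
        if len(first_two[v]) < 2:
            first_two[v].append(idx)

    best = None
    for x in range(2, m + 1):
        smallest = []  # two smallest indices among multiples of x
        for multiple in range(x, m + 1, x):
            for idx in first_two[multiple]:
                smallest = sorted(smallest + [idx])[:2]
        if len(smallest) == 2:
            pair = (smallest[0], smallest[1])
            if best is None or pair < best:
                best = pair
    return best
```

Returning `None` covers the no-solution case (such as all ones) that the statement leaves undefined.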

- Lanqiao Cup Past Problem: Subtree Size (Solution)

ExRoc

Lanqiao Cup, Algorithms, c++

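Problem link: https://www.lanqiao.cn/problems/3526/learning/ Personal rating: difficulty 2 stars (out of 5). Prerequisites: none. Overall idea: subtract $1$ from every node label. Looking for a pattern, we find that the leftmost node one level below node $i$ is $im+1$ and the rightmost is $im+m$. Use $l, r$ to mark the smallest and largest node labels of the subtree on the current level, and each time move the leftmost node down to the next level's…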
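The level-by-level idea can be sketched in Python as follows, assuming a complete $m$-ary tree with $n$ nodes numbered from $1$ in level order and $m \ge 2$ (both assumptions about the problem statement, which is only summarized here). Each iteration counts the nodes of the current level that actually exist, then jumps from $[l, r]$ to $[lm+1,\ rm+m]$.

```python
def subtree_size(n, m, k):
    """Number of nodes in the subtree of node k (1-based) in a complete
    m-ary tree with n nodes numbered level by level, left to right.

    With 0-based labels, the children of node i are i*m + 1 .. i*m + m.
    Assumes m >= 2, so the loop runs O(log_m n) times.
    """
    last = n - 1       # largest 0-based label that exists
    l = r = k - 1      # current level of the subtree, 0-based
    size = 0
    while l <= last:
        size += min(r, last) - l + 1   # the last level may be partially filled
        l, r = l * m + 1, r * m + m
    return size
```

For instance, in a complete binary tree with 7 nodes, node 2 has a subtree of 3 nodes (itself plus nodes 4 and 5).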

- C# Meets TensorFlow.NET: Opening a New Era of Machine Learning

墨夶

C# learning materials 1, Machine learning, c#, tensorflow

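In today's fast-moving tech world, machine learning (ML) has become an important driver of innovation. From personalized recommendation systems to self-driving cars, ML applications are everywhere. For programmers used to developing in C#, integrating machine learning into their projects can seem daunting. With TensorFlow.NET, however, this is no longer difficult. Today we will explore how to use this powerful tool to easily build, train, and…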

- 【JVM】G1 GC Logs Explained

一棵___大树

JVM, jvm

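G1 GC Logs Explained. GitHub homepage: https://github.com/A-BigTree Notes: https://github.com/A-BigTree/Code_Learning If you can, please give it a star~ Contents: G1 GC Logs Explained; 1 The G1 GC cycle; 2 Enabling and configuring G1 logs; 3 Young GC logs; 4 Mixed GC; 5 Full GC. Prerequisite knowledge about the G1 collector: 【JVM】Deep understanding…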

- NLP Chinese Spelling Check and Correction Papers 04: Learning from the Dictionary

Backend, java

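Spelling correction series: NLP Chinese spelling check implementation ideas; NLP Chinese spelling check and correction algorithms; NLP English spelling algorithm: how to improve performance 1,000,000-fold?; NLP Chinese spelling check and correction papers; Implementing Chinese and English spell checking and correction in Java? But I can only write CRUD!; An algorithm that speeds up English word spell checking 1000-fold?; Word spelling correction 03: LeetCode edit-distance, 72. Edit Distance; NLP open-source projects: nlp-hanzi-similar (Chinese character similarity), word-…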

- 【Solved】ImportError: libnvinfer.so.8: cannot open shared object file: No such file or directory

小小小小祥

python

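Problem description: after installing TensorRT by following the official installation guide (https://docs.nvidia.com/deeplearning/tensorrt/install-guide/index.html#installing-tar), I tested importing it from Python with `import tensorrt`. That code fails with: Traceback (most recent call last): File "main.py", li…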
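A frequent reason for this error after a tar-based install is that the directory containing `libnvinfer.so.8` is not on the dynamic loader's search path; NVIDIA's tar instructions have you add the TensorRT `lib` directory to `LD_LIBRARY_PATH`. The helper below is a hypothetical diagnostic sketch, not the fix from the original post (which is cut off here): it checks only the directories listed in `LD_LIBRARY_PATH` and does not emulate `ld.so.cache` or the default system paths.

```python
import os


def dirs_containing(filename, search_dirs=None):
    """Return the directories from `search_dirs` that contain `filename`.

    By default the entries of LD_LIBRARY_PATH are searched. The real dynamic
    loader also consults /etc/ld.so.cache and default system directories,
    which this sketch does not look at.
    """
    if search_dirs is None:
        raw = os.environ.get("LD_LIBRARY_PATH", "")
        search_dirs = [d for d in raw.split(os.pathsep) if d]
    return [d for d in search_dirs if os.path.isfile(os.path.join(d, filename))]


if __name__ == "__main__":
    hits = dirs_containing("libnvinfer.so.8")
    print(hits or "libnvinfer.so.8 not found in LD_LIBRARY_PATH")
```

Because the loader reads `LD_LIBRARY_PATH` when the process starts, changing `os.environ` inside an already-running interpreter does not help; export the variable in the shell before launching Python.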

- ASPICE 4.0 Leading the Future of Autonomous Driving: Characteristics and Practice of Machine Learning Models

亚远景aspice

Machine learning, Autonomous driving, Artificial intelligence

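ASPICE 4.0-ML (machine learning models) refers to the specific standards and processes for machine learning (ML) applications in the automotive industry, particularly in automotive software development. ASPICE (Automotive SPICE) is an international standard for the development processes of software-controlled systems, aimed at improving the quality, efficiency, and reliability of software development processes. The ML model part of ASPICE 4.0 further details the specific requirements and processes for machine learning in automotive software development. The following is an overview of ASPICE 4.0-…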

- Running Ansys APDL with Python

ssssasda

ansys, apdl, Stream processing, Batch processing, python

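Ansys stream processing: 1. Learning resources 2. Version requirements 3. PyMAPDL installation 4. Initial setup and launching MAPDL locally 5. PyMAPDL syntax 6. Tool libraries 7. Interfaces for interacting with Windows. 1. Learning resources: Ansys website: https://www.ansys.com/zh-cn Ansys Academic: https://www.ansys.com/zh-cn/academic Ansys Learning Forum (An…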

- DeepSeek V3: A New-Generation Open-Source AI Model with Outstanding Multilingual Programming Ability

that's boy

Artificial intelligence, chatgpt, openai, claude, midjourney, deepseek-v3

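DeepSeek V3 has burst onto the scene, drawing wide industry attention with its strong multilingual programming ability and advanced technical architecture. This latest AI model not only delivers a leap in performance but also follows an open-source strategy, giving developers more room to explore. This article analyzes DeepSeek V3's technical principles, main features, performance, and use cases to give you a full picture of this new-generation AI model. Core highlights of DeepSeek V3: DeepSeek V3 is a large language model based on the Mixture-of-Experts (MoE) architecture,…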
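To illustrate what a Mixture-of-Experts layer does in general, here is a minimal top-k routing sketch in plain Python: a linear gate scores every expert, only the `top_k` best-scoring experts are evaluated, and their outputs are mixed with softmax weights over the selected scores. This is a generic textbook MoE, not DeepSeek V3's actual router, whose gating function and load-balancing scheme differ; the experts and gate weights used in the checks are made up.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, experts, gate_weights, top_k=2):
    """Mix the outputs of the top_k experts selected by a linear gate.

    experts: callables mapping a vector (list of floats) to a same-length vector.
    gate_weights: one weight vector per expert; expert i scores dot(gate_weights[i], x).
    Returns (output vector, indices of the experts that were evaluated).
    """
    scores = [sum(w * xi for w, xi in zip(weights, x)) for weights in gate_weights]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    mix = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for weight, i in zip(mix, chosen):
        y = experts[i](x)  # only the selected experts are run
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen
```

Because only `top_k` experts run per input, compute grows with `top_k` rather than with the total number of experts, which is the main appeal of MoE models with very large parameter counts.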

- Algorithm

香水浓

java, Algorithm

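Bubble sort

public static void sort(Integer[] param) {

for (int i = param.length - 1; i > 0; i--) { // after each pass, the largest remaining value sits at index i

for (int j = 0; j < i; j++) {

int current = param[j];

int next = param[j + 1];

if (current > next) { param[j] = next; param[j + 1] = current; } // swap adjacent out-of-order elements

}

}

}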

- Complex Query Expressions in MongoDB

开窍的石头

mongodb

1: count

E.g.: db.user.find().count();

Counts how many documents there are.

2: Not equal: $ne

E.g.: db.user.find({_id:{$ne:3}},{name:1,sex:1,_id:0});

Finds the documents whose _id is not 3, returning only name and sex.

3: Greater than: $gt, $gte (greater than or equal)

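To make the semantics of these operators concrete without a running server, here is a tiny in-memory Python evaluator for plain equality, `$ne`, `$gt`, `$gte`, and an inclusion projection. It is a toy, not the MongoDB query engine: it assumes compared values have mutually comparable types (MongoDB instead orders values across BSON types), though it does reproduce the fact that `$ne` also matches documents where the field is missing. The function names and sample documents are made up.

```python
def _matches(doc, query):
    """True if `doc` satisfies `query` (plain equality or $ne/$gt/$gte per field)."""
    ops = {
        "$ne": lambda value, arg: value != arg,
        # a missing field (None here) never satisfies $gt / $gte
        "$gt": lambda value, arg: value is not None and value > arg,
        "$gte": lambda value, arg: value is not None and value >= arg,
    }
    for field, cond in query.items():
        value = doc.get(field)
        if isinstance(cond, dict):
            if not all(ops[op](value, arg) for op, arg in cond.items()):
                return False
        elif value != cond:
            return False
    return True


def _project(doc, projection):
    """Apply an inclusion projection such as {"name": 1, "_id": 0}."""
    if not projection:
        return dict(doc)
    out = {}
    if projection.get("_id", 1) and "_id" in doc:
        out["_id"] = doc["_id"]  # _id is kept unless explicitly excluded
    for field, flag in projection.items():
        if flag and field != "_id" and field in doc:
            out[field] = doc[field]
    return out


def run_find(docs, query=None, projection=None):
    """Toy counterpart of db.collection.find(query, projection)."""
    query = query or {}
    return [_project(d, projection) for d in docs if _matches(d, query)]
```

Here `len(run_find(users))` plays the role of `db.user.find().count()`, and `run_find(users, {"_id": {"$ne": 3}}, {"name": 1, "sex": 1, "_id": 0})` mirrors the `$ne` example.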

- Fixing the "Java heap space" Error in JBoss; JBoss OutOfMemoryError: PermGen space

0624chenhong

jvm, jboss

Reposted from

http://blog.csdn.net/zou274/article/details/5552630

Solution:

Window -> Preferences -> Java -> Installed JREs -> Edit JRE

Set the Default VM Arguments to -Xms64m -Xmx512m

----------------

- File Upload, Download, Parsing, and Relative Paths

不懂事的小屁孩

File upload

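This was a bit of a trap: it took me more than a day to get such a simple thing working, even with an expert guiding me and plenty of searching online.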

Here is a summary of the problems I ran into:

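When uploading a file from a page, do not try to work with absolute paths: the browser protects client-side information so that user data is not exposed to attacks.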

To upload images or files, use a form.

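The front end sends a stream to the back end through a form, rather than passing parameters to the back end via ajax. The code is as follows: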

<form action=

- How to Batch-Like Posts in QQ Zone

换个号韩国红果果

qq

Purely for fun!!

The logic is simple.

1. Open the browser console and enter the following code.

First, the code that adds likes:

var tools={};

// add likes to all posts

function init(){

document.body.scrollTop=10000;

setTimeout(function(){document.body.scrollTop=0;},2000);//加

- Checking Whether Characters Are Chinese

灵静志远

Chinese

Method 1:

public class Zhidao {

public static void main(String args[]) {

String s = "sdf灭礌 kjl d{';\fdsjlk是";

int n=0;

for(int i=0; i<s.length(); i++) {

n = (int)s.charAt(i);

if((
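Method 1 appears to test each character's numeric code point against a range. The same idea in Python, assuming the range of interest is the CJK Unified Ideographs block U+4E00 to U+9FFF (the original's exact bounds are cut off): characters from the CJK extension blocks and full-width Chinese punctuation such as '，' are deliberately not counted.

```python
CJK_START, CJK_END = "\u4e00", "\u9fff"  # CJK Unified Ideographs block


def is_chinese(ch):
    """True if the single character `ch` lies in U+4E00..U+9FFF."""
    return CJK_START <= ch <= CJK_END


def count_chinese(text):
    """Count the characters of `text` that fall in the block above."""
    return sum(1 for ch in text if is_chinese(ch))
```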

- Notes After a Phone Interview

a-john

Interview

Today I had a phone interview; as a beginner, I was nervous for quite a while.

The questions were layered: within each category they went from easy to hard. Here is a summary of the parts I felt I answered poorly:

When asked to name a few commonly used collection classes, I answered list and map without thinking.

Then I was asked to name a few implementations each of list and map:

For list: ArrayList and LinkedList. When asked about their differences, I froze.

- Using ESCAPE in MSSQL

aijuans

MSSQL

IF OBJECT_ID('tempdb..#ABC') is not null

drop table tempdb..#ABC

create table #ABC

(

PATHNAME NVARCHAR(50)

)

insert into #ABC

SELECT N'/ABCDEFGHI'

UNION ALL SELECT N'/ABCDGAFGASASSDFA'

UNION ALL
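A runnable illustration of what ESCAPE does, using Python's built-in `sqlite3` instead of SQL Server (a swapped-in engine; T-SQL additionally supports bracket escapes such as `[_]`, which SQLite does not). In a LIKE pattern `_` matches any single character, so finding a literal underscore requires declaring an escape character, here `!`. The path values are hypothetical, modeled on the rows above.

```python
import sqlite3


def matching_paths(paths, pattern, escaped=False):
    """Return the values from `paths` that match the LIKE `pattern`.

    When `escaped` is True the query adds ESCAPE '!', so '!_' in the pattern
    stands for a literal underscore instead of "any single character".
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE abc (pathname TEXT)")
        conn.executemany("INSERT INTO abc (pathname) VALUES (?)", [(p,) for p in paths])
        sql = "SELECT pathname FROM abc WHERE pathname LIKE ?"
        if escaped:
            sql += " ESCAPE '!'"
        sql += " ORDER BY rowid"  # keep insertion order
        return [row[0] for row in conn.execute(sql, (pattern,))]
    finally:
        conn.close()
```

Without the escape, `'%_%'` matches every non-empty path; with `'%!_%'` and `ESCAPE '!'`, only paths containing an actual underscore are returned.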

- A Simple Stored Procedure

asialee

mysql, Stored procedures, Test data generation, Batch insert

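Today I needed to generate a batch of test data in which some fields vary. I first planned to write a program for it, but then realized a stored procedure could do the job, so I quickly wrote one and am recording it here: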

DELIMITER $$

DROP PROCEDURE IF EXISTS inse

- Cannot convert from HomeFragment_1 to Fragment

百合不是茶

android, Import errors

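I created several classes that extend Fragment and needed to store them in an ArrayList<Fragment>, but the newly created objects could not be added to the list. The reason is simple: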

When creating the classes, the imported package was: import android.app.Fragment;

When creating the list and the objects, the package used was: import android.support.v4.ap

- Two Ways to Change the Port in WebLogic 10

bijian1013

weblogic, Port number, Configuration management, config.xml

1. Change it in the console: 1. Open the console: http://127.0.0.1:7001/console 2. Expand the tree menu on the left: Domain Structure -> Environment -> Servers -> click AdminServer (admin)

- MySQL Commands

征客丶

mysql

1. Connecting to MySQL

Go to the MySQL installation directory;

$ bin/mysql -h [host IP; when logging in to a local MySQL you can omit -h and start directly with -u] -u [userName] -p

Enter the password and press Enter to connect;

2. Permission operations [once you know MySQL well, you can modify the system tables directly and then run mysql> flush privileges; to make the permissions take effect]

1. Granting privileges

mys

- 【Hive 1】Getting Started with Hive

bit1129

hive

Hive installation and configuration

Hive depends on Hadoop, so Hadoop 2.5.2 must be installed first, and Hadoop must be started before Hive.

Steps to install and configure Hive

1. Download Hive 0.14.0 from the following address

http://mirror.bit.edu.cn/apache/hive/

2. Extract Hive, and in the system vari…

- Three Ways to Submit Requests with ajax

BlueSkator

Ajax, jquery

1. Submitting a request with $.ajax

$.ajax({

type:"post",

url : "${ctx}/front/Hotel/getAllHotelByAjax.do",

dataType : "json",

success : function(result) {

try {

for(v

- Getting Started: Setting Up MongoDB for a Development Environment

braveCS

Operations

Installing MongoDB on Linux

1) Download mongodb-linux-x86_64-rhel62-3.0.4.gz from the official website

2) Extract it on Linux

gzip -d mongodb-linux-x86_64-rhel62-3.0.4.gz;

mv mongodb-linux-x86_64-rhel62-3.0.4 mongodb-linux-x86_64-rhel62-

- Beauty of Programming: Generating the Shortest Abstract

bylijinnan

java, Data structures, Algorithms, Beauty of Programming

import java.util.HashMap;

import java.util.Map;

import java.util.Map.Entry;

public class ShortestAbstract {

/**

* Beauty of Programming: generating the shortest abstract

* The scan always maintains a range [pBegin, pEnd]; initialization ensures that the range [pBegin, pEnd]…
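The [pBegin, pEnd] range is a sliding window. Below is a compact Python sketch of that technique, written independently of the truncated Java above: it extends the window's end until every keyword is covered, then shrinks it from the left while coverage still holds, recording the shortest window seen. Keywords are compared as whole words, and the word lists in the checks are made up.

```python
from collections import Counter


def shortest_abstract(words, keywords):
    """Return (begin, end), inclusive, of the shortest run of `words` that
    contains every keyword, or None if some keyword never occurs."""
    need = set(keywords)
    if not need:
        return None
    counts = Counter()  # keyword occurrences inside the current window
    covered = 0         # number of distinct keywords currently in the window
    best = None
    begin = 0
    for end, word in enumerate(words):
        if word in need:
            counts[word] += 1
            if counts[word] == 1:
                covered += 1
        # shrink from the left while the window still covers every keyword
        while covered == len(need):
            if best is None or end - begin < best[1] - best[0]:
                best = (begin, end)
            left = words[begin]
            if left in need:
                counts[left] -= 1
                if counts[left] == 0:
                    covered -= 1
            begin += 1
    return best
```

Each word enters and leaves the window at most once, so the scan is linear in the number of words.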

- Parsing JSON Data and typeof

chengxuyuancsdn

js, typeof, JSON parsing

// JSON format

var people='{"authors": [{"firstName": "AAA","lastName": "BBB"},'

+' {"firstName": "CCC"
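`typeof` is what distinguishes the raw JSON text (a string) from the parsed value (an object). As a cross-language illustration, the Python sketch below parses the same kind of string with `json.loads` and maps each resulting value to the name JavaScript's `typeof` would report. The mapping helper is hypothetical, and the sample data is limited to the first author shown above. Note that in JavaScript `typeof` also returns "object" for arrays and for `null`.

```python
import json


def js_typeof(value):
    """Name JavaScript's typeof would give the equivalent JSON value."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "boolean"
    if isinstance(value, (int, float)):
        return "number"
    if isinstance(value, str):
        return "string"
    return "object"  # dict, list and None all map to "object" in JavaScript


people = '{"authors": [{"firstName": "AAA", "lastName": "BBB"}]}'
parsed = json.loads(people)  # before parsing it is text, afterwards a dict
```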

- Levels and Goals of Workflow System Design

comsci

Design patterns, Data structures, sql, Frameworks, Scripts


- RMAN LIST and REPORT Commands

daizj

oracle, list, report, rman

LIST command

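Use the RMAN LIST command to display information about the backup sets, proxy copies, and image copies recorded in the repository. Use this command to list: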

• Backups and copies in the RMAN repository whose status is not AVAILABLE

• Data file backups and copies that are available and can be used for restore operations

• Backup sets and copies that contain backups of a specified list of data files or a specified tablespace

• Backup sets and copies that contain backups of all archived logs with a specified name or range

• By tag, completion time, rec…

- Binary Trees: Red-Black Trees

dieslrae

Binary trees

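A red-black tree is a self-balancing binary search tree whose search, insertion, and deletion all run in O(log N) time, so it avoids the worst case of an ordinary binary search tree, whose time complexity degrades to O(N).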

A red-black tree must follow the red-black rules, which are:

1. Every node is either red or black. 2. The root is always black. …
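The excerpt stops after the second rule. Assuming the remaining rules are the standard ones (NIL leaves count as black, a red node never has a red child, and every root-to-leaf path contains the same number of black nodes), a checker can be sketched in Python as follows. It only validates an existing tree for illustration; it is not an insertion or deletion routine, and the `Node` class is a hypothetical minimal representation.

```python
class Node:
    """Binary search tree node; `red` is False for black nodes."""

    def __init__(self, key, red=False, left=None, right=None):
        self.key, self.red, self.left, self.right = key, red, left, right


def _black_height(node):
    """Black height of the subtree at `node`; None is a black NIL leaf.

    Raises ValueError if a red node has a red child or if the left and right
    subtrees have different black heights.
    """
    if node is None:
        return 1
    for child in (node.left, node.right):
        if node.red and child is not None and child.red:
            raise ValueError(f"red node {node.key} has a red child")
    left, right = _black_height(node.left), _black_height(node.right)
    if left != right:
        raise ValueError(f"unequal black heights below {node.key}")
    return left + (0 if node.red else 1)


def _keys_in_order(node):
    if node is None:
        return []
    return _keys_in_order(node.left) + [node.key] + _keys_in_order(node.right)


def is_red_black(root):
    """Check binary-search-tree ordering plus the red-black rules."""
    keys = _keys_in_order(root)
    if any(a >= b for a, b in zip(keys, keys[1:])):
        return False  # not a binary search tree (duplicate keys not allowed here)
    if root is not None and root.red:
        return False  # rule 2: the root must be black
    try:
        _black_height(root)
    except ValueError:
        return False
    return True
```

Equal black heights plus the no-red-red rule are what bound the height of the tree to about 2·log2(N+1), which is where the O(log N) guarantee comes from.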

- C Language homework3: Code for 7 Small Exercises

dcj3sjt126com

c

1. Print all odd numbers up to 100.

# include <stdio.h>

int main(void)

{

int i;

for (i=1; i<=100; i++)

{

if (i%2 != 0)

printf("%d ", i);

}

return 0;

}

2. Read 10 integers from the keyboard,

- Custom Button: Image on Top, Text Below, Centered

dcj3sjt126com

Customization

#import <UIKit/UIKit.h>

@interface MyButton : UIButton

-(void)setFrame:(CGRect)frame ImageName:(NSString*)imageName Target:(id)target Action:(SEL)action Title:(NSString*)title Font:(CGFloa

- MySQL Query Exercises, Enough for Testing

flyvszhb

sql, mysql

http://blog.sina.com.cn/s/blog_767d65530101861c.html

1. Create the student and score tables

CREATE TABLE student (

id INT(10) NOT NULL UNIQUE PRIMARY KEY ,

name VARCHAR

- Repost: MyBatis Generator Explained

happyqing

mybatis

MyBatis Generator Explained

http://blog.csdn.net/isea533/article/details/42102297

MyBatis Generator Explained

http://git.oschina.net/free/Mybatis_Utils/blob/master/MybatisGeneator/MybatisGeneator.

- 14 Pieces of Advice to Help Programmers Avoid Detours

jingjing0907

Work, Planning, Learning

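Whoever you are, when you first enter a field, even the greatest ambition cannot overcome the confusion in front of you: you don't know how to do things or what to do. Below are lessons learned by a software developer; I hope they help.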

1. Don't be afraid to learn on the job.

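As long as you have a computer, you can read newspapers and most books with an e-reader. If you only do your own job and the tasks assigned to you, you won't learn much. If you blindly ask for more work, you won't improve yourself either. Put…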

- Differences Between nginx and NetScaler

流浪鱼

nginx

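1. NetScaler is a complete product that includes both an operating system and application delivery functions. Nginx does not include an operating system and depends on one for connection handling, so Nginx has no advantage in concurrent connection capacity or DoS protection.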

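2. Ease of use also differs considerably. Nginx demands a high level of skill from administrators and has many parameters, and this uncertainty creates operational risk. Configurations that are routine on NetScaler, such as health checks and HA, are relatively complex to implement on Nginx.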

3. In terms of policy flexibility…

- Chapter 11: Animation Effects (Part 2)

onestopweb

Animation

index.html

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/

- FAQ - SAP BW BO roadmap

blueoxygen

BO, BW

http://www.sdn.sap.com/irj/boc/business-objects-for-sap-faq

Besides, I care about how to integrate them tightly.

By the way, for BW consultants, please just focus on Query Designer which i

- Several Cases of Java Heap Memory Overflow

tomcat_oracle

java, jvm, jdk, thread

【Case 1】:

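java.lang.OutOfMemoryError: Java heap space: the Java heap is insufficient. One cause is that it really is too small; another is an infinite loop in the program. If the heap really is too small, it can be fixed by adjusting the following JVM settings: <jvm-arg>-Xms3062m</jvm-arg> <jvm-arg>-Xmx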

- Manifest.permission_group Permission Groups

阿尔萨斯

Permission

Structure

Inheritance

public static final class Manifest.permission_group extends Object

java.lang.Object

android.Manifest.permission_group constants

ACCOUNTS: permissions for direct access to the accounts managed by the account manager

COST_MONEY: used for permissions that can be used to make the user spend money without their direct involvement

D