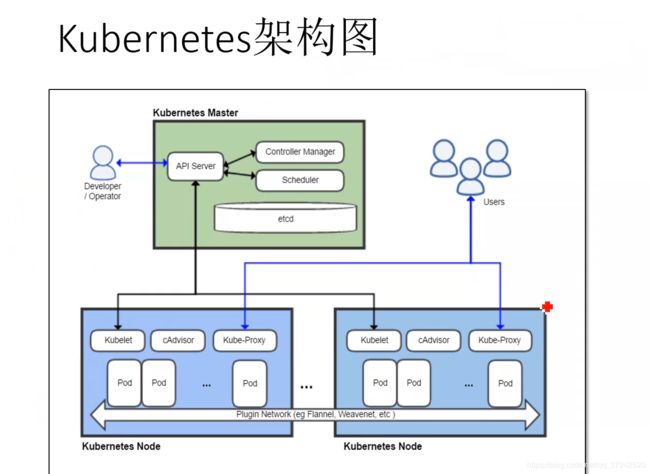

k8s architecture

Installing a k8s cluster

Official documentation and references

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.13.md#downloads-for-v1131

https://kubernetes.io/docs/home/?path=users&persona=app-developer&level=foundational

https://github.com/etcd-io/etcd

https://shengbao.org/348.html

https://github.com/coreos/flannel

http://www.cnblogs.com/blogscc/p/10105134.html

https://blog.csdn.net/xiegh2014/article/details/84830880

https://blog.csdn.net/tiger435/article/details/85002337

https://www.cnblogs.com/wjoyxt/p/9968491.html

https://blog.csdn.net/zhaihaifei/article/details/79098564

http://blog.51cto.com/jerrymin/1898243

http://www.cnblogs.com/xuxinkun/p/5696031.html

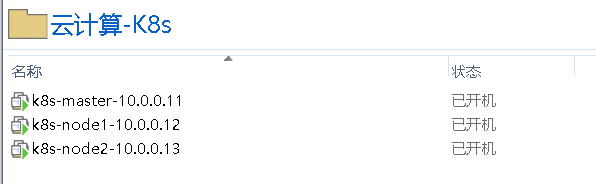

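Environment preparation

#Prepare three fresh, pre-tuned virtual machines

#Allocate memory according to your host machine; give each VM at least 1 GB

10.0.0.11 k8s-master 1G

10.0.0.12 k8s-node1 1G

10.0.0.13 k8s-node2 1G

#Every node needs hosts-file name resolution

[root@k8s-master ~]# vim /etc/hosts

10.0.0.11 k8s-master

10.0.0.12 k8s-node1

10.0.0.13 k8s-node2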

scp -rp /etc/hosts 10.0.0.12:/etc/hosts

scp -rp /etc/hosts 10.0.0.13:/etc/hosts
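The hosts step above can be made safe to re-run. This is a minimal sketch that assumes the three IP/hostname pairs from the table above, using the `k8s-node1`/`k8s-node2` names that later appear in `kubectl get node`. `add_hosts` is a hypothetical helper. The target file is a parameter, so you can try it on a scratch copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the cluster's hosts entries to a file,
# skipping any entry that is already present, so re-running is harmless.
add_hosts() {
  local file="${1:-/etc/hosts}"
  local entry
  for entry in "10.0.0.11 k8s-master" \
               "10.0.0.12 k8s-node1" \
               "10.0.0.13 k8s-node2"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    grep -qxF "$entry" "$file" 2>/dev/null || echo "$entry" >>"$file"
  done
}

# Usage on the master, then copy the file to both nodes:
#   add_hosts /etc/hosts
#   for ip in 10.0.0.12 10.0.0.13; do scp -p /etc/hosts "$ip":/etc/hosts; done
```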

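After some basic tuning, take a snapshot and clone the VMs

#Local hosts override for mirrors.aliyun.com; this applies only to the author's own network environment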

rm -rf /etc/yum.repos.d/local.repo

echo "192.168.37.202 mirrors.aliyun.com" >>/etc/hosts

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

Configuration on k8s-master

yum install etcd -y

vim /etc/etcd/etcd.conf

Line 6: ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

Line 21: ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.11:2379"

systemctl restart etcd.service

systemctl enable etcd.service

netstat -lntup

127.0.0.1:2380

:::2379

Extension: query etcd values with an HTTP request
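Here is a minimal sketch of such a query. It assumes the etcd installed above serves the v2 keys API on port 2379, which is the API that `etcdctl mk` and flannel use in this setup. `etcd_key_url`, `etcd_get` and `etcd_put` are hypothetical helper names, and only `curl` is required.

```shell
#!/usr/bin/env bash
# Build the etcd v2 keys-API URL for a key (hypothetical helper).
etcd_key_url() {
  local host="$1" key="${2#/}"   # drop one leading slash from the key
  printf 'http://%s:2379/v2/keys/%s\n' "$host" "$key"
}

# GET a key; etcd answers with a JSON document containing the value.
etcd_get() {
  curl -s "$(etcd_key_url "$1" "$2")"
}

# PUT a key; the v2 API takes the value as a form field named "value".
etcd_put() {
  curl -s -X PUT "$(etcd_key_url "$1" "$2")" -d value="$3"
}

# Usage (needs a reachable etcd):
#   etcd_put 10.0.0.11 /test/hello world
#   etcd_get 10.0.0.11 /test/hello
#   etcd_get 10.0.0.11 /atomic.io/network/config   # the flannel key set later
```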

Installing Kubernetes on the master node

#Install kubernetes-master

[root@k8s-master ~]# yum install -y kubernetes-master.x86_64

#Edit the apiserver configuration file

[root@k8s-master ~]# vim /etc/kubernetes/apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

KUBE_API_PORT="--port=8080"

KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.11:2379"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

[root@k8s-master ~]# vim /etc/kubernetes/config

KUBE_MASTER="--master=http://10.0.0.11:8080"

#Restart the services

systemctl restart kube-apiserver.service

systemctl enable kube-apiserver.service

systemctl restart kube-controller-manager.service

systemctl enable kube-controller-manager.service

systemctl restart kube-scheduler.service

systemctl enable kube-scheduler.service

#Check that every component is healthy

[root@k8s-master ~]# kubectl get componentstatus

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}
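You can filter this table to spot a broken component at a glance. This small sketch assumes the four-column layout shown above (NAME, STATUS, MESSAGE, ERROR). `unhealthy_components` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Read `kubectl get componentstatus` output on stdin and print the NAME of
# every component whose STATUS column is not "Healthy" (header skipped).
unhealthy_components() {
  awk 'NR > 1 && NF >= 2 && $2 != "Healthy" { print $1 }'
}

# Usage (any output means a component needs attention):
#   kubectl get componentstatus | unhealthy_components
```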

Installing Kubernetes on the node machines

yum install kubernetes-node.x86_64 -y

vim /etc/kubernetes/config

Line 22: KUBE_MASTER="--master=http://10.0.0.11:8080"

vim /etc/kubernetes/kubelet

Line 5: KUBELET_ADDRESS="--address=0.0.0.0"

Line 8: KUBELET_PORT="--port=10250"

Line 11: KUBELET_HOSTNAME="--hostname-override=10.0.0.12"

(On k8s-node2 use 10.0.0.13; each node sets its own address or hostname here.)

Line 14: KUBELET_API_SERVER="--api-servers=http://10.0.0.11:8080"

#Restart the services

systemctl enable kubelet.service

systemctl restart kubelet.service

systemctl enable kube-proxy.service

systemctl restart kube-proxy.service

#docker has been started as well

systemctl status docker

Verify on k8s-master

[root@k8s-master ~]# kubectl get node

NAME STATUS AGE

k8s-node1 Ready 2m

k8s-node2 Ready 6s

Configure the flannel network on all nodes

yum install flannel -y

sed -i 's#http://127.0.0.1:2379#http://10.0.0.11:2379#g' /etc/sysconfig/flanneld

##On the master node:

etcdctl mk /atomic.io/network/config '{ "Network": "172.18.0.0/16" }'

yum install docker -y

systemctl enable flanneld.service

systemctl restart flanneld.service

service docker restart

systemctl enable docker

systemctl restart kube-apiserver.service

systemctl restart kube-controller-manager.service

systemctl restart kube-scheduler.service
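flannel splits the `172.18.0.0/16` network into one smaller subnet per host. That is why pod IPs later look like `172.18.42.x` on one node and `172.18.49.x` on the other. This sketch shows the arithmetic and assumes flannel's default `SubnetLen` of 24, since the config above does not set it. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: number of per-host subnets a flannel network yields,
# given the network prefix length and flannel's SubnetLen (default 24).
flannel_subnet_count() {
  local net_prefix="$1" subnet_len="${2:-24}"
  echo $(( 1 << (subnet_len - net_prefix) ))
}

# Addresses in one per-host subnet (network and broadcast included).
subnet_size() {
  echo $(( 1 << (32 - $1) ))
}

# For "Network": "172.18.0.0/16" with the default SubnetLen of 24:
#   flannel_subnet_count 16   -> 256 per-host subnets (172.18.0.0/24 ... 172.18.255.0/24)
#   subnet_size 24            -> 256 addresses per host
# The lease a host received is recorded in /run/flannel/subnet.env on that host.
```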

##On the node machines:

systemctl enable flanneld.service

systemctl restart flanneld.service

service docker restart

systemctl restart kubelet.service

systemctl restart kube-proxy.service

vim /usr/lib/systemd/system/docker.service

#Add this line under the [Service] section

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

systemctl daemon-reload

systemctl restart docker
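Editing `/usr/lib/systemd/system/docker.service` works, but that file belongs to the docker package and can be overwritten on upgrade. This sketch applies the same rule as a systemd drop-in instead. It assumes the standard `/etc/systemd/system/docker.service.d/` override directory. The helper name and the file name `forward-accept.conf` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: put the FORWARD rule in a systemd drop-in instead of
# editing the packaged unit file, which package upgrades may replace.
write_forward_dropin() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir"
  cat >"$dir/forward-accept.conf" <<'EOF'
[Service]
ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT
EOF
}

# Usage (as root):
#   write_forward_dropin
#   systemctl daemon-reload && systemctl restart docker
#   iptables -S FORWARD | head -n 1    # expect: -P FORWARD ACCEPT
```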

====================================================

#Run on all three machines: download and load a local busybox image

wget http://192.168.37.202/linux59/docker_busybox.tar.gz

docker load -i docker_busybox.tar.gz

#On all nodes, set the firewall rule and make it persistent

iptables -P FORWARD ACCEPT

vim /usr/lib/systemd/system/docker.service

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

systemctl daemon-reload

#Reboot all nodes

reboot

#Start a container on every node and test whether the containers can ping each other

docker run -it docker.io/busybox:latest

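Why add the iptables rule: Docker 1.13 and later set the default policy of the iptables FORWARD chain to DROP, which blocks container traffic forwarded between hosts over flannel.

Ruan Yifeng: Systemd 入门教程 (an introductory Systemd tutorial)

Configure the master as an image registry

On all nodes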

vim /etc/sysconfig/docker

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --registry-mirror=https://registry.docker-cn.com --insecure-registry=10.0.0.11:5000'

systemctl restart docker

On the master node

[root@k8s-master ~]# vim /etc/docker/daemon.json

{
  "registry-mirrors": ["https://registry.docker-cn.com"],
  "insecure-registries": ["10.0.0.11:5000"]
}

#Upload the registry.tar.gz image

#Download link (extraction code: h9cg):

#https://pan.baidu.com/s/1OONeJ_pa1WnYjkvdYqjLnw

#Start the registry container

docker run -d -p 5000:5000 --restart=always --name registry -v /opt/myregistry:/var/lib/registry registry

On a node

#Tag and push the image

docker images

docker tag docker.io/busybox:latest 10.0.0.11:5000/busybox:latest

docker images

docker push 10.0.0.11:5000/busybox:latest
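The same tag-then-push pattern repeats for every image in this tutorial: busybox, nginx, pod-infrastructure and heapster. This sketch derives the registry name by stripping a leading `docker.io/` and assumes the registry at `10.0.0.11:5000`. `retag_name` and `push_to_registry` are hypothetical helpers. `/v2/_catalog` is the Docker Registry HTTP API v2 endpoint that lists repositories.

```shell
#!/usr/bin/env bash
# Hypothetical helper: name of an image inside the private registry,
# e.g. docker.io/busybox:latest -> 10.0.0.11:5000/busybox:latest
retag_name() {
  local image="$1" registry="${2:-10.0.0.11:5000}"
  echo "$registry/${image#docker.io/}"
}

# Tag and push in one step (needs docker and the registry started above).
push_to_registry() {
  local target
  target="$(retag_name "$1" "$2")"
  docker tag "$1" "$target" && docker push "$target"
}

# Usage:
#   push_to_registry docker.io/nginx:1.13
#   curl -s http://10.0.0.11:5000/v2/_catalog    # list repositories in the registry
```

The manual pod-infrastructure step renames `tianyebj/` to `rhel7/`. This helper does not do that; it keeps the original repository path.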

Check on the master node

[root@k8s-master ~]# ll /opt/myregistry/docker/registry/v2/repositories/

total 0

drwxr-xr-x 5 root root 55 Sep 11 12:18 busybox

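What is k8s, and what can it do?

k8s is a management tool for container clusters. It manages Docker and also other runtimes such as CoreOS rkt.

Core features of k8s

Self-healing: restarts failed containers; when a node becomes unavailable, replaces and reschedules the containers that ran on it; kills containers that do not respond to user-defined health checks; and does not advertise a container to clients until it is ready to serve.

Elastic scaling: monitors the containers' CPU load. For example, if the average rises above 80%, more containers are added; if it falls below 10%, containers are removed.

Automatic service discovery and load balancing: you do not need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives containers their own IP addresses and a single DNS name for a set of containers, and can load-balance across them.

Rolling updates and one-click rollback: Kubernetes progressively rolls out changes to an application or its configuration while monitoring the application's health, so it never kills all instances at the same time. If something goes wrong, the change can be rolled back with a single command. A growing ecosystem of deployment solutions builds on these features.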

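History of k8s

2014: project launched as an orchestration tool for Docker containers

July 2015: Kubernetes 1.0 released; joined the CNCF

2016: Kubernetes beat its two rivals, Docker Swarm and Mesos (version 1.2)

2017: 1.5

2018: k8s graduated from the CNCF

2019: 1.13, 1.14, 1.15

CNCF: Cloud Native Computing Foundation

Kubernetes (k8s): Greek for "helmsman" or "pilot"; the leader in container orchestration

Kubernetes builds on Google's 15 years of experience running containers on its Borg platform, and re-implements Borg's ideas in Go (golang).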

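Ways to install k8s

yum install: version 1.5; the easiest to get working and the best for learning

Build from source: the hardest; can install the latest version

Binary install: many tedious steps; can install the latest version; usually automated with shell, ansible or saltstack

kubeadm: the easiest install, but needs network access; can install the latest version

minikube: suits developers who want to try out k8s; also needs network access

Use cases for k8s

k8s is best suited to running microservice projects!

Microservices and k8s: elastic scaling

Benefits of microservices

Can handle higher concurrency

More robust business services, high availability

Shorter rebuild time after a code change

Continuous integration, continuous delivery

Automatic code deployment with Jenkins

Common k8s resources

Creating a pod resource

Pod introduction (reference article: Kubernetes之POD)

A pod is the smallest resource unit.

Main parts of a k8s YAML file

apiVersion: v1    API version

kind: Pod    resource type

metadata:    attributes (name, labels, ...)

spec:    detailed specification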


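A pod resource consists of at least two containers: the pod infrastructure container plus the business containers. The course notes cap this at 1+4; Kubernetes itself does not enforce such a limit.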


mkdir k8s_yaml

cd k8s_yaml/

mkdir pod

cd pod/

[root@k8s-master pod]# vim k8s_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80

==================================================

Run on the master

[root@k8s-master pod]# kubectl create -f k8s_pod.yaml

pod "nginx" created

[root@k8s-master pod]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 0/1 ContainerCreating 0 7m

[root@k8s-master pod]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 0/1 ContainerCreating 0 2m k8s-node2

wget http://192.168.37.202/linux59/docker_nginx1.13.tar.gz

docker load -i docker_nginx1.13.tar.gz

docker tag docker.io/nginx:1.13 10.0.0.11:5000/nginx:1.13

docker push 10.0.0.11:5000/nginx:1.13

kubectl describe pod nginx

kubectl get nodes

#Upload the pod-infrastructure-latest.tar.gz image archive

[root@k8s-master ~]# ls pod-infrastructure-latest.tar.gz

pod-infrastructure-latest.tar.gz

#Load, tag and push the image

docker load -i pod-infrastructure-latest.tar.gz

docker tag docker.io/tianyebj/pod-infrastructure:latest 10.0.0.11:5000/rhel7/pod-infrastructure:latest

docker push 10.0.0.11:5000/rhel7/pod-infrastructure:latest

On the nodes

#Edit the configuration file

[root@k8s-node2 ~]# vim /etc/kubernetes/kubelet

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.11:5000/rhel7/pod-infrastructure:latest"

#Restart kubelet

systemctl restart kubelet.service

Check from the master

[root@k8s-master pod]# kubectl describe pod nginx

[root@k8s-master pod]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 27m

#Add the configuration file

[root@k8s-master pod]# vim k8s_test.yaml

apiVersion: v1
kind: Pod
metadata:
  name: test
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80
  - name: busybox
    image: 10.0.0.11:5000/busybox:latest
    command: ["sleep","10000"]

[root@k8s-master pod]# kubectl create -f k8s_test.yaml

[root@k8s-master pod]# kubectl describe pod test

[root@k8s-master pod]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 55m 172.18.49.2 k8s-node2

test 2/2 Running 0 11m 172.18.42.2 k8s-node1

#Inspect the containers on node1

[root@k8s-node1 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

142ce61f2cbb 10.0.0.11:5000/busybox:latest "sleep 10000" 9 minutes ago Up 9 minutes k8s_busybox.7e7ae56a_test_default_6b11a096-d478-11e9-b324-000c29b2785a_dde70056

f09e9c10deda 10.0.0.11:5000/nginx:1.13 "nginx -g 'daemon ..." 9 minutes ago Up 9 minutes k8s_nginx.91390390_test_default_6b11a096-d478-11e9-b324-000c29b2785a_0d95902d

eec2c8045724 10.0.0.11:5000/rhel7/pod-infrastructure:latest "/pod" 10 minutes ago Up 10 minutes k8s_POD.e5ea03c1_test_default_6b11a096-d478-11e9-b324-000c29b2785a_4df2c4f4

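The pod is the smallest resource unit in k8s

ReplicationController resource

Replication controller (rc)

rc: keeps the specified number of pods alive at all times; an rc finds its pods through a label selector

#Create the rc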

[root@k8s-master k8s_yaml]# vim k8s_rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx
spec:
  replicas: 5
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80

[root@k8s-master k8s_yaml]# kubectl create -f k8s_rc.yaml

replicationcontroller "nginx" created

[root@k8s-master k8s_yaml]# kubectl get rc

NAME DESIRED CURRENT READY AGE

nginx 5 5 0 6s

[root@k8s-master k8s_yaml]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 2 15h

nginx-b2l78 1/1 Running 0 15s

nginx-gh210 1/1 Running 0 15s

nginx-gs025 1/1 Running 0 15s

nginx-k4hp5 1/1 Running 0 15s

nginx-twf7x 1/1 Running 0 15s

test 2/2 Running 4 15h

Common operations on k8s resources: create, delete, update, query

kubectl get pod|rc

kubectl describe pod nginx

kubectl delete pod nginx, or kubectl delete -f xxx.yaml

kubectl edit pod nginx

Restart kubelet.service on the node to recover it

[root@k8s-master k8s_yaml]# kubectl edit rc nginx

spec:
  replicas: 10

[root@k8s-master k8s_yaml]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 2 16h 172.18.49.2 k8s-node2

nginx-4dht9 0/1 ContainerCreating 0 6s k8s-node1

nginx-9661w 0/1 ContainerCreating 0 6s k8s-node1

nginx-9ntg5 1/1 Running 0 1m 172.18.49.4 k8s-node2

nginx-b2l78 1/1 Running 0 11m 172.18.42.3 k8s-node1

nginx-gh210 1/1 Running 0 11m 172.18.49.3 k8s-node2

nginx-gs025 1/1 Running 0 11m 172.18.42.4 k8s-node1

nginx-jfg7f 0/1 ContainerCreating 0 6s k8s-node2

nginx-l8l6h 0/1 ContainerCreating 0 6s k8s-node1

nginx-nl4s0 1/1 Running 0 1m 172.18.49.5 k8s-node2

nginx-sld3s 0/1 ContainerCreating 0 6s k8s-node2

test 2/2 Running 4 15h 172.18.42.2 k8s-node1

Rolling update of an rc

Create a new rc file for nginx 1.15 (named k8s_rc2.yaml in the steps below)

#Upload the docker_nginx1.15 image archive and tag it

wget http://192.168.37.202/linux59/docker_nginx1.15.tar.gz

docker load -i docker_nginx1.15.tar.gz

docker tag docker.io/nginx:latest 10.0.0.11:5000/nginx:1.15

docker push 10.0.0.11:5000/nginx:1.15

#Create the k8s_rc2.yaml configuration file

cd k8s_yaml/

mkdir rc

mv k8s_rc.yaml rc/

cd rc/

cp k8s_rc.yaml k8s_rc2.yaml

Edit k8s_rc2.yaml and review what changed: vim k8s_rc2.yaml
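The tutorial does not print the contents of `k8s_rc2.yaml`. The later commands suggest what it changes: the rollback targets an rc named `nginx2`, and the service example selects `app: myweb2`. So it presumably changes the rc name, the label/selector and the image tag. `kubectl rolling-update` expects the new rc to have a different name and a selector that differs in at least one label.

This sketch is built on that assumption. `make_rc2` is a hypothetical helper, and its sed patterns assume the two-space indentation of `k8s_rc.yaml`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive the nginx 1.15 rc file from the 1.13 one.
# ASSUMPTION: only the rc name (nginx -> nginx2), the label/selector
# (myweb -> myweb2) and the image tag (1.13 -> 1.15) differ.
make_rc2() {
  local src="$1" dst="$2"
  sed -e 's/^\(  name:\) nginx$/\1 nginx2/' \
      -e 's/\(app:\) myweb$/\1 myweb2/' \
      -e 's#/nginx:1\.13$#/nginx:1.15#' \
      "$src" >"$dst"
}

# Usage:
#   make_rc2 k8s_rc.yaml k8s_rc2.yaml
#   diff k8s_rc.yaml k8s_rc2.yaml
```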

#Check the current nginx version (1.13)

[root@k8s-master rc]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 2 17h 172.18.49.2 k8s-node2

nginx-309cg 1/1 Running 0 5m 172.18.49.3 k8s-node2

nginx-nt1tr 1/1 Running 0 5m 172.18.49.4 k8s-node2

nginx-sh229 1/1 Running 0 5m 172.18.42.3 k8s-node1

nginx-w517q 1/1 Running 0 5m 172.18.42.2 k8s-node1

nginx-wkhcv 1/1 Running 0 5m 172.18.42.4 k8s-node1

test 2/2 Running 0 51m 172.18.49.6 k8s-node2

[root@k8s-master rc]# curl -I 172.18.49.4

HTTP/1.1 200 OK

Server: nginx/1.13.12

Upgrade to nginx 1.15

kubectl rolling-update nginx -f k8s_rc2.yaml --update-period=10s

Roll back to nginx 1.13

kubectl rolling-update nginx2 -f k8s_rc.yaml --update-period=1s

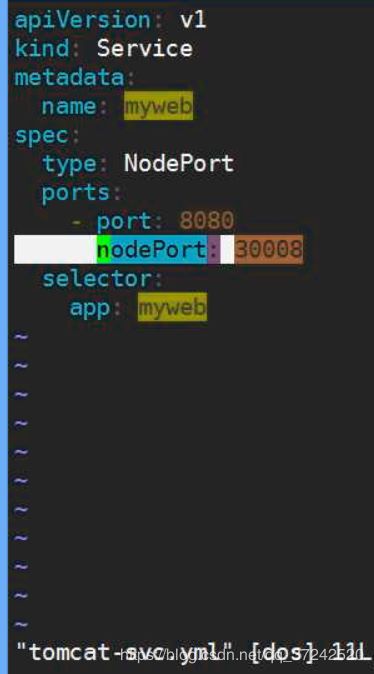

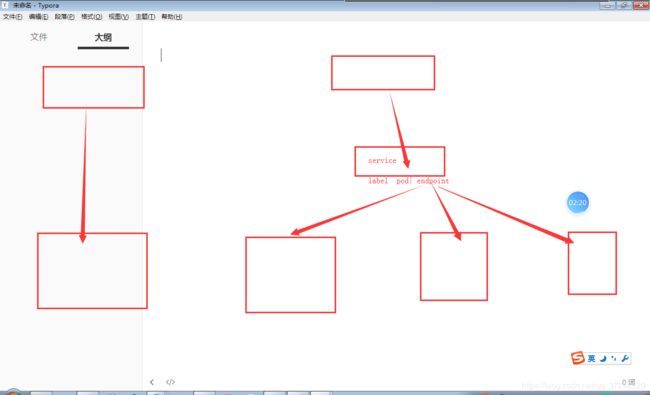

Service resource

A service exposes pod ports

Create a service

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort  #ClusterIP
  ports:
  - port: 80          #clusterIP
    nodePort: 30000   #node port
    targetPort: 80    #pod port
  selector:
    app: myweb2

Step-by-step configuration

[root@k8s-master k8s_yaml]# mkdir svc

[root@k8s-master k8s_yaml]# cd svc/

[root@k8s-master svc]# pwd

/root/k8s_yaml/svc

[root@k8s-master svc]# vim k8s_svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort  #ClusterIP
  ports:
  - port: 80          #clusterIP
    nodePort: 30000   #node port
    targetPort: 80    #pod port
  selector:
    app: myweb

#Create the svc

kubectl create -f k8s_svc.yaml

#Two ways to list services

kubectl get svc

kubectl get service

#List all resource types

kubectl get all -o wide

#Make sure the selector matches the pod labels: change it to myweb

kubectl edit svc myweb

app: myweb

#Check whether the port was exposed successfully

kubectl describe svc myweb

The service is now reachable from a browser:

10.0.0.12:30000

10.0.0.13:30000

Load balancing

#Another way to change the replica count

[root@k8s-master svc]# kubectl scale rc nginx --replicas=3

#How to get a shell inside a container in k8s

[root@k8s-master svc]# kubectl exec -it nginx-5mf4r /bin/bash

root@nginx-5mf4r:/# echo '11111' >/usr/share/nginx/html/index.html

root@nginx-5mf4r:/# exit

[root@k8s-master svc]# kubectl exec -it nginx-ppjb3 /bin/bash

root@nginx-ppjb3:/# echo '2222' >/usr/share/nginx/html/index.html

root@nginx-ppjb3:/# exit
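With different content in each pod, repeated requests to the NodePort show the service spreading traffic across pods. This sketch tallies the answers. `lb_tally` is a hypothetical helper. The loop in the comment assumes the NodePort 30000 service created above and keeps only the first line of each response.

```shell
#!/usr/bin/env bash
# Count how often each distinct response line was returned, printed as
# "<response>: <count>". Each input line stands for one response.
lb_tally() {
  sort | uniq -c | awk '{ count = $1; $1 = ""; sub(/^ /, ""); print $0 ": " count }'
}

# Hypothetical loop: hit the NodePort 10 times and see which pods answered.
#   for i in $(seq 10); do curl -s http://10.0.0.12:30000/ | head -n 1; done | lb_tally
```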

Expose a service on a randomly assigned node port

[root@k8s-master svc]# vim /etc/kubernetes/apiserver

KUBE_API_ARGS="--service-node-port-range=3000-50000"

[root@k8s-master svc]# systemctl restart kube-apiserver.service

[root@k8s-master svc]# kubectl expose rc nginx --port=80 --type=NodePort

service "nginx" exposed

[root@k8s-master svc]# kubectl get all -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/nginx 3 3 3 1h myweb 10.0.0.11:5000/nginx:1.13 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 1d

svc/myweb 10.254.173.22 80:30000/TCP 52m app=myweb

svc/nginx 10.254.22.101 80:4336/TCP 2s app=myweb

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx 1/1 Running 2 19h 172.18.49.2 k8s-node2

po/nginx-5mf4r 1/1 Running 0 1h 172.18.42.4 k8s-node1

po/nginx-ppjb3 1/1 Running 0 1h 172.18.49.3 k8s-node2

po/nginx-vvh1m 1/1 Running 0 1h 172.18.49.4 k8s-node2

po/test 2/2 Running 0 2h 172.18.49.6 k8s-node2
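Node ports must fall inside the apiserver's `--service-node-port-range`. The default is 30000-32767, so the randomly assigned port 4336 above only exists because the range was widened to 3000-50000. This sketch shows the check. `valid_nodeport` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a nodePort lies inside a port range
# given as "LOW-HIGH" (defaults to the apiserver default, 30000-32767).
valid_nodeport() {
  local port="$1" range="${2:-30000-32767}"
  local lo="${range%-*}" hi="${range#*-}"
  [ "$port" -ge "$lo" ] && [ "$port" -le "$hi" ]
}

# Usage:
#   valid_nodeport 4336 3000-50000 && echo ok
#   valid_nodeport 4336 || echo "outside the default range"
```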

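By default, a service load-balances through iptables rules (kube-proxy's iptables mode). Starting with k8s 1.8, kube-proxy can also use IPVS, the kernel's LVS layer-4 load balancer (transport layer: TCP, UDP). IPVS mode was alpha in 1.8 and became GA in 1.11.

1. Deployment resource

A rolling update with an rc interrupts service access. The new rc must carry a different label (for example myweb2), so a service that selects the old label no longer matches any pods. To solve this, k8s introduced the deployment resource.

Create a deployment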

cd k8s_yaml/

mkdir deploy

cd deploy/

[root@k8s-master deploy]# cat k8s_deploy.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 100m
          requests:
            cpu: 100m

[root@k8s-master deploy]# kubectl create -f k8s_deploy.yaml

[root@k8s-master deploy]# kubectl get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

nginx-deployment 3 3 3 3 8m

[root@k8s-master deploy]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 3 4d

nginx-5mf4r 1/1 Running 1 3d

nginx-deployment-3014407781-1msh5 1/1 Running 0 4m

nginx-deployment-3014407781-67f4s 1/1 Running 0 4m

nginx-deployment-3014407781-tj854 1/1 Running 0 4m

[root@k8s-master deploy]# kubectl expose deployment nginx-deployment --type=NodePort --port=80

[root@k8s-master deploy]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 443/TCP 4d

myweb 10.254.173.22 80:30000/TCP 3d

nginx 10.254.22.101 80:4336/TCP 3d

nginx-deployment 10.254.87.197 80:40510/TCP 8m

[root@k8s-master deploy]# curl -I 10.0.0.12:40510

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Mon, 16 Sep 2019 02:10:10 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

#Change this line in the configuration to nginx 1.15

[root@k8s-master deploy]# kubectl edit deployment nginx-deployment

- image: 10.0.0.11:5000/nginx:1.15

[root@k8s-master deploy]# kubectl rollout history deployment nginx-deployment

deployments "nginx-deployment"

REVISION CHANGE-CAUSE

5

6

#Roll back

[root@k8s-master deploy]# kubectl rollout undo deployment nginx-deployment

deployment "nginx-deployment" rolled back

[root@k8s-master deploy]# curl -I 10.0.0.12:40510

HTTP/1.1 200 OK

Server: nginx/1.13.12

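#Run the rollback again: undo without --to-revision returns to the previous revision, so this goes back to 1.15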

[root@k8s-master deploy]# kubectl rollout undo deployment nginx-deployment

deployment "nginx-deployment" rolled back

[root@k8s-master deploy]# curl -I 10.0.0.12:40510

HTTP/1.1 200 OK

Server: nginx/1.15.5

#Create another resource with kubectl run

[root@k8s-master deploy]# kubectl run lcx --image=10.0.0.11:5000/nginx:1.13 --replicas=3

deployment "lcx" created

[root@k8s-master deploy]# kubectl rollout history deployment lcx

deployments "lcx"

REVISION CHANGE-CAUSE

1

#Delete the resource

[root@k8s-master deploy]# kubectl delete deployment lcx

deployment "lcx" deleted

#Create it again, this time with --record

[root@k8s-master deploy]# kubectl run lcx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

deployment "lcx" created

[root@k8s-master deploy]# kubectl rollout history deployment lcx

deployments "lcx"

REVISION CHANGE-CAUSE

1 kubectl run lcx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

#Change the image to nginx:1.15

[root@k8s-master deploy]# kubectl edit deployment lcx

spec:
  containers:
  - image: 10.0.0.11:5000/nginx:1.15

[root@k8s-master deploy]# kubectl rollout history deployment lcx

deployments "lcx"

REVISION CHANGE-CAUSE

1 kubectl run lcx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

2 kubectl edit deployment lcx    #the command that made the change is recorded

#Update the version again, to 1.16

[root@k8s-master deploy]# kubectl edit deployment lcx

spec:
  containers:
  - image: 10.0.0.11:5000/nginx:1.16

#The history still does not show which image version each revision uses

[root@k8s-master deploy]# kubectl rollout history deployment lcx

deployments "lcx"

REVISION CHANGE-CAUSE

1 kubectl run lcx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

2 kubectl edit deployment lcx

3 kubectl edit deployment lcx

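#Because kubectl edit does not record the image version, use kubectl set image instead; its command line, including the image, is recorded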

[root@k8s-master deploy]# kubectl set image deploy lcx lcx=10.0.0.11:5000/nginx:1.15

deployment "lcx" image updated

#Revision 2 is gone: its pod template (nginx:1.15) was reused and renumbered as revision 4

[root@k8s-master deploy]# kubectl rollout history deployment lcx

deployments "lcx"

REVISION CHANGE-CAUSE

1 kubectl run lcx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

3 kubectl edit deployment lcx

4 kubectl set image deploy lcx lcx=10.0.0.11:5000/nginx:1.15
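Plain `kubectl rollout undo` only toggles to the previous revision, and revision numbers can have gaps (revision 2 disappeared above). `kubectl rollout undo --to-revision=N` targets a specific revision instead. This sketch picks the second-newest revision from the history table. `previous_revision` is a hypothetical helper that assumes the layout shown above.

```shell
#!/usr/bin/env bash
# Read `kubectl rollout history deployment NAME` on stdin and print the
# revision just before the newest one (gaps in numbering are fine).
# With a single revision, that revision is printed.
previous_revision() {
  awk '$1 ~ /^[0-9]+$/ { print $1 }' | sort -n | tail -n 2 | head -n 1
}

# Usage:
#   rev=$(kubectl rollout history deployment lcx | previous_revision)
#   kubectl rollout undo deployment lcx --to-revision="$rev"
```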

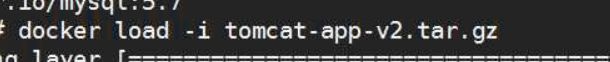

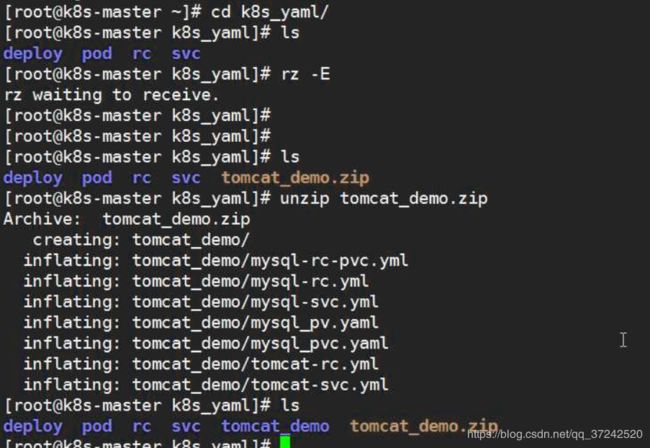

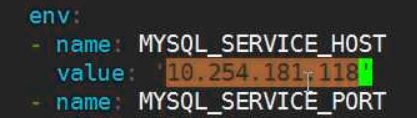

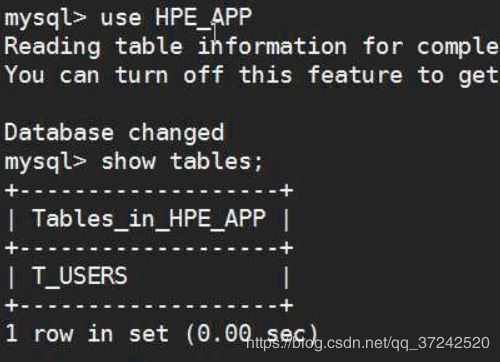

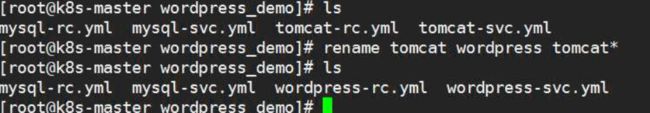

Exercise: tomcat + mysql

In k8s, containers reach each other through the service VIP (cluster IP)!

Setup process (mostly screenshots)

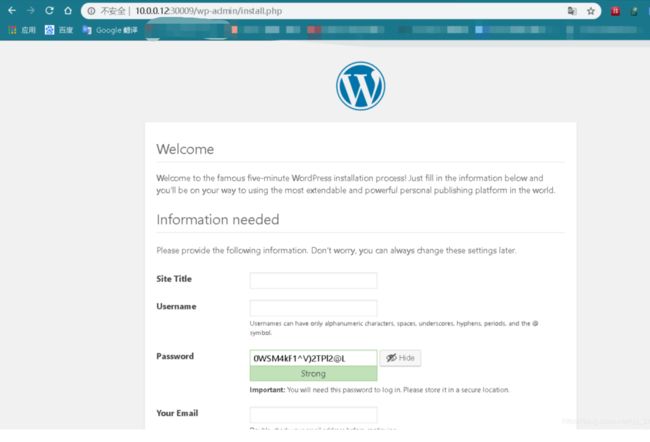

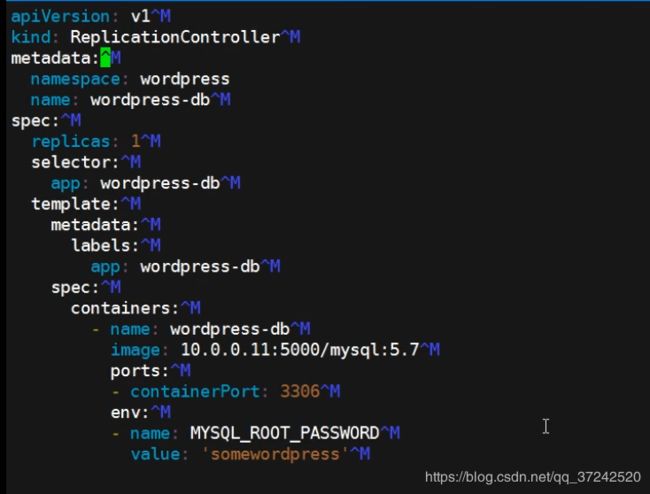

Extension: deploying WordPress (docker-compose file for reference)

version: '3'
services:
  db:
    image: mysql:5.7
    volumes:
      - /data/db_data:/var/lib/mysql
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: somewordpress
      MYSQL_DATABASE: wordpress
      MYSQL_USER: wordpress
      MYSQL_PASSWORD: wordpress
  wordpress:
    depends_on:
      - db
    image: wordpress:latest
    volumes:
      - /data/web_data:/var/www/html
    ports:
      - "80:80"
    restart: always
    environment:
      WORDPRESS_DB_HOST: db
      WORDPRESS_DB_USER: wordpress
      WORDPRESS_DB_PASSWORD: wordpress

k8s add-ons

DNS service

Purpose: resolves service (svc) names to their VIP (cluster IP) addresses

kubectl get all -n kube-system -o wide

#1: Download the DNS docker image archive

wget http://192.168.12.202/docker_image/docker_k8s_dns.tar.gz

#2: Load the DNS image archive (on node1)

#3: Edit skydns-deploy.yaml so the DNS service is created on node1

spec:
  nodeSelector:
    kubernetes.io/hostname: 10.0.0.12
  containers:

#4: Create the DNS service

kubectl create -f skydns-deploy.yaml

kubectl create -f skydns-svc.yaml

#5: Check

kubectl get all --namespace=kube-system

#6: Edit the kubelet configuration file on all nodes

vim /etc/kubernetes/kubelet

KUBELET_ARGS="--cluster_dns=10.254.230.254 --cluster_domain=cluster.local"

systemctl restart kubelet
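With `--cluster_dns` and `--cluster_domain` set, kube-dns answers for names of the form `<service>.<namespace>.svc.<cluster domain>`. This sketch builds such a name. `svc_fqdn` is a hypothetical helper, and `<busybox-pod>` in the usage comment is a placeholder.

```shell
#!/usr/bin/env bash
# Hypothetical helper: the DNS name kube-dns serves for a service.
svc_fqdn() {
  local svc="$1" ns="${2:-default}" domain="${3:-cluster.local}"
  echo "${svc}.${ns}.svc.${domain}"
}

# Usage from inside a pod (busybox ships nslookup):
#   kubectl exec -it <busybox-pod> -- nslookup "$(svc_fqdn myweb)"
# Pods in the same namespace can also use the short name (myweb),
# because kubelet adds search domains to the pod's /etc/resolv.conf.
```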

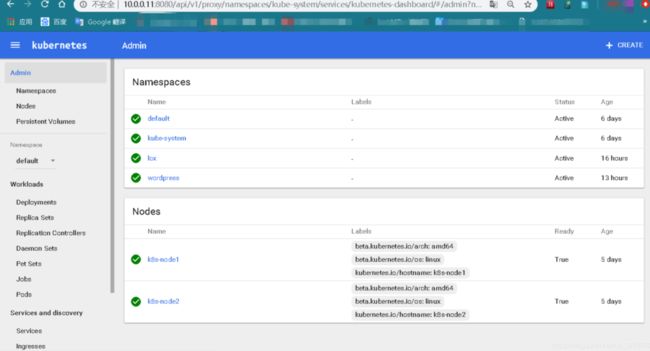

Namespaces

Namespaces isolate resources

[root@k8s-master wordpress_demo]# kubectl get namespace

NAME STATUS AGE

default Active 5d

kube-system Active 5d

#Create

kubectl create namespace lcx

#Delete

kubectl delete namespace lcx

Test

[root@k8s-master wordpress_demo]# pwd

/root/k8s_yaml/wordpress_demo

#Create the wordpress namespace

[root@k8s-master wordpress_demo]# kubectl create namespace wordpress

namespace "wordpress" created

#Delete the current environment

[root@k8s-master wordpress_demo]# kubectl delete -f .

#Add the namespace to every configuration file

[root@k8s-master wordpress_demo]# ls

mysql-rc.yml mysql-svc.yml wordpress-rc.yml wordpress-svc.yml

[root@k8s-master wordpress_demo]# sed -i '3a \ \ namespace: wordpress' *
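`sed -i '3a ...'` assumes that line 3 of every file is `metadata:`. That holds for these four files, but it breaks if a file starts with a comment or a blank line. This sketch is a more defensive alternative that inserts the namespace after the first top-level `metadata:` line. `add_namespace` is a hypothetical helper, and it does not check whether a namespace is already present.

```shell
#!/usr/bin/env bash
# Hypothetical helper: insert "  namespace: NS" right after the first
# top-level "metadata:" line of each file, wherever that line is.
add_namespace() {
  local ns="$1"; shift
  local f tmp
  for f in "$@"; do
    tmp="$(mktemp)"
    awk -v ns="$ns" '
      { print }
      /^metadata:[ \t\r]*$/ && !done { print "  namespace: " ns; done = 1 }
    ' "$f" >"$tmp" && cat "$tmp" >"$f"   # cat keeps the file's permissions
    rm -f "$tmp"
  done
}

# Usage:
#   add_namespace wordpress mysql-rc.yml mysql-svc.yml wordpress-rc.yml wordpress-svc.yml
```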

#Create the new environment

[root@k8s-master wordpress_demo]# kubectl create -f .

replicationcontroller "wordpress-db" created

service "wordpress-db" created

replicationcontroller "wordpress-web" created

service "wordpress-web" created

#View the wordpress namespace

[root@k8s-master wordpress_demo]# kubectl get all -n wordpress

NAME DESIRED CURRENT READY AGE

rc/wordpress-db 1 1 1 1m

rc/wordpress-web 1 1 1 1m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/wordpress-db 10.254.47.172 3306/TCP 1m

svc/wordpress-web 10.254.226.90 80:30009/TCP 1m

NAME READY STATUS RESTARTS AGE

po/wordpress-db-dv5f4 1/1 Running 0 1m

po/wordpress-web-v3bqd 1/1 Running 0 1m

Browse to 10.0.0.12:30009

yum install dos2unix.x86_64

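dos2unix <filename> fixes formatting problems caused by Windows (CRLF) line endings

Health checks

Probe types

livenessProbe:

Liveness check: periodically checks whether the service is alive; if the check fails, the container is restarted

readinessProbe:

Readiness check: periodically checks whether the service is available; if it is not, the pod is removed from the service's endpoints

Probe methods

exec: runs a command; exit code 0 means success

httpGet: checks the status code returned by an HTTP request; any code from 200 to 399 means success

tcpSocket: tests whether a TCP connection to a port can be established

Using exec with a liveness probe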

[root@k8s-master k8s_yaml]# mkdir healthy

[root@k8s-master k8s_yaml]# cd healthy

[root@k8s-master healthy]# cat nginx_pod_exec.yaml

apiVersion: v1
kind: Pod
metadata:
  name: exec
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5

[root@k8s-master healthy]# kubectl create -f nginx_pod_exec.yaml
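You can imitate locally what the kubelet does with this probe. This sketch uses a scratch file in place of `/tmp/healthy` and assumes the default `failureThreshold` of 3, because the YAML above does not set it. Both function names are hypothetical, and the timing is only a rough estimate.

```shell
#!/usr/bin/env bash
# Local imitation of the exec probe: it "passes" when `cat FILE` exits 0.
exec_probe() {
  cat "$1" >/dev/null 2>&1
}

# Rough restart time: the file disappears at REMOVED_AT seconds, then
# THRESHOLD consecutive failures PERIOD seconds apart trigger a restart.
restart_estimate() {
  local removed_at="$1" period="$2" threshold="${3:-3}"
  echo $(( removed_at + period * threshold ))
}

# For the pod above: restart_estimate 30 5 -> about 45s after start.
# Watch it happen:  kubectl get pod exec -w     (RESTARTS increments)
```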

Using httpGet with a liveness probe

[root@k8s-master healthy]# vim nginx_pod_httpGet.yaml

apiVersion: v1
kind: Pod
metadata:
  name: httpget
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80
    livenessProbe:
      httpGet:
        path: /index.html
        port: 80
      initialDelaySeconds: 3
      periodSeconds: 3

Using tcpSocket with a liveness probe

[root@k8s-master healthy]# vim nginx_pod_tcpSocket.yaml

apiVersion: v1
kind: Pod
metadata:
  name: tcpsocket
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80
    args:
    - /bin/sh
    - -c
    - tailf /etc/hosts
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 60
      periodSeconds: 3

#Check the pod: it restarted once after about a minute, because args replaced the nginx command with tailf and nothing listens on port 80

[root@k8s-master healthy]# kubectl create -f nginx_pod_tcpSocket.yaml

[root@k8s-master healthy]# kubectl get pod

NAME READY STATUS RESTARTS AGE

tcpsocket 1/1 Running 1 4m
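A tcpSocket probe only asks whether a TCP connection can be opened. This sketch is a local imitation using bash's `/dev/tcp` redirection. It assumes bash and coreutils `timeout` are available. `tcp_probe` is a hypothetical helper and `<pod-ip>` is a placeholder.

```shell
#!/usr/bin/env bash
# Local imitation of a tcpSocket probe: succeed if a TCP connection to
# HOST:PORT opens within SECS seconds (default 1).
tcp_probe() {
  local host="$1" port="$2" secs="${3:-1}"
  timeout "$secs" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Against the pod above, nothing listens on port 80, so this reports closed:
#   tcp_probe <pod-ip> 80 && echo open || echo closed
```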

Using httpGet with a readiness probe

Readiness (availability) check

readinessProbe

[root@k8s-master healthy]# vim nginx-rc-httpGet.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: readiness
spec:
  replicas: 2
  selector:
    app: readiness
  template:
    metadata:
      labels:
        app: readiness
    spec:
      containers:
      - name: readiness
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80
        readinessProbe:
          httpGet:
            path: /lcx.html
            port: 80
          initialDelaySeconds: 3
          periodSeconds: 3

[root@k8s-master healthy]# kubectl create -f nginx-rc-httpGet.yaml
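`/lcx.html` does not exist in the nginx image, so both pods stay `0/1` and are kept out of the service endpoints until the file is created. This sketch counts ready pods from `kubectl get pod` output. `count_ready` is a hypothetical helper and `<readiness-pod>` is a placeholder.

```shell
#!/usr/bin/env bash
# Read `kubectl get pod` output on stdin and print "ready/total" for pods
# whose name starts with PREFIX; a pod is ready when READY is n/n.
count_ready() {
  local prefix="$1"
  awk -v p="$prefix" 'NR > 1 && index($1, p) == 1 {
      total++
      split($2, r, "/")
      if (r[1] == r[2]) ready++
    }
    END { printf "%d/%d\n", ready, total }'
}

# Make one pod ready by creating the probed file, then count:
#   kubectl exec -it <readiness-pod> -- /bin/sh -c 'echo ok > /usr/share/nginx/html/lcx.html'
#   kubectl get pod | count_ready readiness-
```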

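Dashboard service

1: Upload and load the image, then tag it

2: Create the dashboard deployment and service

3: Browse to http://10.0.0.11:8080/ui/

Upload the image on the master

Download link for the official configuration files

Image download link (extraction code: qjb7)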

docker load -i kubernetes-dashboard-amd64_v1.4.1.tar.gz

#Upload and load the image on k8s-node2

[root@k8s-node2 ~]# docker load -i kubernetes-dashboard-amd64_v1.4.1.tar.gz

5f70bf18a086: Loading layer 1.024 kB/1.024 kB

2e350fa8cbdf: Loading layer 86.96 MB/86.96 MB

Loaded image: index.tenxcloud.com/google_containers/kubernetes-dashboard-amd64:v1.4.1

dashboard.yaml

[root@k8s-master dashboard]# cat dashboard.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  # Keep the name in sync with image version and
  # gce/coreos/kube-manifests/addons/dashboard counterparts
  name: kubernetes-dashboard-latest
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
        version: latest
        kubernetes.io/cluster-service: "true"
    spec:
      nodeName: k8s-node2
      containers:
      - name: kubernetes-dashboard
        image: index.tenxcloud.com/google_containers/kubernetes-dashboard-amd64:v1.4.1
        imagePullPolicy: IfNotPresent
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        ports:
        - containerPort: 9090
        args:
        - --apiserver-host=http://10.0.0.11:8080
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30

dashboard-svc.yaml

[root@k8s-master dashboard]# vim dashboard-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

Create

[root@k8s-master dashboard]# kubectl create -f .

service "kubernetes-dashboard" created

deployment "kubernetes-dashboard-latest" created

#Check that everything is Running

[root@k8s-master dashboard]# kubectl get all -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 1 1 1 1 17h

deploy/kubernetes-dashboard-latest 1 1 1 1 20s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-dns 10.254.230.254 53/UDP,53/TCP 17h

svc/kubernetes-dashboard 10.254.216.169 80/TCP 20s

NAME DESIRED CURRENT READY AGE

rs/kube-dns-2622810276 1 1 1 17h

rs/kubernetes-dashboard-latest-3233121221 1 1 1 20s

NAME READY STATUS RESTARTS AGE

po/kube-dns-2622810276-wvh5m 4/4 Running 4 17h

po/kubernetes-dashboard-latest-3233121221-km08b 1/1 Running 0 20s

Accessing a service through the apiserver reverse proxy

Option 1: NodePort type

type: NodePort
ports:
- port: 80
  targetPort: 80
  nodePort: 30008

Option 2: ClusterIP type

type: ClusterIP
ports:
- port: 80
  targetPort: 80

http://10.0.0.11:8080/api/v1/proxy/namespaces/<namespace>/services/<service-name>/

http://10.0.0.11:8080/api/v1/proxy/namespaces/default/services/myweb/
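This sketch builds the proxy URL from the pattern above. `proxy_url` is a hypothetical helper; it only formats a string.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apiserver proxy URL for a service, following
# /api/v1/proxy/namespaces/<namespace>/services/<service-name>/
proxy_url() {
  local apiserver="$1" ns="$2" svc="$3"
  printf '%s/api/v1/proxy/namespaces/%s/services/%s/\n' "$apiserver" "$ns" "$svc"
}

# Usage:
#   curl -s "$(proxy_url http://10.0.0.11:8080 default myweb)"
#   proxy_url http://10.0.0.11:8080 kube-system kubernetes-dashboard
```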

k8s autoscaling

Autoscaling in k8s requires the heapster monitoring add-on

Install heapster monitoring

1: Upload and load the images, then tag them

On k8s-node2

[root@k8s-node2 opt]# ll

total 1492076

-rw-r--r-- 1 root root 275096576 Sep 17 11:42 docker_heapster_grafana.tar.gz

-rw-r--r-- 1 root root 260942336 Sep 17 11:43 docker_heapster_influxdb.tar.gz

-rw-r--r-- 1 root root 991839232 Sep 17 11:44 docker_heapster.tar.gz

for n in *.tar.gz; do docker load -i "$n"; done

docker tag docker.io/kubernetes/heapster_grafana:v2.6.0 10.0.0.11:5000/heapster_grafana:v2.6.0

docker tag docker.io/kubernetes/heapster_influxdb:v0.5 10.0.0.11:5000/heapster_influxdb:v0.5

docker tag docker.io/kubernetes/heapster:canary 10.0.0.11:5000/heapster:canary

2: Upload the configuration files and run kubectl create -f .

influxdb-grafana-controller.yaml

mkdir heapster

cd heapster/

[root@k8s-master heapster]# cat influxdb-grafana-controller.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  labels:
    name: influxGrafana
  name: influxdb-grafana
  namespace: kube-system
spec:
  replicas: 1
  selector:
    name: influxGrafana
  template:
    metadata:
      labels:
        name: influxGrafana
    spec:
      nodeName: k8s-node2
      containers:
      - name: influxdb
        image: 10.0.0.11:5000/heapster_influxdb:v0.5
        volumeMounts:
        - mountPath: /data
          name: influxdb-storage
      - name: grafana
        image: 10.0.0.11:5000/heapster_grafana:v2.6.0
        env:
        - name: INFLUXDB_SERVICE_URL
          value: http://monitoring-influxdb:8086
        # The following env variables are required to make Grafana accessible via
        # the kubernetes api-server proxy. On production clusters, we recommend
        # removing these env variables, setup auth for grafana, and expose the grafana
        # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          value: /api/v1/proxy/namespaces/kube-system/services/monitoring-grafana/
        volumeMounts:
        - mountPath: /var
          name: grafana-storage
      volumes:
      - name: influxdb-storage
        emptyDir: {}
      - name: grafana-storage
        emptyDir: {}

grafana-service.yaml

[root@k8s-master heapster]# cat grafana-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: kube-system
spec:
  # In a production setup, we recommend accessing Grafana through an external Loadbalancer
  # or through a public IP.
  # type: LoadBalancer
  ports:
  - port: 80
    targetPort: 3000
  selector:
    name: influxGrafana

influxdb-service.yaml

[root@k8s-master heapster]# vim influxdb-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels: null
  name: monitoring-influxdb
  namespace: kube-system
spec:
  ports:
  - name: http
    port: 8083
    targetPort: 8083
  - name: api
    port: 8086
    targetPort: 8086
  selector:
    name: influxGrafana

heapster-service.yaml

[root@k8s-master heapster]# cat heapster-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster

heapster-controller.yaml

[root@k8s-master heapster]# cat heapster-controller.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  labels:
    k8s-app: heapster
    name: heapster
    version: v6
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  selector:
    k8s-app: heapster
    version: v6
  template:
    metadata:
      labels:
        k8s-app: heapster
        version: v6
    spec:
      nodeName: k8s-node2
      containers:
      - name: heapster
        image: 10.0.0.11:5000/heapster:canary
        imagePullPolicy: IfNotPresent
        command:
        - /heapster
        - --source=kubernetes:http://10.0.0.11:8080?inClusterConfig=false
        - --sink=influxdb:http://monitoring-influxdb:8086

Edit the configuration files:

#heapster-controller.yaml

spec:
  nodeName: 10.0.0.13
  containers:
  - name: heapster
    image: 10.0.0.11:5000/heapster:canary
    imagePullPolicy: IfNotPresent

#influxdb-grafana-controller.yaml

spec:
  nodeName: 10.0.0.13
  containers:

[root@k8s-master heapster]# kubectl create -f .

3: Verify in the dashboard

http://10.0.0.11:8080/api/v1/proxy/namespaces/kube-system/services/kubernetes-dashboard

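Autoscaling

1: Edit the rc configuration file (CPU-based autoscaling needs a CPU request on each container)

containers:
- name: myweb
  image: 10.0.0.11:5000/nginx:1.13
  ports:
  - containerPort: 80
  resources:
    limits:
      cpu: 100m
    requests:
      cpu: 100m

2: Create the autoscaling rule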

kubectl autoscale deploy nginx-deployment --max=8 --min=1 --cpu-percent=10

kubectl get hpa
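This is a simplified sketch of how the autoscaler picks a replica count. It assumes the standard HPA rule `desired = ceil(current * currentUtilization / target)`, clamped to `--min`/`--max`, with a 10% tolerance band in which nothing changes. Utilization is measured against the containers' CPU requests. The real controller also waits between scale events, which this sketch leaves out. `hpa_desired` is a hypothetical helper that works in integer percentages.

```shell
#!/usr/bin/env bash
# Simplified HPA replica calculation (integer percentages):
#   desired = ceil(current * util / target), clamped to [min, max];
#   no change while util/target stays within 0.9 .. 1.1.
hpa_desired() {
  local current="$1" util="$2" target="$3" min="$4" max="$5"
  local desired
  if [ $(( util * 10 )) -ge $(( target * 9 )) ] && [ $(( util * 10 )) -le $(( target * 11 )) ]; then
    desired="$current"                                      # inside tolerance
  else
    desired=$(( (current * util + target - 1) / target ))   # integer ceiling
  fi
  if [ "$desired" -lt "$min" ]; then desired="$min"; fi
  if [ "$desired" -gt "$max" ]; then desired="$max"; fi
  echo "$desired"
}

# With the rule above (--min=1 --max=8 --cpu-percent=10):
#   hpa_desired 3 50 10 1 8   -> 8   (ceil(15) = 15, capped at max)
#   hpa_desired 3 0 10 1 8    -> 1   (idle, scaled in to min)
```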

3: Test

yum install httpd-tools -y

ab -n 1000000 -c 40 http://172.16.28.6/index.html

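Scale-out screenshot

Scale-in screenshot

Persistent storage

Types of data persistence:

emptyDir:

For awareness only: the data is deleted when the pod is removed from its node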

HostPath:

spec:
  nodeName: 10.0.0.13
  volumes:
  - name: mysql
    hostPath:
      path: /data/wp_mysql
  containers:
  - name: wp-mysql
    image: 10.0.0.11:5000/mysql:5.7
    imagePullPolicy: IfNotPresent
    ports:
    - containerPort: 3306
    volumeMounts:
    - mountPath: /var/lib/mysql
      name: mysql

nfs: ☆☆☆

#Install NFS on all nodes

yum install nfs-utils -y

===========================================

On the master node:

#Create the directory

mkdir -p /data/tomcat-db

#Edit the NFS configuration file

[root@k8s-master tomcat-db]# vim /etc/exports

/data 10.0.0.0/24(rw,sync,no_root_squash,no_all_squash)

#Restart the services

[root@k8s-master tomcat-db]# systemctl restart rpcbind

[root@k8s-master tomcat-db]# systemctl restart nfs

#Check

[root@k8s-master tomcat-db]# showmount -e 10.0.0.11

Export list for 10.0.0.11:

/data 10.0.0.0/24
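The export line above admits any client whose address falls inside 10.0.0.0/24. As a rough sketch of that matching (plain address/prefix comparison only; real NFS exports also accept hostnames, wildcards and netgroups, which this ignores), with `ip_to_int` and `in_cidr` as hypothetical helper names:

```bash
#!/usr/bin/env bash
# Rough model of how an export such as "/data 10.0.0.0/24(...)" matches a client.
ip_to_int() {              # 10.0.0.12 -> 167772172
  local IFS=.
  set -- $1                # split the dotted quad into $1..$4
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

in_cidr() {                # usage: in_cidr IP NETWORK/PREFIX
  local ip net bits mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# The three cluster nodes are inside the /24; 10.0.1.5 is not.
for client in 10.0.0.11 10.0.0.12 10.0.0.13 10.0.1.5; do
  if in_cidr "$client" 10.0.0.0/24; then echo "$client allowed"; else echo "$client denied"; fi
done
```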

Add the config file mysql-rc-nfs.yaml

#Parts to modify:
volumes:
- name: mysql
  nfs:
    path: /data/tomcat-db
    server: 10.0.0.11

================================================

[root@k8s-master tomcat_demo]# pwd

/root/k8s_yaml/tomcat_demo

[root@k8s-master tomcat_demo]# cat mysql-rc-nfs.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      volumes:
      - name: mysql
        nfs:
          path: /data/tomcat-db
          server: 10.0.0.11
      containers:
      - name: mysql
        volumeMounts:
        - mountPath: /var/lib/mysql
          name: mysql
        image: 10.0.0.11:5000/mysql:5.7
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: '123456'

kubectl delete -f mysql-rc-nfs.yaml

kubectl create -f mysql-rc-nfs.yaml

kubectl get pod

#Check that the shared /data directory is in use

[root@k8s-master tomcat_demo]# ls /data/tomcat-db/

auto.cnf ib_buffer_pool ib_logfile0 ibtmp1 performance_schema

HPE_APP ibdata1 ib_logfile1 mysql sys

Check that the shared directory is mounted

#On node1

[root@k8s-node1 ~]# df -h|grep nfs

10.0.0.11:/data/tomcat-db 48G 6.8G 42G 15% /var/lib/kubelet/pods/8675fe7e-d927-11e9-a65f-000c29b2785a/volumes/kubernetes.io~nfs/mysql

#Restart kubelet

[root@k8s-node1 ~]# systemctl restart kubelet.service

#Check node status on the master node

[root@k8s-master tomcat_demo]# kubectl get nodes

NAME STATUS AGE

k8s-node1 Ready 5d

k8s-node2 Ready 6d

#mysql is currently running on node1

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

mysql-kld7f 1/1 Running 0 1m 172.18.19.5 k8s-node1

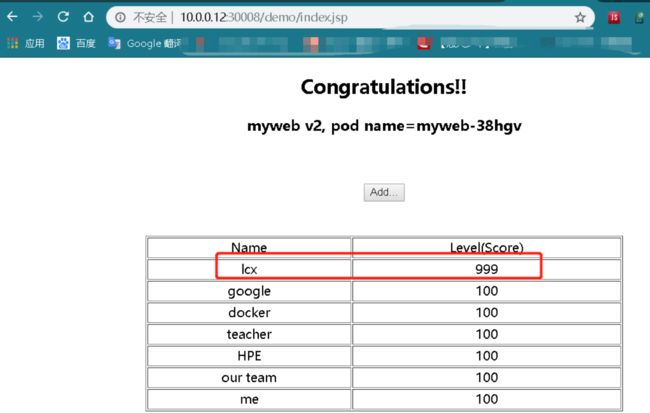

myweb-38hgv 1/1 Running 1 23h 172.18.19.4 k8s-node1

nginx-847814248-hq268 1/1 Running 0 4h 172.18.19.2 k8s-node1

#Delete the mysql pod; the replacement pod is scheduled onto node2

[root@k8s-master ~]# kubectl delete pod mysql-kld7f

pod "mysql-kld7f" deleted

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

mysql-14kj0 0/1 ContainerCreating 0 1s k8s-node2

mysql-kld7f 1/1 Terminating 0 2m 172.18.19.5 k8s-node1

myweb-38hgv 1/1 Running 1 23h 172.18.19.4 k8s-node1

nginx-847814248-hq268 1/1 Running 0 4h 172.18.19.2 k8s-node1

nginx-deployment-2807576163-c9g0n 1/1 Running 0 4h 172.18.53.4 k8s-node2

#Check the mounted directory on node2

[root@k8s-node2 ~]# df -h|grep nfs

10.0.0.11:/data/tomcat-db 48G 6.8G 42G 15% /var/lib/kubelet/pods/ed09eb26-d929-11e9-a65f-000c29b2785a/volumes/kubernetes.io~nfs/mysql

Refresh the web page: the data added earlier is still there, which shows that NFS persistence works

pvc:

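Reference material

pv: persistent volume, a cluster-wide (global) resource of the k8s cluster

pvc: persistent volume claim, a namespaced resource that belongs to a single namespace

Distributed storage: glusterfs ☆☆☆☆☆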

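a: What is glusterfs

Glusterfs is an open-source distributed file system with strong scale-out capability. It can support petabytes of storage and thousands of clients, joined over the network into a parallel network file system, and it offers scalability, high performance and high availability.

b: Install glusterfs

1. Add two disks to each of the three nodes

This is a test environment, so any size will do

2. On all three nodes, detect the hot-added disks without rebooting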

echo "- - -" > /sys/class/scsi_host/host0/scan

echo "- - -" > /sys/class/scsi_host/host1/scan

echo "- - -" > /sys/class/scsi_host/host2/scan
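The three `echo` lines above assume the VM has exactly host0 to host2. A sketch that rescans whichever SCSI hosts exist instead; `rescan_scsi_hosts` and its optional directory argument are assumptions, the argument existing only so the loop can be tried against a scratch directory:

```bash
#!/usr/bin/env bash
# Write "- - -" (wildcard channel, target, LUN) to every SCSI host's scan file
# instead of hard-coding host0..host2. Hypothetical helper; default dir is sysfs.
rescan_scsi_hosts() {
  local base="${1:-/sys/class/scsi_host}" f n=0
  for f in "$base"/host*/scan; do
    [ -e "$f" ] || continue          # the glob matched nothing
    echo "- - -" > "$f"
    n=$((n + 1))
  done
  echo "rescanned $n SCSI host(s)"
}

# Real use, as root:  rescan_scsi_hosts && fdisk -l
```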

#Every node must have these hosts entries

cat /etc/hosts

10.0.0.11 k8s-master

10.0.0.12 k8s-node1

10.0.0.13 k8s-node2

3. On all three nodes, check that the new disks are recognized, then format them

fdisk -l

mkfs.xfs /dev/sdb

mkfs.xfs /dev/sdc

4. Create the directories on all nodes

mkdir -p /gfs/test1

mkdir -p /gfs/test2

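5. Device names such as /dev/sdb can change after a reboot, so mount the disks by UUID in /etc/fstab

master node

#blkid shows the UUID of each disk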

[root@k8s-master ~]# blkid

/dev/sda1: UUID="72aabc10-44b8-4c05-86bd-049157d771f8" TYPE="swap"

/dev/sda2: UUID="35076632-0a8a-4234-bd8a-45dc7df0fdb3" TYPE="xfs"

/dev/sdb: UUID="577ef260-533b-45f5-94c6-60e73b17d1fe" TYPE="xfs"

/dev/sdc: UUID="5a907588-80a1-476b-8805-d458e22dd763" TYPE="xfs"

[root@k8s-master ~]# vim /etc/fstab

UUID=35076632-0a8a-4234-bd8a-45dc7df0fdb3 / xfs defaults 0 0

UUID=72aabc10-44b8-4c05-86bd-049157d771f8 swap swap defaults 0 0

UUID=577ef260-533b-45f5-94c6-60e73b17d1fe /gfs/test1 xfs defaults 0 0

UUID=5a907588-80a1-476b-8805-d458e22dd763 /gfs/test2 xfs defaults 0 0

#Mount and check

[root@k8s-master ~]# mount -a

[root@k8s-master ~]# df -h

.....

/dev/sdb 10G 33M 10G 1% /gfs/test1

/dev/sdc 10G 33M 10G 1% /gfs/test2

node1

[root@k8s-node1 ~]# blkid

/dev/sda1: UUID="72aabc10-44b8-4c05-86bd-049157d771f8" TYPE="swap"

/dev/sda2: UUID="35076632-0a8a-4234-bd8a-45dc7df0fdb3" TYPE="xfs"

/dev/sdb: UUID="c9a47468-ce5c-4aac-bffc-05e731e28f5b" TYPE="xfs"

/dev/sdc: UUID="7340cc1b-2c83-40be-a031-1aad8bdd5474" TYPE="xfs"

[root@k8s-node1 ~]# vim /etc/fstab

UUID=35076632-0a8a-4234-bd8a-45dc7df0fdb3 / xfs defaults 0 0

UUID=72aabc10-44b8-4c05-86bd-049157d771f8 swap swap defaults 0 0

UUID=c9a47468-ce5c-4aac-bffc-05e731e28f5b /gfs/test1 xfs defaults 0 0

UUID=7340cc1b-2c83-40be-a031-1aad8bdd5474 /gfs/test2 xfs defaults 0 0

[root@k8s-node1 ~]# mount -a

[root@k8s-node1 ~]# df -h

/dev/sdb 10G 33M 10G 1% /gfs/test1

/dev/sdc 10G 33M 10G 1% /gfs/test2

node2

[root@k8s-node2 ~]# blkid

/dev/sda1: UUID="72aabc10-44b8-4c05-86bd-049157d771f8" TYPE="swap"

/dev/sda2: UUID="35076632-0a8a-4234-bd8a-45dc7df0fdb3" TYPE="xfs"

/dev/sdb: UUID="6a2f2bbb-9011-41b6-b62b-37f05e167283" TYPE="xfs"

/dev/sdc: UUID="3a259ad4-7738-4fb8-925c-eb6251e8dd18" TYPE="xfs"

[root@k8s-node2 ~]# vim /etc/fstab

UUID=35076632-0a8a-4234-bd8a-45dc7df0fdb3 / xfs defaults 0 0

UUID=72aabc10-44b8-4c05-86bd-049157d771f8 swap swap defaults 0 0

UUID=6a2f2bbb-9011-41b6-b62b-37f05e167283 /gfs/test1 xfs defaults 0 0

UUID=3a259ad4-7738-4fb8-925c-eb6251e8dd18 /gfs/test2 xfs defaults 0 0

[root@k8s-node2 ~]# mount -a

[root@k8s-node2 ~]# df -h

/dev/sdb 10G 33M 10G 1% /gfs/test1

/dev/sdc 10G 33M 10G 1% /gfs/test2
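The same blkid-then-edit-fstab procedure is repeated on all three nodes, and copying UUIDs by hand is easy to get wrong. A minimal sketch that turns `blkid` output into fstab lines, assuming blkid prints `UUID="..."` and `TYPE="..."` fields as in the output above; `fstab_from_blkid` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Build /etc/fstab lines from blkid output (hypothetical helper).
# usage: blkid | fstab_from_blkid /dev/sdb=/gfs/test1 /dev/sdc=/gfs/test2
fstab_from_blkid() {
  local blk pair dev mnt line uuid fstype
  blk=$(cat)                                   # all of blkid's output
  for pair in "$@"; do
    dev=${pair%%=*}
    mnt=${pair#*=}
    line=$(printf '%s\n' "$blk" | grep "^${dev}:")
    uuid=$(printf '%s\n' "$line" | sed -n 's/.* UUID="\([^"]*\)".*/\1/p')
    fstype=$(printf '%s\n' "$line" | sed -n 's/.* TYPE="\([^"]*\)".*/\1/p')
    if [ -z "$uuid" ] || [ -z "$fstype" ]; then
      echo "no UUID/TYPE found for $dev" >&2
      return 1
    fi
    printf 'UUID=%s %s %s defaults 0 0\n' "$uuid" "$mnt" "$fstype"
  done
}
```

Review the output before appending it, e.g. `blkid | fstab_from_blkid /dev/sdb=/gfs/test1 /dev/sdc=/gfs/test2 >> /etc/fstab`, then run `mount -a` as above.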

6. Install the software on the master node and start it

#To save bandwidth, enable yum's package cache before downloading

[root@k8s-master volume]# vim /etc/yum.conf

keepcache=1

yum install centos-release-gluster -y

yum install glusterfs-server -y

systemctl start glusterd.service

systemctl enable glusterd.service

Then install and start the software on both node machines

yum install centos-release-gluster -y

yum install glusterfs-server -y

systemctl start glusterd.service

systemctl enable glusterd.service

7. Check the gluster nodes

#For now each node only sees itself

[root@k8s-master volume]# bash

[root@k8s-master volume]# gluster pool list

UUID Hostname State

a335ea83-fcf9-4b7d-ba3d-43968aa8facf localhost Connected

#Add the other two nodes to the pool

[root@k8s-master volume]# gluster peer probe k8s-node1

peer probe: success.

[root@k8s-master volume]# gluster peer probe k8s-node2

peer probe: success.

[root@k8s-master volume]# gluster pool list

UUID Hostname State

ebf5838a-4de2-447b-b559-475799551895 k8s-node1 Connected

78678387-cc5b-4577-b0fe-b11b4ca80a67 k8s-node2 Connected

a335ea83-fcf9-4b7d-ba3d-43968aa8facf localhost Connected
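Before creating volumes it helps to confirm that every peer is connected. A small sketch that parses the `gluster pool list` table shown above (a header row, then UUID, Hostname, State); `all_peers_connected` is a hypothetical name and assumes the State column is always the last field:

```bash
#!/usr/bin/env bash
# Succeed only if every row of `gluster pool list` (read on stdin) is "Connected".
# usage: gluster pool list | all_peers_connected && echo "pool OK"
all_peers_connected() {
  awk 'NR > 1 && NF > 0 {
         total++
         if ($NF != "Connected") { bad++; print "not connected: " $2 > "/dev/stderr" }
       }
       END { if (total > 0 && bad == 0) exit 0; exit 1 }'
}
```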

8. Create a volume from the pool, inspect it, then delete it

#wahaha is the volume name

[root@k8s-master volume]# gluster volume create wahaha k8s-master:/gfs/test1 k8s-master:/gfs/test2 k8s-node1:/gfs/test1 k8s-node1:/gfs/test2 force

volume create: wahaha: success: please start the volume to access data

#Show the new volume's properties

[root@k8s-master volume]# gluster volume info wahaha

#Delete the volume

[root@k8s-master volume]# gluster volume delete wahaha

Deleting volume will erase all information about the volume. Do you want to continue? (y/n) y

volume delete: wahaha: success

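9. Create the volume again, this time as a distributed-replicated volume ☆☆☆

Diagram: distributed-replicated volume

#Show the command's help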

[root@k8s-master volume]# gluster volume create --help

#Create the volume with the same command as before, plus a replica count

[root@k8s-master volume]# gluster volume create wahaha replica 2 k8s-master:/gfs/test1 k8s-master:/gfs/test2 k8s-node1:/gfs/test1 k8s-node1:/gfs/test2 force

volume create: wahaha: success: please start the volume to access data

#The volume must be started before its data can be accessed

[root@k8s-master volume]# gluster volume start wahaha

volume start: wahaha: success
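Gluster forms replica sets from consecutive bricks in the order they are listed, and distributes files across those sets. With `replica 2` and the brick order used above, both copies of each set land on the same host. That matches the test in step 11 (the `hosts` file appears in both /gfs/test1 and /gfs/test2 on the master) and is likely one reason the command needs `force`. A sketch that prints the grouping and flags such sets; `replica_sets` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Print gluster's replica sets for a brick list: every REPLICA consecutive
# bricks form one set. Flags sets whose copies share a host.
# usage: replica_sets REPLICA host:/brick ...   (hypothetical helper)
replica_sets() {
  local replica="$1" n=1 i line hosts b
  shift
  if [ "$replica" -le 0 ] || [ $(( $# % replica )) -ne 0 ]; then
    echo "brick count $# is not a multiple of replica $replica" >&2
    return 1
  fi
  while [ $# -gt 0 ]; do
    line="set$n:"
    hosts=""
    i=0
    while [ "$i" -lt "$replica" ]; do
      b=$1
      shift
      line="$line $b"
      hosts="$hosts ${b%%:*}"          # keep only the host part
      i=$((i + 1))
    done
    # uniq -d prints hosts that appear more than once within this set
    if [ -n "$(printf '%s\n' $hosts | sort | uniq -d)" ]; then
      line="$line  (WARNING: copies share a host)"
    fi
    echo "$line"
    n=$((n + 1))
  done
}

replica_sets 2 k8s-master:/gfs/test1 k8s-master:/gfs/test2 k8s-node1:/gfs/test1 k8s-node1:/gfs/test2
```

Interleaving the hosts instead, e.g. `k8s-master:/gfs/test1 k8s-node1:/gfs/test1 k8s-master:/gfs/test2 k8s-node1:/gfs/test2`, would put the two copies of each set on different machines.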

10. Mount the volume

#Mounted on node2, the volume shows 20G (four 10G bricks, stored in 2 copies)

[root@k8s-node2 ~]# mount -t glusterfs 10.0.0.11:/wahaha /mnt

[root@k8s-node2 ~]# df -h

/dev/sdb 10G 33M 10G 1% /gfs/test1

/dev/sdc 10G 33M 10G 1% /gfs/test2

10.0.0.11:/wahaha 20G 270M 20G 2% /mnt

11. Test that the volume is shared

#On node2, copy something into /mnt

[root@k8s-node2 ~]# cp -a /etc/hosts /mnt/

[root@k8s-node2 ~]# ll /mnt/

total 1

-rw-r--r-- 1 root root 253 Sep 11 10:19 hosts

#Check on the master node

[root@k8s-master volume]# ll /gfs/test1/

total 4

-rw-r--r-- 2 root root 253 Sep 11 10:19 hosts

[root@k8s-master volume]# ll /gfs/test2/

total 4

-rw-r--r-- 2 root root 253 Sep 11 10:19 hosts

12. Expand the volume

#On the master node

[root@k8s-master volume]# gluster volume add-brick wahaha k8s-node2:/gfs/test1 k8s-node2:/gfs/test2 force

volume add-brick: success

#On node2, df shows the volume has been expanded

[root@k8s-node2 ~]# df -h

10.0.0.11:/wahaha 30G 404M 30G 2% /mnt
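The sizes reported by `df` follow from simple arithmetic when all bricks are the same size: each file is stored `replica` times, so usable space is bricks x brick size / replica. A sketch, assuming equal 10G bricks as in this lab (`gluster_usable_gb` is a hypothetical name):

```bash
#!/usr/bin/env bash
# Usable capacity of a distributed-replicated volume with equal-sized bricks.
gluster_usable_gb() {   # usage: gluster_usable_gb BRICKS BRICK_SIZE_GB REPLICA
  echo $(( $1 * $2 / $3 ))
}

echo "before add-brick: $(gluster_usable_gb 4 10 2)G"   # 4 x 10G, replica 2 -> 20G
echo "after add-brick:  $(gluster_usable_gb 6 10 2)G"   # 2 more 10G bricks -> 30G
```

For the same reason, bricks added to a replica 2 volume have to come in multiples of two, as the two node2 bricks above do.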

13. Extension: how to add nodes and replicas

#After adding nodes, rebalance the data; preferably run this during low-traffic hours

[root@k8s-master ~]# gluster volume rebalance wahaha start force

Connecting k8s to glusterfs storage

a: Create an endpoint

#Inspect an existing service's endpoints

kubectl describe svc myweb

kubectl get endpoints myweb

kubectl describe endpoints myweb

#Create

[root@k8s-master ~]# cd k8s_yaml/

[root@k8s-master k8s_yaml]# mkdir gfs

[root@k8s-master k8s_yaml]# cd gfs/

#Add the file

[root@k8s-master gfs]# vim glusterfs-ep.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: glusterfs
  namespace: default
subsets:
- addresses:
  - ip: 10.0.0.11
  - ip: 10.0.0.12
  - ip: 10.0.0.13
  ports:
  - port: 49152
    protocol: TCP

#Create and check

[root@k8s-master gfs]# kubectl create -f glusterfs-ep.yaml

endpoints "glusterfs" created

[root@k8s-master gfs]# kubectl get endpoints

NAME ENDPOINTS AGE

glusterfs 10.0.0.11:49152,10.0.0.12:49152,10.0.0.13:49152 9s

kubernetes 10.0.0.11:6443 6d

mysql 1d

myweb 172.18.13.7:8080 1d

nginx 172.18.13.7:80 5d

nginx-deployment 172.18.13.5:80 1d
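The Endpoints manifest lists every gluster server by hand. If the pool grows, a small generator keeps the file in sync; this sketch reproduces the glusterfs-ep.yaml above from a name, a port and a list of IPs (`gen_gluster_endpoints` is a hypothetical helper):

```bash
#!/usr/bin/env bash
# Generate an Endpoints manifest like glusterfs-ep.yaml (hypothetical helper).
# usage: gen_gluster_endpoints NAME PORT IP...
gen_gluster_endpoints() {
  local name="$1" port="$2" ip
  shift 2
  cat <<EOF
apiVersion: v1
kind: Endpoints
metadata:
  name: ${name}
  namespace: default
subsets:
- addresses:
EOF
  for ip in "$@"; do
    echo "  - ip: ${ip}"
  done
  cat <<EOF
  ports:
  - port: ${port}
    protocol: TCP
EOF
}

gen_gluster_endpoints glusterfs 49152 10.0.0.11 10.0.0.12 10.0.0.13
```

Redirect the output to glusterfs-ep.yaml and create it with `kubectl create -f` as before.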

b:glusterfs-svc.yaml

[root@k8s-master gfs]# vim glusterfs-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: glusterfs
  namespace: default
spec:
  ports:
  - port: 49152
    protocol: TCP
    targetPort: 49152
  sessionAffinity: None
  type: ClusterIP

[root@k8s-master gfs]# kubectl create -f glusterfs-svc.yaml

service "glusterfs" created

c: Create a glusterfs-type pv

#The glusterfs path in the file is the wahaha volume created earlier

[root@k8s-master gfs]# vim glusterfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: gluster
  labels:
    type: glusterfs
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteMany
  glusterfs:
    endpoints: "glusterfs"
    path: "wahaha"
    readOnly: false

[root@k8s-master gfs]# kubectl create -f glusterfs-pv.yaml

persistentvolume "gluster" created

[root@k8s-master gfs]# kubectl get pv

NAME CAPACITY ACCESSMODES RECLAIMPOLICY STATUS CLAIM REASON AGE

gluster 20Gi RWX Retain Available 5s

d:k8s_pvc.yaml

[root@k8s-master gfs]# vim k8s_pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: tomcat-mysql
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 20Gi

[root@k8s-master gfs]# kubectl create -f k8s_pvc.yaml

persistentvolumeclaim "tomcat-mysql" created

[root@k8s-master gfs]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESSMODES AGE

tomcat-mysql Bound gluster 20Gi RWX 9s

[root@k8s-master gfs]# kubectl get pvc -n default

NAME STATUS VOLUME CAPACITY ACCESSMODES AGE

tomcat-mysql Bound gluster 20Gi RWX 19s
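The claim binds to the `gluster` PV because the PV offers the requested access mode (RWX) and at least the requested capacity (20Gi). A simplified sketch of that matching check; the real controller also considers storageClassName, label selectors and volumeMode, and `pv_can_bind` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Simplified PV/PVC match: the PV must offer every requested access mode
# and at least the requested capacity (hypothetical helper).
# usage: pv_can_bind PV_GI "PV_MODES" PVC_GI "PVC_MODES"   (modes: RWO ROX RWX)
pv_can_bind() {
  local pv_gi="$1" pv_modes="$2" pvc_gi="$3" pvc_modes="$4" m
  [ "$pv_gi" -ge "$pvc_gi" ] || return 1
  for m in $pvc_modes; do
    case " $pv_modes " in
      *" $m "*) ;;           # mode offered by the PV
      *) return 1 ;;
    esac
  done
  return 0
}

pv_can_bind 20 "RWX" 20 "RWX" && echo "tomcat-mysql can bind gluster"
```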

e:mysql-rc-pvc.yaml

[root@k8s-master tomcat_demo]# cp mysql-rc-nfs.yaml mysql-rc-pvc.yaml

[root@k8s-master tomcat_demo]# cat mysql-rc-pvc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      volumes:
      - name: mysql
        persistentVolumeClaim:
          claimName: tomcat-mysql
      containers:
      - name: mysql
        volumeMounts:
        - mountPath: /var/lib/mysql
          name: mysql
        image: 10.0.0.11:5000/mysql:5.7
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: '123456'

[root@k8s-master tomcat_demo]# kubectl delete -f mysql-rc-pvc.yaml

replicationcontroller "mysql" deleted

[root@k8s-master tomcat_demo]# kubectl create -f mysql-rc-pvc.yaml

replicationcontroller "mysql" created

[root@k8s-master tomcat_demo]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

mysql-p2xkq 1/1 Running 0 10m 172.18.81.4 k8s-node1

myweb-41l9f 1/1 Running 1 16h 172.18.13.7 k8s-node2

f: Add data in the browser, then delete the pod

#After adding new data, delete the mysql pod; a new pod is created automatically

kubectl delete pod mysql-m3zm9

Visit again in the browser: the data is still there

g: Check the /mnt directory on node2

[root@k8s-node2 ~]# mount -t glusterfs 10.0.0.11:/wahaha /mnt

[root@k8s-node2 mnt]# df -h

10.0.0.11:/wahaha 30G 615M 30G 3% /mnt

[root@k8s-node2 mnt]# ll

total 188434

-rw-r----- 1 polkitd input 56 Sep 18 09:45 auto.cnf

drwxr-x--- 2 polkitd input 4096 Sep 18 09:47 HPE_APP

-rw-r----- 1 polkitd input 719 Sep 18 09:47 ib_buffer_pool

-rw-r----- 1 polkitd input 79691776 Sep 18 09:47 ibdata1

-rw-r----- 1 polkitd input 50331648 Sep 18 09:47 ib_logfile0

-rw-r----- 1 polkitd input 50331648 Sep 18 09:45 ib_logfile1

-rw-r----- 1 polkitd input 12582912 Sep 18 09:47 ibtmp1

drwxr-x--- 2 polkitd input 4096 Sep 18 09:45 mysql

drwxr-x--- 2 polkitd input 4096 Sep 18 09:45 performance_schema

drwxr-x--- 2 polkitd input 4096 Sep 18 09:45 sys

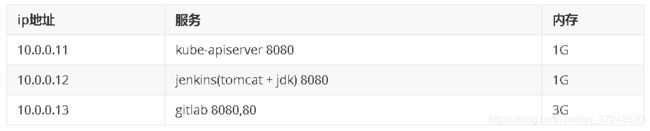

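Integrating with jenkins for CI/CD

jenkins: a tool

for automated code deployment

its core strength is support for a large number of plugins

jenkins is written in Java

For example, the xiaoniaofeifei bird game deployed earlier is a pure HTML page

What if we want to change the bird's color?

HTML files make up the site

The developers write the code and hand it to ops, who then upload and unpack it

Code version control software

git: github

git checkout

git tag

shell scripts

Java projects

Java project code deployment (link)

java

needs to be compiled

into .class files

Install gitlab and upload the code

Download link for the required software (extraction code: dshc)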

#Upload the packages

[root@k8s-node2 jenkins-k8s]# ll

total 890176

-rw-r--r-- 1 root root 9128610 Sep 18 10:48 apache-tomcat-8.0.27.tar.gz

-rw-r--r-- 1 root root 569408968 Sep 18 10:49 gitlab-ce-11.9.11-ce.0.el7.x86_64.rpm

-rw-r--r-- 1 root root 166044032 Sep 18 10:48 jdk-8u102-linux-x64.rpm

-rw-r--r-- 1 root root 89566714 Sep 18 10:49 jenkin-data.tar.gz

-rw-r--r-- 1 root root 77289987 Sep 18 10:49 jenkins.war

-rw-r--r-- 1 root root 91014 Sep 18 10:49 xiaoniaofeifei.zip

#Install

[root@k8s-node2 jenkins-k8s]# rpm -ivh gitlab-ce-11.9.11-ce.0.el7.x86_64.rpm

#Configure

vim /etc/gitlab/gitlab.rb

external_url 'http://10.0.0.13'

prometheus_monitoring['enable'] = false

#Apply the configuration and start the services

gitlab-ctl reconfigure

#Open http://10.0.0.13 in a browser, set the root user's password, and create a project

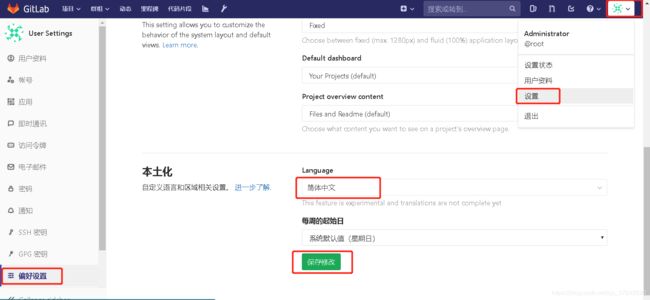

Localizing gitlab 11.1.4 into Chinese (the method is not version-specific)

Create the project

[root@k8s-node2 opt]# cd /srv/

[root@k8s-node2 srv]# ls

[root@k8s-node2 srv]# git clone https://gitlab.com/xhang/gitlab.git

Cloning into 'gitlab'...

#Make sure the git command is available

[root@k8s-node2 srv]# which git

/usr/bin/git

[root@k8s-node2 srv]# git config --global user.name "Administrator"

[root@k8s-node2 srv]# git config --global user.email "[email protected]"

[root@k8s-node2 srv]# cat /root/.gitconfig

[user]

name = Administrator

email = [email protected]

[root@k8s-node2 srv]# mv /root/jenkins-k8s/xiaoniaofeifei.zip .

[root@k8s-node2 srv]# unzip xiaoniaofeifei.zip

[root@k8s-node2 srv]# ls

2000.png 21.js icon.png img index.html sound1.mp3 xiaoniaofeifei.zip

Upload the code

git init

git remote add origin http://10.0.0.13/root/xiaoniao.git

git add .

git commit -m "Initial commit"

#Log in with the gitlab account and password

[root@k8s-node2 srv]# git push -u origin master

Username for 'http://10.0.0.13': root

Password for 'http://root@10.0.0.13':

Upload succeeded

Install jenkins and build docker images automatically

Install jenkins on node1

[root@k8s-node1 opt]# ll

total 334020

-rw-r--r-- 1 root root 9128610 Sep 18 11:53 apache-tomcat-8.0.27.tar.gz

-rw-r--r-- 1 root root 166044032 Sep 18 11:54 jdk-8u102-linux-x64.rpm

-rw-r--r-- 1 root root 89566714 Sep 18 11:54 jenkin-data.tar.gz

-rw-r--r-- 1 root root 77289987 Sep 18 11:54 jenkins.war

rpm -ivh jdk-8u102-linux-x64.rpm

mkdir /app

tar xf apache-tomcat-8.0.27.tar.gz -C /app

rm -fr /app/apache-tomcat-8.0.27/webapps/*

mv jenkins.war /app/apache-tomcat-8.0.27/webapps/ROOT.war

tar xf jenkin-data.tar.gz -C /root

/app/apache-tomcat-8.0.27/bin/startup.sh

netstat -lntup
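Tomcat takes a while to deploy jenkins.war, so rather than re-running `netstat` by hand, a sketch that polls the port until it accepts connections. It assumes bash, since `/dev/tcp/HOST/PORT` is a bash redirection feature rather than a real file; `wait_for_port` is a hypothetical helper, and a host that silently drops packets can make each attempt block until the kernel's connect timeout.

```bash
#!/usr/bin/env bash
# Poll HOST:PORT once per second until a TCP connect succeeds (bash only).
# usage: wait_for_port HOST PORT [TRIES]   (hypothetical helper, default 30 tries)
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" i=1
  while [ "$i" -le "$tries" ]; do
    # the subshell opens and immediately closes the connection
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      echo "$host:$port is accepting connections"
      return 0
    fi
    [ "$i" -lt "$tries" ] && sleep 1
    i=$((i + 1))
  done
  echo "$host:$port still closed after $tries tries" >&2
  return 1
}

# Real use on node1:
#   /app/apache-tomcat-8.0.27/bin/startup.sh && wait_for_port 10.0.0.12 8080 60
```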

Access jenkins in a browser

Open http://10.0.0.12:8080/; the account and password are admin:123456

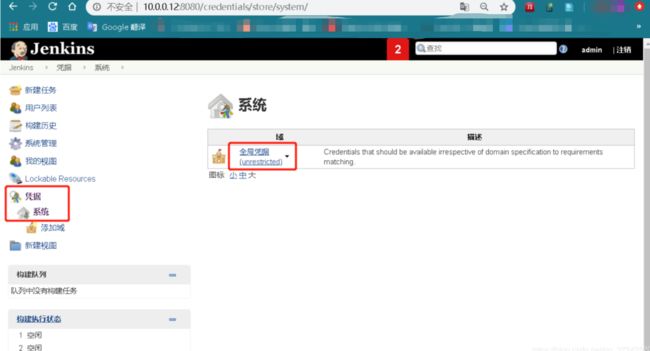

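2.4 Configure the credentials jenkins uses to pull code from gitlab

a: Generate a key pair on the jenkins host

#Generate the key, pressing Enter at every prompt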

[root@k8s-node1 opt]# ssh-keygen -t rsa

[root@k8s-node1 opt]# ls /root/.ssh/

id_rsa id_rsa.pub known_hosts

b: Copy the public key and paste it into gitlab

How to open the page for adding a new key

c: View the public key on node1

[root@k8s-node1 opt]# cat /root/.ssh/id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCrlIgDVptvmipg00CP7P955Nbn2h+oy06hUiYWE+htG6VjLSCFjEhrxgXOCX2EAKGLgveWA46MLt4XN2Gi4E1H3aDsM/gBu8D+4487bKuLKv1ZeMeWECcDKL16cjtSQw6ShsCLBwh3aq5TT85I/ypUYMsQ1+N4Iiv4i3g3ozn0yPsyMq9rekW+nHbs8eJL1OzIue6hL78AgI8QuZ7QaCQ5TJDmCwKuLC+B+6ajyNezSxBIlZeBuUE5lacKmvxxnX5Dqzlvf5uGrVRSgPCR6oTTDTHmx2GVHIl7BJLZH/uR4tP7gYoY9fFOM1VyJ8Pjq+XcLGYFWNQKTgxKQO/08sjr root@k8s-node1

d: Create a global credential in jenkins, using the private key below

[root@k8s-node1 opt]# cat /root/.ssh/id_rsa

-----BEGIN RSA PRIVATE KEY-----

MIIEpAIBAAKCAQEAq5SIA1abb5oqYNNAj+z/eeTW59ofqMtOoVImFhPobRulYy0g

hYxIa8YFzgl9hAChi4L3lgOOjC7eFzdhouBNR92g7DP4AbvA/uOPO2yriyr9WXjH

lhAnAyi9enI7UkMOkobAiwcId2quU0/OSP8qVGDLENfjeCIr+It4N6M59Mj7MjKv

a3pFvpx27PHiS9TsyLnuoS+/AICPELme0GgkOUyQ5gsCriwvgfumo8jXs0sQSJWX

gblBOZWnCpr8cZ1+Q6s5b3+bhq1UUoDwkeqE0w0x5sdhlRyJewSS2R/7keLT+4GK

GPXxTjNVcifD46vl3CxmBVjUCk4MSkDv9PLI6wIDAQABAoIBAQCZBKL0TzXaJuQq

a9xFPzhsLgDWzvmzIHWke03KHMEJJUGvHBzH3V7s9rJQmLgelC197TP+znc/X8Dj

dZmWl3F0aRxN6t8ANMCe0LT5ayXlvFYriAJ/OzJ/p8Krw9pRt8n0NUbb9k6/qR2E

4UR4Z1AJ5jTTdaXAisEqLL/u5pwWR/yJgDeeUY5SC3QU1s2dvFUR/r9sqeXHjaCu

scu3EwU4Mo6+8yEFRpNLf2wMYPZyrb4RCDXpLRnrOHc9M67tiKpdQYplhuuSDD8Y

KOQO2isDigz56CIMBzYKysNG3Vg8LVHkEZ65DVoSF5bI8PcLuZ9Fod2ZYI212z48

Rb6IRUThAoGBAOJNo033aqqEcnXA+0pFgP1uL2q+eSWsztnw4M3OQl9hYZUIcaq6

cGBoIRlw/RiR1b0Q+lvj59+0/hJ9nkAqhcw/gAGPClQQZlVfwJOcx2++3vvDvRoA

3WZlRLswjktNBBDTaCBxEXoEuH1z/zWCEyXQAA1PMLHSkwWk03iazqHRAoGBAMIY

ix5OGBMVkwGzT6+pUojCNoRy5fZ0CxgiO2ILjFo7fYFNHGPXlJ0p85t7izq7hncb

tE8P6LaFGBjoe2+rf3e35TEXgUntmV3BucmodqiNM11RBXP4VW5ed7URCxtrgieo

xPUwDeerBlb0YP9Uohsf+qmqrRAhd5BxvAgjd1H7AoGBANhXWFLkwHga/kFMJ+8s

2s9sUrA9PxuhRG5dNMwK7rC4K82JsQCCE4RWh64Gsi6W3DpOzMij67uVD38lz++P

tzE3U2wqDrmmo+3iB/wV2SMe2ZTd3x3Izd9h2H8LQD0Ed2qOb/Dzpr17XdOw3L2O

iDeRzTrsBaU5pYuzsuaNOBTBAoGADh77ABluZvUK3PTOt1j6SDjY/ondcTDAHeFf

sKJmc6ogV2fkyN7GUSjcMFOsrXk3LzM0ywu9QoosVqOTV2yCuZMHearcHSTMI6YU

fjdjap/bPM7INse6b20wCFxVEomfzoLY0X3NhS1MKMdexzTBFngdJHrmXGYS7M9Z

fr4V0EECgYAb1ZXLghOZDFp80DLryQPaFz63kIf5HKGQM5KJBos7bq5vmxnuyT3f

5BTLNSdmpAdjXmHXvzynEWeg/bkDRXK4iFMvGQj0fo1MNbodyj6BcUSH6M7C1Srm

MVroh40pZQbAdeCOlJmDSdxhiRc0rVlmtTuIzS80yHW91Cfn4nk3ag==

-----END RSA PRIVATE KEY-----

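e: Create a new job

Copy the clone URL

Paste the URL

Choose "Execute shell", then save

Click "Build Now" and check the console output

Write a dockerfile and test it

#Written on node2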

[root@k8s-node2 srv]# ls

2000.png 21.js icon.png img index.html sound1.mp3 xiaoniaofeifei.zip

[root@k8s-node2 srv]# vim dockerfile


FROM 10.0.0.11:5000/nginx:1.13

ADD . /usr/share/nginx/html

#Build

[root@k8s-node2 srv]# docker build -t xiaoniao:v1 .

#Run a container and check it

[root@k8s-node2 srv]# docker run -d -P xiaoniao:v1

74fe566ac2e1f53eafec83904ea17a2ec0393f82cbb22e05e1465e0f5d29e86f

[root@k8s-node2 srv]# docker ps -a -l

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

74fe566ac2e1 xiaoniao:v1 "nginx -g 'daemon ..." 20 seconds ago Up 16 seconds 0.0.0.0:32768->80/tcp optimistic_bartik

Open 10.0.0.13:32768 in a browser

#On node1, the jenkins workspace holds the code jenkins pulled from gitlab

[root@k8s-node1 ~]# cd /root/.jenkins/workspace/xiaoniao

[root@k8s-node1 xiaoniao]# ls

2000.png 21.js icon.png img index.html sound1.mp3 xiaoniaofeifei.zip

Upload the code to gitlab

Add a dockerfile file with the content above

Click Configure again and change the build's shell command to:

docker build -t 10.0.0.11:5000/xiaoniao:v1 .

docker push 10.0.0.11:5000/xiaoniao:v1

Click "Build Now"

Check the console output

Check the private registry for the xiaoniao image and its tag

[root@k8s-master ~]# cd /opt/myregistry/docker/registry/v2/repositories/

[root@k8s-master repositories]# ls

busybox mysql nginx rhel7 tomcat-app wordpress xiaoniao

[root@k8s-master repositories]# ls xiaoniao/_manifests/tags/

v1

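How to modify or upgrade the code

For example, when the developers change the index page

How to roll the code back: give every build its own image tag, so that earlier versions stay in the registry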

docker build -t 10.0.0.11:5000/xiaoniao:v$BUILD_ID .

docker push 10.0.0.11:5000/xiaoniao:v$BUILD_ID

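This uses the BUILD_ID environment variable that jenkins sets for each build

Click "Build Now"

Check the private registry again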

[root@k8s-master repositories]# ls xiaoniao/_manifests/tags/

v1 v4
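Tagging each image with `v$BUILD_ID` is what makes a rollback possible: older tags stay in the registry. A sketch of two hypothetical helpers, one that builds the tag the jenkins shell step pushes and one that picks the tag just before the current one from a list such as `v1 v4` above. It relies on GNU `sort -V` for version ordering.

```bash
#!/usr/bin/env bash
# Hypothetical helpers around the per-build image tags.

# Tag pushed by the jenkins shell step; BUILD_ID is set by jenkins for each build.
# ${BUILD_ID:?} aborts (the whole script, if called at top level) when it is unset.
image_ref() {
  echo "${REGISTRY:-10.0.0.11:5000}/xiaoniao:v${BUILD_ID:?BUILD_ID is not set}"
}

# Print the tag that comes right before CURRENT in version order.
# usage: previous_tag CURRENT TAG...
previous_tag() {
  local current="$1"
  shift
  printf '%s\n' "$@" | sort -V | awk -v cur="$current" '
    $0 == cur { found = 1; if (prev != "") print prev; exit }
    { prev = $0 }
    END { if (found && prev != "") exit 0; exit 1 }'
}

BUILD_ID=4 image_ref      # prints 10.0.0.11:5000/xiaoniao:v4
previous_tag v4 v1 v4     # prints v1
```

Version sorting matters once build numbers reach two digits: a plain sort would put v10 before v9.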

Extension: installing the latest k8s with kubeadm

Suitable for production environments

Prepare two new virtual machines

#hosts resolution

[root@k8s-node-1 docker_rpm]# cat /etc/hosts

10.0.0.11 k8s-master

10.0.0.12 k8s-node1

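Upload the required packages, then unpack and install them

Download link for the docker packages (extraction code: scvn)

#Run on both virtual machines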

tar xf docker_rpm.tar.gz

ls

cd docker_rpm/

ls

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

yum localinstall *.rpm -y

systemctl start docker

systemctl enable docker

Install kubernetes

Download link for the kubernetes packages (extraction code: 12dy)

#Run on both virtual machines

tar xf k8s_rpm.tar.gz

ls

cd k8s_rpm/

ls

yum localinstall *.rpm -y

systemctl start kubelet

systemctl enable kubelet

#Enable kubectl bash completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

Initialize the k8s cluster with kubeadm

Download link for k8s_1.15 (extraction code: 6n93)

#Run on both virtual machines

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

#Disable swap: comment out the swap line in /etc/fstab, then turn swap off

vim /etc/fstab

swapoff -a
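Instead of editing /etc/fstab in vim, the swap entry can be commented out non-interactively. A minimal sketch assuming GNU sed (as on CentOS 7): it comments out every active line containing a whitespace-separated `swap` field and keeps the original as `fstab.bak`. `comment_out_swap` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Comment out active fstab lines that contain a whitespace-separated "swap" field.
# GNU sed assumed; the original file is kept as FSTAB.bak.
# usage: comment_out_swap [FSTAB]   (hypothetical helper; default /etc/fstab)
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -E -i.bak 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Real use, as root:  comment_out_swap && swapoff -a
```

Lines that already start with `#` are left alone, so running it twice is harmless.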

#Run on the master (control-plane) node

kubeadm init --kubernetes-version=v1.15.0 --pod-network-cidr=172.18.0.0/16 --service-cidr=10.254.0.0/16
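The pod network (172.18.0.0/16), the service network (10.254.0.0/16) and the hosts' own network (10.0.0.0/24) must not overlap. A sketch that checks this with plain prefix arithmetic; `ip_to_int` and `cidrs_overlap` are hypothetical helpers. Assuming the stock kube-flannel.yml downloaded below still uses its usual 10.244.0.0/16 default, the `Network` value in that file would need to be changed to 172.18.0.0/16 to match `--pod-network-cidr`.

```bash
#!/usr/bin/env bash
# Check that the cluster's address ranges do not overlap (hypothetical helpers).
ip_to_int() {
  local IFS=.
  set -- $1                # split the dotted quad into $1..$4
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

cidrs_overlap() {          # usage: cidrs_overlap A.B.C.D/N E.F.G.H/M
  local b1="${1#*/}" b2="${2#*/}" bits mask
  bits=$(( b1 < b2 ? b1 : b2 ))      # compare on the shorter prefix
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "${1%/*}") & mask )) -eq $(( $(ip_to_int "${2%/*}") & mask )) ]
}

for pair in "172.18.0.0/16 10.254.0.0/16" "172.18.0.0/16 10.0.0.0/24" "10.254.0.0/16 10.0.0.0/24"; do
  set -- $pair
  if cidrs_overlap "$1" "$2"; then echo "OVERLAP: $1 $2"; else echo "ok: $1 $2"; fi
done
```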

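Add the nodes to the k8s cluster

#Run on each node: the kubeadm join command printed at the end of the kubeadm init output

Configure a network plugin for the k8s cluster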

[root@k8s-master ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml