主机环境 redhat6.5 64位

实验环境 服务端1 ip 172.25.29.1 主机名:server1.example.com ricci

服务端2 ip 172.25.29.2 主机名:server2.example.com ricci

管理端1 ip 172.25.29.3 主机名:server3.example.com luci

管理端2 ip 172.25.29.250 fence_virtd

防火墙状态:关闭

1. 安装ricci、luci及创建节点

1.安装、开启ricci(服务端1)

[[email protected]]# vim dvd.repo #安装之前,修改yum源

#repos on instructor for cla***oom use

#Main rhel6.5 server

[base]

name=InstructorServer Repository

baseurl=http://172.25.29.250/rhel6.5

gpgcheck=0

#HighAvailability rhel6.5

[HighAvailability]

name=InstructorHighAvailability Repository

baseurl=http://172.25.29.250/rhel6.5/HighAvailability

gpgcheck=0

#LoadBalancer packages

[LoadBalancer]

name=InstructorLoadBalancer Repository

baseurl=http://172.25.29.250/rhel6.5/LoadBalancer

gpgcheck=0

#ResilientStorage

[ResilientStorage]

name=InstructorResilientStorage Repository

baseurl=http://172.25.29.250/rhel6.5/ResilientStorage

gpgcheck=0

#ScalableFileSystem

[ScalableFileSystem]

name=InstructorScalableFileSystem Repository

baseurl=http://172.25.29.250/rhel6.5/ScalableFileSystem

gpgcheck=0

[[email protected]]# yum clean all #清除缓存

Loadedplugins: product-id, subscription-manager

Thissystem is not registered to Red Hat Subscription Management. You can usesubscription-manager to register.

Cleaningrepos: HighAvailability LoadBalancer ResilientStorage

: ScalableFileSystem base

Cleaningup Everything

[[email protected]]# yum install ricci -y #安装ricci

[[email protected]]# passwd ricci #修改ricci密码

Changingpassword for user ricci.

Newpassword:

BADPASSWORD: it is based on a dictionary word

BADPASSWORD: is too simple

Retypenew password:

passwd:all authentication tokens updated successfully.

[[email protected]]# /etc/init.d/ricci start #开启ricci

Startingsystem message bus: [ OK ]

Startingoddjobd: [ OK ]

generatingSSL certificates... done

GeneratingNSS database... done

Startingricci: [ OK ]

[[email protected]]# chkconfig ricci on #开机自动开启

服务端2和服务端1做相同的操作

2.安装、开启luci (管理端1)

安装之前,与服务端1一样修改yum源

[[email protected]]# yum install luci -y #安装luci

[[email protected]]# /etc/init.d/luci start #开启ruci

Startluci... [ OK ]

Pointyour web browser to https://server3.example.com:8084 (or equivalent) to accessluci

在登陆之前,必须有DNS解析,也就是在/etc/hosts添加

例如: 172.25.29.3 server3.example.com

3.创建节点

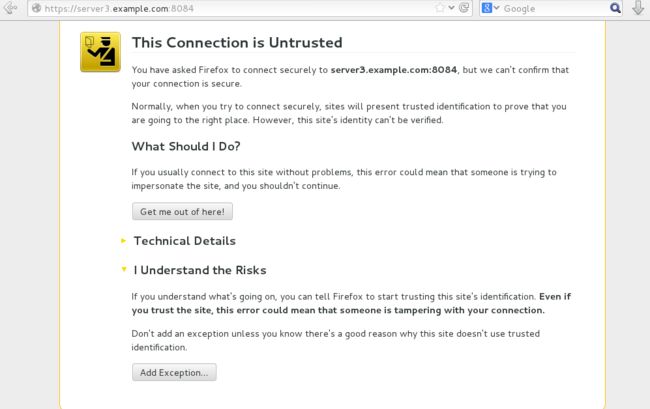

登陆 https://server3.example.com:8084 #luci开放的是8084端口

安全证书,选I Understand Risks

点击Confirm Security Excepton

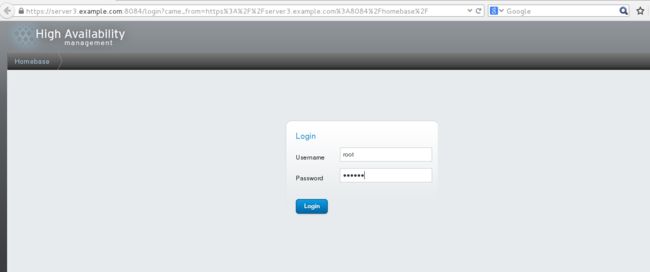

进入到管理服务器的luci界面,登陆时的密码是安装luci虚拟机的root密码

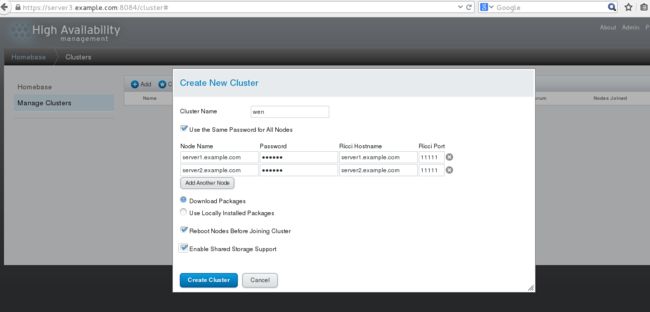

选择Manage Clusters,之后点击Create创建集群

如图,Cluster Name创建集群的名称,勾选Use the Same Passwordfor All Nodes,指的是所有结点所用的是相同的密码,填写要创建的结点名称和密码,名称是服务端的主机名,密码是上面提到的passwd ricci的修改的密码。勾选Download PackagesReboot和Enable,选择Create Cluster

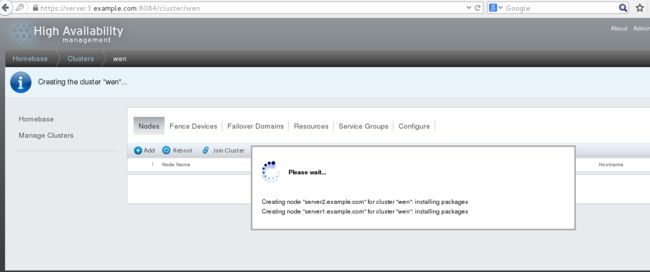

正在创建节点,如图

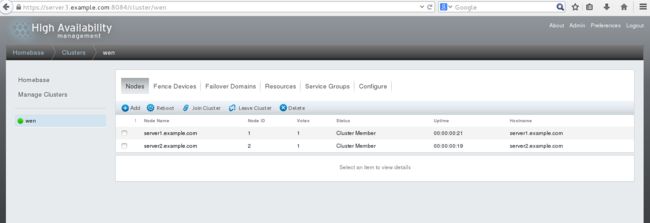

创建完成,如图

创建完成之后,在服务端1和服务端2的/etc/cluster/下会生成cluster.conf文件,查看如下

[root@server1~]# cd /etc/cluster/

[root@server1cluster]# ls

cluster.conf cman-notify.d

[root@server1cluster]# cat cluster.conf #查看文件内容

2.安装fence_virtd、创建fence设备

1.安装、开启fence_virtd(管理端2)

[root@foundation29Desktop]# yum install fence-virtd* -y

[root@foundation29Desktop]# fence_virtd -c #设置

Modulesearch path [/usr/lib64/fence-virt]:

Availablebackends:

libvirt 0.1

Availablelisteners:

multicast 1.2

serial 0.4

Listenermodules are responsible for accepting requests

fromfencing clients.

Listenermodule [multicast]: #多播

Themulticast listener module is designed for use environments

wherethe guests and hosts may communicate over a network using

multicast.

Themulticast address is the address that a client will use to

sendfencing requests to fence_virtd.

MulticastIP Address [225.0.0.12]: #多播ip

Usingipv4 as family.

MulticastIP Port [1229]: #多播端口号

Settinga preferred interface causes fence_virtd to listen only

onthat interface. Normally, it listens onall interfaces.

Inenvironments where the virtual machines are using the host

machineas a gateway, this *must* be set (typically to virbr0).

Setto 'none' for no interface.

Interface[br0]:

Thekey file is the shared key information which is used to

authenticatefencing requests. The contents of thisfile must

bedistributed to each physical host and virtual machine within

acluster.

KeyFile [/etc/cluster/fence_xvm.key]: #key文件的路径

Backendmodules are responsible for routing requests to

theappropriate hypervisor or management layer.

Backendmodule [libvirt]:

Configurationcomplete.

===Begin Configuration ===

backends{

libvirt {

uri = "qemu:///system";

}

}

listeners{

multicast {

port = "1229";

family = "ipv4";

interface = "br0";

address = "225.0.0.12";

key_file ="/etc/cluster/fence_xvm.key";

}

}

fence_virtd{

module_path ="/usr/lib64/fence-virt";

backend = "libvirt";

listener = "multicast";

}

===End Configuration ===

Replace/etc/fence_virt.conf with the above [y/N]? y

[root@foundation29Desktop]# mkdir /etc/cluster #创建cluster目录

[root@foundation29Desktop]# cd /etc/cluster/

[root@foundation29cluster]# dd if=/dev/urandom of=fence_xvm.key bs=128 count=1 #生成文件

1+0records in

1+0records out

128bytes (128 B) copied, 0.000167107 s, 766 kB/s

[root@foundation29Desktop]# scp fence_xvm.key [email protected]:/etc/cluster/ #将文件传给服务端1

[email protected]'spassword:

fence_xvm.key 100% 512 0.5KB/s 00:00

测试

[root@server1cluster]# ls #查看

cluster.conf cman-notify.d fence_xvm.key

以同样的方法传送给服务端2

[root@foundation29Desktop]# systemctl start fence_fence_virtd.service #开启fence(由于管理端2是7.1的系统,开启时的命令不太一。如果是6.5系统,则用/etc/init.d/fence_virtd start即可)

2.创建fence设备

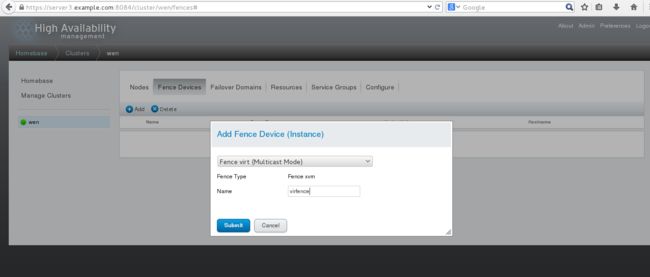

选择Fance Devices

选择Add,如下图,Name指的是添加fence设备的名称,写完之后选择Submit

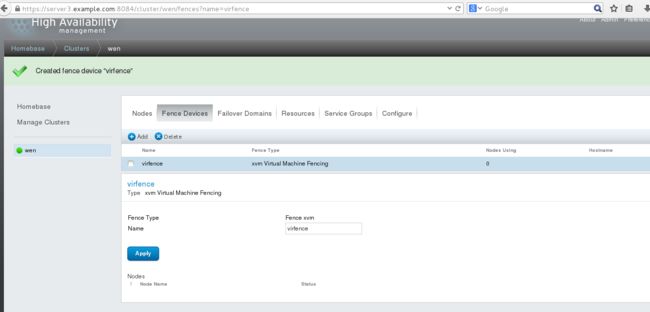

结果

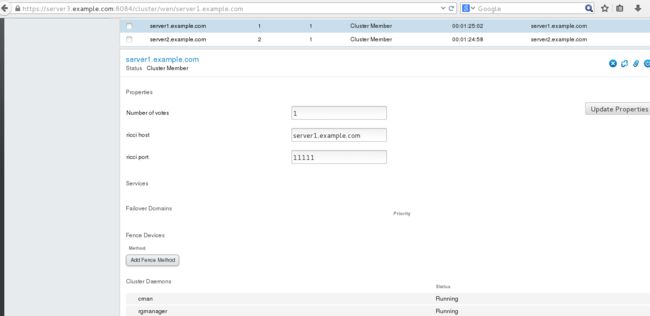

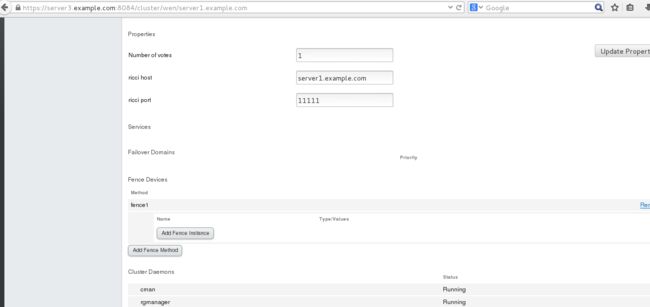

选择server1.example.com

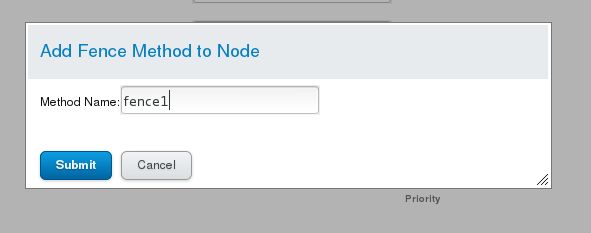

点击Add Fence Method ,添加Method Name

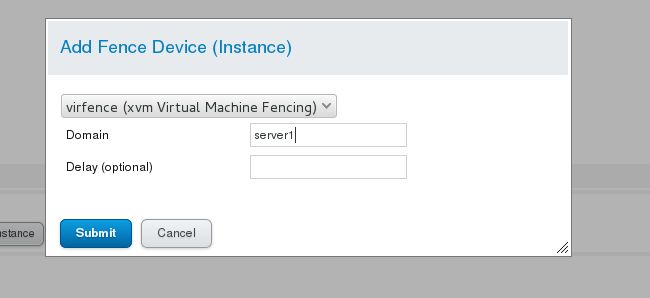

如图,选择Add Tence Instance

填写Domin,选择Submit

完成之后,服务端2和服务端1的配置相同

测试

[root@server1cluster]# fence_node server2.example.com #测试server2的结点

fenceserver2.example.com success

[root@server2cluster]# fence_node server1.example.com #测试server1的结点

fenceserver1.example.com success

至此,高可用集群(HA)搭建完毕,可以根据自己的需要在上面铺设自己所需要的业务。