Environment: Ubuntu 14.04.1 LTS (GNU/Linux 3.13.0-105-generic x86_64)

1 Understanding TensorFlow functions

1.1 tf.truncated_normal

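truncated_normal(
    shape,
    mean=0.0,
    stddev=1.0,
    dtype=tf.float32,
    seed=None,
    name=None
)

Description:

Generates random values from a truncated normal distribution. Any sample that falls more than two standard deviations from the mean is dropped and redrawn, so every value lies in [mean - 2 * stddev, mean + 2 * stddev].

Example code:

Create the source file truncated_normal.py in the /home/ubuntu directory:

truncated_normal.py

#!/usr/bin/python
import tensorflow as tf

# 3x3 tensor of truncated-normal samples with mean 0 and standard deviation 1
initial = tf.truncated_normal(shape=[3, 3], mean=0, stddev=1)
print(tf.Session().run(initial))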

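Then run:

python /home/ubuntu/truncated_normal.py

Output:

[[-0.18410809 -1.28592777  0.58813173]
 [-1.58745313 -0.48672566 -0.27244243]
 [-1.45833147  0.51306736  1.20532846]]

The result is a 3x3 matrix whose values all lie in [-2, 2]. Because no seed is set, the values differ from run to run. Try modifying the source code and see how the output changes.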
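To make the truncation rule concrete, here is a minimal pure-Python sketch of the drop-and-redraw idea. It is not TensorFlow's implementation; the helper name truncated_normal and its nested-list return type are assumptions made for illustration only.

```python
import random


def truncated_normal(shape, mean=0.0, stddev=1.0, seed=None):
    """Return a rows x cols nested list of truncated-normal samples.

    Hypothetical helper: samples farther than 2 * stddev from the mean
    are discarded and drawn again (rejection sampling).
    """
    rng = random.Random(seed)
    rows, cols = shape

    def draw():
        while True:
            value = rng.gauss(mean, stddev)
            if abs(value - mean) <= 2 * stddev:
                return value

    return [[draw() for _ in range(cols)] for _ in range(rows)]


samples = truncated_normal([3, 3], mean=0.0, stddev=1.0, seed=0)
for row in samples:
    print(row)
```

Every printed value stays within [-2, 2]; changing mean or stddev shifts and scales that interval accordingly.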

1.2 tf.constant

constant(
    value,
    dtype=None,
    shape=None,
    name='Const',
    verify_shape=False
)

Description:

Creates a constant tensor of the given shape from value. A list is laid out in row-major order; a scalar fills the whole tensor, as b does in the example.

Example code:

Create the source file constant.py in the /home/ubuntu directory, using the following as a reference:

constant.py

#!/usr/bin/python
import tensorflow as tf
import numpy as np

a = tf.constant([1, 2, 3, 4, 5, 6], shape=[2, 3])
b = tf.constant(-1, shape=[3, 2])  # scalar -1 fills the 3x2 tensor
c = tf.matmul(a, b)

e = tf.constant(np.arange(1, 13, dtype=np.int32), shape=[2, 2, 3])
f = tf.constant(np.arange(13, 25, dtype=np.int32), shape=[2, 3, 2])
g = tf.matmul(e, f)  # batched: multiplies the two matrix pairs independently

with tf.Session() as sess:
    print(sess.run(a))
    print("##################################")
    print(sess.run(b))
    print("##################################")
    print(sess.run(c))
    print("##################################")
    print(sess.run(e))
    print("##################################")
    print(sess.run(f))
    print("##################################")
    print(sess.run(g))

Then run:

python /home/ubuntu/constant.py

Output:

[[1 2 3]
 [4 5 6]]
##################################
[[-1 -1]
 [-1 -1]
 [-1 -1]]
##################################
[[ -6  -6]
 [-15 -15]]
##################################
[[[ 1  2  3]
  [ 4  5  6]]

 [[ 7  8  9]
  [10 11 12]]]
##################################
[[[13 14]
  [15 16]
  [17 18]]

 [[19 20]
  [21 22]
  [23 24]]]
##################################
[[[ 94 100]
  [229 244]]

 [[508 532]
  [697 730]]]

a: a 2x3 tensor;

b: a 3x2 tensor;

c: a 2x2 tensor;

e: a 2x2x3 tensor;

f: a 2x3x2 tensor;

g: a 2x2x2 tensor.

Try modifying the source code and see how the output changes.
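The shapes above follow from ordinary matrix multiplication applied once per leading index. The pure-Python sketch below reproduces c and g with nested lists; the helpers fill, reshape_2d, matmul and batched_matmul are hypothetical names introduced for illustration and are not TensorFlow functions.

```python
def fill(value, rows, cols):
    """Scalar value repeated into a rows x cols matrix."""
    return [[value] * cols for _ in range(rows)]


def reshape_2d(flat, rows, cols):
    """Lay a flat list out row by row as a rows x cols matrix."""
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]


def matmul(a, b):
    """Plain matrix product of two nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def batched_matmul(e, f):
    """Multiply matching matrices of two stacks, one pair at a time."""
    return [matmul(m, n) for m, n in zip(e, f)]


a = reshape_2d([1, 2, 3, 4, 5, 6], 2, 3)
b = fill(-1, 3, 2)
c = matmul(a, b)

e = [reshape_2d(list(range(1, 7)), 2, 3), reshape_2d(list(range(7, 13)), 2, 3)]
f = [reshape_2d(list(range(13, 19)), 3, 2), reshape_2d(list(range(19, 25)), 3, 2)]
g = batched_matmul(e, f)

print(c)  # [[-6, -6], [-15, -15]]
print(g)  # [[[94, 100], [229, 244]], [[508, 532], [697, 730]]]
```

Each 2x3 matrix in e is multiplied by the 3x2 matrix at the same position in f, which is why g has shape 2x2x2.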

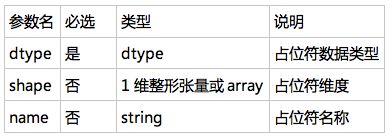

1.3 tf.placeholder

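placeholder(
    dtype,
    shape=None,
    name=None
)

Description:

Creates a placeholder: a tensor with no value of its own, which must be fed data through feed_dict when the graph is run.

Example code:

Create the source file placeholder.py in the /home/ubuntu directory, using the following as a reference:

placeholder.py

#!/usr/bin/python
import tensorflow as tf
import numpy as np

# The first dimension is None, so the row count is fixed only when data is fed
x = tf.placeholder(tf.float32, [None, 10])
y = tf.matmul(x, x)

with tf.Session() as sess:
    rand_array = np.random.rand(10, 10)
    print(sess.run(y, feed_dict={x: rand_array}))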

Then run:

python /home/ubuntu/placeholder.py

Output:

[[1.81712103 2.02877522 1.68924046 2.40462661 1.90574181 2.21769357

1.66864204 1.87491691 2.32046914 2.07164645]

[ 3.18995905 2.80489087 2.53446984 2.93795609 2.35939479 2.61397004

1.47369146 1.69601274 2.96881104 2.80005288]

[ 3.40027285 3.17128634 2.83247375 3.58863354 2.67104673 2.81708789

2.04706836 2.4437325 3.10964417 3.03987789]

[ 2.04807019 2.11296868 1.85848451 2.26381588 2.00105739 2.1591928

1.59371364 1.69079185 2.35918951 2.3390758 ]

[ 3.14326477 3.03518987 2.70114732 3.35116243 2.97751141 3.10402942

2.12285256 2.45907426 3.64020634 3.09404778]

[ 2.46236205 2.59506202 2.11775351 2.43848658 2.24290538 2.07725525

1.73363113 1.79471815 2.22352362 2.47508812]

[ 2.3489728 3.25824308 2.53069353 3.52486014 3.3552053 3.18628955

2.6079123 2.44158649 3.47814059 3.41102791]

[ 2.39285374 2.33928251 2.19442534 2.28283715 1.99198937 1.68016291

1.41813767 2.16835332 1.86814547 1.73498607]

[ 2.71498179 2.88635182 2.35225129 3.11072111 2.72122979 2.57475829

2.12802029 2.54610658 2.97226429 2.80705166]

[ 2.99051809 3.2901628 2.51092815 3.67744827 2.57051396 2.53983688

2.18044734 2.18324852 2.58032012 3.19048524]]

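The output is a 10x10 tensor; the values differ from run to run because the input is random. Try modifying the source code and see how the output changes.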
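The idea of "build the graph first, supply the data later" can be sketched without TensorFlow. In the toy model below, Placeholder, MatMul and run are hypothetical names introduced for illustration; they only imitate the feed_dict mechanism and are not part of any library.

```python
class Placeholder(object):
    """A named slot that has no value until run time."""

    def __init__(self, name):
        self.name = name


class MatMul(object):
    """A graph node that multiplies the results of two other nodes."""

    def __init__(self, left, right):
        self.left = left
        self.right = right


def run(node, feed_dict):
    """Evaluate a node, taking placeholder values from feed_dict."""
    if isinstance(node, Placeholder):
        if node not in feed_dict:
            raise ValueError("no value fed for placeholder %r" % node.name)
        return feed_dict[node]
    left = run(node.left, feed_dict)
    right = run(node.right, feed_dict)
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*right)]
            for row in left]


x = Placeholder("x")
y = MatMul(x, x)  # nothing is computed yet

result = run(y, {x: [[1.0, 2.0], [3.0, 4.0]]})
print(result)  # [[7.0, 10.0], [15.0, 22.0]]
```

Calling run(y, {}) raises an error, which mirrors the requirement that every placeholder used by a computation must be fed.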

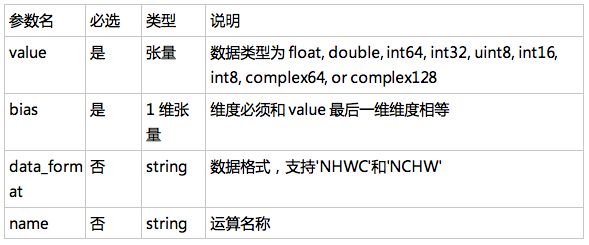

1.4 tf.nn.bias_add

bias_add(
    value,
    bias,
    data_format=None,
    name=None
)

Description:

Adds the bias term bias to value. It can be seen as a special case of tf.add in which bias must be one-dimensional, its size must equal the last dimension of value, and its data type must be the same as that of value.

Example code:

Create the source file bias_add.py in the /home/ubuntu directory, using the following as a reference:

bias_add.py

#!/usr/bin/python
import tensorflow as tf

a = tf.constant([[1.0, 2.0], [1.0, 2.0], [1.0, 2.0]])
b = tf.constant([2.0, 1.0])
c = tf.constant([1.0])

sess = tf.Session()
print(sess.run(tf.nn.bias_add(a, b)))
# print(sess.run(tf.nn.bias_add(a, c)))  # error: size of c does not match the last dimension of a
print("##################################")
print(sess.run(tf.add(a, b)))
print("##################################")
print(sess.run(tf.add(a, c)))  # tf.add broadcasts the single element of c

Then run:

python /home/ubuntu/bias_add.py

Output:

[[ 3.  3.]
 [ 3.  3.]
 [ 3.  3.]]
##################################
[[ 3.  3.]
 [ 3.  3.]
 [ 3.  3.]]
##################################
[[ 2.  3.]
 [ 2.  3.]
 [ 2.  3.]]

The output is three 3x2 tensors. Try modifying the source code and see how the output changes.
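The difference between the strict bias_add rule and the more permissive broadcasting of tf.add can be sketched in pure Python. The functions bias_add and broadcast_add below are hypothetical helpers written for this illustration, covering only the 2-D case used in the example.

```python
def bias_add(value, bias):
    """Add a 1-D bias to every row; its length must match the row width."""
    width = len(value[0])
    if len(bias) != width:
        raise ValueError("bias has %d elements, expected %d" % (len(bias), width))
    return [[v + b for v, b in zip(row, bias)] for row in value]


def broadcast_add(value, other):
    """Like bias_add, but a single-element vector is stretched to the row width."""
    if len(other) == 1:
        other = other * len(value[0])
    return bias_add(value, other)


a = [[1.0, 2.0], [1.0, 2.0], [1.0, 2.0]]
b = [2.0, 1.0]
c = [1.0]

print(bias_add(a, b))       # [[3.0, 3.0], [3.0, 3.0], [3.0, 3.0]]
print(broadcast_add(a, c))  # [[2.0, 3.0], [2.0, 3.0], [2.0, 3.0]]
```

Passing c to bias_add raises ValueError in this sketch, which corresponds to the commented-out line in bias_add.py.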

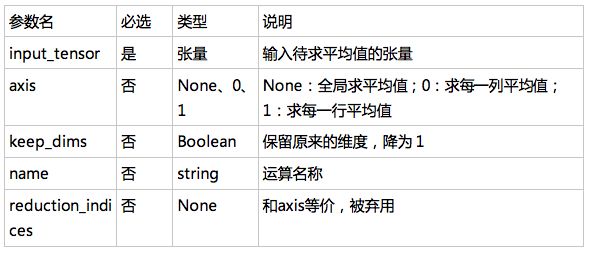

1.5 tf.reduce_mean

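reduce_mean(
    input_tensor,
    axis=None,
    keep_dims=False,
    name=None,
    reduction_indices=None
)

Description:

Computes the mean of the elements of input_tensor. With axis=None the mean is taken over all elements; otherwise the mean is taken along the given axis, which is removed from the result.

Example code:

Create the source file reduce_mean.py in the /home/ubuntu directory, using the following as a reference:

reduce_mean.py

#!/usr/bin/python
import tensorflow as tf

initial = [[1., 1.], [2., 2.]]
x = tf.Variable(initial, dtype=tf.float32)
init_op = tf.global_variables_initializer()

with tf.Session() as sess:
    sess.run(init_op)
    print(sess.run(tf.reduce_mean(x)))     # mean of all elements
    print(sess.run(tf.reduce_mean(x, 0)))  # axis 0: mean of each column
    print(sess.run(tf.reduce_mean(x, 1)))  # axis 1: mean of each row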

Then run:

python /home/ubuntu/reduce_mean.py

Output:

1.5
[ 1.5  1.5]
[ 1.  2.]

Try modifying the source code and see how the output changes.
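With a square input it is easy to lose track of which axis disappears, so the sketch below uses a 2x3 matrix instead. It is a pure-Python illustration for 2-D nested lists only; the function reduce_mean here is a hypothetical stand-in, not the TensorFlow op.

```python
def reduce_mean(matrix, axis=None):
    """Mean over all elements, down each column (axis=0) or along each row (axis=1)."""
    if axis is None:
        flat = [v for row in matrix for v in row]
        return sum(flat) / float(len(flat))
    if axis == 0:
        return [sum(col) / float(len(col)) for col in zip(*matrix)]
    if axis == 1:
        return [sum(row) / float(len(row)) for row in matrix]
    raise ValueError("axis must be None, 0 or 1 in this sketch")


m = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]

print(reduce_mean(m))     # 3.5
print(reduce_mean(m, 0))  # [2.5, 3.5, 4.5]
print(reduce_mean(m, 1))  # [2.0, 5.0]
```

Reducing along axis 0 leaves one value per column (three values), while reducing along axis 1 leaves one value per row (two values).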

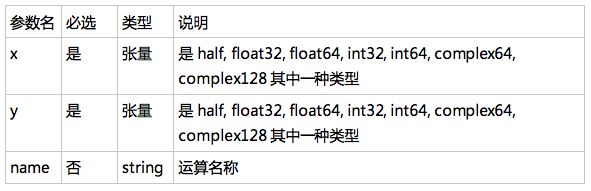

1.6 tf.squared_difference

squared_difference(
    x,
    y,
    name=None
)

Description:

Computes (x - y) * (x - y) element-wise for the tensors x and y.

Example code:

Create the source file squared_difference.py in the /home/ubuntu directory, using the following as a reference:

squared_difference.py

#!/usr/bin/python
import tensorflow as tf

initial_x = [[1., 1.], [2., 2.]]
x = tf.Variable(initial_x, dtype=tf.float32)
initial_y = [[3., 3.], [4., 4.]]
y = tf.Variable(initial_y, dtype=tf.float32)
diff = tf.squared_difference(x, y)
init_op = tf.global_variables_initializer()

with tf.Session() as sess:
    sess.run(init_op)
    print(sess.run(diff))

Then run:

python /home/ubuntu/squared_difference.py

Output:

[[ 4.  4.]
 [ 4.  4.]]

Try modifying the source code and see how the output changes.
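A common use of the element-wise squared difference is as the first step of a mean squared error, obtained by averaging the result. The pure-Python sketch below shows that combination; squared_difference and mean_squared_error are hypothetical helpers for 2-D nested lists, not TensorFlow functions.

```python
def squared_difference(x, y):
    """Element-wise (x - y) ** 2 for two equally shaped nested lists."""
    return [[(a - b) ** 2 for a, b in zip(row_x, row_y)]
            for row_x, row_y in zip(x, y)]


def mean_squared_error(x, y):
    """Average of all squared differences."""
    flat = [v for row in squared_difference(x, y) for v in row]
    return sum(flat) / float(len(flat))


x = [[1.0, 1.0], [2.0, 2.0]]
y = [[3.0, 3.0], [4.0, 4.0]]

print(squared_difference(x, y))  # [[4.0, 4.0], [4.0, 4.0]]
print(mean_squared_error(x, y))  # 4.0
```

Every element of x differs from the matching element of y by 2, so each squared difference is 4 and the mean is 4 as well.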

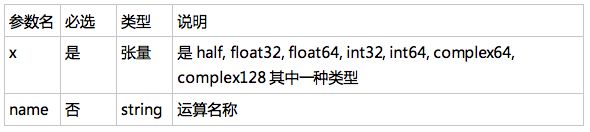

1.7 tf.square

square(
    x,
    name=None
)

Description:

Computes the square of each element of the tensor x.

Example code:

Create the source file square.py in the /home/ubuntu directory, using the following as a reference:

square.py

#!/usr/bin/python
import tensorflow as tf

initial_x = [[1., 1.], [2., 2.]]
x = tf.Variable(initial_x, dtype=tf.float32)
x2 = tf.square(x)  # element-wise square of x
init_op = tf.global_variables_initializer()

with tf.Session() as sess:
    sess.run(init_op)
    print(sess.run(x2))

Then run:

python /home/ubuntu/square.py

Output:

[[ 1.  1.]
 [ 4.  4.]]

Try modifying the source code and see how the output changes.
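The element-wise square and the squared difference from section 1.6 are closely related: squaring x - y gives the same result as the squared difference of x and y. The short pure-Python check below illustrates this; square and subtract are hypothetical helpers for nested lists, not TensorFlow functions.

```python
def square(x):
    """Element-wise square of a nested list."""
    return [[v * v for v in row] for row in x]


def subtract(x, y):
    """Element-wise x - y for two equally shaped nested lists."""
    return [[a - b for a, b in zip(row_x, row_y)]
            for row_x, row_y in zip(x, y)]


x = [[1.0, 1.0], [2.0, 2.0]]
y = [[3.0, 3.0], [4.0, 4.0]]

print(square(x))               # [[1.0, 1.0], [4.0, 4.0]]
print(square(subtract(x, y)))  # [[4.0, 4.0], [4.0, 4.0]]
```

The second line matches the output of squared_difference.py, since each difference is -2 and squares to 4.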

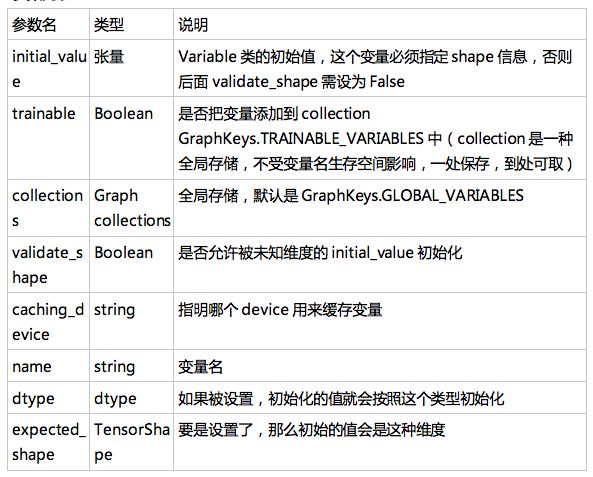

2 Understanding TensorFlow classes

2.1 tf.Variable

__init__(
    initial_value=None,
    trainable=True,
    collections=None,
    validate_shape=True,
    caching_device=None,
    name=None,
    variable_def=None,
    dtype=None,
    expected_shape=None,
    import_scope=None
)

Description:

Maintains state across executions of the graph, for example the weights of a neural network as they change during training.

Example code:

Create the source file Variable.py in the /home/ubuntu directory, using the following as a reference:

Variable.py

#!/usr/bin/python
import tensorflow as tf

initial = tf.truncated_normal(shape=[10, 10], mean=0, stddev=1)
W = tf.Variable(initial)
values = [[1., 1.], [2., 2.]]
X = tf.Variable(values, dtype=tf.float32)
init_op = tf.global_variables_initializer()

with tf.Session() as sess:
    sess.run(init_op)
    print("##################(1)################")
    print(sess.run(W))
    print("##################(2)################")
    print(sess.run(W[:2, :2]))
    # Overwrite the top-left 2x2 block of W with 22.0
    op = W[:2, :2].assign(22. * tf.ones((2, 2)))
    print("###################(3)###############")
    print(sess.run(op))
    print("###################(4)###############")
    print(W.eval(sess))  # computes and returns the value of this variable
    print("####################(5)##############")
    print(W.eval())  # same, using the default session
    print("#####################(6)#############")
    print(W.dtype)
    print(sess.run(W.initial_value))  # re-runs the random initializer, so new values appear
    print(sess.run(W.op))
    print(W.shape)
    print("###################(7)###############")
    print(sess.run(X))

Then run:

python /home/ubuntu/Variable.py

Output:

##################(1)################

[[ 0.56087822 0.32767066 1.24723649 -0.38045949 -1.58120871 0.61508512 -0.50005329 0.557872 0.24264131 1.15695083]

[ 0.8403486 0.14245604 -1.13870573 0.2471588 0.48664871 0.89047027 1.03071976 1.1539737 -0.64689875 -0.87872595]

[-0.09499338 0.40910682 1.70955396 -1.12553477 0.58261496 0.27552807 0.9310683 -0.80871385 -0.10735693 -1.08375466]

[-0.62496728 -0.26538777 0.07361894 -0.44500601 0.58208632 -1.08835173 -1.80241001 -1.10108757 -0.00228581 1.81949258]

[-1.56699359 1.59961379 1.14145374 -0.41384494 -1.24469018 -1.04554486 0.30459064 -1.59272766 -0.20161593 0.02574082]

[-0.00295581 0.4494803 -0.09411573 0.85826468 0.01789743 -0.33853438 0.21242785 -0.00592159 0.20592701 -0.61374348]

[ 1.73881531 -0.38042647 0.460399 -1.6453017 -0.58561307 -0.7130214 0.32697856 -0.84689331 -1.15418518 1.21276581]

[-1.15040958 0.88829482 0.73727763 0.63111001 0.90698457 -0.33168671 0.21835616 -0.26278856 0.63736057 -0.4095172 ]

[-1.20656824 -0.6755206 -0.21640387 -0.03773152 -0.1836649 -1.38785648 -0.48950577 0.81531078 0.1250588 -0.15474565]

[-0.46234027 -0.32404706 -0.3527672 0.70526761 0.2378609 -0.56674719 0.47251439 1.03810799 0.34087342 0.21140042]]

##################(2)################

[[ 0.56087822 0.32767066]

[ 0.8403486 0.14245604]]

###################(3)###############

[[ 2.20000000e+01 2.20000000e+01 1.24723649e+00 -3.80459487e-01 -1.58120871e+00 6.15085125e-01 -5.00053287e-01 5.57871997e-01 2.42641315e-01 1.15695083e+00]

[ 2.20000000e+01 2.20000000e+01 -1.13870573e+00 2.47158796e-01 4.86648709e-01 8.90470266e-01 1.03071976e+00 1.15397370e+00 -6.46898746e-01 -8.78725946e-01]

[ -9.49933752e-02 4.09106821e-01 1.70955396e+00 -1.12553477e+00 5.82614958e-01 2.75528073e-01 9.31068301e-01 -8.08713853e-01 -1.07356928e-01 -1.08375466e+00]

[ -6.24967277e-01 -2.65387774e-01 7.36189410e-02 -4.45006013e-01 5.82086325e-01 -1.08835173e+00 -1.80241001e+00 -1.10108757e+00 -2.28581345e-03 1.81949258e+00]

[ -1.56699359e+00 1.59961379e+00 1.14145374e+00 -4.13844943e-01 -1.24469018e+00 -1.04554486e+00 3.04590642e-01 -1.59272766e+00 -2.01615930e-01 2.57408191e-02]

[ -2.95581389e-03 4.49480295e-01 -9.41157266e-02 8.58264685e-01 1.78974271e-02 -3.38534385e-01 2.12427855e-01 -5.92159014e-03 2.05927014e-01 -6.13743484e-01]

[ 1.73881531e+00 -3.80426466e-01 4.60399002e-01 -1.64530170e+00 -5.85613072e-01 -7.13021398e-01 3.26978564e-01 -8.46893311e-01 -1.15418518e+00 1.21276581e+00]

[ -1.15040958e+00 8.88294816e-01 7.37277627e-01 6.31110013e-01 9.06984568e-01 -3.31686705e-01 2.18356162e-01 -2.62788564e-01 6.37360573e-01 -4.09517199e-01]

[ -1.20656824e+00 -6.75520599e-01 -2.16403872e-01 -3.77315208e-02 -1.83664903e-01 -1.38785648e+00 -4.89505768e-01 8.15310776e-01 1.25058800e-01 -1.54745653e-01]

[ -4.62340266e-01 -3.24047059e-01 -3.52767199e-01 7.05267608e-01 2.37860903e-01 -5.66747189e-01 4.72514391e-01 1.03810799e+00 3.40873420e-01 2.11400419e-01]]

###################(4)###############

[[ 2.20000000e+01 2.20000000e+01 1.24723649e+00 -3.80459487e-01 -1.58120871e+00 6.15085125e-01 -5.00053287e-01 5.57871997e-01 2.42641315e-01 1.15695083e+00]

[ 2.20000000e+01 2.20000000e+01 -1.13870573e+00 2.47158796e-01 4.86648709e-01 8.90470266e-01 1.03071976e+00 1.15397370e+00 -6.46898746e-01 -8.78725946e-01]

[ -9.49933752e-02 4.09106821e-01 1.70955396e+00 -1.12553477e+00 5.82614958e-01 2.75528073e-01 9.31068301e-01 -8.08713853e-01 -1.07356928e-01 -1.08375466e+00]

[ -6.24967277e-01 -2.65387774e-01 7.36189410e-02 -4.45006013e-01 5.82086325e-01 -1.08835173e+00 -1.80241001e+00 -1.10108757e+00 -2.28581345e-03 1.81949258e+00]

[ -1.56699359e+00 1.59961379e+00 1.14145374e+00 -4.13844943e-01 -1.24469018e+00 -1.04554486e+00 3.04590642e-01 -1.59272766e+00 -2.01615930e-01 2.57408191e-02]

[ -2.95581389e-03 4.49480295e-01 -9.41157266e-02 8.58264685e-01 1.78974271e-02 -3.38534385e-01 2.12427855e-01 -5.92159014e-03 2.05927014e-01 -6.13743484e-01]

[ 1.73881531e+00 -3.80426466e-01 4.60399002e-01 -1.64530170e+00 -5.85613072e-01 -7.13021398e-01 3.26978564e-01 -8.46893311e-01 -1.15418518e+00 1.21276581e+00]

[ -1.15040958e+00 8.88294816e-01 7.37277627e-01 6.31110013e-01 9.06984568e-01 -3.31686705e-01 2.18356162e-01 -2.62788564e-01 6.37360573e-01 -4.09517199e-01]

[ -1.20656824e+00 -6.75520599e-01 -2.16403872e-01 -3.77315208e-02 -1.83664903e-01 -1.38785648e+00 -4.89505768e-01 8.15310776e-01 1.25058800e-01 -1.54745653e-01]

[ -4.62340266e-01 -3.24047059e-01 -3.52767199e-01 7.05267608e-01 2.37860903e-01 -5.66747189e-01 4.72514391e-01 1.03810799e+00 3.40873420e-01 2.11400419e-01]]

####################(5)##############

[[ 2.20000000e+01 2.20000000e+01 1.24723649e+00 -3.80459487e-01 -1.58120871e+00 6.15085125e-01 -5.00053287e-01 5.57871997e-01 2.42641315e-01 1.15695083e+00]

[ 2.20000000e+01 2.20000000e+01 -1.13870573e+00 2.47158796e-01 4.86648709e-01 8.90470266e-01 1.03071976e+00 1.15397370e+00 -6.46898746e-01 -8.78725946e-01]

[ -9.49933752e-02 4.09106821e-01 1.70955396e+00 -1.12553477e+00 5.82614958e-01 2.75528073e-01 9.31068301e-01 -8.08713853e-01 -1.07356928e-01 -1.08375466e+00]

[ -6.24967277e-01 -2.65387774e-01 7.36189410e-02 -4.45006013e-01 5.82086325e-01 -1.08835173e+00 -1.80241001e+00 -1.10108757e+00 -2.28581345e-03 1.81949258e+00]

[ -1.56699359e+00 1.59961379e+00 1.14145374e+00 -4.13844943e-01 -1.24469018e+00 -1.04554486e+00 3.04590642e-01 -1.59272766e+00 -2.01615930e-01 2.57408191e-02]

[ -2.95581389e-03 4.49480295e-01 -9.41157266e-02 8.58264685e-01 1.78974271e-02 -3.38534385e-01 2.12427855e-01 -5.92159014e-03 2.05927014e-01 -6.13743484e-01]

[ 1.73881531e+00 -3.80426466e-01 4.60399002e-01 -1.64530170e+00 -5.85613072e-01 -7.13021398e-01 3.26978564e-01 -8.46893311e-01 -1.15418518e+00 1.21276581e+00]

[ -1.15040958e+00 8.88294816e-01 7.37277627e-01 6.31110013e-01 9.06984568e-01 -3.31686705e-01 2.18356162e-01 -2.62788564e-01 6.37360573e-01 -4.09517199e-01]

[ -1.20656824e+00 -6.75520599e-01 -2.16403872e-01 -3.77315208e-02 -1.83664903e-01 -1.38785648e+00 -4.89505768e-01 8.15310776e-01 1.25058800e-01 -1.54745653e-01]

[ -4.62340266e-01 -3.24047059e-01 -3.52767199e-01 7.05267608e-01 2.37860903e-01 -5.66747189e-01 4.72514391e-01 1.03810799e+00 3.40873420e-01 2.11400419e-01]]

#####################(6)#############

[[ 1.81914055 -0.4915559 -0.15831701 -0.88427407 -1.07733405 -0.60749137 1.66635537 -1.72299039 -1.61444342 0.27295882]

[ 0.446365 0.5297941 0.9737168 -0.50106817 -1.59801197 1.08469987 -0.10664631 0.08602872 -1.16334164 -0.31328002]

[ 1.02102256 0.84310216 -1.63820982 -0.37840167 1.2725147 -1.46472263 0.81902218 0.70780081 0.32180747 -0.22242352]

[-0.76061416 0.06686125 1.22337008 -0.76162207 0.26712251 -0.184366 -0.18723577 -1.27243066 1.1201812 0.74929941]

[ 1.55394351 0.95762426 -0.77478319 -0.62725532 0.99874109 0.11631405 0.55721915 -1.99805415 -1.81725216 -0.33845708]

[ 0.38020468 -0.22800203 1.18337238 -0.05378164 0.50396085 -1.87139273 -0.09195592 -1.9437803 0.19355652 0.75287497]

[ 0.87766737 -0.58997762 1.7898128 1.15790749 1.89991117 -0.86276245 -0.55173373 0.52809429 1.03385186 -0.17748916]

[ 0.85077554 0.69927084 -0.70190752 -0.09315278 -0.05869755 0.61413532 -0.18304662 1.41501033 0.49717629 1.04668236]

[-0.03881529 -0.64575118 -0.99053252 -0.99590522 0.13150445 1.85600221 -0.12806618 -0.80717343 -1.21601212 -0.819583 ]

[-0.17798649 0.38206637 0.92168695 1.59679687 -0.70975852 -1.37671721 1.63708949 -0.1433745 -1.37151611 0.24576309]]

None

(10, 10)

###################(7)###############

[[ 1. 1.]

[ 2. 2.]]
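Steps (2) to (5) above show that assigning to a slice changes the stored state of W, and that later reads see the change. The toy class below sketches that behaviour in pure Python. The class Variable and its methods initialize and assign_block are hypothetical and written only for illustration; unlike the real example, the initial value here is a fixed list rather than a random op, so reading it again returns the same numbers.

```python
class Variable(object):
    """Toy stand-in for a stateful variable (illustration only)."""

    def __init__(self, initial_value):
        self.initial_value = [list(row) for row in initial_value]
        self.value = None  # no value until initialize() is called

    def initialize(self):
        """Copy the initial value into the mutable state."""
        self.value = [list(row) for row in self.initial_value]

    def assign_block(self, n_rows, n_cols, block):
        """Overwrite the top-left n_rows x n_cols block and return the full value."""
        if self.value is None:
            raise RuntimeError("variable used before initialization")
        for r in range(n_rows):
            for c in range(n_cols):
                self.value[r][c] = block[r][c]
        return self.value


W = Variable([[0.5, 0.3, 1.2],
              [0.8, 0.1, -1.1],
              [-0.1, 0.4, 1.7]])
W.initialize()
updated = W.assign_block(2, 2, [[22.0, 22.0], [22.0, 22.0]])
for row in updated:
    print(row)
```

Only the top-left 2x2 block changes; the remaining entries keep their initial values, as in outputs (3) to (5).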

3 Done

At this point we have a general understanding of what these TensorFlow APIs mean and how to use them.

That's all.