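Contents (this part will be tidied up and expanded later; discussion is welcome):

1. Basic LVM creation and management

2. LVM snapshots

3. Combining LVM with RAID: LVM on top of RAID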

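Creating LVM:

Description:

LVM is short for Logical Volume Manager, a mechanism for managing disk partitions on Linux. It is a logical layer built on top of disks and partitions that makes partition management more flexible.

With LVM it is easier to manage disk partitions: several disks or partitions of different sizes and types are combined into a single volume group, and logical volumes are then created freely within it. This avoids the difficulty of managing many disks of different sizes, and growing or shrinking a logical volume is no longer constrained by the size of any one disk. LVM's snapshot feature also provides a convenient and reliable way to take physical backups of data.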

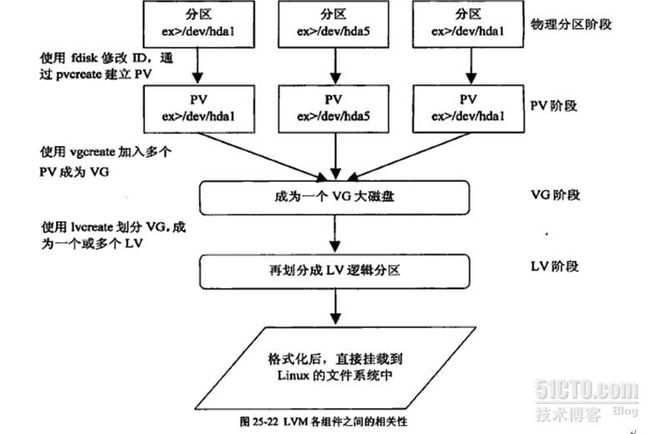

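LVM creation process (see the figure):

1. Create partitions on the physical devices.

2. Use fdisk to set each partition's type to 8e (Linux LVM), then initialize it with pvcreate to form a PV (Physical Volume).

3. Use vgcreate to add multiple PVs to a VG (Volume Group). The VG then behaves like one large disk whose space is divided into PEs (Physical Extents), the smallest unit of allocation.

4. Carve LVs (Logical Volumes) out of the VG; once formatted, an LV can be mounted and used.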
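
The four steps above map onto a short command sequence. The sketch below only prints the commands (a dry run) instead of executing them. The helper name `lvm_create_plan` and its argument order are illustrative assumptions, and the device names, size and mount point are examples to adapt.

```shell
#!/bin/sh
# Dry-run sketch: print (do not execute) the commands for the PV -> VG -> LV flow.
# lvm_create_plan is a hypothetical helper; all arguments are examples only.
lvm_create_plan() {
    vg=$1; lv=$2; size=$3; mnt=$4
    shift 4                                  # remaining arguments: partitions of type 8e
    echo "pvcreate $*"                       # initialize the partitions as PVs
    echo "vgcreate $vg $*"                   # pool the PVs into one VG
    echo "lvcreate -L $size -n $lv $vg"      # carve an LV out of the VG
    echo "mkfs -t ext3 /dev/$vg/$lv"         # put a filesystem on the LV
    echo "mount /dev/$vg/$lv $mnt"           # mount it for use
}

lvm_create_plan myvg data 256M /data /dev/sdb1 /dev/sdc1 /dev/sdd1
```

Printing the plan first makes it easy to review the device names before running anything destructive.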

Commands available at each stage (see the man pages for detailed options):

| Stage | Display info | Create | Remove | Extend | Reduce |
|---|---|---|---|---|---|
| PV | pvdisplay | pvcreate | pvremove | ----- | ----- |
| VG | vgdisplay | vgcreate | vgremove | vgextend | vgreduce |
| LV | lvdisplay | lvcreate | lvremove | lvextend | lvreduce |

Creation example:

1. Create the PVs

- Device Boot Start End Blocks Id System

- /dev/sdb1 1 609 4891761 8e Linux LVM

- /dev/sdc1 1 609 4891761 8e Linux LVM

- /dev/sdd1 1 609 4891761 8e Linux LVM

- [root@bogon ~]# pvcreate /dev/sd[bcd]1

- Physical volume "/dev/sdb1" successfully created

- Physical volume "/dev/sdc1" successfully created

- Physical volume "/dev/sdd1" successfully created

View the PV information:

- [root@bogon ~]# pvdisplay

- --- Physical volume ---

- PV Name /dev/sda2

- VG Name vol0

- PV Size 40.00 GB / not usable 2.61 MB

- Allocatable yes

- PE Size (KByte) 32768

- Total PE 1280

- Free PE 281

- Allocated PE 999

- PV UUID GxfWc2-hzKw-tP1E-8cSU-kkqY-z15Z-11Gacd

- "/dev/sdb1" is a new physical volume of "4.67 GB"

- --- NEW Physical volume ---

- PV Name /dev/sdb1

- VG Name

- PV Size 4.67 GB

- Allocatable NO

- PE Size (KByte) 0

- Total PE 0

- Free PE 0

- Allocated PE 0

- PV UUID 1rrc9i-05Om-Wzd6-dM9G-bo08-2oJj-WjRjLg

- "/dev/sdc1" is a new physical volume of "4.67 GB"

- --- NEW Physical volume ---

- PV Name /dev/sdc1

- VG Name

- PV Size 4.67 GB

- Allocatable NO

- PE Size (KByte) 0

- Total PE 0

- Free PE 0

- Allocated PE 0

- PV UUID RCGft6-l7tj-vuBX-bnds-xbLn-PE32-mCSeE8

- "/dev/sdd1" is a new physical volume of "4.67 GB"

- --- NEW Physical volume ---

- PV Name /dev/sdd1

- VG Name

- PV Size 4.67 GB

- Allocatable NO

- PE Size (KByte) 0

- Total PE 0

- Free PE 0

- Allocated PE 0

- PV UUID SLiAAp-43zX-6BC8-wVzP-6vQu-uyYF-ugdWbD

2. Create the VG

- [root@bogon ~]# vgcreate myvg -s 16M /dev/sd[bcd]1

- Volume group "myvg" successfully created

- ## -s sets the PE size at creation time; the default is 4 MB.

- Check the status of the VGs on the system:

- [root@bogon ~]# vgdisplay

- --- Volume group ---

- VG Name myvg

- System ID

- Format lvm2

- Metadata Areas 3

- Metadata Sequence No 1

- VG Access read/write

- VG Status resizable

- MAX LV 0

- Cur LV 0

- Open LV 0

- Max PV 0

- Cur PV 3

- Act PV 3

- VG Size 13.97 GB ## total size of the VG

- PE Size 16.00 MB ## PE size (set with -s above; the default is 4 MB)

- Total PE 894 ## total number of PEs

- Alloc PE / Size 0 / 0 ## PEs already allocated

- Free PE / Size 894 / 13.97 GB ## free PEs and free space

- VG UUID RJC6Z1-N2Jx-2Zjz-26m6-LLoB-PcWQ-FXx3lV
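
The `Total PE` figure can be cross-checked with integer arithmetic: each PV contributes as many whole extents as fit into it. This is a rough sketch that ignores the small metadata area at the start of each PV, an assumption that happens to hold for the numbers in this example; `pv_extents` is a hypothetical helper.

```shell
#!/bin/sh
# pv_extents SIZE_KIB PE_KIB -> number of whole extents that fit in one PV.
# Simplification: the LVM metadata area is ignored.
pv_extents() {
    echo $(( $1 / $2 ))
}

per_pv=$(pv_extents 4891761 16384)   # partition size in KiB from fdisk, 16 MiB PE
total=$(( per_pv * 3 ))              # three identical PVs in myvg
echo "extents per PV: $per_pv, total: $total, VG size: $(( total * 16 )) MiB"
# prints: extents per PV: 298, total: 894, VG size: 14304 MiB
```

14304 MiB is about 13.97 GB, which agrees with the `VG Size` line reported by vgdisplay.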

3. Create LVs in the VG:

- [root@bogon ~]# lvcreate -L 256M -n data myvg

- Logical volume "data" created

- ## -L sets the LV size

- ## -n sets the LV name

- [root@bogon ~]# lvcreate -l 20 -n test myvg

- Logical volume "test" created

- ## -l sets the LV size as a number of PEs; here that is 20 × 16 MB = 320 MB

- [root@bogon ~]# lvdisplay

- --- Logical volume ---

- LV Name /dev/myvg/data

- VG Name myvg

- LV UUID d0SYy1-DQ9T-Sj0R-uPeD-xn0z-raDU-g9lLeK

- LV Write Access read/write

- LV Status available

- # open 0

- LV Size 256.00 MB

- Current LE 16

- Segments 1

- Allocation inherit

- Read ahead sectors auto

- - currently set to 256

- Block device 253:2

- --- Logical volume ---

- LV Name /dev/myvg/test

- VG Name myvg

- LV UUID os4UiH-5QAG-HqOJ-DoNT-mVeT-oYyy-s1xArV

- LV Write Access read/write

- LV Status available

- # open 0

- LV Size 320.00 MB

- Current LE 20

- Segments 1

- Allocation inherit

- Read ahead sectors auto

- - currently set to 256

- Block device 253:3
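
The relationship between `LV Size` and `Current LE` in the lvdisplay output is plain multiplication by the PE size. A minimal sketch, with hypothetical helper names and all sizes in MiB:

```shell
#!/bin/sh
# Convert between LV size and logical extents for a given PE size (all in MiB).
extents_to_mib() { echo $(( $1 * $2 )); }   # extents, pe_mib -> size in MiB
mib_to_extents() { echo $(( $1 / $2 )); }   # size, pe_mib -> extents (exact multiples only)

echo "lvcreate -l 20   -> $(extents_to_mib 20 16) MiB"
echo "lvcreate -L 256M -> $(mib_to_extents 256 16) LE"
```

With the 16 MB PE chosen for myvg, this gives 320 MiB for the 20-extent LV and 16 extents for the 256 MB LV, as lvdisplay reports.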

4. The LV can now be formatted and mounted:

- [root@bogon ~]# mkfs -t ext3 -b 2048 -L DATA /dev/myvg/data

- [root@bogon ~]# mount /dev/myvg/data /data/

- ## Copy in some files, to check the online extension and the shrink later on:

- [root@bogon data]# cp /etc/* /data/

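5. Extend an LV (2.6 kernel + ext3 filesystem):

lvextend grows the logical volume.

resize2fs grows the filesystem, either online or offline.

(My system runs kernel 2.6.18 with ext3. According to the man page, at the time of writing only a 2.6 kernel with an ext3 filesystem supports growing online.)

First extend the logical volume: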

- [root@bogon data]# lvextend -L 500M /dev/myvg/data

- Rounding up size to full physical extent 512.00 MB

- Extending logical volume data to 512.00 MB

- Logical volume data successfully resized

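- ## -L 500M: extend to 500 MB; LVM rounds the size up to a whole number of PEs (here 32 × 16 MB = 512 MB)

- ## -L +500M: extend by 500 MB on top of the current size

- ## -l [+]50: same as above, but in units of PEs

Then grow the filesystem to match: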

- [root@bogon data]# resize2fs -p /dev/myvg/data

- resize2fs 1.39 (29-May-2006)

- Filesystem at /dev/myvg/data is mounted on /data; on-line resizing required

- Performing an on-line resize of /dev/myvg/data to 262144 (2k) blocks.

- The filesystem on /dev/myvg/data is now 262144 blocks long.

- ## As the output shows, resize2fs detected the mounted filesystem and resized it online.

- Check the status:

- [root@bogon ~]# lvdisplay

- --- Logical volume ---

- LV Name /dev/myvg/data

- VG Name myvg

- LV UUID d0SYy1-DQ9T-Sj0R-uPeD-xn0z-raDU-g9lLeK

- LV Write Access read/write

- LV Status available

- # open 1

- LV Size 512.00 MB

- Current LE 32

- Segments 1

- Allocation inherit

- Read ahead sectors auto

- - currently set to 256

- Block device 253:2

- ## The files under the mount point should still be intact at this point.
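
The "Rounding up size to full physical extent 512.00 MB" message seen during lvextend can be reproduced with a little arithmetic: a requested size is rounded up to the next multiple of the PE size. A minimal sketch; `round_up_to_extent` is a hypothetical helper and sizes are in MiB.

```shell
#!/bin/sh
# round_up_to_extent SIZE_MIB PE_MIB -> SIZE rounded up to a whole number of extents.
round_up_to_extent() {
    echo $(( ( ($1 + $2 - 1) / $2 ) * $2 ))
}

round_up_to_extent 500 16    # the 16 MiB PE used by myvg
```

A size that is already a multiple of the PE size, such as 256 MB, is left unchanged.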

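6. Shrink an LV:

Shrinking is a dangerous operation. It must be done offline, and the filesystem must first be force-checked for errors so that the shrink does not damage data.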

- [root@bogon ~]# umount /dev/myvg/data

- [root@bogon ~]# e2fsck -f /dev/myvg/data

- e2fsck 1.39 (29-May-2006)

- Pass 1: Checking inodes, blocks, and sizes

- Pass 2: Checking directory structure

- Pass 3: Checking directory connectivity

- Pass 4: Checking reference counts

- Pass 5: Checking group summary information

- DATA: 13/286720 files (7.7% non-contiguous), 23141/573440 blocks

First shrink the filesystem:

- [root@bogon ~]# resize2fs /dev/myvg/data 256M

- resize2fs 1.39 (29-May-2006)

- Resizing the filesystem on /dev/myvg/data to 131072 (2k) blocks.

- The filesystem on /dev/myvg/data is now 131072 blocks long.

Then shrink the logical volume:

- [root@bogon ~]# lvreduce -L 256M /dev/myvg/data

- WARNING: Reducing active logical volume to 256.00 MB

- THIS MAY DESTROY YOUR DATA (filesystem etc.)

- Do you really want to reduce data? [y/n]: y

- Reducing logical volume data to 256.00 MB

- Logical volume data successfully resized

Check the status and remount:

- [root@bogon ~]# lvdisplay

- --- Logical volume ---

- LV Name /dev/myvg/data

- VG Name myvg

- LV UUID d0SYy1-DQ9T-Sj0R-uPeD-xn0z-raDU-g9lLeK

- LV Write Access read/write

- LV Status available

- # open 0

- LV Size 256.00 MB

- Current LE 16

- Segments 1

- Allocation inherit

- Read ahead sectors auto

- - currently set to 256

- Block device 253:2

- Remount:

- [root@bogon ~]# mount /dev/myvg/data /data/

- [root@bogon data]# df /data/

- Filesystem 1K-blocks Used Available Use% Mounted on

- /dev/mapper/myvg-data

- 253900 9508 236528 4% /data
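
The order of the shrink steps matters: the filesystem has to be shrunk before the LV, otherwise the LV would be cut off underneath live filesystem data. The dry-run sketch below prints the sequence used in this section; `shrink_plan` is a hypothetical helper and nothing is executed.

```shell
#!/bin/sh
# Dry-run sketch: print the shrink steps in the order used above.
# shrink_plan is a hypothetical helper; it only echoes commands.
shrink_plan() {
    dev=$1; size=$2; mnt=$3
    echo "umount $dev"               # shrinking is done offline
    echo "e2fsck -f $dev"            # forced check before resizing
    echo "resize2fs $dev $size"      # 1) shrink the filesystem first
    echo "lvreduce -L $size $dev"    # 2) then shrink the LV to match
    echo "mount $dev $mnt"           # remount when finished
}

shrink_plan /dev/myvg/data 256M /data
```

Growing works the other way round (lvextend first, then resize2fs), as shown in step 5.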

7. Extend the VG by adding a PV:

- [root@bogon data]# pvcreate /dev/sdc2

- Physical volume "/dev/sdc2" successfully created

- [root@bogon data]# vgextend myvg /dev/sdc2

- Volume group "myvg" successfully extended

- [root@bogon data]# pvdisplay

- --- Physical volume ---

- PV Name /dev/sdc2

- VG Name myvg

- PV Size 4.67 GB / not usable 9.14 MB

- Allocatable yes

- PE Size (KByte) 16384

- Total PE 298

- Free PE 298

- Allocated PE 0

- PV UUID hrveTu-2JUH-aSgT-GKAJ-VVv2-Hit0-PyoOOr

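8. Shrink the VG by taking a PV out of it:

Before a PV can be removed, its data must first be moved to other PVs; only then is the PV taken out of the VG.

Move the data off the PV: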

- [root@bogon data]# pvmove /dev/sdc2

- No data to move for myvg

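- ## If sdc2 held data, the move would take some time, and a warning would be shown that has to be confirmed before the move runs.

- ## As shown above, it reports that this partition holds no data.

Remove the PV: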

- [root@bogon data]# vgreduce myvg /dev/sdc2

- Removed "/dev/sdc2" from volume group "myvg"

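- ## If a disk in the LVM setup seems to be misbehaving and one drive is suspected, this method can be used to move the data off the suspect disk and then remove it.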
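
The removal steps can be summarized as a dry run. pvmove can only succeed if the remaining PVs have enough free extents to take over the data, so the sketch checks that first. `pv_retire_plan` and its two extent-count arguments are hypothetical; in practice the counts would be read from pvdisplay and vgdisplay.

```shell
#!/bin/sh
# Dry-run sketch: print the steps for retiring one PV from a VG.
# Refuses when the other PVs lack the free extents needed to absorb its data.
# pv_retire_plan is a hypothetical helper; it only echoes commands.
pv_retire_plan() {
    vg=$1; pv=$2; allocated_on_pv=$3; free_elsewhere=$4
    if [ "$allocated_on_pv" -gt "$free_elsewhere" ]; then
        echo "not enough free extents on the remaining PVs" >&2
        return 1
    fi
    echo "pvmove $pv"          # migrate allocated extents off the PV
    echo "vgreduce $vg $pv"    # drop the now-empty PV from the VG
    echo "pvremove $pv"        # optionally wipe the PV label afterwards
}

pv_retire_plan myvg /dev/sdc2 0 858   # example figures: nothing allocated on sdc2
```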

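LVM snapshots:

Description:

On a very busy server, backing up a large amount of data would normally mean stopping many services; otherwise the backup is very likely to be inconsistent and therefore useless. This is where snapshots help.

How it works:

A logical volume snapshot is essentially just another path to the original data. It preserves the state of the data at the moment the snapshot was taken: from then on, before any block of the original is modified, its old contents are first copied to the snapshot area, so the data seen through the snapshot is always the data as it was when the snapshot was created. The snapshot has a fixed size, however, so before creating it you need to estimate how much data will change while it exists. If the changes exceed the size of the snapshot, the snapshot overflows and becomes invalid.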

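Snapshots are meant to be temporary. On LVM2 a snapshot is recorded in the VG metadata and normally survives deactivation or a reboot, but it keeps consuming space and adds overhead to writes on the origin, so remove it once the backup is done.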
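
To get a feel for the size estimate described above, the fill level of a snapshot can be approximated as the amount of origin data changed since the snapshot, divided by the snapshot size. This is a simplified model that assumes every changed block is copied exactly once and ignores the snapshot's own bookkeeping overhead; `snapshot_fill_percent` is a hypothetical helper.

```shell
#!/bin/sh
# snapshot_fill_percent CHANGED_MIB SNAP_MIB -> integer percentage of the
# copy-on-write area in use, under the simplified model described above.
snapshot_fill_percent() {
    echo $(( $1 * 100 / $2 ))
}

# A 512 MiB snapshot after 128 MiB of the origin has been overwritten:
snapshot_fill_percent 128 512
# Once the value reaches 100 the snapshot has overflowed and is no longer usable.
```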

Create a snapshot:

- [root@bogon ~]# lvcreate -L 500M -p r -s -n datasnap /dev/myvg/data

- Rounding up size to full physical extent 512.00 MB

- Logical volume "datasnap" created

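- ## -L / -l: set the size

- ## -p: permission; sets the read/write permission of the snapshot. The default is rw; r means read-only

- ## -s: tells lvcreate to create a snapshot

- ## -n: sets the name of the snapshot

- Mount the snapshot at a chosen location: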

- [root@bogon ~]# mount /dev/myvg/datasnap /backup/

- mount: block device /dev/myvg/datasnap is write-protected, mounting read-only

- Then back up the files from the snapshot, and remove the snapshot promptly afterwards:

- [root@bogon ~]# ls /backup/

- inittab lost+found
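
Put together, a snapshot-based backup follows a create, mount, copy, unmount, remove cycle. The dry-run sketch below prints one possible sequence. `snapshot_backup_plan`, the archive path and the use of tar for the copy step are assumptions for illustration; the original only says to back up the files and then remove the snapshot.

```shell
#!/bin/sh
# Dry-run sketch of a snapshot-based backup; prints commands only.
# snapshot_backup_plan, the archive path and the tar step are assumptions.
snapshot_backup_plan() {
    origin=$1; snap=$2; size=$3; mnt=$4; archive=$5
    vg_dir=${origin%/*}                     # /dev/myvg/data -> /dev/myvg
    echo "lvcreate -L $size -p r -s -n $snap $origin"   # read-only snapshot
    echo "mount -o ro $vg_dir/$snap $mnt"               # mount the frozen view
    echo "tar -czf $archive -C $mnt ."                  # copy the data somewhere safe
    echo "umount $mnt"
    echo "lvremove -f $vg_dir/$snap"                    # drop the snapshot once done
}

snapshot_backup_plan /dev/myvg/data datasnap 500M /backup /tmp/data-backup.tar.gz
```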

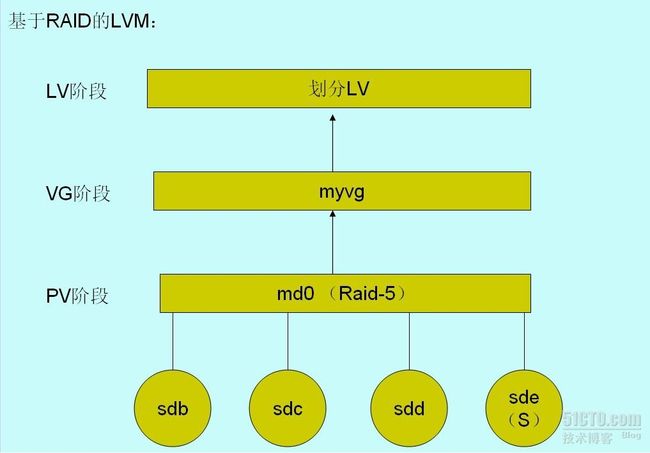

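Building LVM on top of RAID:

Description:

With LVM on top of RAID, the RAID layer underneath provides data redundancy or better read/write performance, while the LVM layer above it provides flexible disk management.

As shown in the figure:

Procedure:

1. Create partitions of type Linux RAID: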

- Device Boot Start End Blocks Id System

- /dev/sdb1 1 62 497983+ fd Linux raid autodetect

- /dev/sdc1 1 62 497983+ fd Linux raid autodetect

- /dev/sdd1 1 62 497983+ fd Linux raid autodetect

- /dev/sde1 1 62 497983+ fd Linux raid autodetect

2. Create the RAID-5 array:

- [root@nod1 ~]# mdadm -C /dev/md0 -a yes -l 5 -n 3 -x 1 /dev/sd{b,c,d,e}1

- mdadm: array /dev/md0 started.

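- # The RAID-5 array has one spare disk and three active disks.

- # Check the status: it matches what was requested, and the initial build is still in progress: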

- [root@nod1 ~]# cat /proc/mdstat

- Personalities : [raid6] [raid5] [raid4]

- md0 : active raid5 sdd1[4] sde1[3](S) sdc1[1] sdb1[0]

- 995712 blocks level 5, 64k chunk, algorithm 2 [3/2] [UU_]

- [=======>.............] recovery = 36.9% (184580/497856) finish=0.2min speed=18458K/sec

- unused devices: <none>

- # View the detailed RAID information:

- [root@nod1 ~]# mdadm -D /dev/md0

- /dev/md0:

- Version : 0.90

- Creation Time : Wed Apr 6 04:27:46 2011

- Raid Level : raid5

- Array Size : 995712 (972.54 MiB 1019.61 MB)

- Used Dev Size : 497856 (486.27 MiB 509.80 MB)

- Raid Devices : 3

- Total Devices : 4

- Preferred Minor : 0

- Persistence : Superblock is persistent

- Update Time : Wed Apr 6 04:36:08 2011

- State : clean

- Active Devices : 3

- Working Devices : 4

- Failed Devices : 0

- Spare Devices : 1

- Layout : left-symmetric

- Chunk Size : 64K

- UUID : 5663fc8e:68f539ee:3a4040d6:ccdac92a

- Events : 0.4

- Number Major Minor RaidDevice State

- 0 8 17 0 active sync /dev/sdb1

- 1 8 33 1 active sync /dev/sdc1

- 2 8 49 2 active sync /dev/sdd1

- 3 8 65 - spare /dev/sde1
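
The `Array Size` reported by mdadm follows from the RAID-5 layout: one device's worth of space holds parity, and the spare contributes no capacity. A minimal sketch; `raid5_capacity` is a hypothetical helper and the size unit is whatever is passed in (KiB here).

```shell
#!/bin/sh
# raid5_capacity ACTIVE_DEVICES DEV_SIZE -> usable size, in the unit of DEV_SIZE.
# One device's worth of space is used for parity; spares add no capacity.
raid5_capacity() {
    echo $(( ($1 - 1) * $2 ))
}

raid5_capacity 3 497856    # three active devices with a Used Dev Size of 497856 KiB
```

The result, 995712, matches the `Array Size` line in the `mdadm -D` output.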

3. Create the RAID configuration file:

- [root@nod1 ~]# mdadm -Ds > /etc/mdadm.conf

- [root@nod1 ~]# cat /etc/mdadm.conf

- ARRAY /dev/md0 level=raid5 num-devices=3 metadata=0.90 spares=1 UUID=5663fc8e:68f539ee:3a4040d6:ccdac92a

4. Create LVM on the newly built RAID device:

- # Make md0 a PV (physical volume):

- [root@nod1 ~]# pvcreate /dev/md0

- Physical volume "/dev/md0" successfully created

- # View the physical volume:

- [root@nod1 ~]# pvdisplay

- "/dev/md0" is a new physical volume of "972.38 MB"

- --- NEW Physical volume ---

- PV Name /dev/md0

- VG Name # empty, because this PV does not belong to any VG yet

- PV Size 972.38 MB

- Allocatable NO

- PE Size (KByte) 0

- Total PE 0

- Free PE 0

- Allocated PE 0

- PV UUID PUb3uj-ObES-TXsM-2oMS-exps-LPXP-jD218u

- # Create the VG:

- [root@nod1 ~]# vgcreate myvg -s 32M /dev/md0

- Volume group "myvg" successfully created

- # Check the VG status:

- [root@nod1 ~]# vgdisplay

- --- Volume group ---

- VG Name myvg

- System ID

- Format lvm2

- Metadata Areas 1

- Metadata Sequence No 1

- VG Access read/write

- VG Status resizable

- MAX LV 0

- Cur LV 0

- Open LV 0

- Max PV 0

- Cur PV 1

- Act PV 1

- VG Size 960.00 MB

- PE Size 32.00 MB

- Total PE 30

- Alloc PE / Size 0 / 0

- Free PE / Size 30 / 960.00 MB

- VG UUID 9NKEWK-7jrv-zC2x-59xg-10Ai-qA1L-cfHXDj

- # Create the LV:

- [root@nod1 ~]# lvcreate -L 500M -n mydata myvg

- Rounding up size to full physical extent 512.00 MB

- Logical volume "mydata" created

- # Check the LV status:

- [root@nod1 ~]# lvdisplay

- --- Logical volume ---

- LV Name /dev/myvg/mydata

- VG Name myvg

- LV UUID KQQUJq-FU2C-E7lI-QJUp-xeVd-3OpA-TMgI1D

- LV Write Access read/write

- LV Status available

- # open 0

- LV Size 512.00 MB

- Current LE 16

- Segments 1

- Allocation inherit

- Read ahead sectors auto

- - currently set to 512

- Block device 253:2

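Open questions (this is a lab setup, so it is hard for me to judge; advice from experienced readers is welcome):

Once LVM is running on top of the RAID, the array can no longer be stopped by hand. Presumably this is because the active VG keeps /dev/md0 open; deactivating the VG first (vgchange -an myvg) should release it.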

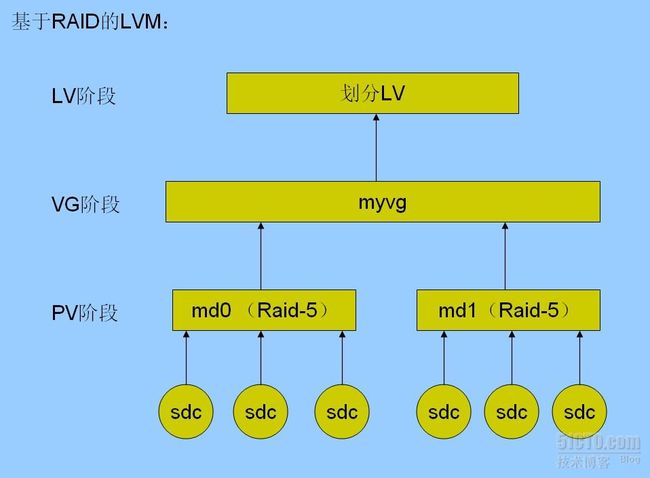

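As far as I could tell, the number of active disks in a RAID device cannot be changed once the array is built; you can only add spare disks and remove failed ones. (Newer kernels and mdadm versions can reshape an array with mdadm --grow --raid-devices, which this test did not cover.) So if the storage requirement was underestimated, the LVs on top of the RAID may be extensible in principle and still be unable to meet it.

If a whole RAID array cannot meet the capacity requirement, in testing it is possible to add two RAID arrays to the same VG and so join them seamlessly. But how well does this work in production? Will the more complex stack put too much load on the system or slow down I/O response? That remains an open question.

With LVM on top of RAID and several LVs carved out of it, how is the data of the different LVs distributed? Could it erode the performance gain that RAID provides in the first place?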
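
Joining a second array to the existing VG, as mentioned above, uses the same pvcreate and vgextend steps shown earlier for a plain partition. The dry-run sketch below prints them; `/dev/md1` is a hypothetical second array and `vg_add_array_plan` is a hypothetical helper.

```shell
#!/bin/sh
# Dry-run sketch: print the commands that add a second array to an existing VG.
# /dev/md1 is a hypothetical second RAID device; nothing is executed.
vg_add_array_plan() {
    vg=$1; md=$2
    echo "pvcreate $md"         # make the array a PV
    echo "vgextend $vg $md"     # add it to the VG; LVs can then grow onto it
}

vg_add_array_plan myvg /dev/md1
```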

Diagram of seamlessly joining RAID arrays: