agenda

- memory leak troubleshooting

- Python GC mechanism

- afterword

All analysis here is based on CPython.

memory leak troubleshooting

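Memory leaks are usually hard to track down; tooling and on/off experiments help. The core problem is locating the leaking part: it may be your own code or a library you call, a reference overlooked in the Python program, or a native .so, whether third-party or your own. I was once responsible for investigating a 7x24 online service whose memory grew irregularly with request volume after startup, from a little over 1 GB at launch to as much as 14 GB within a day.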

The approach was:

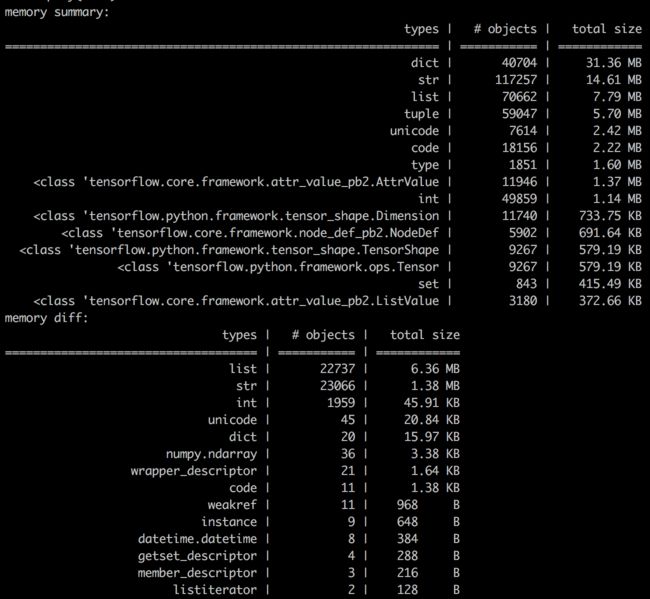

    watch_result = watch py heap memory diff via pympler

    if watch_result is continuously increasing:
        focus on py module
    else:
        focus on native module

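I observed the service for a while (scanning the whole Python heap with pympler is very expensive, so be careful when sampling in production). The Python heap went up and down. How much it fluctuates depends on the complexity of the business scenario: the service I was responsible for holds a number of images (numpy.array) per unit of time, which makes short windows unstable, and within such a window memory really does grow quickly. So memory changes need to be observed over a full cycle of the business scenario.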
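The decision in the pseudocode above can be sketched with the standard library alone. This is a minimal sketch, not the setup used in the investigation: it swaps pympler for `tracemalloc`, and `sample_heap`, `is_continuously_increasing`, the two workloads, and the `min_step` threshold are hypothetical names and values chosen for illustration.

```python
import tracemalloc


def sample_heap(workload, cycles=5):
    """Run `workload` once per cycle and record the traced Python heap size after each run."""
    tracemalloc.start()
    try:
        sizes = []
        for _ in range(cycles):
            workload()
            current, _peak = tracemalloc.get_traced_memory()
            sizes.append(current)
        return sizes
    finally:
        tracemalloc.stop()


def is_continuously_increasing(sizes, min_step=10_000):
    """True if every cycle grew the heap by at least `min_step` bytes (assumed noise threshold)."""
    return all(b - a >= min_step for a, b in zip(sizes, sizes[1:]))


_leak = []  # stands in for a forgotten reference in application code


def leaky_workload():
    _leak.append(bytearray(100_000))  # kept alive forever


def clean_workload():
    tmp = [bytearray(100_000) for _ in range(10)]
    del tmp  # everything allocated in this cycle is released again


if __name__ == "__main__":
    for name, workload in [("leaky", leaky_workload), ("clean", clean_workload)]:
        grows = is_continuously_increasing(sample_heap(workload))
        print(name, "-> focus on py module" if grows else "-> focus on native module")
```

One cycle here is a single call of the workload; in a real service a cycle would have to cover a full business period, for the reasons given above.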

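At that point I had narrowed it down to the native libraries: tensorflow, cv2, and numpy. Because the leak got more pronounced at peak hours, tensorflow was the prime suspect. I wrote a simple experiment that ran FG concurrently against each model, saw no change in memory, and ruled tensorflow out. After a long detour, a solid repro finally showed that it was a tensorflow memory leak after all; the initial experiment had not followed the business scenario strictly. That is hindsight, of course. Since this was my first time troubleshooting a Python memory leak, I did not have much confidence in the pympler results, and I felt that a framework as widely used as tensorflow should not have this kind of problem. That was a mistake of subjective judgment.

So at the time my initial suspicion fell on the Python code.

Looking back, the approach in the pseudocode above is sound: the memory change over one cycle is enough to tell roughly whether the Python side is leaking. The worse case is when both the Python side and the native side leak.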

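Python GC mechanism

To investigate Python memory problems you have to understand how Python manages memory.

reference counting

Garbage collection in Python is based on reference counting. Its advantage is that it is simple and efficient; its drawback is that it cannot deal with mutual references on its own (although a cycle detector sits on top of it). Quoting the official documentation: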

The principle is simple: every object contains a counter, which is incremented when a reference to the object is stored somewhere, and which is decremented when a reference to it is deleted. When the counter reaches zero, the last reference to the object has been deleted and the object is freed.

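At the interpreter level, Py_INCREF(x) increments the count and Py_DECREF(x) decrements it. In CPython the object is deallocated as soon as its count reaches 0; only objects caught in reference cycles have to wait for the cyclic GC.

Typical situations that trigger Py_INCREF:

- assignment

- passing an argument

- putting a variable into a list, dict, or tuple

Typical situations that trigger Py_DECREF(x):

- a variable going out of scope

- an explicit del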

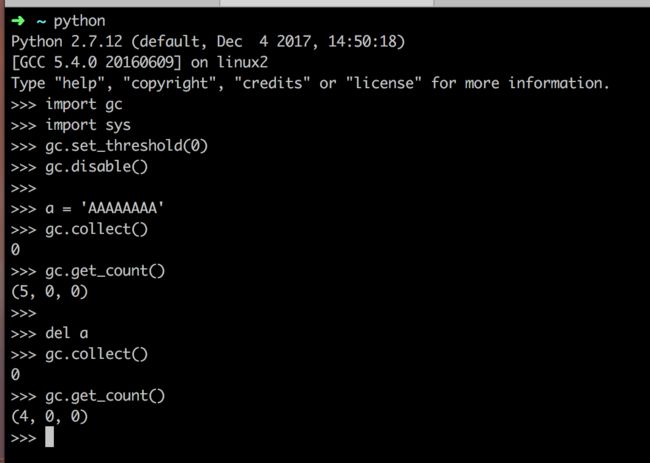

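The reference count of an object can be read with sys.getrefcount(x), and the objects that refer to it can be listed with gc.get_referrers(x).

When, in some particular scenario, the ref count of an object that is no longer needed never drops to 0 (a matching Py_DECREF is never called), the result is a memory leak.

Note that the call to sys.getrefcount itself takes a reference to its argument, so for a variable a that holds the only reference to an object, the reported count is 2.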
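The two calls mentioned above can be tried out as follows. This is a small sketch; `refcount_demo` and its local names are hypothetical. It compares counts relative to a baseline rather than relying on an absolute number, since the exact value reported by `sys.getrefcount` can differ between CPython versions.

```python
import gc
import sys


def refcount_demo():
    a = []                       # the object under observation
    # Commonly 2 on CPython: the name `a` plus the temporary reference
    # taken by the getrefcount call itself.
    base = sys.getrefcount(a)

    b = a                        # assignment: +1
    holder = [a]                 # stored in a container: +1
    after = sys.getrefcount(a)

    # get_referrers returns the objects that hold a reference to `a`.
    held_by_list = any(r is holder for r in gc.get_referrers(a))

    del b                        # explicit del: -1
    holder.clear()               # removed from the container: -1
    restored = sys.getrefcount(a)
    return base, after, held_by_list, restored


if __name__ == "__main__":
    print(refcount_demo())
```

If `restored` never came back down to `base` in a real program, some container or name would still be holding the object, which is exactly the leak scenario described above.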

ownership rules

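Python distinguishes mutable objects from immutable objects (the idea behind this is the same as in Java), and immutable objects are handled by a collection mechanism of their own; see Memory management in Python for details. Note that Python's memory manager caches memory in a pool-like structure, and some of that memory is not released back to the OS.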

Unlike many other languages, Python does not necessarily release the memory back to the Operating System. Instead, it has a specialized object allocator for small objects (smaller or equal to 512 bytes), which keeps some chunks of already allocated memory for further use in future. The amount of memory that Python holds depending on the usage patterns, in some cases all allocated memory is never released.

Therefore, if a long-running Python process takes more memory over time, it does not necessarily mean that you have memory leaks

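Note that PyInt_FromLong and Py_BuildValue return a reference together with its ownership. You have to distinguish whether a function hands you an owned reference (ownership is transferred) or a borrowed one (PyImport_AddModule() also returns a borrowed reference, even though it may actually create the object it returns: this is possible because an owned reference to the object is stored in sys.modules).

When C is called from Python, the arguments are passed as borrowed references, and their lifetime is guaranteed until the function returns. If the C code needs to keep an object around, it must call Py_INCREF to make sure the object is not freed. The return value goes the other way: the C function must return an owned reference, ownership passes to the Python side, and Python releases it at the appropriate time.

For problems caused by borrowed references, see 1.10.3 Thin Ice.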

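cycle reference detection & hazards

The inherent weakness of ref counting is circular references (which is why Java's GC uses reachability analysis along reference chains instead), and Python has been criticized a lot for it. Because reference counting was built into the interpreter very early on, it remains in place today. Strictly speaking Python can detect cycle references, but each collection only examines the generation being collected together with the younger ones. A cycle that involves an object in an older generation stays in memory until that older generation is collected, and until then it behaves like a memory leak. Sample code: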

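    import gc

    # Turn off automatic collection so that only the explicit collect() below runs.
    gc.disable()
    gc.set_threshold(0)

    # Build a two-object reference cycle.
    a = []
    b = []
    a.append(b)
    b.append(a)

    print(gc.get_count())

    # Drop the only external references; the cycle keeps both refcounts at 1.
    del a
    del b

    # collect() returns the number of unreachable objects it found.
    print('gc collect = ' + str(gc.collect()))
    print(gc.get_count())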

Output:

    (541, 8, 0)
    gc collect = 2
    (1, 0, 0)

As the output shows, the GC detected the cycle and freed both lists.

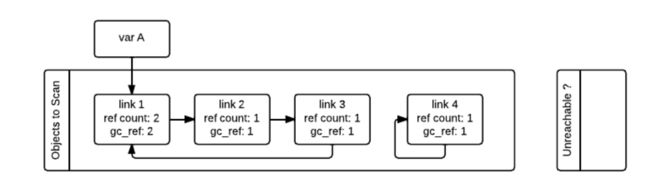

CPython's detection of circular references is implemented in gcmodule.c. The flow, as pseudocode with comments:

    /* This is the main function. Read this to understand how the collection process works. */
    static Py_ssize_t collect(int generation, Py_ssize_t *n_collected, Py_ssize_t *n_uncollectable, int nofail) {

        // update_refs() copies the true refcount to gc_refs, for each object in the generation being collected.
        // This decouples the real ref count from gc_refs.
        update_refs(young);

        // subtract_refs() then adjusts gc_refs so that it equals the number of times an object is referenced directly from outside the generation being collected.
        // Only outside references matter: gc_refs != 0 means some outside reference exists.
        subtract_refs(young);

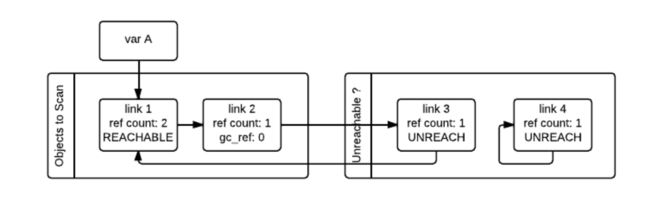

        // Objects with gc_refs == 0 are moved to a temporary unreachable list and marked GC_TENTATIVELY_UNREACHABLE.
        gc_list_init(&unreachable);

        // Walk young from the start. An object that still has an outside reference (gc_refs != 0) is reachable; if it links to an object already on the unreachable list, that object is brought back and scanned again like a newly added one.
        move_unreachable(young, &unreachable);
    }

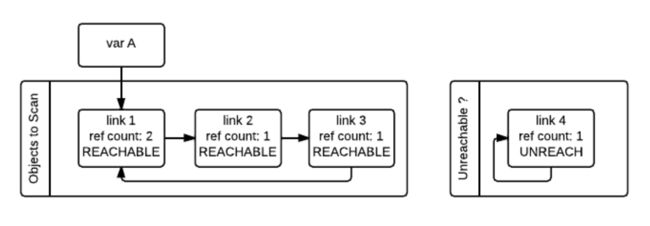

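The figures read as follows:

subtract_refs removes the internal references.

In the figure above, 'link3' and 'link4' have been processed and 'link2' is being processed. 'link2' will also be put on the unreachable list, while 'link1' is reachable.

In the end only link4 is left on the unreachable list. It really cannot be reached, so it is freed.

It follows that an isolated circular reference sitting outside the generation being collected is not reclaimed by that pass, so it still shows up as a memory leak.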
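The three steps (update_refs, subtract_refs, move_unreachable) can be modelled in a few lines of Python. This is a toy model under stated assumptions, not the code in gcmodule.c: the real implementation works on linked lists in a single pass, while this sketch uses a dict and an explicit stack. The function name `find_unreachable` and the four-object graph are hypothetical; the graph is only in the spirit of the figure, with link1 also referenced from outside.

```python
def find_unreachable(objects):
    """objects maps name -> (true_refcount, [names it references]) for one generation."""
    # update_refs: copy the true refcount into a separate gc_refs table.
    gc_refs = {name: refcount for name, (refcount, _) in objects.items()}

    # subtract_refs: cancel every reference that comes from inside the generation,
    # so gc_refs ends up counting only references from outside.
    for _, targets in objects.values():
        for target in targets:
            if target in gc_refs:
                gc_refs[target] -= 1

    # move_unreachable: objects with gc_refs > 0 are roots; everything a root
    # can reach is pulled back out of the tentative unreachable set.
    reachable = set()
    stack = [name for name, refs in gc_refs.items() if refs > 0]
    while stack:
        name = stack.pop()
        if name in reachable:
            continue
        reachable.add(name)
        stack.extend(t for t in objects[name][1] if t in objects)

    return sorted(set(objects) - reachable)


# Assumed graph: link1 -> link2 -> link3 -> link1, link1 is also held by an
# outside variable, and link4 only references itself.
graph = {
    "link1": (2, ["link2"]),
    "link2": (1, ["link3"]),
    "link3": (1, ["link1"]),
    "link4": (1, ["link4"]),
}

if __name__ == "__main__":
    print(find_unreachable(graph))
```

In this model link2 and link3 have gc_refs of 0 after the subtraction, yet they survive because link1 reaches them; only link4 stays unreachable.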

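One more point: cycle detection has a cost. A full collection takes time proportional to the number of tracked objects, so if full collections ran at a fixed allocation interval, the total GC time for building N long-lived objects would grow as O(N^2) instead of O(N), and the stop-the-world time would grow non-linearly.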

performance concern & tips

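As the analysis above shows, because of circular references the stop-the-world time of the Python heap does not scale linearly. Python therefore puts an extra guard on full GC: a full GC runs only when last_non_full_gc_survived_obj_counts > 25% * last_full_gc_survived_obj_counts (long_lived_pending and long_lived_total in gcmodule.c). The comment in the GC implementation reads: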

Using the above ratio, instead, yields amortized linear performance in the total number of objects (the effect of which can be summarized thusly: "each full garbage collection is more and more costly as the number of objects grows, but we do fewer and fewer of them").
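The effect of the 25% rule can be illustrated with a toy cost model. It is a sketch under simplifying assumptions, not a measurement of CPython: every object is assumed to be long-lived, a full collection is assumed to cost exactly one unit per long-lived object, and `total_full_gc_work`, `policy`, and `batch` are hypothetical names.

```python
def total_full_gc_work(n_objects, policy, batch=1000):
    """Count objects examined by full collections while n_objects long-lived objects are created.

    policy == "fixed": run a full collection after every batch of new objects.
    policy == "ratio": run one only when pending survivors exceed 25% of the
                       survivors seen by the previous full collection.
    """
    long_lived_total = 0     # survivors of the last full collection
    long_lived_pending = 0   # survivors accumulated since then
    examined = 0
    for _ in range(n_objects // batch):
        long_lived_pending += batch
        if policy == "fixed" or long_lived_pending > long_lived_total // 4:
            long_lived_total += long_lived_pending
            long_lived_pending = 0
            examined += long_lived_total   # a full collection walks every long-lived object
    return examined


if __name__ == "__main__":
    for n in (100_000, 200_000):
        print(n, total_full_gc_work(n, "fixed"), total_full_gc_work(n, "ratio"))
```

Under the fixed policy, doubling the number of objects roughly quadruples the work. Under the ratio policy the work stays within a small constant multiple of the number of objects, which is the amortized linear behaviour the comment describes.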

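Because full GC in Python gets slower and slower, a program that has no circular references can disable the GC and clean up garbage itself at points that suit its business scenario.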
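One way to disable the GC around a unit of work and collect by hand afterwards is a small context manager. This is a sketch, assuming one explicit collection per batch is acceptable; `gc_paused`, `Pair`, and `run_batch` are hypothetical names, and only `gc.disable`, `gc.enable`, `gc.isenabled`, and `gc.collect` come from the standard library.

```python
import gc
import weakref
from contextlib import contextmanager


@contextmanager
def gc_paused(collect_on_exit=True):
    """Disable the cyclic GC inside the block and restore the previous state afterwards."""
    was_enabled = gc.isenabled()
    gc.disable()
    try:
        yield
    finally:
        if collect_on_exit:
            gc.collect()      # one explicit collection at a point we choose
        if was_enabled:
            gc.enable()


class Pair:
    pass


def run_batch():
    with gc_paused():
        x, y = Pair(), Pair()
        x.other, y.other = y, x          # a cycle that refcounting alone cannot free
        probe = weakref.ref(x)
        del x, y
        alive_inside = probe() is not None   # still alive: the GC is off in here
    return alive_inside, probe() is None     # gone after the explicit collect on exit


if __name__ == "__main__":
    print(run_batch())
```

Reference counting keeps working while the GC is disabled, so only cyclic garbage waits for the explicit collection.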

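Tips collected from others:

- avoid finalizers

- if a finalizer cannot be avoided, upgrade to Python 3.4 or later

- use weak references where the scenario fits

- disable the GC for the business scenario and clean up manually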
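The weak reference tip can be sketched with a parent/child structure, a common source of cycles. This is a minimal illustration; `Node` and `build_and_drop` are hypothetical names. The child keeps only a `weakref.ref` to its parent, so no cycle forms and plain reference counting frees the parent even with the GC disabled.

```python
import gc
import weakref


class Node:
    def __init__(self, name, parent=None):
        self.name = name
        self.children = []
        # Weak back-pointer: it does not keep the parent alive, so no cycle forms.
        self._parent = weakref.ref(parent) if parent is not None else None
        if parent is not None:
            parent.children.append(self)

    @property
    def parent(self):
        return self._parent() if self._parent is not None else None


def build_and_drop():
    was_enabled = gc.isenabled()
    gc.disable()                      # prove that the cyclic GC is not needed here
    try:
        root = Node("root")
        child = Node("child", parent=root)
        probe = weakref.ref(root)
        del root                      # last strong reference to the parent
        return probe() is None, child.parent is None
    finally:
        if was_enabled:
            gc.enable()


if __name__ == "__main__":
    print(build_and_drop())
```

The trade-off is that `child.parent` can return None once the parent is gone, so callers have to handle that case.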

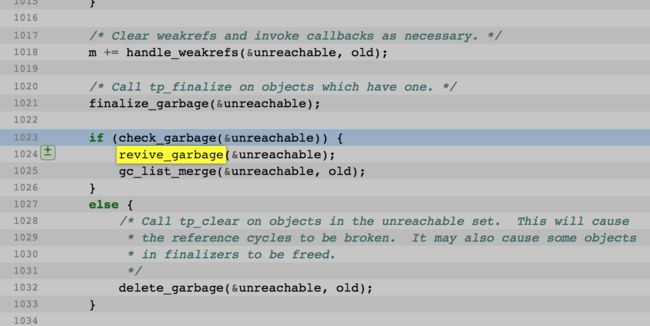

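Finalizers are a nightmare for every GC, because after a finalizer has run, an object that was 'dead' may have come back to life, which invalidates the reference relationships the collector had worked out. Before Python 3.4, if a finalizer changed the reference relationships the GC could not tell, and the result was a memory leak. Since 3.4 such objects that come back from the dead are called 'revived objects', and gc collect runs one more check for them at the end.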
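Resurrection can be observed from Python itself. The sketch below assumes Python 3.4 or later; `Phoenix`, `survivors`, and `resurrect_once` are hypothetical names. The object sits in a self-cycle so that only the cyclic GC can reclaim it, and its `__del__` stores it in a module-level list, which makes it reachable again.

```python
import gc

survivors = []   # hypothetical module-level list used to bring objects back


class Phoenix:
    def __init__(self):
        self.me = self               # self-cycle: only the cyclic GC can reclaim it

    def __del__(self):
        survivors.append(self)       # the finalizer makes the object reachable again


def resurrect_once():
    survivors.clear()
    p = Phoenix()
    del p                            # now unreachable, but the cycle keeps refcount > 0
    gc.collect()                     # runs __del__, then finds the object was revived
    revived = list(survivors)
    # The revived object is intact: its attributes were not cleared.
    return len(revived), revived[0].me is revived[0]


if __name__ == "__main__":
    print(resurrect_once())
```

The collector calls the finalizer at most once per object, so when the revived object becomes unreachable again it is reclaimed without `__del__` running a second time.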

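afterword

After studying the Python GC and troubleshooting, the problem turned out not to be on the Python side. Still, the default behaviour of the GC is a concern for Python as a server-side language. When I have time I will write up TensorFlow's memory mechanics and leak issues in GPU mode.

References: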

https://docs.python.org/2.0/ext/refcounts.html

https://docs.python.org/2.0/ext/refcountsInPython.html

https://docs.python.org/2.0/ext/ownershipRules.html

https://docs.python.org/2.0/ext/thinIce.html

https://rushter.com/blog/python-garbage-collector/

https://rushter.com/blog/python-memory-managment/

https://pythoninternal.wordpress.com/2014/08/04/the-garbage-collector/

https://hg.python.org/cpython/file/eafe4007c999/Modules/gcmodule.c#l1023

https://docs.python.org/2.7/library/gc.html

https://www.quora.com/How-does-garbage-collection-in-Python-work-What-are-the-pros-and-cons