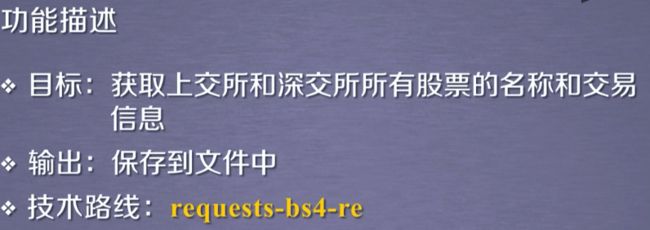

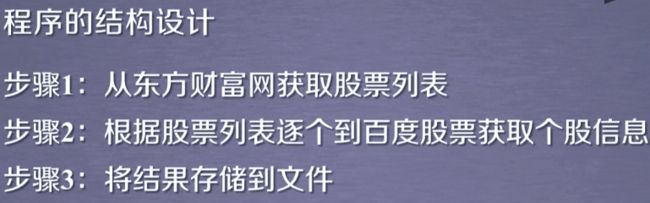

1. A Focused Crawler for Stock Data

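Stock information (one page per stock): https://gupiao.baidu.com/stock

Stock list: http://quote.eastmoney.com/stock_list.html

The crawler reads the list of stock codes from Eastmoney, then fetches each stock's page from Baidu Gupiao and appends the extracted information to a text file.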

2. Writing the Example

2.1 Fetching the HTML Page

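```python
import requests


def getHTMLText(url):
    try:
        r = requests.get(url, timeout=30)
        # Raise an HTTPError for 4xx/5xx responses
        r.raise_for_status()
        # Guess the encoding from the page content
        r.encoding = r.apparent_encoding
        print("url:", r.request.url)
        return r.text
    except requests.RequestException:
        # On any request failure, return an empty string
        return ""
```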
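`getHTMLText` sets `r.encoding` before reading `r.text`; requests then decodes the raw response bytes with that encoding, replacing any bytes it cannot decode. The minimal sketch below reproduces only that decoding step offline with plain `bytes.decode`, so it runs without network access. `decode_body` and the sample title are hypothetical; the point is simply that a wrong encoding garbles Chinese text.

```python
def decode_body(raw: bytes, encoding: str) -> str:
    """Decode raw response bytes with an explicitly chosen encoding.

    Hypothetical helper: it mimics reading r.text after r.encoding has been
    set (undecodable bytes become U+FFFD instead of raising an error).
    """
    return raw.decode(encoding, errors="replace")


# Bytes as a GB2312-encoded server would send them (made-up sample)
page = "<title>股票列表</title>".encode("gb2312")

print(decode_body(page, "gb2312"))  # <title>股票列表</title>
# Decoding with the wrong encoding does not give the original text back
print(decode_body(page, "utf-8") == "<title>股票列表</title>")  # False
```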

2.2 Getting the Stock List (bs4 + Regular Expressions)

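```python
import re

from bs4 import BeautifulSoup


def getStockList(lst, stockURL):
    # getHTMLText is defined in 2.1
    html = getHTMLText(stockURL)
    soup = BeautifulSoup(html, 'html.parser')
    # Links to individual stocks are in <a> tags
    a = soup.find_all('a')
    for i in a:
        try:
            # The stock link is in the href attribute of the <a> tag;
            # extract "sh"/"sz" followed by 6 digits with a regular expression
            href = i.attrs['href']
            lst.append(re.findall(r"[s][hz]\d{6}", href)[0])
        except (KeyError, IndexError):
            # No href attribute, or the href contains no stock code
            continue
    print(lst)
```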
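To check the extraction logic without hitting the network, here is a minimal sketch that runs the same `[s][hz]\d{6}` pattern over a hypothetical snippet of list-page markup. It swaps in the standard library's `html.parser.HTMLParser` for BeautifulSoup so it has no third-party dependency, and it uses `re.search`, which returns `None` instead of raising `IndexError` when an href contains no stock code. `LinkCollector`, `extract_stock_codes` and the sample hrefs are illustrative assumptions, not Eastmoney's actual markup.

```python
import re
from html.parser import HTMLParser

# Same pattern as the article: "s", then "h" or "z", then six digits
STOCK_CODE = re.compile(r"[s][hz]\d{6}")


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag (stand-in for soup.find_all('a'))."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:  # skip <a> tags without an href instead of raising KeyError
                self.hrefs.append(href)


def extract_stock_codes(html):
    parser = LinkCollector()
    parser.feed(html)
    codes = []
    for href in parser.hrefs:
        match = STOCK_CODE.search(href)  # first match, or None
        if match:
            codes.append(match.group())
    return codes


# Hypothetical list-page markup
sample = """
<ul>
  <li><a href="http://quote.eastmoney.com/sh600000.html">Stock A(600000)</a></li>
  <li><a href="http://quote.eastmoney.com/sz000001.html">Stock B(000001)</a></li>
  <li><a href="http://quote.eastmoney.com/center.html">Market center</a></li>
  <li><a name="top">no href</a></li>
</ul>
"""
print(extract_stock_codes(sample))  # ['sh600000', 'sz000001']
```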

2.3 Getting the Main Stock Information

```python
import traceback

from bs4 import BeautifulSoup


def getStockInfo(lst, stockURL, fpath):
    for stock in lst:
        url = stockURL + stock + ".html"
        html = getHTMLText(url)
        try:
            if html == "":
                continue
            # Record each stock's information as key-value pairs
            infoDict = {}
            soup = BeautifulSoup(html, 'html.parser')
            stockInfo = soup.find('div', attrs={'class': 'stock-bets'})
            # Get the stock name
            name = stockInfo.find_all(attrs={'class': 'bets-name'})[0]
            # Add it to the dict; split() splits on whitespace, keep the first token
            # ('股票名称' means "stock name")
            infoDict.update({'股票名称': name.text.split()[0]})
            # The remaining fields are <dt> (key) / <dd> (value) pairs
            keyList = stockInfo.find_all('dt')
            valueList = stockInfo.find_all('dd')
            for i in range(len(keyList)):
                key = keyList[i].text
                val = valueList[i].text
                infoDict[key] = val
            # Append the collected information to the output file
            with open(fpath, 'a', encoding='utf-8') as f:
                f.write(str(infoDict) + '\n')
        except Exception:
            # Print the exception with its traceback, then go on to the next stock
            traceback.print_exc()
            continue
```
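The `<dt>`/`<dd>` pairing step can likewise be tried offline. This sketch again uses `html.parser.HTMLParser` in place of BeautifulSoup, and the markup it parses is a hypothetical stand-in for a `stock-bets` block, not the real Baidu page. It pairs keys and values with `zip()`, which stops at the shorter list, so an unmatched `<dt>` is dropped instead of raising an `IndexError` that would discard the whole stock. Unlike the original code, it also strips surrounding whitespace. `DtDdCollector` and `parse_dl` are hypothetical names.

```python
from html.parser import HTMLParser


class DtDdCollector(HTMLParser):
    """Gather the text of <dt> and <dd> elements in document order."""

    def __init__(self):
        super().__init__()
        self.keys, self.values = [], []
        self._current = None  # "dt", "dd" or None

    def handle_starttag(self, tag, attrs):
        if tag in ("dt", "dd"):
            self._current = tag
            (self.keys if tag == "dt" else self.values).append("")

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        # Text of nested tags inside <dt>/<dd> is appended as well
        if self._current == "dt":
            self.keys[-1] += data
        elif self._current == "dd":
            self.values[-1] += data


def parse_dl(html):
    collector = DtDdCollector()
    collector.feed(html)
    # zip() pairs each <dt> with the <dd> at the same position
    return {k.strip(): v.strip() for k, v in zip(collector.keys, collector.values)}


# Hypothetical markup; 今开 = opening price, 成交量 = volume
sample = """
<div class="stock-bets">
  <a class="bets-name">Sample Co. (000000)</a>
  <dl><dt>今开</dt><dd> 10.50 </dd></dl>
  <dl><dt>成交量</dt><dd>35.2万手</dd></dl>
</div>
"""
print(parse_dl(sample))  # {'今开': '10.50', '成交量': '35.2万手'}
```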

3. Complete Code

```python
# -*- coding: utf-8 -*-
"""
Created on Sat Feb 1 00:40:47 2020

@author: douzi
"""
import re
# The traceback module is used to print exception tracebacks
import traceback

import requests
from bs4 import BeautifulSoup


def getHTMLText(url, code='utf-8'):
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()
        # r.encoding = r.apparent_encoding
        # A focused crawler targets known pages, so the encoding can be fixed
        # in advance instead of being guessed for every response
        r.encoding = code
        print("url:", r.request.url)
        return r.text
    except requests.RequestException:
        return ""


def getStockList(lst, stockURL):
    # The stock list page is fetched as GB2312
    html = getHTMLText(stockURL, "GB2312")
    soup = BeautifulSoup(html, 'html.parser')
    # Links to individual stocks are in <a> tags
    a = soup.find_all('a')
    for i in a:
        try:
            # The stock link is in the href attribute of the <a> tag;
            # extract "sh"/"sz" followed by 6 digits with a regular expression
            href = i.attrs['href']
            lst.append(re.findall(r"[s][hz]\d{6}", href)[0])
        except (KeyError, IndexError):
            continue
    print(lst)


def getStockInfo(lst, stockURL, fpath):
    count = 0
    for stock in lst:
        url = stockURL + stock + ".html"
        html = getHTMLText(url)
        try:
            if html == "":
                continue
            # Record each stock's information as key-value pairs
            infoDict = {}
            soup = BeautifulSoup(html, 'html.parser')
            stockInfo = soup.find('div', attrs={'class': 'stock-bets'})
            # Get the stock name ('股票名称' means "stock name");
            # split() splits on whitespace, keep the first token
            name = stockInfo.find_all(attrs={'class': 'bets-name'})[0]
            infoDict.update({'股票名称': name.text.split()[0]})
            # The remaining fields are <dt> (key) / <dd> (value) pairs
            keyList = stockInfo.find_all('dt')
            valueList = stockInfo.find_all('dd')
            for i in range(len(keyList)):
                key = keyList[i].text
                val = valueList[i].text
                infoDict[key] = val
            # Append the collected information to the output file
            with open(fpath, 'a', encoding='utf-8') as f:
                f.write(str(infoDict) + '\n')
        except Exception:
            # Print the exception with its traceback
            traceback.print_exc()
        finally:
            # Count every stock, including skipped or failed ones, so the
            # progress reaches 100%; '\r' moves the cursor back to the start
            # of the line so each update overwrites the previous one
            count = count + 1
            print('\rProgress: {:.2f}%'.format(count * 100 / len(lst)), end='')


def main():
    # Page listing all stocks
    stock_list_url = "http://quote.eastmoney.com/stock_list.html"
    # Base URL of the per-stock information pages
    stock_info_url = "https://gupiao.baidu.com/stock/"
    output_file = "./Result_stock.txt"
    slist = []
    # Get the stock list
    getStockList(slist, stock_list_url)
    # Get each stock's information
    getStockInfo(slist, stock_info_url, output_file)


if __name__ == "__main__":
    main()
```
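Each line of `Result_stock.txt` is the `str()` of a Python dict, so the results can be loaded back with `ast.literal_eval`, which only parses Python literals and is therefore safer than `eval`. A minimal sketch follows; `load_results` and the sample records are hypothetical, and the demo writes a temporary file the same way `getStockInfo` does.

```python
import ast
import os
import tempfile


def load_results(path):
    """Read back a file written line by line with f.write(str(infoDict) + '\n')."""
    records = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines
                # literal_eval only evaluates literals (dicts, strings, ...)
                records.append(ast.literal_eval(line))
    return records


# Demo with made-up records, written in the same format as getStockInfo
sample_records = [{'股票名称': 'A', '今开': '10.50'}, {'股票名称': 'B', '今开': '8.21'}]
with tempfile.NamedTemporaryFile("w", encoding="utf-8", suffix=".txt", delete=False) as tmp:
    for record in sample_records:
        tmp.write(str(record) + '\n')
print(load_results(tmp.name) == sample_records)  # True
os.remove(tmp.name)
```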