Version History

| Version | Date |
|---|---|
| V1.0 | 2019.12.14 (Saturday) |

Preface

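AVFoundation is one of the most important frameworks on iOS: most of the APIs for capturing, playing, and editing audio and video live in it. The next several articles introduce and explain this framework. If you are interested, see my previous articles: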

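1. AVFoundation Framework Analysis (1): Basic Overview

2. AVFoundation Framework Analysis (2): Implementing Video Preview, Recording, and Saving to the Photo Album

3. AVFoundation Framework Analysis (3): Key Questions, an In-Depth Summary of the Framework

4. AVFoundation Framework Analysis (4): Key Questions, Exploring AVFoundation (1)

5. AVFoundation Framework Analysis (5): Key Questions, Exploring AVFoundation (2)

6. AVFoundation Framework Analysis (6): Composing Video and Audio (1)

7. AVFoundation Framework Analysis (7): Debugging Video Composition and Audio Mixing

8. AVFoundation Framework Analysis (8): Optimizing the User's Playback Experience

9. AVFoundation Framework Analysis (9): Changes in AVFoundation (1)

10. AVFoundation Framework Analysis (10): Changes in AVFoundation (2)

11. AVFoundation Framework Analysis (11): Changes in AVFoundation (3)

12. AVFoundation Framework Analysis (12): Changes in AVFoundation (4)

13. AVFoundation Framework Analysis (13): Building a Basic Playback App

14. AVFoundation Framework Analysis (14): Timecode Support in AVAssetWriter and AVAssetReader (1)

15. AVFoundation Framework Analysis (15): Timecode Support in AVAssetWriter and AVAssetReader (2)

16. AVFoundation Framework Analysis (16): A Simple Example of Playing, Recording, and Merging Videos (1)

17. AVFoundation Framework Analysis (17): A Simple Example of Playing, Recording, and Merging Videos, Source Code and Results (2)

18. AVFoundation Framework Analysis (18): AVAudioEngine Basic Overview (1)

19. AVFoundation Framework Analysis (19): AVAudioEngine in Detail with a Simple Example (2)

20. AVFoundation Framework Analysis (20): AVAudioEngine in Detail with a Simple Example, Source Code (3)

21. AVFoundation Framework Analysis (21): A Simple Video Streaming Preview and Playback Example, Analysis (1)

22. AVFoundation Framework Analysis (22): A Simple Video Streaming Preview and Playback Example, Source Code (2)

23. AVFoundation Framework Analysis (23): Adding Overlays and Animations to Video Layers (1)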

Source Code

1. Swift

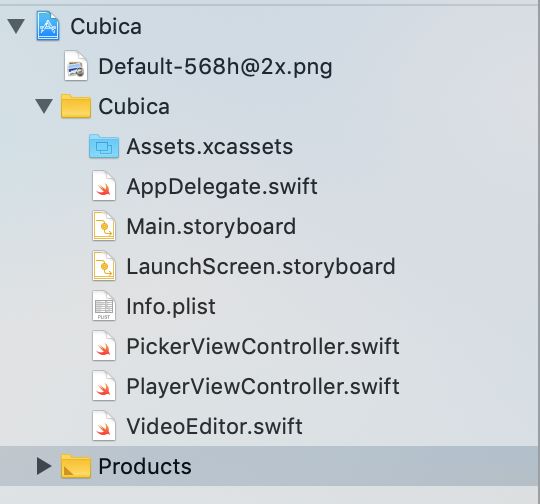

First, let's look at the project structure.

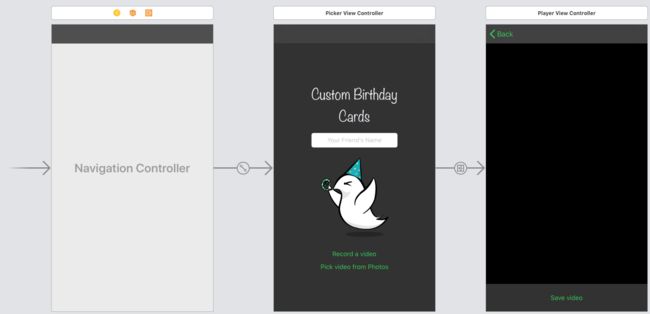

Next comes the contents of the storyboard.

Then the source code.

1. PickerViewController.swift

```swift
import UIKit
import MobileCoreServices
import AVKit

class PickerViewController: UIViewController {
  private let editor = VideoEditor()

  @IBOutlet weak var activityIndicator: UIActivityIndicatorView!
  @IBOutlet weak var recordButton: UIButton!
  @IBOutlet weak var pickButton: UIButton!
  @IBOutlet weak var imageView: UIImageView!
  @IBOutlet weak var nameTextField: UITextField!

  @IBAction func recordButtonTapped(_ sender: Any) {
    pickVideo(from: .camera)
  }

  @IBAction func pickVideoButtonTapped(_ sender: Any) {
    pickVideo(from: .savedPhotosAlbum)
  }

  override func viewDidLoad() {
    super.viewDidLoad()
    nameTextField.addTarget(self, action: #selector(nameTextFieldChanged), for: .editingChanged)
    nameTextField.delegate = self
    nameTextField.returnKeyType = .done
    // Nothing can be picked or recorded until a name has been entered
    recordButton.isEnabled = false
    pickButton.isEnabled = false
  }

  @objc private func nameTextFieldChanged(_ textField: UITextField) {
    let text = textField.text ?? ""
    if text.isEmpty {
      recordButton.isEnabled = false
      pickButton.isEnabled = false
    } else {
      // Recording additionally requires a camera (not available on the Simulator)
      recordButton.isEnabled = UIImagePickerController.isSourceTypeAvailable(.camera)
      pickButton.isEnabled = true
    }
  }

  override func viewWillAppear(_ animated: Bool) {
    super.viewWillAppear(animated)
    navigationController?.setNavigationBarHidden(true, animated: animated)
  }

  private func pickVideo(from sourceType: UIImagePickerController.SourceType) {
    let pickerController = UIImagePickerController()
    pickerController.sourceType = sourceType
    // Movies only; recording/transcoding quality is 1280x720
    pickerController.mediaTypes = [kUTTypeMovie as String]
    pickerController.videoQuality = .typeIFrame1280x720
    if sourceType == .camera {
      pickerController.cameraDevice = .front
    }
    pickerController.delegate = self
    present(pickerController, animated: true)
  }

  // Alternative way to show a video in AVPlayerViewController; not called in this flow
  private func showVideo(at url: URL) {
    let player = AVPlayer(url: url)
    let playerViewController = AVPlayerViewController()
    playerViewController.player = player
    present(playerViewController, animated: true) {
      player.play()
    }
  }

  // URL of the exported card, handed to PlayerViewController by the segue
  private var pickedURL: URL?

  override func prepare(for segue: UIStoryboardSegue, sender: Any?) {
    guard
      let url = pickedURL,
      let destination = segue.destination as? PlayerViewController
      else {
        return
    }
    destination.videoURL = url
  }

  private func showInProgress() {
    activityIndicator.startAnimating()
    imageView.alpha = 0.3
    pickButton.isEnabled = false
    recordButton.isEnabled = false
  }

  private func showCompleted() {
    activityIndicator.stopAnimating()
    imageView.alpha = 1
    pickButton.isEnabled = true
    recordButton.isEnabled = UIImagePickerController.isSourceTypeAvailable(.camera)
  }
}

extension PickerViewController: UIImagePickerControllerDelegate, UINavigationControllerDelegate {
  func imagePickerController(_ picker: UIImagePickerController, didFinishPickingMediaWithInfo info: [UIImagePickerController.InfoKey: Any]) {
    guard
      let url = info[.mediaURL] as? URL,
      let name = nameTextField.text
      else {
        print("Cannot get video URL")
        // Still close the picker so the user is not left stuck on it
        dismiss(animated: true)
        return
    }

    showInProgress()
    dismiss(animated: true) {
      // Build the birthday card, then push the player via the "showVideo" segue
      self.editor.makeBirthdayCard(fromVideoAt: url, forName: name) { exportedURL in
        self.showCompleted()
        guard let exportedURL = exportedURL else {
          return
        }
        self.pickedURL = exportedURL
        self.performSegue(withIdentifier: "showVideo", sender: nil)
      }
    }
  }
}

extension PickerViewController: UITextFieldDelegate {
  func textFieldShouldReturn(_ textField: UITextField) -> Bool {
    textField.resignFirstResponder()
    return true
  }
}
```
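The enable/disable rule for the two buttons is spread across viewDidLoad(), nameTextFieldChanged(_:), showInProgress() and showCompleted(). As a sketch, that rule can be pulled out into a pure function that is easy to unit-test. `PickerButtonState` and `buttonState(forName:cameraAvailable:)` are hypothetical names, not part of the project, and the camera check is passed in as a plain `Bool` so the code runs without UIKit.

```swift
// Hypothetical value type describing which PickerViewController buttons are enabled.
struct PickerButtonState: Equatable {
    let recordEnabled: Bool
    let pickEnabled: Bool
}

/// Same rule as nameTextFieldChanged(_:): nothing is enabled until a name is
/// entered, and recording additionally needs an available camera.
func buttonState(forName name: String?, cameraAvailable: Bool) -> PickerButtonState {
    let hasName = !(name ?? "").isEmpty
    return PickerButtonState(recordEnabled: hasName && cameraAvailable,
                             pickEnabled: hasName)
}

// In the view controller, cameraAvailable would be
// UIImagePickerController.isSourceTypeAvailable(.camera).
let simulatorState = buttonState(forName: "Alice", cameraAvailable: false)
print(simulatorState.recordEnabled, simulatorState.pickEnabled) // prints "false true"
```

One possible benefit of this design: showCompleted() re-enables pickButton unconditionally, so calling the same function there with `nameTextField.text` would keep the buttons consistent with the text field after an export finishes.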

2. PlayerViewController.swift

```swift
import UIKit
import AVKit
import Photos

class PlayerViewController: UIViewController {
  var videoURL: URL!
  private var player: AVPlayer!
  private var playerLayer: AVPlayerLayer!
  // Token returned by the closure-based observer; required to unregister it later
  private var playbackEndObserver: NSObjectProtocol?

  @IBOutlet weak var videoView: UIView!

  @IBAction func saveVideoButtonTapped(_ sender: Any) {
    // The authorization callback may arrive on a background queue
    PHPhotoLibrary.requestAuthorization { [weak self] status in
      switch status {
      case .authorized:
        self?.saveVideoToPhotos()
      default:
        print("Photos permissions not granted.")
        return
      }
    }
  }

  private func saveVideoToPhotos() {
    PHPhotoLibrary.shared().performChanges({
      PHAssetChangeRequest.creationRequestForAssetFromVideo(atFileURL: self.videoURL)
    }) { [weak self] isSaved, error in
      if isSaved {
        print("Video saved.")
      } else {
        print("Cannot save video.")
        print(error ?? "unknown error")
      }
      // Navigation is UI work, so hop back to the main queue
      DispatchQueue.main.async {
        self?.navigationController?.popViewController(animated: true)
      }
    }
  }

  override func viewDidLoad() {
    super.viewDidLoad()
    player = AVPlayer(url: videoURL)
    playerLayer = AVPlayerLayer(player: player)
    playerLayer.frame = videoView.bounds
    videoView.layer.addSublayer(playerLayer)
    player.play()

    // Loop playback: restart when *this* player's item reaches its end.
    // The returned token must be kept; removeObserver(self, ...) does not
    // remove closure-based observers.
    playbackEndObserver = NotificationCenter.default.addObserver(
      forName: .AVPlayerItemDidPlayToEndTime,
      object: player.currentItem,
      queue: .main) { [weak self] _ in self?.restart() }
  }

  override func viewWillAppear(_ animated: Bool) {
    super.viewWillAppear(animated)
    navigationController?.setNavigationBarHidden(false, animated: animated)
  }

  override func viewDidLayoutSubviews() {
    super.viewDidLayoutSubviews()
    // Keep the player layer matched to the container view after layout changes
    playerLayer.frame = videoView.bounds
  }

  private func restart() {
    player.seek(to: .zero)
    player.play()
  }

  deinit {
    if let observer = playbackEndObserver {
      NotificationCenter.default.removeObserver(observer)
    }
  }
}
```
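PlayerViewController loops the video by listening for `.AVPlayerItemDidPlayToEndTime` through the closure-based `addObserver(forName:object:queue:using:)`. That API returns an opaque observer token, and only `removeObserver(token)` unregisters the closure; `removeObserver(self, name:object:)` does not. The Foundation-only sketch below illustrates that token lifecycle. `LoopingObserver` and the notification name are hypothetical stand-ins (a counter replaces `seek(to:)` and `play()`) so it runs without AVFoundation.

```swift
import Foundation

// Hypothetical stand-in for .AVPlayerItemDidPlayToEndTime.
let didPlayToEnd = Notification.Name("HypotheticalDidPlayToEnd")

final class LoopingObserver {
    private let center: NotificationCenter
    private var token: NSObjectProtocol?
    private(set) var restartCount = 0

    init(center: NotificationCenter, item: NSObject) {
        self.center = center
        // Passing the item as `object` limits delivery to that item's notifications;
        // queue: nil delivers synchronously on the posting thread.
        token = center.addObserver(forName: didPlayToEnd, object: item, queue: nil) { [weak self] _ in
            self?.restartCount += 1 // stands in for player.seek(to: .zero); player.play()
        }
    }

    /// Unregisters the closure; removeObserver(self) would not do this.
    func stop() {
        if let token = token {
            center.removeObserver(token)
            self.token = nil
        }
    }

    deinit {
        stop()
    }
}
```

Applied to PlayerViewController, the same pattern means storing the token and passing `player.currentItem` as the object, so other items finishing playback do not trigger a restart.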

3. VideoEditor.swift

```swift
import UIKit
import AVFoundation

class VideoEditor {
  func makeBirthdayCard(fromVideoAt videoURL: URL, forName name: String, onComplete: @escaping (URL?) -> Void) {
    print(videoURL)
    let asset = AVURLAsset(url: videoURL)
    let composition = AVMutableComposition()

    // 1. Create an empty video track in the composition and grab the source video track
    guard
      let compositionTrack = composition.addMutableTrack(
        withMediaType: .video, preferredTrackID: kCMPersistentTrackID_Invalid),
      let assetTrack = asset.tracks(withMediaType: .video).first
      else {
        print("Something is wrong with the asset.")
        onComplete(nil)
        return
    }

    // 2. Copy the whole video (and the audio, if there is any) into the composition
    do {
      let timeRange = CMTimeRange(start: .zero, duration: asset.duration)
      try compositionTrack.insertTimeRange(timeRange, of: assetTrack, at: .zero)

      if let audioAssetTrack = asset.tracks(withMediaType: .audio).first,
        let compositionAudioTrack = composition.addMutableTrack(
          withMediaType: .audio,
          preferredTrackID: kCMPersistentTrackID_Invalid) {
        try compositionAudioTrack.insertTimeRange(
          timeRange,
          of: audioAssetTrack,
          at: .zero)
      }
    } catch {
      print(error)
      onComplete(nil)
      return
    }

    // 3. Work out the render size: portrait recordings store a landscape
    //    naturalSize plus a 90-degree preferredTransform
    compositionTrack.preferredTransform = assetTrack.preferredTransform
    let videoInfo = orientation(from: assetTrack.preferredTransform)

    let videoSize: CGSize
    if videoInfo.isPortrait {
      videoSize = CGSize(
        width: assetTrack.naturalSize.height,
        height: assetTrack.naturalSize.width)
    } else {
      videoSize = assetTrack.naturalSize
    }

    // 4. Layer tree: background at the bottom, video inset by 20 on each side, overlay on top
    let backgroundLayer = CALayer()
    backgroundLayer.frame = CGRect(origin: .zero, size: videoSize)
    let videoLayer = CALayer()
    videoLayer.frame = CGRect(
      x: 20,
      y: 20,
      width: videoSize.width - 40,
      height: videoSize.height - 40)
    let overlayLayer = CALayer()
    overlayLayer.frame = CGRect(origin: .zero, size: videoSize)

    backgroundLayer.backgroundColor = UIColor(named: "rw-green")?.cgColor
    backgroundLayer.contents = UIImage(named: "background")?.cgImage
    backgroundLayer.contentsGravity = .resizeAspectFill

    addConfetti(to: overlayLayer)
    addImage(to: overlayLayer, videoSize: videoSize)
    add(
      text: "Happy Birthday,\n\(name)",
      to: overlayLayer,
      videoSize: videoSize)

    let outputLayer = CALayer()
    outputLayer.frame = CGRect(origin: .zero, size: videoSize)
    outputLayer.addSublayer(backgroundLayer)
    outputLayer.addSublayer(videoLayer)
    outputLayer.addSublayer(overlayLayer)

    // 5. Video composition at 30 fps; the video frames are rendered into
    //    videoLayer, and the whole outputLayer tree is composited on top
    let videoComposition = AVMutableVideoComposition()
    videoComposition.renderSize = videoSize
    videoComposition.frameDuration = CMTime(value: 1, timescale: 30)
    videoComposition.animationTool = AVVideoCompositionCoreAnimationTool(
      postProcessingAsVideoLayer: videoLayer,
      in: outputLayer)

    let instruction = AVMutableVideoCompositionInstruction()
    instruction.timeRange = CMTimeRange(
      start: .zero,
      duration: composition.duration)
    videoComposition.instructions = [instruction]
    let layerInstruction = compositionLayerInstruction(
      for: compositionTrack,
      assetTrack: assetTrack)
    instruction.layerInstructions = [layerInstruction]

    // 6. Export to a uniquely named .mov file in the temporary directory
    guard let export = AVAssetExportSession(
      asset: composition,
      presetName: AVAssetExportPresetHighestQuality)
      else {
        print("Cannot create export session.")
        onComplete(nil)
        return
    }

    let videoName = UUID().uuidString
    let exportURL = URL(fileURLWithPath: NSTemporaryDirectory())
      .appendingPathComponent(videoName)
      .appendingPathExtension("mov")

    export.videoComposition = videoComposition
    export.outputFileType = .mov
    export.outputURL = exportURL

    export.exportAsynchronously {
      // The completion handler may run on a background queue; report back on main
      DispatchQueue.main.async {
        switch export.status {
        case .completed:
          onComplete(exportURL)
        default:
          print("Something went wrong during export.")
          print(export.error ?? "unknown error")
          onComplete(nil)
        }
      }
    }
  }

  // Adds the "overlay" image at full video width, keeping its aspect ratio,
  // offset by 15% of its height so part of it extends past the frame edge.
  private func addImage(to layer: CALayer, videoSize: CGSize) {
    let image = UIImage(named: "overlay")!
    let imageLayer = CALayer()

    let aspect: CGFloat = image.size.width / image.size.height
    let width = videoSize.width
    let height = width / aspect
    imageLayer.frame = CGRect(
      x: 0,
      y: -height * 0.15,
      width: width,
      height: height)

    imageLayer.contents = image.cgImage
    layer.addSublayer(imageLayer)
  }

  // Adds the greeting text with a pulsing (0.8x to 1.2x) scale animation.
  private func add(text: String, to layer: CALayer, videoSize: CGSize) {
    let attributedText = NSAttributedString(
      string: text,
      attributes: [
        // Fall back to a system font rather than storing a nil Optional in the attributes
        .font: UIFont(name: "ArialRoundedMTBold", size: 60) ?? UIFont.boldSystemFont(ofSize: 60),
        .foregroundColor: UIColor(named: "rw-green")!,
        .strokeColor: UIColor.white,
        // A negative stroke width both strokes and fills the glyphs
        .strokeWidth: -3])

    let textLayer = CATextLayer()
    textLayer.string = attributedText
    textLayer.shouldRasterize = true
    textLayer.rasterizationScale = UIScreen.main.scale
    textLayer.backgroundColor = UIColor.clear.cgColor
    textLayer.alignmentMode = .center
    textLayer.frame = CGRect(
      x: 0,
      y: videoSize.height * 0.66,
      width: videoSize.width,
      height: 150)
    textLayer.displayIfNeeded()

    let scaleAnimation = CABasicAnimation(keyPath: "transform.scale")
    scaleAnimation.fromValue = 0.8
    scaleAnimation.toValue = 1.2
    scaleAnimation.duration = 0.5
    scaleAnimation.repeatCount = .greatestFiniteMagnitude
    scaleAnimation.autoreverses = true
    scaleAnimation.timingFunction = CAMediaTimingFunction(name: .easeInEaseOut)
    // A beginTime of 0 would be interpreted as the current host time;
    // AVCoreAnimationBeginTimeAtZero pins the animation to the start of the video
    scaleAnimation.beginTime = AVCoreAnimationBeginTimeAtZero
    // Keep the animation attached for the whole export
    scaleAnimation.isRemovedOnCompletion = false
    textLayer.add(scaleAnimation, forKey: "scale")

    layer.addSublayer(textLayer)
  }

  // Maps the track's preferredTransform to an orientation; the two
  // 90-degree rotations mean the video was shot in portrait.
  private func orientation(from transform: CGAffineTransform) -> (orientation: UIImage.Orientation, isPortrait: Bool) {
    var assetOrientation = UIImage.Orientation.up
    var isPortrait = false
    if transform.a == 0 && transform.b == 1.0 && transform.c == -1.0 && transform.d == 0 {
      assetOrientation = .right
      isPortrait = true
    } else if transform.a == 0 && transform.b == -1.0 && transform.c == 1.0 && transform.d == 0 {
      assetOrientation = .left
      isPortrait = true
    } else if transform.a == 1.0 && transform.b == 0 && transform.c == 0 && transform.d == 1.0 {
      assetOrientation = .up
    } else if transform.a == -1.0 && transform.b == 0 && transform.c == 0 && transform.d == -1.0 {
      assetOrientation = .down
    }
    return (assetOrientation, isPortrait)
  }

  // Applies the source track's preferredTransform so the frames render upright.
  private func compositionLayerInstruction(for track: AVCompositionTrack, assetTrack: AVAssetTrack) -> AVMutableVideoCompositionLayerInstruction {
    let instruction = AVMutableVideoCompositionLayerInstruction(assetTrack: track)
    let transform = assetTrack.preferredTransform
    instruction.setTransform(transform, at: .zero)
    return instruction
  }

  // 17 confetti emitter cells with random images, colors, speeds and sizes,
  // emitted from a line spanning the layer's width just past its height edge.
  private func addConfetti(to layer: CALayer) {
    let images: [UIImage] = (0...5).map { UIImage(named: "confetti\($0)")! }
    let colors: [UIColor] = [.systemGreen, .systemRed, .systemBlue, .systemPink, .systemOrange, .systemPurple, .systemYellow]
    let cells: [CAEmitterCell] = (0...16).map { _ in
      let cell = CAEmitterCell()
      cell.contents = images.randomElement()?.cgImage
      cell.birthRate = 3
      cell.lifetime = 12
      cell.lifetimeRange = 0
      cell.velocity = CGFloat.random(in: 100...200)
      cell.velocityRange = 0
      cell.emissionLongitude = 0
      cell.emissionRange = 0.8
      cell.spin = 4
      cell.color = colors.randomElement()?.cgColor
      cell.scale = CGFloat.random(in: 0.2...0.8)
      return cell
    }

    let emitter = CAEmitterLayer()
    emitter.emitterPosition = CGPoint(x: layer.frame.size.width / 2, y: layer.frame.size.height + 5)
    emitter.emitterShape = .line
    emitter.emitterSize = CGSize(width: layer.frame.size.width, height: 2)
    emitter.emitterCells = cells
    layer.addSublayer(emitter)
  }
}
```
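In makeBirthdayCard, the render size swaps naturalSize's width and height only when orientation(from:) matches one of the two exact 90-degree matrices. A commonly used alternative is to apply the track's preferredTransform to naturalSize and take absolute values (on Apple platforms, `assetTrack.naturalSize.applying(assetTrack.preferredTransform)`). The sketch below shows that arithmetic in plain Swift, assuming the transform is a rotation by a multiple of 90 degrees; `LinearPart` and `renderSize(width:height:transform:)` are hypothetical names used only for illustration.

```swift
// Hypothetical stand-in for the a, b, c, d fields of CGAffineTransform,
// so the arithmetic runs without CoreGraphics. Translation (tx, ty) is
// omitted because it does not change a size.
struct LinearPart {
    let a: Double, b: Double, c: Double, d: Double
}

/// Mirrors CGSize.applying(_:) (width' = a*w + c*h, height' = b*w + d*h)
/// followed by abs(), which yields the upright render size for rotations
/// by multiples of 90 degrees.
func renderSize(width: Double, height: Double, transform t: LinearPart) -> (width: Double, height: Double) {
    let w = t.a * width + t.c * height
    let h = t.b * width + t.d * height
    return (abs(w), abs(h))
}

// A 1920x1080 track with a 90-degree preferredTransform, as portrait recordings often have.
let portrait = renderSize(width: 1920, height: 1080,
                          transform: LinearPart(a: 0, b: 1, c: -1, d: 0))
print(portrait) // prints "(width: 1080.0, height: 1920.0)"
```

A possible advantage over the exact `==` comparisons in orientation(from:) is that matrix entries very slightly off from 0 or ±1 still produce the right size instead of silently falling back to the landscape case.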

Below is the result; the source video was picked from the photo album.

Afterword

This article covered adding overlays and animations to a video layer. If you found it useful, please give it a like or a follow~~~