Core idea: this is implemented by sharing the texture ID and the GL context (the EGL context) between the views.

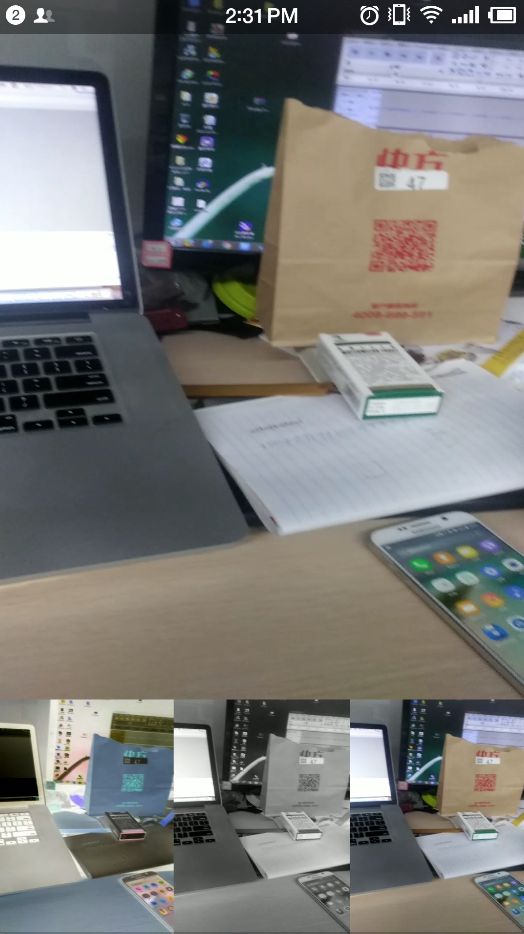

Result:

Modify the course code:

CameraActivity.java

    package com.ywl5320.wllivepusher;

    import android.content.res.Configuration;
    import android.os.Bundle;
    import android.support.annotation.Nullable;
    import android.support.v7.app.AppCompatActivity;
    import android.view.ViewGroup;
    import android.view.ViewTreeObserver;
    import android.widget.LinearLayout;

    import com.ywl5320.wllivepusher.camera.WlCameraRender;
    import com.ywl5320.wllivepusher.camera.WlCameraView;

    public class CameraActivity extends AppCompatActivity {

        private WlCameraView wlCameraView;
        private LinearLayout lyContent;

        @Override
        protected void onCreate(@Nullable Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_camera);
            wlCameraView = findViewById(R.id.cameraview);
            lyContent = findViewById(R.id.ly_content);
        }

        @Override
        protected void onDestroy() {
            super.onDestroy();
            wlCameraView.onDestory();
        }

        @Override
        public void onConfigurationChanged(Configuration newConfig) {
            super.onConfigurationChanged(newConfig);
            wlCameraView.previewAngle(this);
        }

        @Override
        protected void onResume() {
            super.onResume();
            wlCameraView.getViewTreeObserver().addOnGlobalLayoutListener(new ViewTreeObserver.OnGlobalLayoutListener() {
                @Override
                public void onGlobalLayout() {
                    wlCameraView.getWlTextureRender().setOnRenderCreateListener(new WlCameraRender.OnRenderCreateListener() {
                        @Override
                        public void onCreate(final int textid) {
                            // View changes must happen on the UI thread.
                            runOnUiThread(new Runnable() {
                                @Override
                                public void run() {
                                    if (lyContent.getChildCount() > 0) {
                                        lyContent.removeAllViews();
                                    }
                                    // One extra view per shader index (0..2), all sampling the same camera texture.
                                    for (int i = 0; i < 3; i++) {
                                        WlMutiSurfaceVeiw wlMutiSurfaceVeiw = new WlMutiSurfaceVeiw(CameraActivity.this);
                                        wlMutiSurfaceVeiw.setTextureId(textid, i);
                                        // Share the camera view's EGL context so the texture ID is usable in this view.
                                        wlMutiSurfaceVeiw.setSurfaceAndEglContext(null, wlCameraView.getEglContext());

                                        // Fill the container's height and keep the camera preview's aspect ratio.
                                        LinearLayout.LayoutParams lp = new LinearLayout.LayoutParams(ViewGroup.LayoutParams.MATCH_PARENT, ViewGroup.LayoutParams.MATCH_PARENT);
                                        lp.height = lyContent.getMeasuredHeight();
                                        lp.width = lp.height * wlCameraView.getMeasuredWidth() / wlCameraView.getMeasuredHeight();
                                        wlMutiSurfaceVeiw.setLayoutParams(lp);

                                        lyContent.addView(wlMutiSurfaceVeiw);
                                    }
                                }
                            });
                        }
                    });
                }
            });
        }
    }
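
The width calculation in the callback above scales each small view so that it keeps the camera preview's aspect ratio. The following is a minimal plain-Java sketch of that arithmetic, not part of the course code: the class name AspectRatio, the method scaledWidth, and the zero-height guard are assumptions added for illustration.

```java
public class AspectRatio {

    /**
     * Width that preserves srcWidth:srcHeight for a view that is targetHeight tall.
     * Returns 0 when the source has not been measured yet (hypothetical guard,
     * added here to avoid a division by zero).
     */
    public static int scaledWidth(int targetHeight, int srcWidth, int srcHeight) {
        if (srcHeight <= 0) {
            return 0;
        }
        // Multiply before dividing so integer division truncates only once;
        // use long to avoid overflow on large dimensions.
        return (int) ((long) targetHeight * srcWidth / srcHeight);
    }

    public static void main(String[] args) {
        // A 1080x1920 camera view shown in a strip that is 300 px tall.
        System.out.println(scaledWidth(300, 1080, 1920)); // prints 168
    }
}
```

In the activity, targetHeight corresponds to lyContent.getMeasuredHeight() and the source size to the measured size of wlCameraView. Both are 0 until the first layout pass, which is presumably why the code waits for onGlobalLayout.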

WlMutiSurfaceVeiw.java

    package com.ywl5320.wllivepusher;

    import android.content.Context;
    import android.util.AttributeSet;

    import com.ywl5320.wllivepusher.egl.WLEGLSurfaceView;

    public class WlMutiSurfaceVeiw extends WLEGLSurfaceView {

        private WlMutiRender wlMutiRender;

        public WlMutiSurfaceVeiw(Context context) {
            this(context, null);
        }

        public WlMutiSurfaceVeiw(Context context, AttributeSet attrs) {
            this(context, attrs, 0);
        }

        public WlMutiSurfaceVeiw(Context context, AttributeSet attrs, int defStyleAttr) {
            super(context, attrs, defStyleAttr);
            wlMutiRender = new WlMutiRender(context);
            setRender(wlMutiRender);
        }

        public void setTextureId(int textureId, int index) {
            if (wlMutiRender != null) {
                wlMutiRender.setTextureId(textureId, index);
            }
        }
    }

WlMutiRender.java

    package com.ywl5320.wllivepusher;

    import android.content.Context;
    import android.opengl.GLES20;

    import com.ywl5320.wllivepusher.egl.WLEGLSurfaceView;
    import com.ywl5320.wllivepusher.egl.WlShaderUtil;

    import java.nio.ByteBuffer;
    import java.nio.ByteOrder;
    import java.nio.FloatBuffer;

    public class WlMutiRender implements WLEGLSurfaceView.WlGLRender {

        private Context context;

        // Full-screen quad in normalized device coordinates (triangle-strip order).
        private float[] vertexData = {
                -1f, -1f,
                1f, -1f,
                -1f, 1f,
                1f, 1f
        };
        private FloatBuffer vertexBuffer;

        // Texture coordinates, one pair per quad vertex above.
        private float[] fragmentData = {
                0f, 1f,
                1f, 1f,
                0f, 0f,
                1f, 0f
        };
        private FloatBuffer fragmentBuffer;

        private int program;
        private int vPosition;
        private int fPosition;
        private int sampler;
        private int vboId;

        private int textureId; // texture shared from the camera renderer
        private int index;     // selects which fragment shader is loaded

        public void setTextureId(int textureId, int index) {
            this.textureId = textureId;
            this.index = index;
        }

        public WlMutiRender(Context context) {
            this.context = context;
            vertexBuffer = ByteBuffer.allocateDirect(vertexData.length * 4)
                    .order(ByteOrder.nativeOrder())
                    .asFloatBuffer()
                    .put(vertexData);
            vertexBuffer.position(0);

            fragmentBuffer = ByteBuffer.allocateDirect(fragmentData.length * 4)
                    .order(ByteOrder.nativeOrder())
                    .asFloatBuffer()
                    .put(fragmentData);
            fragmentBuffer.position(0);
        }

        @Override
        public void onSurfaceCreated() {
            String vertexSource = WlShaderUtil.getRawResource(context, R.raw.vertex_shader_screen);
            // Default shader; replaced below according to the view's index.
            String fragmentSource = WlShaderUtil.getRawResource(context, R.raw.fragment_shader_screen);
            if (index == 0) {
                fragmentSource = WlShaderUtil.getRawResource(context, R.raw.fragment_shader1);
            } else if (index == 1) {
                fragmentSource = WlShaderUtil.getRawResource(context, R.raw.fragment_shader2);
            } else if (index == 2) {
                fragmentSource = WlShaderUtil.getRawResource(context, R.raw.fragment_shader3);
            }

            program = WlShaderUtil.createProgram(vertexSource, fragmentSource);
            vPosition = GLES20.glGetAttribLocation(program, "v_Position");
            fPosition = GLES20.glGetAttribLocation(program, "f_Position");
            sampler = GLES20.glGetUniformLocation(program, "sTexture");

            // One VBO holds the vertex positions followed by the texture coordinates.
            int[] vbos = new int[1];
            GLES20.glGenBuffers(1, vbos, 0);
            vboId = vbos[0];

            GLES20.glBindBuffer(GLES20.GL_ARRAY_BUFFER, vboId);
            GLES20.glBufferData(GLES20.GL_ARRAY_BUFFER, vertexData.length * 4 + fragmentData.length * 4, null, GLES20.GL_STATIC_DRAW);
            GLES20.glBufferSubData(GLES20.GL_ARRAY_BUFFER, 0, vertexData.length * 4, vertexBuffer);
            GLES20.glBufferSubData(GLES20.GL_ARRAY_BUFFER, vertexData.length * 4, fragmentData.length * 4, fragmentBuffer);
            GLES20.glBindBuffer(GLES20.GL_ARRAY_BUFFER, 0);
        }

        @Override
        public void onSurfaceChanged(int width, int height) {
            GLES20.glViewport(0, 0, width, height);
        }

        @Override
        public void onDrawFrame() {
            // Set the clear color before clearing so it already applies to the first frame.
            GLES20.glClearColor(1f, 0f, 0f, 1f);
            GLES20.glClear(GLES20.GL_COLOR_BUFFER_BIT);

            GLES20.glUseProgram(program);
            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureId);
            GLES20.glBindBuffer(GLES20.GL_ARRAY_BUFFER, vboId);

            // Positions start at byte 0 of the VBO; stride is 2 floats = 8 bytes.
            GLES20.glEnableVertexAttribArray(vPosition);
            GLES20.glVertexAttribPointer(vPosition, 2, GLES20.GL_FLOAT, false, 8,
                    0);

            // Texture coordinates start right after the positions.
            GLES20.glEnableVertexAttribArray(fPosition);
            GLES20.glVertexAttribPointer(fPosition, 2, GLES20.GL_FLOAT, false, 8,
                    vertexData.length * 4);

            GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);

            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, 0);
            GLES20.glBindBuffer(GLES20.GL_ARRAY_BUFFER, 0);
        }
    }
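
The renderer above stores the vertex positions and the texture coordinates back to back in one VBO, then points the two attributes at byte offsets 0 and vertexData.length * 4 with a stride of 8 bytes. As a rough illustration of that layout, here is a plain-Java sketch that does the same packing on the CPU with java.nio only. The class VboLayout and its method names are hypothetical and do not exist in the course code.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

public class VboLayout {

    static final int BYTES_PER_FLOAT = 4;
    static final int COMPONENTS_PER_ATTRIBUTE = 2; // (x, y) or (u, v)

    /** Byte distance between consecutive attributes of the same kind. */
    public static int strideBytes() {
        return COMPONENTS_PER_ATTRIBUTE * BYTES_PER_FLOAT;
    }

    /** Byte offset at which the texture coordinates begin in the packed buffer. */
    public static int texCoordOffsetBytes(float[] vertexData) {
        return vertexData.length * BYTES_PER_FLOAT;
    }

    /** Packs positions first, then texture coordinates, into one direct buffer. */
    public static FloatBuffer pack(float[] vertexData, float[] texData) {
        FloatBuffer buffer = ByteBuffer
                .allocateDirect((vertexData.length + texData.length) * BYTES_PER_FLOAT)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
        buffer.put(vertexData).put(texData);
        buffer.position(0);
        return buffer;
    }

    public static void main(String[] args) {
        float[] vertexData = {-1f, -1f, 1f, -1f, -1f, 1f, 1f, 1f};
        float[] texData = {0f, 1f, 1f, 1f, 0f, 0f, 1f, 0f};

        FloatBuffer packed = pack(vertexData, texData);
        System.out.println(strideBytes());                       // prints 8
        System.out.println(texCoordOffsetBytes(vertexData));     // prints 32
        System.out.println(packed.capacity() * BYTES_PER_FLOAT); // prints 64
    }
}
```

With the 8-float arrays used in the renderer, the buffer is 64 bytes in total and the texture coordinates start at byte 32, which matches the last argument of the second glVertexAttribPointer call.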

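Be careful to pass the correct texture ID: setTextureId must receive the textid delivered by OnRenderCreateListener.onCreate, because that is the texture shared through the EGL context.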

Pay attention to these shaders:

fragment_shader1.glsl

    precision mediump float;
    varying vec2 ft_Position;
    uniform sampler2D sTexture;

    void main() {
        gl_FragColor = vec4(vec3(1.0 - texture2D(sTexture, ft_Position)), 1.0);
    }

fragment_shader2.glsl

    precision mediump float;
    varying vec2 ft_Position;
    uniform sampler2D sTexture;

    void main() {
        gl_FragColor = vec4(vec3(1.0 - texture2D(sTexture, ft_Position)), 1.0);
    }

fragment_shader3.glsl

    precision mediump float;
    varying vec2 ft_Position;
    uniform sampler2D sTexture;

    void main() {
        gl_FragColor = vec4(vec3(1.0 - texture2D(sTexture, ft_Position)), 1.0);
    }
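
As listed, all three fragment shaders compute the same thing: they sample the shared texture, subtract the RGB channels from 1.0 (a color inversion), and force alpha to 1.0. To make the per-pixel arithmetic concrete, here is a small CPU reference in plain Java. It is only an illustration under the assumption that colors are normalized floats in [0, 1]; the class InvertColor and its method are hypothetical.

```java
import java.util.Arrays;

public class InvertColor {

    /**
     * CPU equivalent of vec4(vec3(1.0 - texel), 1.0):
     * inverts R, G and B and replaces alpha with 1.0.
     */
    public static float[] invert(float[] rgba) {
        return new float[] {
                1f - rgba[0],
                1f - rgba[1],
                1f - rgba[2],
                1f // the shader discards the sampled alpha
        };
    }

    public static void main(String[] args) {
        float[] texel = {0.25f, 0.5f, 1f, 0.3f};
        System.out.println(Arrays.toString(invert(texel))); // prints [0.75, 0.5, 0.0, 1.0]
    }
}
```

To tell the three views apart on screen, each shader file would need a different color transform in place of the shared inversion.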