Starting ServiceManager

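ServiceManager manages Android's system services and provides the interfaces for registering and looking up those services. It is started by the init process and runs as a daemon. The init script declares it as follows: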

service servicemanager /system/bin/servicemanager

class core

user system

group system readproc

critical

onrestart restart healthd

onrestart restart zygote

onrestart restart audioserver

onrestart restart media

onrestart restart surfaceflinger

onrestart restart inputflinger

onrestart restart drm

onrestart restart cameraserver

writepid /dev/cpuset/system-background/tasks

Running ServiceManager

int main()

{

struct binder_state *bs;

// Open the binder driver and map a 128 KB region for receiving binder data

bs = binder_open(128*1024);

if (!bs) {

ALOGE("failed to open binder driver\n");

return -1;

}

// Register as the context manager (the service manager)

if (binder_become_context_manager(bs)) {

ALOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

......

// Enter the binder message loop and handle incoming binder requests

binder_loop(bs, svcmgr_handler);

return 0;

}

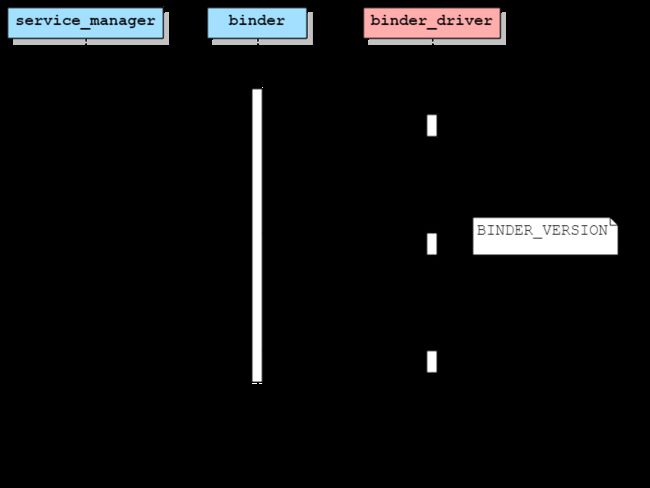

Opening binder

The analysis starts with binder_open.

struct binder_state *binder_open(size_t mapsize)

{

struct binder_state *bs;

struct binder_version vers;

bs = malloc(sizeof(*bs));

if (!bs) {

errno = ENOMEM;

return NULL;

}

// Open the binder device file; this ends up in the driver's binder_open (see the binder driver notes)

bs->fd = open("/dev/binder", O_RDWR | O_CLOEXEC);

if (bs->fd < 0) {

fprintf(stderr,"binder: cannot open device (%s)\n",

strerror(errno));

goto fail_open;

}

......

// Check the driver's protocol version so that user space and the binder driver agree on the protocol

if ((ioctl(bs->fd, BINDER_VERSION, &vers) == -1) ||

(vers.protocol_version != BINDER_CURRENT_PROTOCOL_VERSION)) {

fprintf(stderr,

"binder: kernel driver version (%d) differs from user space version (%d)\n",

vers.protocol_version, BINDER_CURRENT_PROTOCOL_VERSION);

goto fail_open;

}

bs->mapsize = mapsize;

// Map the address space used to receive binder data; this ends up in the driver's binder_mmap (see the binder driver notes)

bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);

if (bs->mapped == MAP_FAILED) {

fprintf(stderr,"binder: cannot map device (%s)\n",

strerror(errno));

goto fail_map;

}

return bs;

......

}

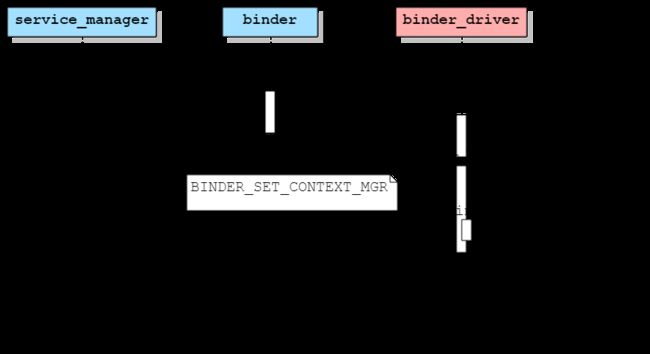
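The open-then-map sequence and its goto-based cleanup can be tried without a binder device. The following is only a sketch under assumptions: struct dev_state, dev_open and dev_close are hypothetical stand-ins for binder_state and binder_open, the version check is left out, and the cleanup labels are my own guess at what the elided part of the listing does rather than a copy of it.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <fcntl.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical stand-in for struct binder_state. */
struct dev_state {
    int fd;
    void *mapped;
    size_t mapsize;
};

/* Open `path` and map `mapsize` bytes read-only; returns NULL on failure. */
struct dev_state *dev_open(const char *path, size_t mapsize)
{
    struct dev_state *ds = malloc(sizeof(*ds));

    if (!ds)
        return NULL;

    ds->fd = open(path, O_RDWR | O_CLOEXEC);
    if (ds->fd < 0)
        goto fail_open;

    ds->mapsize = mapsize;
    /* Same flags as the listing: a private, read-only mapping. */
    ds->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, ds->fd, 0);
    if (ds->mapped == MAP_FAILED)
        goto fail_map;

    return ds;

fail_map:
    close(ds->fd);      /* undo open() */
fail_open:
    free(ds);           /* undo malloc() */
    return NULL;
}

/* Release everything dev_open acquired, in reverse order. */
void dev_close(struct dev_state *ds)
{
    assert(ds != NULL);
    munmap(ds->mapped, ds->mapsize);
    close(ds->fd);
    free(ds);
}
```

Calling dev_open("/dev/zero", 128 * 1024) on a Linux machine exercises the success path; a path that does not exist exercises fail_open.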

Registering as the service manager

Next comes binder_become_context_manager.

int binder_become_context_manager(struct binder_state *bs)

{

// Call ioctl directly to enter the binder driver

return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0);

}

Next, see how the driver's binder_ioctl handles the BINDER_SET_CONTEXT_MGR request.

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

// The calling process's binder_proc

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

// ubuf is 0 for this request, because the ioctl argument is 0

void __user *ubuf = (void __user *)arg;

/*pr_info("binder_ioctl: %d:%d %x %lx\n",

proc->pid, current->pid, cmd, arg);*/

trace_binder_ioctl(cmd, arg);

......

binder_lock(__func__);

// Get the binder_thread of the current thread

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

......

case BINDER_SET_CONTEXT_MGR:

// Register as the context manager

ret = binder_ioctl_set_ctx_mgr(filp);

if (ret)

goto err;

break;

......

}

......

}

First, the implementation of binder_get_thread:

static struct binder_thread *binder_get_thread(struct binder_proc *proc)

{

struct binder_thread *thread = NULL;

struct rb_node *parent = NULL;

struct rb_node **p = &proc->threads.rb_node;

// Search the red-black tree using the current thread's pid as the key

while (*p) {

parent = *p;

thread = rb_entry(parent, struct binder_thread, rb_node);

if (current->pid < thread->pid)

p = &(*p)->rb_left;

else if (current->pid > thread->pid)

p = &(*p)->rb_right;

else

break;

}

// No binder_thread exists for the current thread yet, so create one

if (*p == NULL) {

thread = kzalloc(sizeof(*thread), GFP_KERNEL);

if (thread == NULL)

return NULL;

binder_stats_created(BINDER_STAT_THREAD);

thread->proc = proc;

thread->pid = current->pid;

init_waitqueue_head(&thread->wait);

INIT_LIST_HEAD(&thread->todo);

// Insert it into the red-black tree of binder threads owned by binder_proc

rb_link_node(&thread->rb_node, parent, p);

rb_insert_color(&thread->rb_node, &proc->threads);

thread->looper |= BINDER_LOOPER_STATE_NEED_RETURN;

thread->return_error = BR_OK;

thread->return_error2 = BR_OK;

}

return thread;

}
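The find-or-create lookup in binder_get_thread can be tried out in user space. The sketch below is a simplification under assumptions: struct thread_rec and get_thread are hypothetical names, and a plain unbalanced binary search tree stands in for the kernel rbtree, so there is no rebalancing step corresponding to rb_insert_color.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical user-space stand-in for struct binder_thread. */
struct thread_rec {
    int pid;
    struct thread_rec *left, *right;
};

/*
 * Return the record for `pid`, creating and linking it on first use.
 * Returns NULL only if allocation fails.
 */
struct thread_rec *get_thread(struct thread_rec **root, int pid)
{
    struct thread_rec **p = root;

    assert(root != NULL);

    /* Walk down; `p` always points at the link to fill on a miss. */
    while (*p) {
        if (pid < (*p)->pid)
            p = &(*p)->left;
        else if (pid > (*p)->pid)
            p = &(*p)->right;
        else
            return *p;              /* record already exists */
    }

    *p = calloc(1, sizeof(**p));    /* zero-filled, like kzalloc */
    if (*p)
        (*p)->pid = pid;
    return *p;
}
```

Keeping a pointer to the link rather than to the node is what lets the miss case insert without a second search, which is the same reason the driver tracks both parent and p.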

Next, the implementation of binder_ioctl_set_ctx_mgr:

static int binder_ioctl_set_ctx_mgr(struct file *filp)

{

int ret = 0;

// The calling process's binder_proc

struct binder_proc *proc = filp->private_data;

// Effective uid of the calling thread

kuid_t curr_euid = current_euid();

if (binder_context_mgr_node != NULL) {

pr_err("BINDER_SET_CONTEXT_MGR already set\n");

ret = -EBUSY;

goto out;

}

ret = security_binder_set_context_mgr(proc->tsk);

if (ret < 0)

goto out;

if (uid_valid(binder_context_mgr_uid)) {

if (!uid_eq(binder_context_mgr_uid, curr_euid)) {

pr_err("BINDER_SET_CONTEXT_MGR bad uid %d != %d\n",

from_kuid(&init_user_ns, curr_euid),

from_kuid(&init_user_ns,

binder_context_mgr_uid));

ret = -EPERM;

goto out;

}

} else {

binder_context_mgr_uid = curr_euid;

}

// Create a binder_node and store it in the global binder_context_mgr_node

binder_context_mgr_node = binder_new_node(proc, 0, 0);

if (binder_context_mgr_node == NULL) {

ret = -ENOMEM;

goto out;

}

// Take the initial strong and weak references

binder_context_mgr_node->local_weak_refs++;

binder_context_mgr_node->local_strong_refs++;

binder_context_mgr_node->has_strong_ref = 1;

binder_context_mgr_node->has_weak_ref = 1;

out:

return ret;

}

Next, the implementation of binder_new_node:

static struct binder_node *binder_new_node(struct binder_proc *proc,

binder_uintptr_t ptr,

binder_uintptr_t cookie)

{

// For binder_context_mgr_node, ptr and cookie are both 0

struct rb_node **p = &proc->nodes.rb_node;

struct rb_node *parent = NULL;

struct binder_node *node;

// Search the binder_node red-black tree using ptr as the key

while (*p) {

parent = *p;

node = rb_entry(parent, struct binder_node, rb_node);

if (ptr < node->ptr)

p = &(*p)->rb_left;

else if (ptr > node->ptr)

p = &(*p)->rb_right;

else

return NULL;

}

// Create the binder_node

node = kzalloc(sizeof(*node), GFP_KERNEL);

if (node == NULL)

return NULL;

binder_stats_created(BINDER_STAT_NODE);

// Insert it into binder_proc's red-black tree of binder_nodes

rb_link_node(&node->rb_node, parent, p);

rb_insert_color(&node->rb_node, &proc->nodes);

node->debug_id = ++binder_last_id;

node->proc = proc;

node->ptr = ptr;

node->cookie = cookie;

node->work.type = BINDER_WORK_NODE;

INIT_LIST_HEAD(&node->work.entry);

INIT_LIST_HEAD(&node->async_todo);

binder_debug(BINDER_DEBUG_INTERNAL_REFS,

"%d:%d node %d u%016llx c%016llx created\n",

proc->pid, current->pid, node->debug_id,

(u64)node->ptr, (u64)node->cookie);

return node;

}

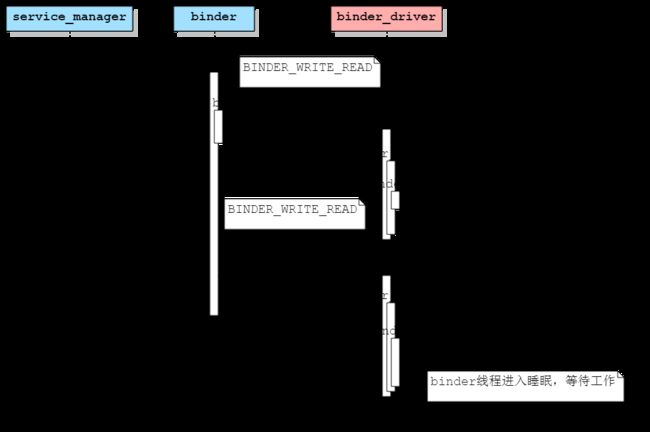

Entering the binder message loop and handling binder requests

The analysis continues with binder_loop.

// binder_write_read is the control structure for exchanging data between user space and the binder driver

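struct binder_write_read {

binder_size_t write_size; // size of the command data sent from user space to the binder driver

binder_size_t write_consumed; // number of bytes of that data the driver has consumed

binder_uintptr_t write_buffer; // address of the command data buffer

binder_size_t read_size; // size of the buffer that receives data returned by the driver

binder_size_t read_consumed; // number of bytes the driver returned to user space

binder_uintptr_t read_buffer; // address of the buffer that receives data returned by the driver

};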

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

uint32_t readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

// Send the BC_ENTER_LOOPER command to the binder driver

binder_write(bs, readbuf, sizeof(uint32_t));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

// The binder thread sleeps here, waiting for client requests

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

......

// Handle client requests such as service registration and lookup

res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);

......

}

}

First, see how BC_ENTER_LOOPER is handled by the binder driver, starting from binder_write, which sends it.

int binder_write(struct binder_state *bs, void *data, size_t len)

{

struct binder_write_read bwr;

int res;

bwr.write_size = len; // len = 4

bwr.write_consumed = 0;

bwr.write_buffer = (uintptr_t) data; // data holds BC_ENTER_LOOPER

bwr.read_size = 0; // read_size is 0, so the binder thread does not block in the driver

bwr.read_consumed = 0;

bwr.read_buffer = 0;

// Issue the BINDER_WRITE_READ ioctl to the binder driver

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr,"binder_write: ioctl failed (%s)\n",

strerror(errno));

}

return res;

}
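binder_write and binder_loop fill the same control structure in two different ways: one call only writes, the other only reads. The sketch below contrasts the two. It is an illustration under assumptions: struct bwr_model is a hypothetical mirror of binder_write_read with fixed 64-bit fields, and the two helper names are mine, not part of servicemanager.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical mirror of struct binder_write_read. */
struct bwr_model {
    uint64_t write_size, write_consumed, write_buffer;
    uint64_t read_size, read_consumed, read_buffer;
};

/* Write-only request, as binder_write builds it: read_size stays 0. */
void bwr_prepare_write(struct bwr_model *bwr, const void *data, size_t len)
{
    assert(bwr != NULL);
    memset(bwr, 0, sizeof(*bwr));
    bwr->write_size = len;
    bwr->write_buffer = (uintptr_t)data;
}

/* Read-only request, as each binder_loop iteration issues it. */
void bwr_prepare_read(struct bwr_model *bwr, void *buf, size_t len)
{
    assert(bwr != NULL);
    memset(bwr, 0, sizeof(*bwr));
    bwr->read_size = len;
    bwr->read_buffer = (uintptr_t)buf;
}
```

With write_size at 0 the driver skips its write branch, and with read_size at 0 it skips its read branch, so the caller selects the behaviour of a single ioctl purely through these sizes.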

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

......

switch (cmd) {

case BINDER_WRITE_READ:

// binder_ioctl_write_read handles BINDER_WRITE_READ

ret = binder_ioctl_write_read(filp, cmd, arg, thread);

if (ret)

goto err;

break;

......

}

......

}

Next, binder_ioctl_write_read:

static int binder_ioctl_write_read(struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

int ret = 0;

struct binder_proc *proc = filp->private_data;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg; // user-space address of bwr

struct binder_write_read bwr;

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto out;

}

// Copy the user-space bwr into kernel space

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

......

if (bwr.write_size > 0) {

// Process the command data sent from user space

ret = binder_thread_write(proc, thread,

bwr.write_buffer,

bwr.write_size,

&bwr.write_consumed);

trace_binder_write_done(ret);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

// read_size is 0 on this call, so the read branch is skipped

if (bwr.read_size > 0) {

ret = binder_thread_read(proc, thread, bwr.read_buffer,

bwr.read_size,

&bwr.read_consumed,

filp->f_flags & O_NONBLOCK);

trace_binder_read_done(ret);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

......

// Copy the kernel-side bwr back to user space

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

out:

return ret;

}

Next, see how binder_thread_write processes the command data.

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

// buffer holds the BC_ENTER_LOOPER command

// *consumed is 0

// size is 4

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error == BR_OK) {

// Read the command from the user-space address

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

trace_binder_command(cmd);

// Update the global, per-process and per-thread command statistics

if (_IOC_NR(cmd) < ARRAY_SIZE(binder_stats.bc)) {

binder_stats.bc[_IOC_NR(cmd)]++;

proc->stats.bc[_IOC_NR(cmd)]++;

thread->stats.bc[_IOC_NR(cmd)]++;

}

switch (cmd) {

......

// Handle BC_ENTER_LOOPER

case BC_ENTER_LOOPER:

binder_debug(BINDER_DEBUG_THREADS,

"%d:%d BC_ENTER_LOOPER\n",

proc->pid, thread->pid);

if (thread->looper & BINDER_LOOPER_STATE_REGISTERED) {

thread->looper |= BINDER_LOOPER_STATE_INVALID;

binder_user_error("%d:%d ERROR: BC_ENTER_LOOPER called after BC_REGISTER_LOOPER\n",

proc->pid, thread->pid);

}

// Set the ENTERED flag in the binder thread's looper state

thread->looper |= BINDER_LOOPER_STATE_ENTERED;

break;

......

}

*consumed = ptr - buffer;

}

return 0;

}
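The command loop of binder_thread_write can be modelled in user space to show how *consumed advances and how the looper flags change. This is a reduced sketch under assumptions: the CMD_ and LOOPER_ values are stand-ins rather than the real kernel constants, thread_write_model is a hypothetical name, memcpy plays the role of get_user, and the register-looper case is simplified to setting one flag.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Stand-in values, NOT the real BC_ and BINDER_LOOPER_STATE_ constants. */
enum { CMD_REGISTER_LOOPER = 1, CMD_ENTER_LOOPER = 2 };
enum {
    LOOPER_REGISTERED = 0x01,
    LOOPER_ENTERED    = 0x02,
    LOOPER_INVALID    = 0x08,
};

/*
 * Consume 32-bit commands from buf[*consumed .. size) and update *looper.
 * Returns 0 on success, -1 on an unknown command.
 */
int thread_write_model(const uint8_t *buf, size_t size, size_t *consumed,
                       unsigned *looper)
{
    const uint8_t *ptr, *end;

    assert(buf != NULL && consumed != NULL && looper != NULL);
    ptr = buf + *consumed;
    end = buf + size;

    while (ptr + sizeof(uint32_t) <= end) {
        uint32_t cmd;

        memcpy(&cmd, ptr, sizeof(cmd));
        ptr += sizeof(cmd);

        switch (cmd) {
        case CMD_REGISTER_LOOPER:
            *looper |= LOOPER_REGISTERED;
            break;
        case CMD_ENTER_LOOPER:
            /* Entering after registering marks the thread invalid. */
            if (*looper & LOOPER_REGISTERED)
                *looper |= LOOPER_INVALID;
            *looper |= LOOPER_ENTERED;
            break;
        default:
            return -1;
        }
        /* Record progress after every command that was handled. */
        *consumed = (size_t)(ptr - buf);
    }
    return 0;
}
```

Feeding it a 4-byte buffer that holds only CMD_ENTER_LOOPER leaves consumed at 4 and sets just the ENTERED flag, which matches the ServiceManager case traced above.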

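For BC_ENTER_LOOPER the binder driver only sets a flag in the binder thread's looper state and then returns to user space. Back in binder_loop, the next step is how the binder thread goes to sleep and waits for client requests.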

static int binder_ioctl_write_read(struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

int ret = 0;

struct binder_proc *proc = filp->private_data;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg; // user-space address of bwr

struct binder_write_read bwr;

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto out;

}

// Copy the user-space bwr into kernel space

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

......

// bwr.write_size is 0 this time, so the write branch is skipped

if (bwr.write_size > 0) {

ret = binder_thread_write(proc, thread,

bwr.write_buffer,

bwr.write_size,

&bwr.write_consumed);

trace_binder_write_done(ret);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

if (bwr.read_size > 0) {

// binder_thread_read delivers pending requests; if there are none, the binder thread sleeps and waits

ret = binder_thread_read(proc, thread, bwr.read_buffer,

bwr.read_size,

&bwr.read_consumed,

filp->f_flags & O_NONBLOCK);

trace_binder_read_done(ret);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

......

}

Next, binder_thread_read:

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

// User-space read_buffer

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

if (*consumed == 0) {

// Put a BR_NOOP return command at the start of read_buffer

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

retry:

// The thread has no work of its own: no transaction stack and an empty todo list

wait_for_proc_work = thread->transaction_stack == NULL &&

list_empty(&thread->todo);

......

// Mark the binder thread as waiting in its looper state

thread->looper |= BINDER_LOOPER_STATE_WAITING;

if (wait_for_proc_work)

// Increment the count of idle binder threads in binder_proc

proc->ready_threads++;

binder_unlock(__func__);

trace_binder_wait_for_work(wait_for_proc_work,

!!thread->transaction_stack,

!list_empty(&thread->todo));

if (wait_for_proc_work) {

if (!(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |

BINDER_LOOPER_STATE_ENTERED))) {

binder_user_error("%d:%d ERROR: Thread waiting for process work before calling BC_REGISTER_LOOPER or BC_ENTER_LOOPER (state %x)\n",

proc->pid, thread->pid, thread->looper);

wait_event_interruptible(binder_user_error_wait,

binder_stop_on_user_error < 2);

}

binder_set_nice(proc->default_priority);

if (non_block) {

if (!binder_has_proc_work(proc, thread))

ret = -EAGAIN;

} else

// The binder thread sleeps until binder_has_proc_work(proc, thread) becomes true

ret = wait_event_freezable_exclusive(proc->wait, binder_has_proc_work(proc, thread));

} else {

......

}

......

}
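The first steps of binder_thread_read, before the thread actually sleeps, can be modelled as a small pure function. This is a sketch under assumptions: struct thread_model, struct proc_model and thread_read_prologue are hypothetical names, RET_NOOP is a stand-in value rather than the real BR_NOOP, and unlike the driver the model updates *consumed immediately instead of at the end of the call.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { RET_NOOP = 0x720c };     /* stand-in value, not the real BR_NOOP */

/* Hypothetical, heavily reduced thread and process state. */
struct thread_model {
    bool has_transaction_stack;
    size_t todo_len;            /* entries on the thread's own todo list */
};

struct proc_model {
    int ready_threads;          /* idle threads waiting for process work */
};

/*
 * Emit the leading no-op into an empty read buffer, then decide whether
 * the thread should wait for process-wide work. Returns that decision.
 */
bool thread_read_prologue(struct proc_model *proc,
                          const struct thread_model *thread,
                          uint8_t *buf, size_t size, size_t *consumed)
{
    bool wait_for_proc_work;

    assert(proc != NULL && thread != NULL && buf != NULL && consumed != NULL);

    if (*consumed == 0 && size >= sizeof(uint32_t)) {
        uint32_t noop = RET_NOOP;

        memcpy(buf, &noop, sizeof(noop));   /* plays the role of put_user */
        *consumed = sizeof(noop);
    }

    /* Only a thread with nothing of its own to do waits on the process. */
    wait_for_proc_work = !thread->has_transaction_stack &&
                         thread->todo_len == 0;
    if (wait_for_proc_work)
        proc->ready_threads++;

    return wait_for_proc_work;
}
```

For the ServiceManager thread at this point both conditions hold, so the model returns true and counts one more ready thread, matching the path in the listing that ends in wait_event_freezable_exclusive.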

Obtaining the native-layer ServiceManager proxy object (BpServiceManager)

See the companion article on binder service registration (binder之服务注册).