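For deployment and the underlying principles, please search on your own. What follows is my operation log and my approach to troubleshooting the problems I ran into.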

This section matters a great deal for everything that follows!

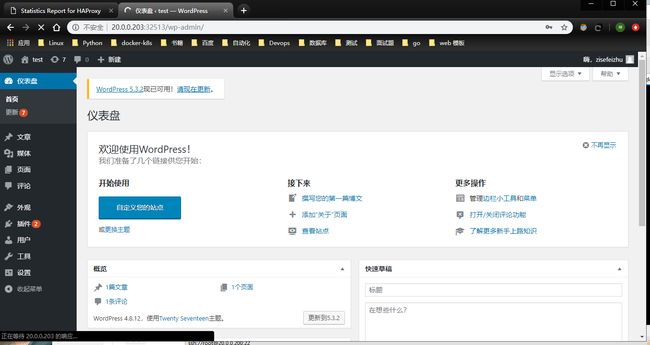

    [root@bs-k8s-ceph ~]# ceph -s
      cluster:
        id:     11880418-1a9a-4b55-a353-4b141e2199d8
        health: HEALTH_OK

      services:
        mon: 3 daemons, quorum bs-hk-hk01,bs-hk-hk02,bs-k8s-ceph
        mgr: bs-hk-hk02(active), standbys: bs-hk-hk01, bs-k8s-ceph
        osd: 6 osds: 6 up, 6 in

      data:
        pools:   3 pools, 384 pgs
        objects: 0 objects, 0 B
        usage:   6.1 GiB used, 108 GiB / 114 GiB avail
        pgs:     384 active+clean
    [root@bs-k8s-ceph ~]# ceph osd lspools
    2020-02-14 08:44:14.362 7f23e0cc1700 0 -- 20.0.0.208:0/750273184 >> 20.0.0.207:6809/1207 conn(0x7f23c4003d50 :-1 s=STATE_CONNECTING_WAIT_CONNECT_REPLY_AUTH pgs=0 cs=0 l=1).handle_connect_reply connect got BADAUTHORIZER
    2020-02-14 08:44:14.786 7f23e0cc1700 0 -- 20.0.0.208:0/750273184 >> 20.0.0.207:6809/1207 conn(0x7f23c4003d50 :-1 s=STATE_CONNECTING_WAIT_CONNECT_REPLY_AUTH pgs=0 cs=0 l=1).handle_connect_reply connect got BADAUTHORIZER
    1 rbd
    2 data
    3 metadata
    [root@bs-k8s-ceph ~]# systemctl restart ceph.target
    [root@bs-hk-hk01 ~]# systemctl restart ceph.target
    [root@bs-hk-hk02 ~]# systemctl restart ceph.target
    [root@bs-k8s-ceph ~]# ceph osd lspools
    1 rbd
    2 data
    3 metadata
    [root@bs-k8s-ceph ~]# ceph osd pool rm rbd rbd
    Error EPERM: WARNING: this will *PERMANENTLY DESTROY* all data stored in pool rbd. If you are *ABSOLUTELY CERTAIN* that is what you want, pass the pool name *twice*, followed by --yes-i-really-really-mean-it.
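
As a rough sketch of the placement-group arithmetic behind `ceph -s`: the output above reports 384 PGs on 6 OSDs, and the `too few PGs per OSD (16 < min 30)` warning further down appears once only a single pool with 32 PGs is left. The function name and the replica size of 3 are assumptions made for illustration. The pool size is not shown anywhere in this log, although 32 × 3 / 6 = 16 is consistent with that warning.

```python
def pg_replicas_per_osd(pg_num: int, replica_size: int, osd_count: int) -> float:
    """Average number of PG replicas that each OSD carries."""
    return pg_num * replica_size / osd_count


# Assumption: replicated pools with size 3 (not shown in this log).
REPLICA_SIZE = 3
# From the log: "osd: 6 osds: 6 up, 6 in".
OSD_COUNT = 6

# 384 = the three original pools, 32 = the recreated rbd pool,
# 128 = the rbd pool after pg_num/pgp_num are raised.
for pg_num in (384, 32, 128):
    per_osd = pg_replicas_per_osd(pg_num, REPLICA_SIZE, OSD_COUNT)
    print(f"pg_num={pg_num}: about {per_osd:.0f} PG replicas per OSD")
```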
    [root@bs-k8s-ceph ~]# ceph osd pool rm rbd rbd --yes-i-really-really-mean-it
    Error EPERM: pool deletion is disabled; you must first set the mon_allow_pool_delete config option to true before you can destroy a pool
    [root@bs-k8s-ceph ceph]# cp ceph.conf ceph.conf_`date '+%Y%m%d%H%M%S'`
    [root@bs-k8s-ceph ceph]# ll
    -rw-r--r-- 1 root root 335 Feb 11 17:48 ceph.conf
    -rw-r--r-- 1 root root 335 Feb 14 08:51 ceph.conf_20200214085110
    [root@bs-k8s-ceph ceph]# diff ceph.conf ceph.conf_20200214085110
    14,15d13
    < [mon]
    < mon_allow_pool_delete = true
    [root@bs-k8s-ceph ceph]# ceph-deploy --overwrite-conf config push bs-k8s-ceph bs-hk-hk01 bs-hk-hk02
    [root@bs-k8s-ceph ceph]# systemctl restart ceph-mon.target
    [root@bs-hk-hk01 ceph]# systemctl restart ceph-mon.target
    [root@bs-hk-hk02 ceph]# systemctl restart ceph-mon.target

    [root@bs-k8s-ceph ceph]# ceph osd pool rm rbd rbd --yes-i-really-really-mean-it
    pool 'rbd' removed
    [root@bs-k8s-ceph ceph]# ceph osd pool rm data data --yes-i-really-really-mean-it
    pool 'data' removed
    [root@bs-k8s-ceph ceph]# ceph osd pool rm metadata metadata --yes-i-really-really-mean-it
    pool 'metadata' removed

    [root@bs-k8s-ceph ceph]# ceph osd pool create rbd 32
    pool 'rbd' created
    [root@bs-k8s-ceph ceph]# ceph osd lspools
    4 rbd
    [root@bs-k8s-ceph ceph]# rbd create ceph-image -s 1G --image-feature layering
    [root@bs-k8s-ceph ceph]# rbd ls
    ceph-image
    [root@bs-k8s-ceph ceph]# ceph -s
      cluster:
        id:     11880418-1a9a-4b55-a353-4b141e2199d8
        health: HEALTH_WARN
                application not enabled on 1 pool(s)
                too few PGs per OSD (16 < min 30)

      services:
        mon: 3 daemons, quorum bs-hk-hk01,bs-hk-hk02,bs-k8s-ceph
        mgr: bs-hk-hk02(active), standbys: bs-hk-hk01, bs-k8s-ceph
        osd: 6 osds: 6 up, 6 in

      data:
        pools:   1 pools, 32 pgs
        objects: 4 objects, 36 B
        usage:   6.1 GiB used, 108 GiB / 114 GiB avail
        pgs:     32 active+clean

    [root@bs-k8s-ceph ceph]# ceph osd pool application enable rbd ceph-image
    enabled application 'ceph-image' on pool 'rbd'
    [root@bs-k8s-ceph ceph]# ceph -s
      cluster:
        id:     11880418-1a9a-4b55-a353-4b141e2199d8
        health: HEALTH_WARN
                too few PGs per OSD (16 < min 30)

      services:
        mon: 3 daemons, quorum bs-hk-hk01,bs-hk-hk02,bs-k8s-ceph
        mgr: bs-hk-hk02(active), standbys: bs-hk-hk01, bs-k8s-ceph
        osd: 6 osds: 6 up, 6 in

      data:
        pools:   1 pools, 32 pgs
        objects: 4 objects, 36 B
        usage:   6.1 GiB used, 108 GiB / 114 GiB avail
        pgs:     32 active+clean

    [root@bs-k8s-ceph ceph]# ceph osd pool get rbd pg_num
    pg_num: 32
    [root@bs-k8s-ceph ceph]# ceph osd pool set rbd pg_num 128
    set pool 4 pg_num to 128
    [root@bs-k8s-ceph ceph]# ceph -s
      cluster:
        id:     11880418-1a9a-4b55-a353-4b141e2199d8
        health: HEALTH_WARN
                Reduced data availability: 25 pgs peering
                1 pools have pg_num > pgp_num

      services:
        mon: 3 daemons, quorum bs-hk-hk01,bs-hk-hk02,bs-k8s-ceph
        mgr: bs-hk-hk02(active), standbys: bs-hk-hk01, bs-k8s-ceph
        osd: 6 osds: 6 up, 6 in

      data:
        pools:   1 pools, 128 pgs
        objects: 3 objects, 36 B
        usage:   6.1 GiB used, 108 GiB / 114 GiB avail
        pgs:     16.406% pgs unknown
                 51.562% pgs not active
                 41 active+clean
                 41 activating
                 25 peering
                 21 unknown

    [root@bs-k8s-ceph ceph]# ceph osd pool set rbd pgp_num 128
    set pool 4 pgp_num to 128
    [root@bs-k8s-ceph ceph]# ceph -s
      cluster:
        id:     11880418-1a9a-4b55-a353-4b141e2199d8
        health: HEALTH_WARN
                Reduced data availability: 25 pgs inactive, 62 pgs peering

      services:
        mon: 3 daemons, quorum bs-hk-hk01,bs-hk-hk02,bs-k8s-ceph
        mgr: bs-hk-hk02(active), standbys: bs-hk-hk01, bs-k8s-ceph
        osd: 6 osds: 6 up, 6 in

      data:
        pools:   1 pools, 128 pgs
        objects: 2 objects, 9 B
        usage:   6.1 GiB used, 108 GiB / 114 GiB avail
        pgs:     57.812% pgs not active
                 74 peering
                 54 active+clean

    [root@bs-k8s-ceph ceph]# ceph -s
      cluster:
        id:     11880418-1a9a-4b55-a353-4b141e2199d8
        health: HEALTH_OK

      services:
        mon: 3 daemons, quorum bs-hk-hk01,bs-hk-hk02,bs-k8s-ceph
        mgr: bs-hk-hk02(active), standbys: bs-hk-hk01, bs-k8s-ceph
        osd: 6 osds: 6 up, 6 in

      data:
        pools:   1 pools, 128 pgs
        objects: 5 objects, 27 B
        usage:   6.1 GiB used, 108 GiB / 114 GiB avail
        pgs:     128 active+clean

    [root@bs-k8s-ceph ceph]# rbd info ceph-image
    rbd image 'ceph-image':
        size 1 GiB in 256 objects
        order 22 (4 MiB objects)
        id: 910d66b8b4567
        block_name_prefix: rbd_data.910d66b8b4567
        format: 2
        features: layering
        op_features:
        flags:
        create_timestamp: Fri Feb 14 09:01:13 2020
    [root@bs-k8s-node01 ~]# grep key /etc/ceph/ceph.client.admin.keyring |awk '{printf "%s", $NF}'|base64
    QVFDNmNVSmV2eU8yRnhBQVBxYzE5Mm5PelNnZk5acmg5aEFQYXc9PQ==
    [root@bs-k8s-master01 ~]# cd /data/k8s/yaml/
    [root@bs-k8s-master01 yaml]# mkdir k8s_ceph
    [root@bs-k8s-master01 yaml]# cd k8s_ceph/
    [root@bs-k8s-master01 k8s_ceph]# mkdir static
    [root@bs-k8s-master01 k8s_ceph]# cd static/
    [root@bs-k8s-master01 static]# mkdir rbd
    [root@bs-k8s-master01 static]# cd rbd/
    [root@bs-k8s-master01 ~]# kubectl explain secret
    [root@bs-k8s-master01 rbd]# vim ceph-secret.yaml
    [root@bs-k8s-master01 rbd]# kubectl apply -f ceph-secret.yaml
    secret/ceph-secret created
    [root@bs-k8s-master01 rbd]# kubectl get secrets ceph-secret
    NAME          TYPE                DATA   AGE
    ceph-secret   kubernetes.io/rbd   1      12s
    [root@bs-k8s-master01 rbd]# cat ceph-secret.yaml
    ##########################################################################
    #Author: zisefeizhu
    #QQ: 2********0
    #Date: 2020-02-14
    #FileName: ceph-secret.yaml
    #URL: https://www.cnblogs.com/zisefeizhu/
    #Description: The test script
    #Copyright (C): 2020 All rights reserved
    ###########################################################################
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-secret
    type: kubernetes.io/rbd
    data:
      key: QVFDNmNVSmV2eU8yRnhBQVBxYzE5Mm5PelNnZk5acmg5aEFQYXc9PQ==
    [root@bs-k8s-master01 ~]# kubectl explain pv
    [root@bs-k8s-master01 rbd]# vim ceph-pv.yaml
    [root@bs-k8s-master01 rbd]# kubectl apply -f ceph-pv.yaml
    persistentvolume/ceph-pv created
    [root@bs-k8s-master01 rbd]# kubectl get pv
    NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
    ceph-pv   1Gi        RWO            Recycle          Available           rbd                     6s
    [root@bs-k8s-master01 rbd]# cat ceph-pv.yaml
    ##########################################################################
    #Author: zisefeizhu
    #QQ: 2********0
    #Date: 2020-02-14
    #FileName: ceph-pv.yaml
    #URL: https://www.cnblogs.com/zisefeizhu/
    #Description: The test script
    #Copyright (C): 2020 All rights reserved
    ###########################################################################
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: ceph-pv
    spec:
      capacity:
        storage: 1Gi
      accessModes:
        - ReadWriteOnce
      storageClassName: "rbd"
      rbd:
        monitors:
          - 20.0.0.206:6789
          - 20.0.0.207:6789
          - 20.0.0.208:6789
        pool: rbd
        image: ceph-image
        user: admin
        secretRef:
          name: ceph-secret
        fsType: xfs
        readOnly: false
      persistentVolumeReclaimPolicy: Recycle
    [root@bs-k8s-master01 ~]# kubectl explain pvc
    [root@bs-k8s-master01 rbd]# vim ceph-claim.yaml
    [root@bs-k8s-master01 rbd]# kubectl apply -f ceph-claim.yaml
    persistentvolumeclaim/ceph-claim created
    [root@bs-k8s-master01 rbd]# kubectl get pvc ceph-claim
    NAME         STATUS   VOLUME    CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    ceph-claim   Bound    ceph-pv   1Gi        RWO            rbd            17s
    [root@bs-k8s-master01 rbd]# cat ceph-claim.yaml
    ##########################################################################
    #Author: zisefeizhu
    #QQ: 2********0
    #Date: 2020-02-14
    #FileName: ceph-claim.yaml
    #URL: https://www.cnblogs.com/zisefeizhu/
    #Description: The test script
    #Copyright (C): 2020 All rights reserved
    ###########################################################################
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: ceph-claim
    spec:
      storageClassName: "rbd"
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
    [root@bs-k8s-master01 rbd]# kubectl get pv
    NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS   REASON   AGE
    ceph-pv   1Gi        RWO            Recycle          Bound    default/ceph-claim   rbd                     5m6s
    [root@bs-k8s-master01 ~]# kubectl explain pod
    [root@bs-k8s-master01 rbd]# vim ceph-pod1.yaml
    [root@bs-k8s-master01 rbd]# kubectl apply -f ceph-pod1.yaml
    pod/ceph-pod1 created
    [root@bs-k8s-master01 rbd]# kubectl get pod ceph-pod1 -o wide
    NAME        READY   STATUS              RESTARTS   AGE   IP       NODE            NOMINATED NODE   READINESS GATES
    ceph-pod1   0/1     ContainerCreating   0          24s   <none>   bs-k8s-node01   <none>           <none>
    [root@bs-k8s-master01 rbd]# kubectl describe pod ceph-pod1
    Events:
      Type     Reason                  Age        From                     Message
      ----     ------                  ----       ----                     -------
      Normal   Scheduled               <unknown>  default-scheduler        Successfully assigned default/ceph-pod1 to bs-k8s-node01
      Normal   SuccessfulAttachVolume  91s        attachdetach-controller  AttachVolume.Attach succeeded for volume "ceph-pv"
      Warning  FailedMount             27s        kubelet, bs-k8s-node01   MountVolume.WaitForAttach failed for volume "ceph-pv" : rbd: map failed exit status 110, rbd output: rbd: sysfs write failed
    In some cases useful info is found in syslog - try "dmesg | tail".
    rbd: map failed: (110) Connection timed out
    [root@bs-k8s-node01 ~]# dmesg | tail
    [ 6885.200084] libceph: mon1 20.0.0.207:6789 feature set mismatch, my 106b84a842a42 < server's 40106b84a842a42, missing 400000000000000
    [ 6885.202581] libceph: mon1 20.0.0.207:6789 missing required protocol features
    [ 6895.233148] libceph: mon1 20.0.0.207:6789 feature set mismatch, my 106b84a842a42 < server's 40106b84a842a42, missing 400000000000000
    [ 6895.234731] libceph: mon1 20.0.0.207:6789 missing required protocol features
    [ 6915.160936] libceph: mon2 20.0.0.208:6789 feature set mismatch, my 106b84a842a42 < server's 40106b84a842a42, missing 400000000000000
    [ 6915.162590] libceph: mon2 20.0.0.208:6789 missing required protocol features
    [ 6925.523935] libceph: mon0 20.0.0.206:6789 feature set mismatch, my 106b84a842a42 < server's 40106b84a842a42, missing 400000000000000
    [ 6925.525880] libceph: mon0 20.0.0.206:6789 missing required protocol features
    [ 6935.197796] libceph: mon1 20.0.0.207:6789 feature set mismatch, my 106b84a842a42 < server's 40106b84a842a42, missing 400000000000000
    [ 6935.221446] libceph: mon1 20.0.0.207:6789 missing required protocol features

This message appears because the kernel on the machine doing the mapping is too old to support feature flag 400000000000000; the map only succeeds on machines with kernel >= 4.5.

Ceph clusters newer than v10.0.2 (Jewel) require this 400000000000000 feature. It can be turned off manually.

Ceph nodes: bs-k8s-ceph bs-hk-hk01 bs-hk-hk02

    [root@bs-k8s-ceph ceph]# ceph osd crush tunables hammer
    adjusted tunables profile to hammer
    [root@bs-k8s-master01 rbd]# kubectl delete -f ceph-pod1.yaml
    pod "ceph-pod1" deleted
    [root@bs-k8s-master01 rbd]# kubectl create -f ceph-pod1.yaml
    pod/ceph-pod1 created
    [root@bs-k8s-master01 rbd]# kubectl get pod ceph-pod1 -o wide
    NAME        READY   STATUS    RESTARTS   AGE    IP             NODE            NOMINATED NODE   READINESS GATES
    ceph-pod1   1/1     Running   0          104s   10.209.145.5   bs-k8s-node02   <none>           <none>
    [root@bs-k8s-master01 rbd]# kubectl exec -it ceph-pod1 -- /bin/sh
    / # cd /usr/share/busybox/
    /usr/share/busybox # echo 'Test static storage by zisefeizhu' > test.txt
    /usr/share/busybox # exit
    [root@bs-k8s-master01 rbd]# kubectl delete -f ceph-pod1.yaml
    pod "ceph-pod1" deleted
    [root@bs-k8s-master01 rbd]# kubectl apply -f ceph-pod1.yaml
    pod/ceph-pod1 created
    [root@bs-k8s-master01 rbd]# kubectl exec ceph-pod1 -- cat /usr/share/busybox/test.txt
    Test static storage by zisefeizhu
    [root@bs-k8s-master01 rbd]# kubectl delete -f .
    persistentvolumeclaim "ceph-claim" deleted
    pod "ceph-pod1" deleted
    persistentvolume "ceph-pv" deleted
    secret "ceph-secret" deleted


    [root@bs-k8s-node01 ~]# ceph osd pool create k8s 64 64
    pool 'k8s' created
    [root@bs-k8s-node01 ~]# ceph osd pool ls
    rbd
    k8s
    [root@bs-k8s-node01 ~]# ceph -s
      cluster:
        id:     11880418-1a9a-4b55-a353-4b141e2199d8
        health: HEALTH_OK

      services:
        mon: 3 daemons, quorum bs-hk-hk01,bs-hk-hk02,bs-k8s-ceph
        mgr: bs-hk-hk02(active), standbys: bs-hk-hk01, bs-k8s-ceph
        osd: 6 osds: 6 up, 6 in

      data:
        pools:   2 pools, 192 pgs
        objects: 72 objects, 237 MiB
        usage:   6.8 GiB used, 107 GiB / 114 GiB avail
        pgs:     192 active+clean
    [root@bs-k8s-master01 k8s_ceph]# pwd
    /data/k8s/yaml/k8s_ceph
    [root@bs-k8s-master01 k8s_ceph]# mkdir dynamic
    [root@bs-k8s-master01 k8s_ceph]# cd dynamic/
    [root@bs-k8s-node01 ~]# ceph auth get-key client.admin | base64
    QVFDNmNVSmV2eU8yRnhBQVBxYzE5Mm5PelNnZk5acmg5aEFQYXc9PQ==

When a StorageClass is used to create PVs dynamically, controller-manager automatically creates the image on Ceph, so the rbd command has to be available to it.

If the cluster was deployed with kubeadm, the official controller-manager image does not contain the rbd command, so an external setup has to be brought in.

git clone https://github.com/xiaotech/ceph-pvc (I simply downloaded the archive directly here.)

    [root@bs-k8s-master01 dynamic]# ll
    total 11392
    -rw-r--r-- 1 root root 11662216 Feb 13 16:10 external-storage-master.zip
    [root@bs-k8s-master01 dynamic]# unzip external-storage-master.zip
    [root@bs-k8s-master01 dynamic]# cd external-storage-master/
    [root@bs-k8s-master01 external-storage-master]# ls
    aws                 CONTRIBUTING.md  flex        Gopkg.toml  LICENSE       nfs         OWNERS      repo-infra         test.sh
    ceph                deploy.sh        gluster     hack        local-volume  nfs-client  README.md   SECURITY_CONTACTS  unittests.sh
    code-of-conduct.md  digitalocean     Gopkg.lock  iscsi       Makefile      openebs     RELEASE.md  snapshot           vendor
    [root@bs-k8s-master01 external-storage-master]# cd ceph/
    [root@bs-k8s-master01 ceph]# ls
    cephfs  rbd
    [root@bs-k8s-master01 ceph]# cd rbd/
    [root@bs-k8s-master01 rbd]# ls
    CHANGELOG.md  cmd  deploy  Dockerfile  Dockerfile.release  examples  local-start.sh  Makefile  OWNERS  pkg  README.md
    [root@bs-k8s-master01 rbd]# cd deploy/
    [root@bs-k8s-master01 deploy]# ls
    non-rbac  rbac  README.md
    [root@bs-k8s-master01 deploy]# cd rbac/
    [root@bs-k8s-master01 rbac]# ls
    clusterrolebinding.yaml  clusterrole.yaml  deployment.yaml  rolebinding.yaml  role.yaml  serviceaccount.yaml

I skimmed the six YAML files above to check the configuration. Nothing looked wrong, so let's apply them and fix problems as they come up.

    [root@bs-k8s-master01 rbac]# kubectl apply -f .
    clusterrole.rbac.authorization.k8s.io/rbd-provisioner created
    clusterrolebinding.rbac.authorization.k8s.io/rbd-provisioner created
    deployment.apps/rbd-provisioner created
    role.rbac.authorization.k8s.io/rbd-provisioner created
    rolebinding.rbac.authorization.k8s.io/rbd-provisioner created
    serviceaccount/rbd-provisioner created
    [root@bs-k8s-master01 rbd]# pwd
    /data/k8s/yaml/k8s_ceph/dynamic/rbd
    [root@bs-k8s-master01 ~]# kubectl explain storageclass
    [root@bs-k8s-master01 rbd]# kubectl apply -f storage_class.yaml
    storageclass.storage.k8s.io/ceph-sc created
    [root@bs-k8s-master01 rbd]# kubectl get storageclasses.storage.k8s.io
    NAME      PROVISIONER    RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    ceph-sc   ceph.com/rbd   Retain          Immediate           false                  21s
    [root@bs-k8s-master01 rbd]# cat storage_class.yaml
    ##########################################################################
    #Author: zisefeizhu
    #QQ: 2********0
    #Date: 2020-02-14
    #FileName: storage_class.yaml
    #URL: https://www.cnblogs.com/zisefeizhu/
    #Description: The test script
    #Copyright (C): 2020 All rights reserved
    ###########################################################################
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: ceph-sc
      namespace: default
      annotations:
        storageclass.kubernetes.io/is-default-class: "false"
    provisioner: ceph.com/rbd
    reclaimPolicy: Retain
    parameters:
      monitors: 20.0.0.206:6789,20.0.0.207:6789,20.0.0.208:6789
      adminId: admin
      adminSecretName: storage-secret
      adminSecretNamespace: default
      pool: k8s
      fsType: xfs
      userId: admin
      userSecretName: storage-secret
      imageFormat: "2"
      imageFeatures: "layering"
    [root@bs-k8s-master01 rbd]# kubectl apply -f storage_secret.yaml
    secret/storage-secret created
    [root@bs-k8s-master01 rbd]# kubectl get secrets
    NAME                          TYPE                                  DATA   AGE
    default-token-qx5c2           kubernetes.io/service-account-token   3      4d23h
    rbd-provisioner-token-62j7s   kubernetes.io/service-account-token   3      13m
    storage-secret                kubernetes.io/rbd                     1      16s
    [root@bs-k8s-master01 rbd]# kubectl apply -f storage_pvc.yaml
    persistentvolumeclaim/ceph-pvc created
    [root@bs-k8s-master01 rbd]# kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM              STORAGECLASS   REASON   AGE
    pvc-5abb701f-b970-4893-a611-1858229ff96a   1Gi        RWO            Retain           Bound    default/ceph-pvc   ceph-sc                 2s
    [root@bs-k8s-master01 rbd]# kubectl get pvc
    NAME       STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    ceph-pvc   Bound    pvc-5abb701f-b970-4893-a611-1858229ff96a   1Gi        RWO            ceph-sc        18s
    [root@bs-k8s-master01 rbd]# cat storage_pvc.yaml
    ##########################################################################
    #Author: zisefeizhu
    #QQ: 2********0
    #Date: 2020-02-14
    #FileName: storage_pvc.yaml
    #URL: https://www.cnblogs.com/zisefeizhu/
    #Description: The test script
    #Copyright (C): 2020 All rights reserved
    ###########################################################################
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: ceph-pvc
      namespace: default
    spec:
      storageClassName: ceph-sc
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
    [root@bs-k8s-master01 rbd]# kubectl apply -f storage_pod.yaml
    pod/ceph-pod1 created
    [root@bs-k8s-master01 rbd]# kubectl get pods -o wide
    NAME                               READY   STATUS    RESTARTS   AGE   IP             NODE            NOMINATED NODE   READINESS GATES
    ceph-pod1                          1/1     Running   0          60s   10.209.208.7   bs-k8s-node03   <none>           <none>
    rbd-provisioner-75b85f85bd-n52q5   1/1     Running   0          23m   10.209.46.67   bs-k8s-node01   <none>           <none>
    [root@bs-k8s-master01 rbd]# cat storage_pod.yaml
    ##########################################################################
    #Author: zisefeizhu
    #QQ: 2********0
    #Date: 2020-02-14
    #FileName: storage_pod.yaml
    #URL: https://www.cnblogs.com/zisefeizhu/
    #Description: The test script
    #Copyright (C): 2020 All rights reserved
    ###########################################################################
    apiVersion: v1
    kind: Pod
    metadata:
      name: ceph-pod1
    spec:
      nodeName: bs-k8s-node03
      containers:
        - name: ceph-busybox
          image: busybox
          command: ["sleep","60000"]
          volumeMounts:
            - name: ceph-vol2
              mountPath: /usr/share/busybox
              readOnly: false
      volumes:
        - name: ceph-vol2
          persistentVolumeClaim:
            claimName: ceph-pvc
    [root@bs-k8s-master01 rbd]# kubectl exec -it ceph-pod1 -- /bin/sh
    / # echo 'Test dynamic storage by zisefeizhu' > /usr/share/busybox/ceph.txt
    / # exit
    [root@bs-k8s-master01 rbd]# kubectl delete -f storage_pod.yaml
    pod "ceph-pod1" deleted
    [root@bs-k8s-master01 rbd]# vim storage_pod.yaml
    [root@bs-k8s-master01 rbd]# kubectl apply -f storage_pod.yaml
    pod/ceph-pod1 created
    [root@bs-k8s-master01 rbd]# kubectl get pods -o wide
    NAME                               READY   STATUS              RESTARTS   AGE   IP             NODE            NOMINATED NODE   READINESS GATES
    ceph-pod1                          0/1     ContainerCreating   0          9s    <none>         bs-k8s-node02   <none>           <none>
    rbd-provisioner-75b85f85bd-n52q5   1/1     Running             0          32m   10.209.46.67   bs-k8s-node01   <none>           <none>
    [root@bs-k8s-master01 rbd]# kubectl get pods -o wide
    NAME                               READY   STATUS    RESTARTS   AGE   IP             NODE            NOMINATED NODE   READINESS GATES
    ceph-pod1                          1/1     Running   0          32s   10.209.145.7   bs-k8s-node02   <none>           <none>
    rbd-provisioner-75b85f85bd-n52q5   1/1     Running   0          32m   10.209.46.67   bs-k8s-node01   <none>           <none>
    [root@bs-k8s-ceph ceph]# rbd -p k8s ls
    kubernetes-dynamic-pvc-74f37c8a-4ecf-11ea-af16-763550eb2d21
    [root@bs-k8s-master01 rbd]# kubectl exec ceph-pod1 -- cat /usr/share/busybox/ceph.txt
    Test dynamic storage by zisefeizhu
    [root@bs-k8s-master01 rbd]# cat storage_pod.yaml
    ##########################################################################
    #Author: zisefeizhu
    #QQ: 2********0
    #Date: 2020-02-14
    #FileName: storage_pod.yaml
    #URL: https://www.cnblogs.com/zisefeizhu/
    #Description: The test script
    #Copyright (C): 2020 All rights reserved
    ###########################################################################
    apiVersion: v1
    kind: Pod
    metadata:
      name: ceph-pod1
    spec:
      nodeName: bs-k8s-node02
      containers:
        - name: ceph-busybox
          image: busybox
          command: ["sleep","60000"]
          volumeMounts:
            - name: ceph-vol2
              mountPath: /usr/share/busybox
              readOnly: false
      volumes:
        - name: ceph-vol2
          persistentVolumeClaim:
            claimName: ceph-pvc
    [root@bs-k8s-ceph ceph]# rbd status k8s/kubernetes-dynamic-pvc-74f37c8a-4ecf-11ea-af16-763550eb2d21
    Watchers:
        watcher=20.0.0.204:0/1688154193 client.594271 cookie=1
    [root@bs-k8s-ceph ceph]# ceph -s
      cluster:
        id:     11880418-1a9a-4b55-a353-4b141e2199d8
        health: HEALTH_WARN
                application not enabled on 1 pool(s)

      services:
        mon: 3 daemons, quorum bs-hk-hk01,bs-hk-hk02,bs-k8s-ceph
        mgr: bs-hk-hk02(active), standbys: bs-hk-hk01, bs-k8s-ceph
        osd: 6 osds: 6 up, 6 in

      data:
        pools:   2 pools, 192 pgs
        objects: 88 objects, 252 MiB
        usage:   6.8 GiB used, 107 GiB / 114 GiB avail
        pgs:     192 active+clean

    [root@bs-k8s-ceph ceph]# rbd status k8s/kubernetes-dynamic-pvc-74f37c8a-4ecf-11ea-af16-763550eb2d21
    Watchers:
        watcher=20.0.0.204:0/1688154193 client.594271 cookie=1
    [root@bs-k8s-ceph ceph]# ceph osd pool application enable k8s rbd
    enabled application 'rbd' on pool 'k8s'
    [root@bs-k8s-ceph ceph]# ceph -s
      cluster:
        id:     11880418-1a9a-4b55-a353-4b141e2199d8
        health: HEALTH_OK

      services:
        mon: 3 daemons, quorum bs-hk-hk01,bs-hk-hk02,bs-k8s-ceph
        mgr: bs-hk-hk02(active), standbys: bs-hk-hk01, bs-k8s-ceph
        osd: 6 osds: 6 up, 6 in

      data:
        pools:   2 pools, 192 pgs
        objects: 88 objects, 252 MiB
        usage:   6.8 GiB used, 107 GiB / 114 GiB avail
        pgs:     192 active+clean

At this point Kubernetes is integrated with Ceph RBD for both static and dynamic storage. That is still not quite what I want, so below I change the dynamic storage setup to meet my needs.

    [root@bs-k8s-master01 rbd]# kubectl delete -f .
    storageclass.storage.k8s.io "ceph-sc" deleted
    pod "ceph-pod1" deleted
    persistentvolumeclaim "ceph-pvc" deleted
    secret "storage-secret" deleted
    [root@bs-k8s-master01 dynamic]# cd external-storage-master/ceph/rbd/deploy/rbac/
    [root@bs-k8s-master01 rbac]# kubectl delete -f .
    clusterrole.rbac.authorization.k8s.io "rbd-provisioner" deleted
    clusterrolebinding.rbac.authorization.k8s.io "rbd-provisioner" deleted
    deployment.apps "rbd-provisioner" deleted
    role.rbac.authorization.k8s.io "rbd-provisioner" deleted
    rolebinding.rbac.authorization.k8s.io "rbd-provisioner" deleted
    serviceaccount "rbd-provisioner" deleted
    [root@bs-k8s-ceph ceph]# ceph osd pool rm rbd rbd --yes-i-really-really-mean-it
    pool 'rbd' removed
    [root@bs-k8s-ceph ceph]# ceph osd pool rm k8s k8s --yes-i-really-really-mean-it
    pool 'k8s' removed

Everything above was done as the Ceph admin user. Now let's try it with a regular user.

    [root@bs-k8s-ceph ~]# ceph -s
    2020-02-14 15:43:59.295 7f0380dc4700 0 -- 20.0.0.208:0/2791728362 >> 20.0.0.206:6809/2365 conn(0x7f0364005090 :-1 s=STATE_CONNECTING_WAIT_CONNECT_REPLY_AUTH pgs=0 cs=0 l=1).handle_connect_reply connect got BADAUTHORIZER
    2020-02-14 15:43:59.670 7f0380dc4700 0 -- 20.0.0.208:0/2791728362 >> 20.0.0.206:6809/2365 conn(0x7f0364005090 :-1 s=STATE_CONNECTING_WAIT_CONNECT_REPLY_AUTH pgs=0 cs=0 l=1).handle_connect_reply connect got BADAUTHORIZER
      cluster:
        id:     11880418-1a9a-4b55-a353-4b141e2199d8
        health: HEALTH_OK

      services:
        mon: 3 daemons, quorum bs-hk-hk01,bs-hk-hk02,bs-k8s-ceph
        mgr: bs-hk-hk01(active), standbys: bs-k8s-ceph, bs-hk-hk02
        osd: 6 osds: 6 up, 6 in

      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   6.1 GiB used, 108 GiB / 114 GiB avail
        pgs:
    [root@bs-hk-hk01 ~]# systemctl restart ceph.target
    [root@bs-k8s-ceph ~]# ceph -s
      cluster:
        id:     11880418-1a9a-4b55-a353-4b141e2199d8
        health: HEALTH_OK

      services:
        mon: 3 daemons, quorum bs-hk-hk01,bs-hk-hk02,bs-k8s-ceph
        mgr: bs-hk-hk01(active), standbys: bs-k8s-ceph, bs-hk-hk02
        osd: 6 osds: 6 up, 6 in

      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   6.1 GiB used, 108 GiB / 114 GiB avail
        pgs:
    [root@bs-k8s-ceph ceph]# ceph osd pool create kube 128
    [root@bs-k8s-ceph ceph]# ceph auth get-or-create client.kube mon 'allow r' osd 'allow class-read, allow rwx pool=kube' -o ceph.client.kube.keyring
    [root@bs-k8s-ceph ceph]# ceph auth get client.kube
    exported keyring for client.kube
    [client.kube]
        key = AQCpU0ZeO0WkLBAAalZbyVaQQYMApOfFds9lng==
        caps mon = "allow r"
        caps osd = "allow class-read, allow rwx pool=kube"
    [root@bs-k8s-node01 ~]# ceph auth get-key client.admin | base64
    QVFDNmNVSmV2eU8yRnhBQVBxYzE5Mm5PelNnZk5acmg5aEFQYXc9PQ==
    [root@bs-k8s-node01 ~]# ceph auth get-key client.kube | base64
    QVFDcFUwWmVPMFdrTEJBQWFsWmJ5VmFRUVlNQXBPZkZkczlsbmc9PQ==
    [root@bs-k8s-master01 rbd01]# cat ceph-kube-secret.yaml
    ##########################################################################
    #Author: zisefeizhu
    #QQ: 2********0
    #Date: 2020-02-14
    #FileName: storage_secret.yaml
    #URL: https://www.cnblogs.com/zisefeizhu/
    #Description: The test script
    #Copyright (C): 2020 All rights reserved
    ###########################################################################
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-admin-secret
      namespace: default
    data:
      key: QVFDNmNVSmV2eU8yRnhBQVBxYzE5Mm5PelNnZk5acmg5aEFQYXc9PQ==
    type: kubernetes.io/rbd
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-kube-secret
      namespace: default
    data:
      key: QVFDcFUwWmVPMFdrTEJBQWFsWmJ5VmFRUVlNQXBPZkZkczlsbmc9PQ==
    type: kubernetes.io/rbd
    [root@bs-k8s-master01 rbd01]# kubectl apply -f ceph-kube-secret.yaml
    secret/ceph-admin-secret created
    secret/ceph-kube-secret created
    [root@bs-k8s-master01 rbd01]# kubectl get secret
    NAME                          TYPE                                  DATA   AGE
    ceph-admin-secret             kubernetes.io/rbd                     1      7s
    ceph-kube-secret              kubernetes.io/rbd                     1      6s
    default-token-qx5c2           kubernetes.io/service-account-token   3      5d22h
    rbd-provisioner-token-vjfk7   kubernetes.io/service-account-token   3      11m
    [root@bs-k8s-master01 rbd01]# cat ceph-storageclass.yaml
    ##########################################################################
    #Author: zisefeizhu
    #QQ: 2********0
    #Date: 2020-02-14
    #FileName: storage_class.yaml
    #URL: https://www.cnblogs.com/zisefeizhu/
    #Description: The test script
    #Copyright (C): 2020 All rights reserved
    ###########################################################################
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: ceph-rbd
      namespace: default
      annotations:
        storageclass.kubernetes.io/is-default-class: "false"
    provisioner: ceph.com/rbd
    reclaimPolicy: Retain
    parameters:
      monitors: 20.0.0.206:6789,20.0.0.207:6789,20.0.0.208:6789
      adminId: admin
      adminSecretName: ceph-admin-secret
      adminSecretNamespace: default
      pool: kube
      fsType: xfs
      userId: kube
      userSecretName: ceph-kube-secret
      imageFormat: "2"
      imageFeatures: "layering"
    [root@bs-k8s-master01 rbd01]# kubectl apply -f ceph-storageclass.yaml
    storageclass.storage.k8s.io/ceph-rbd created
    [root@bs-k8s-master01 rbd01]# kubectl get sc
    NAME       PROVISIONER    RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    ceph-rbd   ceph.com/rbd   Retain          Immediate           false                  4s

    [root@bs-k8s-master01 rbd01]# kubectl apply -f ceph-pvc.yaml
    persistentvolumeclaim/ceph-pvc created
    [root@bs-k8s-master01 rbd01]# kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM              STORAGECLASS   REASON   AGE
    pvc-c7af60b3-261d-4102-94ca-dccca9f081dc   1Gi        RWO            Retain           Bound    default/ceph-pvc   ceph-rbd                3s
    [root@bs-k8s-master01 rbd01]# kubectl get pvc
    NAME       STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    ceph-pvc   Bound    pvc-c7af60b3-261d-4102-94ca-dccca9f081dc   1Gi        RWO            ceph-rbd       8s
    [root@bs-k8s-master01 rbd01]# cat ceph-pvc.yaml
    ##########################################################################
    #Author: zisefeizhu
    #QQ: 2********0
    #Date: 2020-02-14
    #FileName: storage_pvc.yaml
    #URL: https://www.cnblogs.com/zisefeizhu/
    #Description: The test script
    #Copyright (C): 2020 All rights reserved
    ###########################################################################
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: ceph-pvc
      namespace: default
    spec:
      storageClassName: ceph-rbd
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi

    The PersistentVolumeClaim "ceph-pvc" is invalid: spec: Forbidden: is immutable after creation except resources.requests for bound claims
    [root@bs-k8s-master01 rbd]# fgrep -R "ceph-pvc" ./*
    ./storage_pod.yaml:        claimName: ceph-pvc
    ./storage_pvc.yaml:  name: ceph-pvc

A small experiment:

    [root@bs-k8s-master01 wm]# kubectl -n default create secret generic mysql-pass --from-literal=password=zisefeizhu
    secret/mysql-pass created

    [root@bs-k8s-master01 wm]# kubectl apply -f mysql-deployment.yaml
    service/wordpress-mysql created
    persistentvolumeclaim/mysql-pv-claim created
    deployment.apps/wordpress-mysql created
    [root@bs-k8s-master01 wm]# kubectl get pvc
    NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    ceph             Bound    pvc-3cbafafc-9b98-45e3-94b2-2badeaec3af2   1Gi        RWO            ceph-sc        10m
    ceph-pvc         Bound    pvc-59fb2bce-a8cf-466c-99b5-56f880f6807a   1Gi        RWO            ceph-rbd       12m
    mysql-pv-claim   Bound    pvc-453f1f97-8a7a-4bc1-8c8d-d75bdcafbf24   20Gi       RWO            ceph-rbd       9s
    [root@bs-k8s-master01 wm]# kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                    STORAGECLASS   REASON   AGE
    pvc-3cbafafc-9b98-45e3-94b2-2badeaec3af2   1Gi        RWO            Retain           Bound    default/ceph             ceph-sc                 11m
    pvc-453f1f97-8a7a-4bc1-8c8d-d75bdcafbf24   20Gi       RWO            Retain           Bound    default/mysql-pv-claim   ceph-rbd                19s
    pvc-59fb2bce-a8cf-466c-99b5-56f880f6807a   1Gi        RWO            Retain           Bound    default/ceph-pvc         ceph-rbd                12m
    [root@bs-k8s-master01 wm]# kubectl get pod -o wide
    NAME                               READY   STATUS    RESTARTS   AGE     IP              NODE            NOMINATED NODE   READINESS GATES
    ceph-pod1                          1/1     Running   0          15m     10.209.145.10   bs-k8s-node02   <none>           <none>
    rbd-provisioner-75b85f85bd-8ftdm   1/1     Running   0          49m     10.209.46.72    bs-k8s-node01   <none>           <none>
    wordpress-mysql-5b697dbbfc-dkrvm   1/1     Running   0          5m14s   10.209.208.8    bs-k8s-node03   <none>           <none>

    [root@bs-k8s-master01 wm]# cat mysql-deployment.yaml
    ##########################################################################
    #Author: zisefeizhu
    #QQ: 2********0
    #Date: 2020-02-15
    #FileName: mysql-deployment.yaml
    #URL: https://www.cnblogs.com/zisefeizhu/
    #Description: The test script
    #Copyright (C): 2020 All rights reserved
    ###########################################################################
    apiVersion: v1
    kind: Service
    metadata:
      name: wordpress-mysql
      namespace: default
      labels:
        app: wordpress
    spec:
      ports:
        - port: 3306
      selector:
        app: wordpress
        tier: mysql
      clusterIP: None
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mysql-pv-claim
      namespace: default
      labels:
        app: wordpress
    spec:
      storageClassName: ceph-rbd
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 20Gi
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: wordpress-mysql
      namespace: default
      labels:
        app: wordpress
    spec:
      selector:
        matchLabels:
          app: wordpress
          tier: mysql
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: wordpress
            tier: mysql
        spec:
          containers:
            - image: mysql:5.6
              name: mysql
              env:
                - name: MYSQL_ROOT_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: mysql-pass
                      key: password
              ports:
                - containerPort: 3306
                  name: mysql
              volumeMounts:
                - name: mysql-persistent-storage
                  mountPath: /var/lib/mysql
          volumes:
            - name: mysql-persistent-storage
              persistentVolumeClaim:
                claimName: mysql-pv-claim

    [root@bs-k8s-master01 wm]# kubectl apply -f wordpress.yaml
    service/wordpress created
    persistentvolumeclaim/wp-pv-claim created
    deployment.apps/wordpress created
    [root@bs-k8s-master01 wm]# kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                    STORAGECLASS   REASON   AGE
    pvc-3cbafafc-9b98-45e3-94b2-2badeaec3af2   1Gi        RWO            Retain           Bound    default/ceph             ceph-sc                 27m
    pvc-453f1f97-8a7a-4bc1-8c8d-d75bdcafbf24   20Gi       RWO            Retain           Bound    default/mysql-pv-claim   ceph-rbd                17m
    pvc-59fb2bce-a8cf-466c-99b5-56f880f6807a   1Gi        RWO            Retain           Bound    default/ceph-pvc         ceph-rbd                29m
    pvc-78c33394-2aac-4683-b5c6-424d4c2d6891   20Gi       RWO            Retain           Bound    default/wp-pv-claim      ceph-rbd                4s
    [root@bs-k8s-master01 wm]# kubectl get pvc
    NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    ceph             Bound    pvc-3cbafafc-9b98-45e3-94b2-2badeaec3af2   1Gi        RWO            ceph-sc        28m
    ceph-pvc         Bound    pvc-59fb2bce-a8cf-466c-99b5-56f880f6807a   1Gi        RWO            ceph-rbd       29m
    mysql-pv-claim   Bound    pvc-453f1f97-8a7a-4bc1-8c8d-d75bdcafbf24   20Gi       RWO            ceph-rbd       17m
    wp-pv-claim      Bound    pvc-78c33394-2aac-4683-b5c6-424d4c2d6891   20Gi       RWO            ceph-rbd       13s
    [root@bs-k8s-master01 wm]# kubectl get pods -o wide
    NAME                               READY   STATUS    RESTARTS   AGE     IP              NODE            NOMINATED NODE   READINESS GATES
    ceph-pod1                          1/1     Running   0          34m     10.209.145.10   bs-k8s-node02   <none>           <none>
    rbd-provisioner-75b85f85bd-8ftdm   1/1     Running   0          67m     10.209.46.72    bs-k8s-node01   <none>           <none>
    wordpress-578744754c-x7msj         1/1     Running   0          6m17s   10.209.145.11   bs-k8s-node02   <none>           <none>
    wordpress-mysql-5b697dbbfc-dkrvm   1/1     Running   0          23m     10.209.208.8    bs-k8s-node03   <none>           <none>
    [root@bs-k8s-master01 wm]# cat wordpress.yaml
    ##########################################################################
    #Author:
zisefeizhu 924 #QQ: 2********0 925 #Date: 2020-02-15 926 #FileName: wordpress.yaml 927 #URL: https://www.cnblogs.com/zisefeizhu/ 928 #Description: The test script 929 #Copyright (C): 2020 All rights reserved 930 ########################################################################### 931 apiVersion: v1 932 kind: Service 933 metadata: 934 name: wordpress 935 namespace: default 936 labels: 937 app: wordpress 938 spec: 939 ports: 940 - port: 80 941 selector: 942 app: wordpress 943 tier: frontend 944 type: LoadBalancer 945 --- 946 apiVersion: v1 947 kind: PersistentVolumeClaim 948 metadata: 949 name: wp-pv-claim 950 namespace: default 951 labels: 952 app: wordpress 953 spec: 954 storageClassName: ceph-rbd 955 accessModes: 956 - ReadWriteOnce 957 resources: 958 requests: 959 storage: 20Gi 960 --- 961 apiVersion: apps/v1 962 kind: Deployment 963 metadata: 964 name: wordpress 965 namespace: default 966 labels: 967 app: wordpress 968 spec: 969 selector: 970 matchLabels: 971 app: wordpress 972 tier: frontend 973 strategy: 974 type: Recreate 975 template: 976 metadata: 977 labels: 978 app: wordpress 979 tier: frontend 980 spec: 981 containers: 982 - image: wordpress:4.8-apache 983 name: wordpress 984 env: 985 - name: WORDPRESS_DB_HOST 986 value: wordpress-mysql 987 - name: WORDPRESS_DB_PASSWORD 988 valueFrom: 989 secretKeyRef: 990 name: mysql-pass 991 key: password 992 ports: 993 - containerPort: 80 994 name: wordpress 995 volumeMounts: 996 - name: wordpress-persistent-storage 997 mountPath: /var/www/html 998 volumes: 999 - name: wordpress-persistent-storage 1000 persistentVolumeClaim: 1001 claimName: wp-pv-claim 1002 [root@bs-k8s-master01 wm]# kubectl get services 1003 NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE 1004 kubernetes ClusterIP 10.96.0.1 443/TCP 5d23h 1005 wordpress LoadBalancer 10.104.98.3480:32513/TCP 7m8s 1006 wordpress-mysql ClusterIP None3306/TCP 24m
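The `kubectl get pvc` checks in the experiment above were done by eye. As a rough sketch (not part of the original procedure), the same check can be scripted by filtering the table for claims whose STATUS is not `Bound`. The function name `unbound_pvcs` and the `demo-claim` row are made up for illustration; the other rows are copied from the transcript.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of PVCs whose STATUS column is not "Bound".
# It reads the table printed by `kubectl get pvc` on stdin.
unbound_pvcs() {
  # NR > 1 skips the header row; column 1 is NAME, column 2 is STATUS.
  awk 'NR > 1 && $2 != "Bound" { print $1 }'
}

# Sample input: three rows from the transcript plus one invented Pending row
# (demo-claim does not exist in the original cluster).
sample='NAME             STATUS    VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
ceph-pvc         Bound     pvc-59fb2bce-a8cf-466c-99b5-56f880f6807a   1Gi        RWO            ceph-rbd       29m
mysql-pv-claim   Bound     pvc-453f1f97-8a7a-4bc1-8c8d-d75bdcafbf24   20Gi       RWO            ceph-rbd       17m
wp-pv-claim      Bound     pvc-78c33394-2aac-4683-b5c6-424d4c2d6891   20Gi       RWO            ceph-rbd       13s
demo-claim       Pending                                                                        ceph-rbd       3s'

printf '%s\n' "$sample" | unbound_pvcs   # prints: demo-claim
```

Against a live cluster the function would be fed directly, for example `kubectl get pvc -n default | unbound_pvcs`; empty output means every claim in the namespace is bound.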

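// At this point, Kubernetes is integrated with Ceph RBD for both static and dynamic storage provisioning. This is still not quite what I want, so next I will modify the dynamic storage setup to meet my needs.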