Classifying Imbalanced Data with TensorFlow 2.0 (with Confusion Matrices and ROC Curves)

文章目录

- 数据集介绍

- 代码实现

- 1、导入需要的库

- 2、导入数据集

- 查看数据集中正样本(欺诈)和负样本(未欺诈)的数量

- 对数据集进行稍微处理

- 3、划分数据集

- 划分训练集、验证集和测试集

- 划分出特征和标签

- 4、标准化处理

- 5、查看正负样本的相关信息

- 区分正负样本

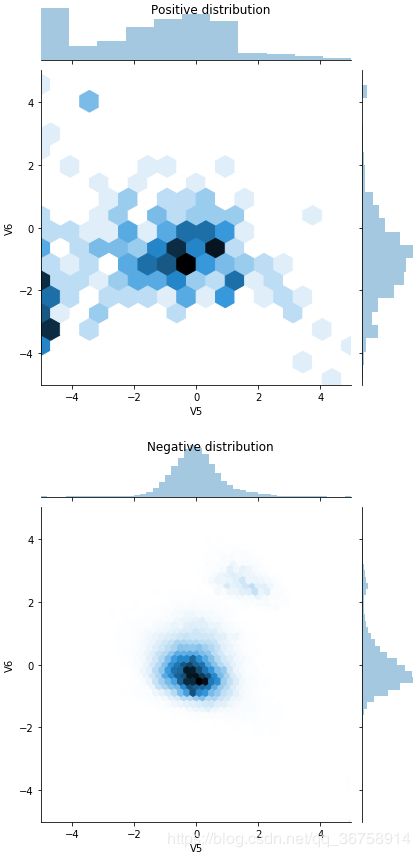

- 在'V5','V6'两个维度上比较正负样本

- 6、构建模型

- 7、对比:有bias_initializer vs 没有bias_initializer

- 没有bias_initializer

- 构建模型

- 用模型预测前十个样本

- 将训练集输入模型来评价模型

- 有bias_initializer

- 计算bias_initializer

- 构建模型

- 用模型预测前十个样本

- 将训练集输入模型来评价模型

- 8、保存初始权值

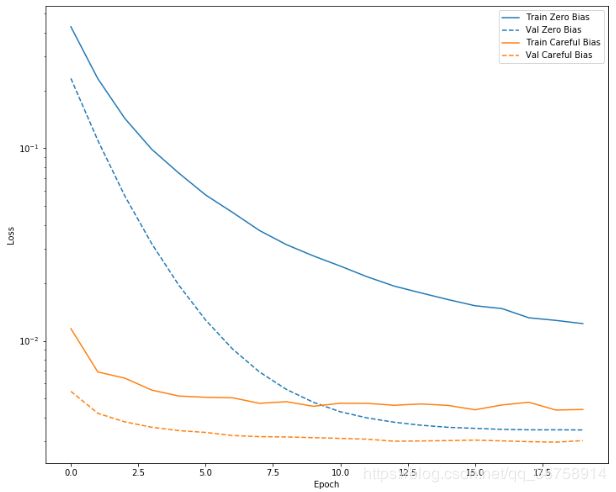

- 9、对比:初始化最后一层偏置

- 将最后一层偏置初始化为0建模并训练

- 不将最后一层偏置初始化为0建模并训练

- 绘制损失图

- 10、将不初始化偏置的模型作为基准

- 训练

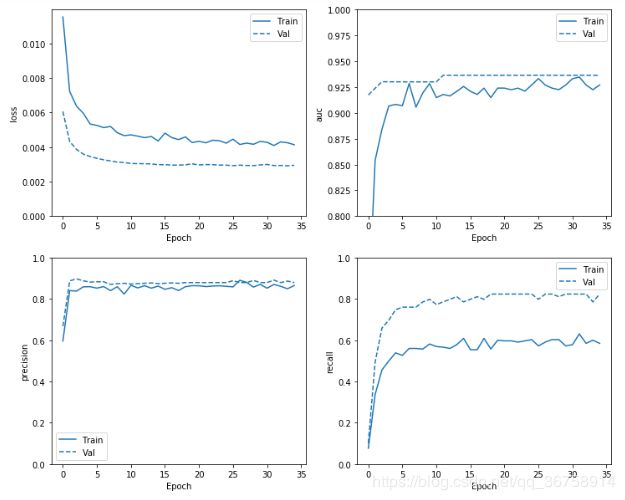

- 画出loss、AUC、precision和recall

- 对训练集和测试集进行预测

- 画出混淆矩阵

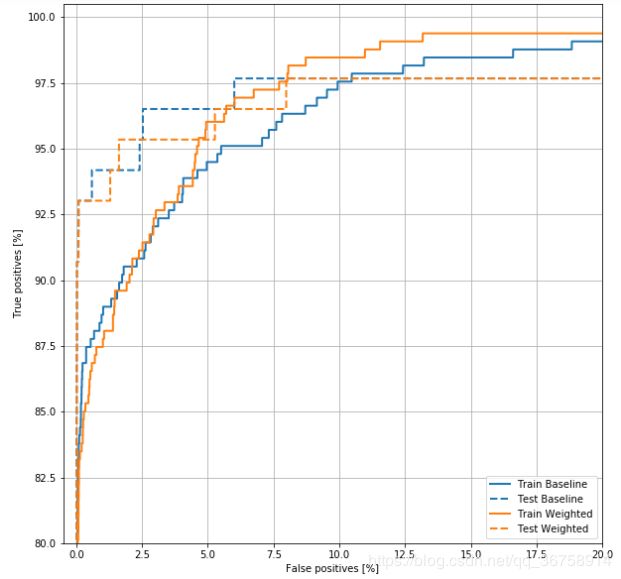

- 画ROC曲线

- 11、给含有较少样本的类别加权重

- 计算权重

- 模型加权并训练

- 画出loss、AUC、precision和recall

- 对训练集和测试集进行预测

- 画出混淆矩阵

- 画ROC曲线

- 12、使用过采样(oversampling)对样本进行处理

- 将正负样本划分开

- 将正负样本转换为Dataset

- 过采样

- 计算训练一次有几个batch

- 建模并训练

- 画出loss、AUC、precision和recall

- 对训练集和测试集进行预测

- 画出混淆矩阵

- 画ROC曲线

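Dataset overview

In practice there is a kind of classification problem in which one class has a huge number of examples and the other has very few. This article demonstrates how to classify a highly imbalanced dataset, using the credit card fraud detection dataset as the example: the goal is to detect 492 fraudulent transactions among 284,807 transactions in total.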

Implementation

1. Import the required libraries

```python
import os
import tempfile

import matplotlib as mpl
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns
import sklearn
import tensorflow as tf  # needed for tf.keras.callbacks, tf.data, etc. below
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from tensorflow import keras

mpl.rcParams['figure.figsize'] = (12, 10)
colors = plt.rcParams['axes.prop_cycle'].by_key()['color']
```

2. Load the dataset

```python
# Download the credit card fraud dataset (284,807 rows) into a DataFrame.
raw_df = pd.read_csv('https://storage.googleapis.com/download.tensorflow.org/data/creditcard.csv')
```

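Count the positive (fraud) and negative (non-fraud) examples

```python
# 'Class' is 0 for legitimate and 1 for fraudulent transactions.
neg, pos = np.bincount(raw_df['Class'])
total = neg + pos
print('Examples:\n Total: {}\n Positive: {} ({:.2f}% of total)\n'.format(
    total, pos, 100 * pos / total))
```

```
Examples:
 Total: 284807
 Positive: 492 (0.17% of total)
```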

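This shows that only 0.17% of the examples in the dataset are positive.

Light preprocessing of the dataset

The values in the 'Amount' column span a very wide range, so to bring them closer together we map them into log space. The 'Time' column is dropped.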

```python
cleaned_df = raw_df.copy()

# You don't want the `Time` column.
cleaned_df.pop('Time')

# The `Amount` column covers a huge range. Convert to log-space.
eps = 0.001  # 0 => 0.1¢
cleaned_df['Log Amount'] = np.log(cleaned_df.pop('Amount') + eps)
```
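The effect of the `eps` offset can be seen on a few hypothetical amounts (made up, not taken from the dataset): without it a zero amount maps to -inf, and with it a range of 0 to 10,000 shrinks to roughly -6.9 to 9.2. This is a minimal illustration of the transform only.

```python
import numpy as np

# Hypothetical transaction amounts spanning several orders of magnitude.
amounts = np.array([0.0, 1.0, 100.0, 10000.0])
eps = 0.001  # same offset as in the code above

with np.errstate(divide='ignore'):
    raw_log = np.log(amounts)        # log(0) -> -inf, which would break training

log_amounts = np.log(amounts + eps)  # every value is now finite

print(raw_log)
print(log_amounts)
```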

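3. Split the dataset

Split into training, validation, and test sets

```python
# Use a utility from sklearn to split and shuffle our dataset:
# 20% goes to the test set, then 20% of the remainder to the validation set.
train_df, test_df = train_test_split(cleaned_df, test_size=0.2)
train_df, val_df = train_test_split(train_df, test_size=0.2)
```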
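Splitting twice with `test_size=0.2` gives roughly a 64% / 16% / 20% train/validation/test split. The shapes printed in section 4 can be reproduced with a little arithmetic, assuming that sklearn rounds a float `test_size` up to a whole number of test rows and gives the remainder to the training part. `split_sizes` is a hypothetical helper written for this check, not part of sklearn.

```python
import math

def split_sizes(n_samples, test_size):
    # Assumption: mirrors how train_test_split sizes a float test_size
    # (test count rounded up, train part gets the remainder).
    n_test = math.ceil(test_size * n_samples)
    return n_samples - n_test, n_test

total = 284807
train_val, test = split_sizes(total, 0.2)  # first split: train+val vs. test
train, val = split_sizes(train_val, 0.2)   # second split: train vs. val

print(train, val, test)
print(f"{train / total:.0%} / {val / total:.0%} / {test / total:.0%}")
```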

Separate features and labels

```python
# Form np arrays of labels and features.
train_labels = np.array(train_df.pop('Class'))
bool_train_labels = train_labels != 0  # boolean mask of positive (fraud) rows
val_labels = np.array(val_df.pop('Class'))
test_labels = np.array(test_df.pop('Class'))

train_features = np.array(train_df)
val_features = np.array(val_df)
test_features = np.array(test_df)
```

4. Standardization

Here we standardize the features with sklearn's StandardScaler and then force all values into the range -5 to 5 (values above 5 are set to 5; values below -5 are set to -5). The scaler is fit on the training set only and then applied unchanged to the validation and test sets.

```python
scaler = StandardScaler()
train_features = scaler.fit_transform(train_features)  # fit on training data only
val_features = scaler.transform(val_features)
test_features = scaler.transform(test_features)

# Clip to [-5, 5] to limit the influence of extreme values.
train_features = np.clip(train_features, -5, 5)
val_features = np.clip(val_features, -5, 5)
test_features = np.clip(test_features, -5, 5)

print('Training labels shape:', train_labels.shape)
print('Validation labels shape:', val_labels.shape)
print('Test labels shape:', test_labels.shape)

print('Training features shape:', train_features.shape)
print('Validation features shape:', val_features.shape)
print('Test features shape:', test_features.shape)
```

```
Training labels shape: (182276,)
Validation labels shape: (45569,)
Test labels shape: (56962,)
Training features shape: (182276, 29)
Validation features shape: (45569, 29)
Test features shape: (56962, 29)
```
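The same fit-on-train-then-transform pattern can be written out in NumPy to show what StandardScaler and `np.clip` do: the mean and (population) standard deviation come from the training data only, and the clip caps outliers at ±5. The data below is hypothetical random data, with one extreme row placed in the test set to show the clipping.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical toy data standing in for the train/test feature matrices.
train = rng.normal(loc=3.0, scale=2.0, size=(1000, 2))
test = rng.normal(loc=3.0, scale=2.0, size=(200, 2))
test[0] = [100.0, -100.0]  # an extreme row to demonstrate clipping

# "Fit": statistics are computed from the training set only.
mean = train.mean(axis=0)
std = train.std(axis=0)  # ddof=0, as StandardScaler uses

# "Transform" both sets with the training statistics, then clip to [-5, 5].
train_scaled = np.clip((train - mean) / std, -5, 5)
test_scaled = np.clip((test - mean) / std, -5, 5)

print(test_scaled[0])  # the extreme row is capped at +5 / -5
```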

5. Inspect the positive and negative examples

Separate the positive and negative examples

```python
pos_df = pd.DataFrame(train_features[bool_train_labels], columns=train_df.columns)
neg_df = pd.DataFrame(train_features[~bool_train_labels], columns=train_df.columns)
```

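Compare positive and negative examples on the 'V5' and 'V6' dimensions

```python
# Newer seaborn versions require x and y to be passed as keyword arguments.
sns.jointplot(x=pos_df['V5'], y=pos_df['V6'],
              kind='hex', xlim=(-5, 5), ylim=(-5, 5))
plt.suptitle("Positive distribution")

sns.jointplot(x=neg_df['V5'], y=neg_df['V6'],
              kind='hex', xlim=(-5, 5), ylim=(-5, 5))
_ = plt.suptitle("Negative distribution")
```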

6. Build the model

```python
METRICS = [
    keras.metrics.TruePositives(name='tp'),
    keras.metrics.FalsePositives(name='fp'),
    keras.metrics.TrueNegatives(name='tn'),
    keras.metrics.FalseNegatives(name='fn'),
    keras.metrics.BinaryAccuracy(name='accuracy'),
    keras.metrics.Precision(name='precision'),
    keras.metrics.Recall(name='recall'),
    keras.metrics.AUC(name='auc'),
]

EPOCHS = 100
BATCH_SIZE = 2048

# Stop when val_auc has not improved for 10 epochs, then restore the best weights.
early_stopping = tf.keras.callbacks.EarlyStopping(
    monitor='val_auc',
    verbose=1,
    patience=10,
    mode='max',
    restore_best_weights=True)

def make_model(metrics=METRICS, output_bias=None):
    if output_bias is not None:
        # Initialize the output layer's bias to the given constant.
        output_bias = tf.keras.initializers.Constant(output_bias)
    model = keras.Sequential([
        keras.layers.Dense(16, activation='relu', input_shape=(train_features.shape[-1],)),
        keras.layers.Dropout(0.5),
        keras.layers.Dense(1, activation='sigmoid', bias_initializer=output_bias),
    ])

    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=1e-3),  # `lr` is deprecated
        loss=keras.losses.BinaryCrossentropy(),
        metrics=metrics)

    return model
```

7. Comparison: with vs. without bias_initializer

Without bias_initializer

Build the model

```python
model = make_model()
```

Predict the first ten examples

```python
model.predict(train_features[:10])
```

```
array([[0.9193252 ],
       [0.7635404 ],
       [0.95460016],
       [0.8937819 ],
       [0.7636404 ],
       [0.85707057],
       [0.9284621 ],
       [0.9763104 ],
       [0.8973746 ],
       [0.8980395 ]], dtype=float32)
```

Evaluate the model on the training set

```python
results = model.evaluate(train_features, train_labels, batch_size=BATCH_SIZE, verbose=0)
print("Loss: {:0.4f}".format(results[0]))
```

```
Loss: 2.6132
```

With bias_initializer

Compute bias_initializer

```python
# Log-odds of the positive class: sigmoid(initial_bias) equals pos / total.
initial_bias = np.log([pos / neg])
initial_bias
```

```
array([-6.35935934])
```

Build the model

```python
model = make_model(output_bias=initial_bias)
```

Predict the first ten examples

```python
model.predict(train_features[:10])
```

```
array([[0.00111789],
       [0.00115025],
       [0.00066873],
       [0.00131339],
       [0.00064212],
       [0.0013302 ],
       [0.00132826],
       [0.00138992],
       [0.00076297],
       [0.00147521]], dtype=float32)
```

Evaluate the model on the training set

```python
results = model.evaluate(train_features, train_labels, batch_size=BATCH_SIZE, verbose=0)
print("Loss: {:0.4f}".format(results[0]))
```

```
Loss: 0.0167
```

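This shows that once the initial bias reflects the class imbalance, the initial loss is much lower (0.0167 instead of 2.6132), because the model no longer starts out predicting a high fraud probability for almost every transaction.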
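Why the careful bias helps: with a sigmoid output, if the rest of the network contributes little at initialization, the prediction is roughly sigmoid(b). Choosing b = log(pos/neg) gives sigmoid(b) = 1 / (1 + neg/pos) = pos / (pos + neg), the fraction of fraud in the data. The sketch below uses only the class counts reported above to check this identity and to compute the cross-entropy of always predicting that base rate. It comes out at roughly 0.013, the same order as the 0.0167 measured above; the remaining gap is plausibly due to the randomly initialized hidden layer, which is an assumption.

```python
import math

pos, neg = 492, 284315            # class counts reported above
total = pos + neg

b0 = math.log(pos / neg)          # initial output bias, as in initial_bias
p0 = 1.0 / (1.0 + math.exp(-b0))  # sigmoid(b0): the model's starting prediction

# Binary cross-entropy of always predicting p0, averaged over the dataset:
# positives contribute -log(p0), negatives contribute -log(1 - p0).
expected_loss = -(pos * math.log(p0) + neg * math.log(1.0 - p0)) / total

print(f"b0 = {b0:.4f}, sigmoid(b0) = {p0:.5f}, pos/total = {pos / total:.5f}")
print(f"loss when predicting the base rate: {expected_loss:.4f}")
```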

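8. Save the initial weights

To make the different training runs more comparable, we save the weights of this initial model (the one built with the careful output bias) to a checkpoint file and load them into each model before training.

```python
initial_weights = os.path.join(tempfile.mkdtemp(), 'initial_weights')
model.save_weights(initial_weights)
```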

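9. Comparison: initializing the last-layer bias

Build and train with the last-layer bias reset to 0

```python
model = make_model()
model.load_weights(initial_weights)
model.layers[-1].bias.assign([0.0])  # overwrite the careful bias with 0
zero_bias_history = model.fit(
    train_features,
    train_labels,
    batch_size=BATCH_SIZE,
    epochs=20,
    validation_data=(val_features, val_labels),
    verbose=0)
```

Build and train without resetting the last-layer bias to 0 (keeping the careful bias loaded from the checkpoint)

```python
model = make_model()
model.load_weights(initial_weights)  # includes the careful output bias
careful_bias_history = model.fit(
    train_features,
    train_labels,
    batch_size=BATCH_SIZE,
    epochs=20,
    validation_data=(val_features, val_labels),
    verbose=0)
```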

Plot the loss

```python
def plot_loss(history, label, n):
    # Use a log scale to show the wide range of values.
    plt.semilogy(history.epoch, history.history['loss'],
                 color=colors[n], label='Train ' + label)
    plt.semilogy(history.epoch, history.history['val_loss'],
                 color=colors[n], label='Val ' + label,
                 linestyle="--")
    plt.xlabel('Epoch')
    plt.ylabel('Loss')
    plt.legend()

plot_loss(zero_bias_history, "Zero Bias", 0)
plot_loss(careful_bias_history, "Careful Bias", 1)
```

10. Use the model whose last-layer bias is not reset to 0 as the baseline

Train

This run reports the METRICS defined earlier and adds early stopping.

```python
model = make_model()
model.load_weights(initial_weights)
baseline_history = model.fit(
    train_features,
    train_labels,
    batch_size=BATCH_SIZE,
    epochs=EPOCHS,
    callbacks=[early_stopping],
    validation_data=(val_features, val_labels))
```
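The `early_stopping` callback defined in section 6 stops training once `val_auc` has gone 10 consecutive epochs without improving, then restores the weights from the best epoch. A simplified, framework-free sketch of that rule follows. `early_stopping_epoch` is a hypothetical helper that only models the `monitor`/`mode='max'`/`patience` logic and ignores other Keras options such as `min_delta` and `baseline`.

```python
def early_stopping_epoch(val_auc_history, patience=10):
    """Return (stop_epoch, best_epoch) for a maximized metric.

    Simplified model of EarlyStopping(monitor='val_auc', mode='max',
    patience=patience, restore_best_weights=True).
    """
    best_auc = float("-inf")
    best_epoch = 0
    wait = 0
    for epoch, auc in enumerate(val_auc_history):
        if auc > best_auc:          # improvement: these weights would be kept
            best_auc, best_epoch, wait = auc, epoch, 0
        else:
            wait += 1
            if wait >= patience:    # no improvement for `patience` epochs
                return epoch, best_epoch
    return len(val_auc_history) - 1, best_epoch


# Hypothetical val_auc curve that peaks at epoch 2 and then plateaus.
history = [0.90, 0.93, 0.95] + [0.94] * 12
print(early_stopping_epoch(history))  # training stops 10 epochs after the peak
```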

Plot loss, AUC, precision, and recall

```python
def plot_metrics(history):
    metrics = ['loss', 'auc', 'precision', 'recall']
    for n, metric in enumerate(metrics):
        name = metric
        plt.subplot(2, 2, n + 1)
        plt.plot(history.epoch, history.history[metric], color=colors[0], label='Train')
        plt.plot(history.epoch, history.history['val_' + metric],
                 color=colors[0], linestyle="--", label='Val')
        plt.xlabel('Epoch')
        plt.ylabel(name)
        if metric == 'loss':
            plt.ylim([0, plt.ylim()[1]])
        elif metric == 'auc':
            plt.ylim([0.8, 1])
        else:
            plt.ylim([0, 1])
        plt.legend()

plot_metrics(baseline_history)
```

Predict on the training and test sets

```python
train_predictions_baseline = model.predict(train_features, batch_size=BATCH_SIZE)
test_predictions_baseline = model.predict(test_features, batch_size=BATCH_SIZE)
```

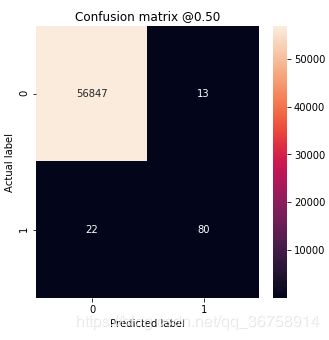

Plot the confusion matrix

```python
def plot_cm(labels, predictions, p=0.5):
    # Threshold the predicted probabilities at p to get hard 0/1 predictions.
    cm = confusion_matrix(labels, predictions > p)
    plt.figure(figsize=(5, 5))
    sns.heatmap(cm, annot=True, fmt="d")
    plt.title('Confusion matrix @{:.2f}'.format(p))
    plt.ylabel('Actual label')
    plt.xlabel('Predicted label')

    print('Legitimate Transactions Detected (True Negatives): ', cm[0][0])
    print('Legitimate Transactions Incorrectly Detected (False Positives): ', cm[0][1])
    print('Fraudulent Transactions Missed (False Negatives): ', cm[1][0])
    print('Fraudulent Transactions Detected (True Positives): ', cm[1][1])
    print('Total Fraudulent Transactions: ', np.sum(cm[1]))

baseline_results = model.evaluate(test_features, test_labels,
                                  batch_size=BATCH_SIZE, verbose=0)
for name, value in zip(model.metrics_names, baseline_results):
    print(name, ': ', value)
print()

plot_cm(test_labels, test_predictions_baseline)
```

```
loss : 0.0033584331309548607
tp : 80.0
fp : 13.0
tn : 56847.0
fn : 22.0
accuracy : 0.99938554
precision : 0.86021507
recall : 0.78431374
auc : 0.9309929

Legitimate Transactions Detected (True Negatives): 56847
Legitimate Transactions Incorrectly Detected (False Positives): 13
Fraudulent Transactions Missed (False Negatives): 22
Fraudulent Transactions Detected (True Positives): 80
Total Fraudulent Transactions: 102
```

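If the model predicted everything perfectly, this would be a diagonal matrix: the values off the main diagonal, which count incorrect predictions, would be zero. In this setting, however, we would probably prefer fewer false negatives (fraud misclassified as legitimate), even at the cost of more false positives (legitimate transactions flagged as fraud). This trade-off is reasonable because a false negative lets a fraudulent transaction go through, while a false positive may only trigger an email asking the customer to verify their card activity.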
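The trade-off above is usually measured with precision and recall. Both can be recomputed directly from the baseline test-set counts printed above, and the results match the precision, recall, and accuracy reported by `model.evaluate`:

```python
tp, fp, tn, fn = 80, 13, 56847, 22  # baseline test-set counts printed above

precision = tp / (tp + fp)  # of the transactions flagged as fraud, the share that really were fraud
recall = tp / (tp + fn)     # of the actual frauds, the share that were caught
accuracy = (tp + tn) / (tp + fp + tn + fn)

print(f"precision={precision:.4f} recall={recall:.4f} accuracy={accuracy:.5f}")
```

Reducing false negatives raises recall; the price is usually more false positives, which lowers precision.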

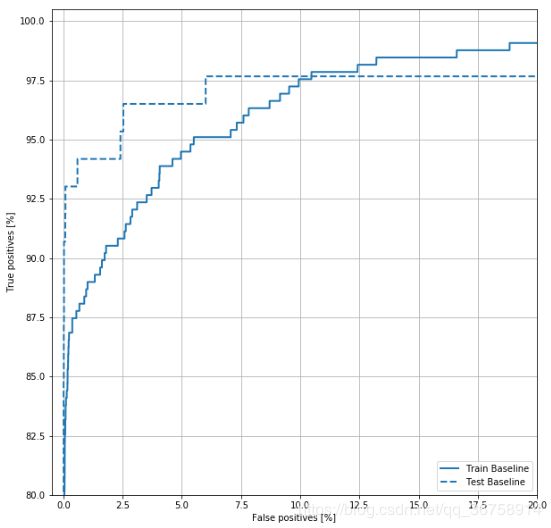

Plot the ROC curve

```python
def plot_roc(name, labels, predictions, **kwargs):
    # False-positive and true-positive rates at every threshold.
    fp, tp, _ = sklearn.metrics.roc_curve(labels, predictions)

    plt.plot(100 * fp, 100 * tp, label=name, linewidth=2, **kwargs)
    plt.xlabel('False positives [%]')
    plt.ylabel('True positives [%]')
    plt.xlim([-0.5, 20])
    plt.ylim([80, 100.5])
    plt.grid(True)
    ax = plt.gca()
    ax.set_aspect('equal')

plot_roc("Train Baseline", train_labels, train_predictions_baseline, color=colors[0])
plot_roc("Test Baseline", test_labels, test_predictions_baseline, color=colors[0], linestyle='--')
plt.legend(loc='lower right')
```

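The results above show relatively high precision, but recall and the area under the ROC curve (AUC) are not as high. Classifiers often struggle to maximize both precision and recall, especially on imbalanced datasets, so it is important to consider the cost of each type of error in the context of the specific problem. In this example, a false negative (a missed fraudulent transaction) may have a financial cost, while a false positive (a transaction incorrectly flagged as fraudulent) may reduce customer satisfaction.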
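`plot_roc` relies on `sklearn.metrics.roc_curve`. To make the idea concrete, here is a minimal NumPy sketch of what an ROC curve is: sweep a decision threshold over the scores, record the false-positive and true-positive rates at each threshold, and integrate with the trapezoid rule to get the AUC. `roc_points` is a hypothetical helper and the toy labels and scores are made up. sklearn's implementation is more efficient and may drop redundant points, but those points do not change the area.

```python
import numpy as np

def roc_points(labels, scores):
    """Sweep every distinct score as a threshold (predict positive if score >= t)
    and return the false-positive and true-positive rates."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    thresholds = np.r_[np.inf, np.unique(scores)[::-1]]  # strictest threshold first
    fpr = np.array([(scores[~labels] >= t).mean() for t in thresholds])
    tpr = np.array([(scores[labels] >= t).mean() for t in thresholds])
    return fpr, tpr

# Hypothetical toy labels and model scores.
y = [0, 0, 0, 0, 1, 0, 1, 1]
s = [0.05, 0.10, 0.20, 0.35, 0.40, 0.60, 0.70, 0.90]

fpr, tpr = roc_points(y, s)
# Trapezoid rule over the piecewise-linear curve.
auc = np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2)
print(auc)  # the fraction of (positive, negative) pairs ranked correctly
```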


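11. Weight the class with fewer examples

Our goal is to identify fraud, but we don't have many positive examples to work with, so we want the classifier to give the few available positive examples a much larger weight. This makes the model "pay more attention" to the underrepresented class.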

Compute the weights

```python
# Scaling by total/2 helps keep the loss to a similar magnitude.
# The sum of the weights of all examples stays the same.
weight_for_0 = (1 / neg) * total / 2.0
weight_for_1 = (1 / pos) * total / 2.0

class_weight = {0: weight_for_0, 1: weight_for_1}

print('Weight for class 0: {:.2f}'.format(weight_for_0))
print('Weight for class 1: {:.2f}'.format(weight_for_1))
```

```
Weight for class 0: 0.50
Weight for class 1: 289.44
```

That is, positive examples are weighted by 289.44 and negative examples by 0.50.
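The comments in the weight computation claim that the total weight is unchanged. The check below recomputes the weights from the class counts and confirms that each class ends up carrying half of the total weight. It also includes `weighted_bce`, a hypothetical helper that shows in simplified form how a class weight scales each example's binary cross-entropy. Keras applies `class_weight` as a per-sample weight on the loss; its exact reduction details are not reproduced here.

```python
import numpy as np

neg, pos = 284315, 492
total = neg + pos

weight_for_0 = (1 / neg) * total / 2.0
weight_for_1 = (1 / pos) * total / 2.0

# Each class carries half of the total weight; the overall sum equals `total`.
print(neg * weight_for_0, pos * weight_for_1, neg * weight_for_0 + pos * weight_for_1)

def weighted_bce(y_true, y_pred, class_weight):
    """Hypothetical helper: mean binary cross-entropy with each example
    scaled by the weight of its class."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.clip(np.asarray(y_pred, dtype=float), 1e-7, 1 - 1e-7)
    per_example = -(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))
    weights = np.where(y_true == 1, class_weight[1], class_weight[0])
    return np.mean(weights * per_example)

cw = {0: weight_for_0, 1: weight_for_1}
# The same 0.5 prediction costs about 578 times more on a fraud than on a legitimate example.
print(weighted_bce([1], [0.5], cw) / weighted_bce([0], [0.5], cw))
```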

Train the weighted model

```python
weighted_model = make_model()
weighted_model.load_weights(initial_weights)

weighted_history = weighted_model.fit(
    train_features,
    train_labels,
    batch_size=BATCH_SIZE,
    epochs=EPOCHS,
    callbacks=[early_stopping],
    validation_data=(val_features, val_labels),
    # The class weights go here
    class_weight=class_weight)
```

Plot loss, AUC, precision, and recall

```python
plot_metrics(weighted_history)
```

Predict on the training and test sets

```python
train_predictions_weighted = weighted_model.predict(train_features, batch_size=BATCH_SIZE)
test_predictions_weighted = weighted_model.predict(test_features, batch_size=BATCH_SIZE)
```

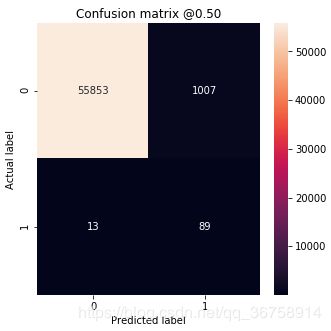

Plot the confusion matrix

```python
weighted_results = weighted_model.evaluate(test_features, test_labels,
                                           batch_size=BATCH_SIZE, verbose=0)
for name, value in zip(weighted_model.metrics_names, weighted_results):
    print(name, ': ', value)
print()

plot_cm(test_labels, test_predictions_weighted)
```

```
loss : 0.07764337301026158
tp : 89.0
fp : 1007.0
tn : 55853.0
fn : 13.0
accuracy : 0.98209333
precision : 0.08120438
recall : 0.872549
auc : 0.98502827

Legitimate Transactions Detected (True Negatives): 55853
Legitimate Transactions Incorrectly Detected (False Positives): 1007
Fraudulent Transactions Missed (False Negatives): 13
Fraudulent Transactions Detected (True Positives): 89
Total Fraudulent Transactions: 102
```

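The confusion matrix shows that with class weights, accuracy and precision are lower because there are many more false positives; in exchange, recall and AUC are higher because the model also finds more true positives (correctly identified frauds). Despite its lower accuracy, this model has higher recall and catches more fraudulent transactions.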
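To see why the drop in accuracy is not a concern here, compare against a trivial classifier that labels every transaction as legitimate. Using the test-set counts printed above (102 frauds among 56,962 transactions), it would reach about 99.82% accuracy with zero recall: higher accuracy than the weighted model, while catching no fraud at all.

```python
# Test-set counts printed above.
n_neg_test = 56847 + 13  # tn + fp (same for every model)
n_pos_test = 22 + 80     # fn + tp
n_test = n_neg_test + n_pos_test

# A "never fraud" classifier gets every negative right and every positive wrong.
always_negative_accuracy = n_neg_test / n_test

weighted_accuracy = (89 + 55853) / n_test  # tp + tn of the weighted model
weighted_recall = 89 / n_pos_test

print(f"always-negative: accuracy={always_negative_accuracy:.5f}, recall=0.0")
print(f"weighted model:  accuracy={weighted_accuracy:.5f}, recall={weighted_recall:.4f}")
```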

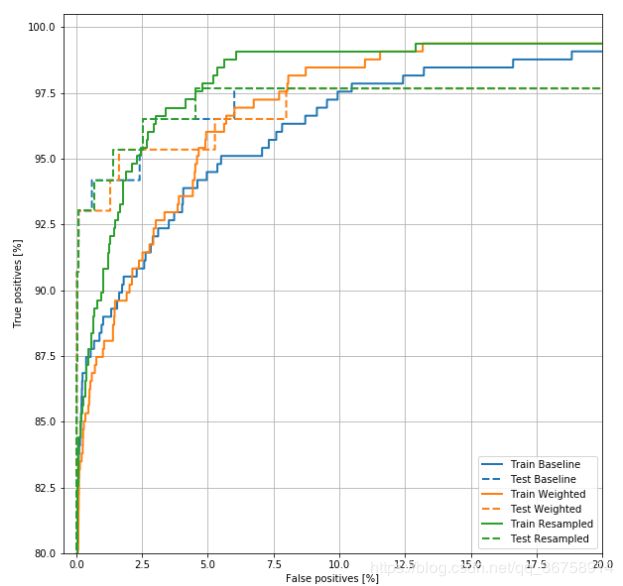

Plot the ROC curve

```python
plot_roc("Train Baseline", train_labels, train_predictions_baseline, color=colors[0])
plot_roc("Test Baseline", test_labels, test_predictions_baseline, color=colors[0], linestyle='--')

plot_roc("Train Weighted", train_labels, train_predictions_weighted, color=colors[1])
plot_roc("Test Weighted", test_labels, test_predictions_weighted, color=colors[1], linestyle='--')

plt.legend(loc='lower right')
```

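12. Process the samples with oversampling

Oversampling repeatedly draws positive examples (with replacement) until there are about as many of them as there are negative examples.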
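The code in this section oversamples through `tf.data`, but the idea itself fits in a few lines of NumPy. The sketch below uses hypothetical toy arrays in place of `pos_features` and `neg_features` and draws positive rows with replacement until both classes are the same size. One caveat: each positive row appears many times, which can make it easier for the model to overfit those few examples.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical toy arrays standing in for pos_features / neg_features.
pos_features = rng.normal(size=(5, 3))
neg_features = rng.normal(size=(1000, 3))

# Draw positive rows *with replacement* until there are as many as negatives.
choices = rng.choice(len(pos_features), size=len(neg_features), replace=True)
res_pos_features = pos_features[choices]

resampled_features = np.concatenate([res_pos_features, neg_features], axis=0)
resampled_labels = np.concatenate([np.ones(len(res_pos_features)),
                                   np.zeros(len(neg_features))])

# Shuffle so positives and negatives are interleaved.
order = rng.permutation(len(resampled_labels))
resampled_features = resampled_features[order]
resampled_labels = resampled_labels[order]

print(resampled_features.shape, resampled_labels.mean())
```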

Separate the positive and negative examples

```python
pos_features = train_features[bool_train_labels]
neg_features = train_features[~bool_train_labels]

pos_labels = train_labels[bool_train_labels]
neg_labels = train_labels[~bool_train_labels]
```

Convert the positive and negative examples to Datasets

```python
BUFFER_SIZE = 100000

def make_ds(features, labels):
    # Shuffle and repeat indefinitely so each class can be sampled from forever.
    ds = tf.data.Dataset.from_tensor_slices((features, labels))  # .cache()
    ds = ds.shuffle(BUFFER_SIZE).repeat()
    return ds

pos_ds = make_ds(pos_features, pos_labels)
neg_ds = make_ds(neg_features, neg_labels)

pos_features.shape
```

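Oversample

```python
# Draw each element from pos_ds or neg_ds with equal probability.
# (Newer TF versions also expose this as tf.data.Dataset.sample_from_datasets.)
resampled_ds = tf.data.experimental.sample_from_datasets([pos_ds, neg_ds], weights=[0.5, 0.5])
resampled_ds = resampled_ds.batch(BATCH_SIZE).prefetch(2)
```

Running the following:

```python
for features, label in resampled_ds.take(1):
    print(label.numpy().mean())
```

```
0.50048828125
```

shows that a batch now contains almost equal numbers of positive and negative examples.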
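`sample_from_datasets` picks, for each output element, one of the input datasets at random according to `weights`. Because both inputs repeat indefinitely, the fraction of positives in a batch is a random quantity centered on 0.5 rather than exactly 0.5: the printed 0.50048828125 is 1025/2048, i.e. 1025 positives in a batch of 2048. A small NumPy simulation of that behaviour (a simplification under that assumption, not the `tf.data` implementation):

```python
import numpy as np

rng = np.random.default_rng(0)
BATCH_SIZE = 2048

# For every element, choose the source dataset with probabilities [0.5, 0.5].
# Both toy "datasets" repeat forever, so only the chosen source fixes the label.
source = rng.choice(2, size=BATCH_SIZE, p=[0.5, 0.5])  # 0 -> pos_ds, 1 -> neg_ds
labels = np.where(source == 0, 1, 0)

print(labels.mean())  # close to, but usually not exactly, 0.5
```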

Compute the number of batches per epoch

```python
resampled_steps_per_epoch = np.ceil(2.0 * neg / BATCH_SIZE)
resampled_steps_per_epoch
```

```
278.0
```
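The step count is chosen so that one epoch on the resampled data contains about as many negative examples as the dataset has: each batch is roughly half negatives, so 2 × neg examples per epoch correspond to about neg negatives. The arithmetic, with `neg` taken from the full dataset as in the code above (not only the training split, so this slightly overestimates one pass over the training negatives):

```python
import math

neg = 284315        # negative examples in the full dataset, as used above
BATCH_SIZE = 2048

# ~half of every batch is negative, so 2 * neg examples per epoch
# show the model about `neg` negative examples.
resampled_steps_per_epoch = math.ceil(2.0 * neg / BATCH_SIZE)
print(resampled_steps_per_epoch)
```

`math.ceil` returns an int, which is the type `steps_per_epoch` is documented to take.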

Build and train the model

```python
resampled_model = make_model()
resampled_model.load_weights(initial_weights)

# Reset the bias to zero, since this dataset is balanced.
output_layer = resampled_model.layers[-1]
output_layer.bias.assign([0])

val_ds = tf.data.Dataset.from_tensor_slices((val_features, val_labels)).cache()
val_ds = val_ds.batch(BATCH_SIZE).prefetch(2)

resampled_history = resampled_model.fit(
    resampled_ds,
    epochs=EPOCHS,
    steps_per_epoch=int(resampled_steps_per_epoch),  # steps_per_epoch expects an int
    callbacks=[early_stopping],
    validation_data=val_ds)
```

Plot loss, AUC, precision, and recall

```python
plot_metrics(resampled_history)
```

Predict on the training and test sets

```python
train_predictions_resampled = resampled_model.predict(train_features, batch_size=BATCH_SIZE)
test_predictions_resampled = resampled_model.predict(test_features, batch_size=BATCH_SIZE)
```

Plot the confusion matrix

```python
resampled_results = resampled_model.evaluate(test_features, test_labels,
                                             batch_size=BATCH_SIZE, verbose=0)
for name, value in zip(resampled_model.metrics_names, resampled_results):
    print(name, ': ', value)
print()

# Use the resampled model's predictions (not the weighted model's).
plot_cm(test_labels, test_predictions_resampled)
```

```
loss : 0.1262956837339392
tp : 88.0
fp : 998.0
tn : 55862.0
fn : 14.0
accuracy : 0.98223376
precision : 0.08103131
recall : 0.8627451
auc : 0.9784746

Legitimate Transactions Detected (True Negatives): 55862
Legitimate Transactions Incorrectly Detected (False Positives): 998
Fraudulent Transactions Missed (False Negatives): 14
Fraudulent Transactions Detected (True Positives): 88
Total Fraudulent Transactions: 102
```

Plot the ROC curve

```python
plot_roc("Train Baseline", train_labels, train_predictions_baseline, color=colors[0])
plot_roc("Test Baseline", test_labels, test_predictions_baseline, color=colors[0], linestyle='--')

plot_roc("Train Weighted", train_labels, train_predictions_weighted, color=colors[1])
plot_roc("Test Weighted", test_labels, test_predictions_weighted, color=colors[1], linestyle='--')

plot_roc("Train Resampled", train_labels, train_predictions_resampled, color=colors[2])
plot_roc("Test Resampled", test_labels, test_predictions_resampled, color=colors[2], linestyle='--')

plt.legend(loc='lower right')
```