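NIC Performance Test Report (2015/3/31)

Conclusions

(1) For large packets, a NIC's actual throughput is about 80%-90% of its rated bandwidth. For example, a 10G NIC sustains at most about 8 Gb/s to 9 Gb/s. Note that the unit is bits, not bytes.

(2) A 1G NIC handles small packets at up to about 560K packets per second.

(3) A NIC's rated bandwidth is per direction: it applies to transmit and receive separately.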
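The unit note in conclusion (1) matters when reading the tables in this report, which give rates in MB/s. Below is a minimal sketch of the conversion, assuming decimal units (1 MB = 10^6 bytes, 1 Gb = 10^9 bits). The function names are illustrative and not part of the original test tooling.

```python
def mbytes_to_gbits(mb_per_s):
    """Convert MB/s (10^6 bytes per second) to Gb/s (10^9 bits per second)."""
    return mb_per_s * 8 / 1000


def utilization(mb_per_s, rated_gbits):
    """Fraction of the NIC's rated bandwidth that a measured rate represents."""
    return mbytes_to_gbits(mb_per_s) / rated_gbits


# 1000 MB/s on a 10G NIC is 8 Gb/s, i.e. 80% of the rated bandwidth.
print(mbytes_to_gbits(1000))   # 8.0
print(utilization(1000, 10))   # 0.8
```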

1 TCP性能

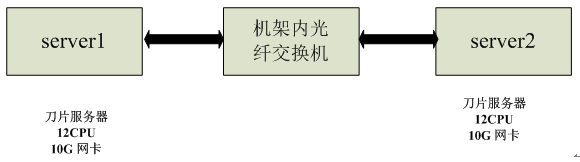

1.1组网

1.2测试

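Test method: server2 acts as the server and binds port 9999; server1 acts as the client and opens N connections to server2. On server1, each connection has its own thread, which sends data to the server continuously, 10 KB per send. On server2, each connection has its own thread, which measures the receive rate. The number of packets handled per second is read from the command sar -n DEV 20 1000.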
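The method above can be sketched as follows. This is a minimal illustration, not the tool used for the report: the function names are hypothetical, and the demo runs over loopback on an OS-assigned port for a fraction of a second, rather than on port 9999 between two hosts.

```python
import socket
import threading
import time

CHUNK = b"x" * (10 * 1024)  # 10 KB per send, as in the test description


def receiver(conn, totals, index):
    """Count bytes received on one connection until the peer closes it."""
    received = 0
    with conn:
        while True:
            data = conn.recv(65536)
            if not data:
                break
            received += len(data)
    totals[index] = received


def sender(address, duration):
    """Send 10 KB chunks in a loop for `duration` seconds."""
    with socket.create_connection(address) as sock:
        deadline = time.monotonic() + duration
        while time.monotonic() < deadline:
            sock.sendall(CHUNK)


def run_tcp_test(connections, duration=0.2, host="127.0.0.1", port=0):
    """Run N sender/receiver thread pairs; return the aggregate rate in MB/s."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind((host, port))
    listener.listen(connections)
    address = listener.getsockname()

    totals = [0] * connections
    senders = [threading.Thread(target=sender, args=(address, duration))
               for _ in range(connections)]
    start = time.monotonic()
    for t in senders:
        t.start()
    receivers = []
    for i in range(connections):
        conn, _ = listener.accept()
        t = threading.Thread(target=receiver, args=(conn, totals, i))
        t.start()
        receivers.append(t)
    for t in senders + receivers:
        t.join()
    elapsed = time.monotonic() - start
    listener.close()
    return sum(totals) / elapsed / 1e6


if __name__ == "__main__":
    for n in (1, 2, 4):
        print(n, "connections:", round(run_tcp_test(n)), "MB/s")
```

Loopback figures from this sketch say nothing about a physical NIC. To approximate the report's setup, the sender and receiver halves would run on separate hosts.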

| Connections | Total data rate | Packet rate |
| --- | --- | --- |
| 1 | 550 MB/s | |
| 2 | 620 MB/s | |
| 4 | 900 MB/s | |
| 8 | 1000 MB/s | 750,000 packets/s |
| 10 | 900 MB/s | |
| 15 | 800 MB/s | |
| 20 | 740 MB/s | |

2 UDP性能

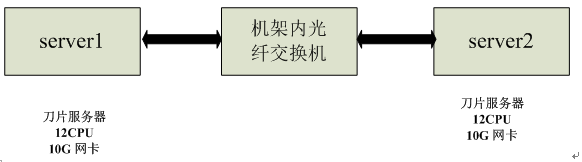

2.1 组网

2.2 多个端口

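Test method:

server2 binds N ports, with one thread per port. server1 starts N threads, each sending UDP packets to a different port. The packet size is 1400 bytes. The receive buffer on server2 is set to 255K.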
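Setting the receive buffer is the one tuning step named in this method. A minimal sketch of how a receiver might do it follows, assuming "255K" means 255 KiB. The helper name is hypothetical and the report does not show the actual receiver code.

```python
import socket

RCVBUF_BYTES = 255 * 1024  # "255K" from the test description, read here as KiB


def make_udp_receiver(port, host="0.0.0.0"):
    """Create a UDP socket bound to `port` with an enlarged receive buffer."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    # Request the buffer size before binding so it applies from the first packet.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, RCVBUF_BYTES)
    sock.bind((host, port))
    return sock


sock = make_udp_receiver(0)  # port 0 lets the OS pick a free port for this demo
# The kernel may round, double, or cap the requested size, so read it back.
print(sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF))
sock.close()
```

On Linux the effective size is capped by the net.core.rmem_max sysctl, so reading the value back is worthwhile before attributing packet loss to the NIC.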

| Connections | Data rate | Packet rate |
| --- | --- | --- |
| 1 | 560 MB/s | |
| 2 | 1000 MB/s | 750,000 packets/s |
| 4 | 1000 MB/s | |
| 8 | 830 MB/s | |
| 10 | 760 MB/s | |
| 15 | 700 MB/s | |
| 20 | 700 MB/s | |

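2.3 Single port

Test method:

server2 binds one port. server1 starts N threads, all sending UDP packets to that same port. The packet size is 1400 bytes. The receive buffer on server2 is set to 255K.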

| Connections | Data rate | Packet rate (packets/s) |
| --- | --- | --- |
| 2 | 850 MB/s | |
| 4 | 1000 MB/s | |
| 8 | 840 MB/s | |
| 10 | 770 MB/s | |
| 15 | 700 MB/s | |
| 20 | 700 MB/s | |

3 Test 3

3.1 Network topology

server-1

root@ubuntu:home# dmesg |grep -i em1

[ 17.417793] ixgbe 0000:03:00.0 em1: detected SFP+: 6

[ 17.549678] ixgbe 0000:03:00.0 em1: NIC Link is Up 10 Gbps, Flow Control: RX/TX

root@ubuntu:home# ethtool em1

Settings for em1:

Supported ports: [ FIBRE ]

Supported link modes: 10000baseT/Full

Supported pause frame use: No

Supports auto-negotiation: No

Advertised link modes: 10000baseT/Full

Advertised pause frame use: No

Advertised auto-negotiation: No

Speed: 10000Mb/s # NIC bandwidth

Duplex: Full

Port: FIBRE

PHYAD: 0

Transceiver: external

Auto-negotiation: off

Supports Wake-on: umbg

Wake-on: g

Current message level: 0x00000007 (7)

drv probe link

Link detected: yes

root@ubuntu:home# lspci |grep -i eth

03:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01) # NIC model

03:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

[root@nqadb home]# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 8

On-line CPU(s) list: 0-7

Thread(s) per core: 1 # one hardware thread per core, i.e. no Hyper-Threading

Core(s) per socket: 4 # 4 cores per CPU

CPU socket(s): 2 # 2 physical CPUs

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 44

Stepping: 2

CPU MHz: 1200.000

BogoMIPS: 4265.06

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 8192K

NUMA node0 CPU(s): 0,2,4,6

NUMA node1 CPU(s): 1,3,5,7

server-2

[root@nqadb home]# dmesg |grep -i eth0

bnx2 0000:03:00.0: eth0: Broadcom NetXtreme II BCM5709 1000Base-T (C0) PCI Express found at mem f4000000, IRQ 16, node addr e8:39:35:23:4f:84

[root@nqadb home]# ethtool eth0

Settings for eth0:

Supported ports: [ TP ]

Supported link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Supports auto-negotiation: Yes

Advertised link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Advertised pause frame use: No

Advertised auto-negotiation: Yes

Speed: 1000Mb/s

Duplex: Full

Port: Twisted Pair

PHYAD: 1

Transceiver: internal

Auto-negotiation: on

MDI-X: Unknown

Supports Wake-on: g

Wake-on: g

Link detected: yes

[root@nqadb home]# lspci |grep -i eth

03:00.0 Ethernet controller: Broadcom Corporation NetXtreme II BCM5709 Gigabit Ethernet (rev 20)

03:00.1 Ethernet controller: Broadcom Corporation NetXtreme II BCM5709 Gigabit Ethernet (rev 20)

04:00.0 Ethernet controller: Broadcom Corporation NetXtreme II BCM5709 Gigabit Ethernet (rev 20)

04:00.1 Ethernet controller: Broadcom Corporation NetXtreme II BCM5709 Gigabit Ethernet (rev 20)

[root@nqadb home]# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 8

On-line CPU(s) list: 0-7

Thread(s) per core: 1

Core(s) per socket: 4

CPU socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 44

Stepping: 2

CPU MHz: 1200.000

BogoMIPS: 4265.06

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 8192K

NUMA node0 CPU(s): 0,2,4,6

NUMA node1 CPU(s): 1,3,5,7

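3.2 Small-packet test

Purpose: measure the small-packet handling capacity of server-2's NIC.

Method: the sending process runs on server-1 and the receiving process on server-2. Each packet carries 64 bytes of payload over UDP, so the actual size of each packet is 64 + 8 (UDP header) + 20 (IP header) = 92 bytes.

Result: the 1G NIC handles small packets at up to 560 Kpck/s.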

| Sender threads | rxKpck/s | rxMB/s |
| --- | --- | --- |
| 1 | 154 | 9 |
| 2 | 300 | 18 |
| 3 | 440 | 26 |
| 4 | 560 | 35 |
| 5 | 500 | 30 |
| 6 | 365 | 22 |

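3.3 Large-packet test

Purpose: measure the large-packet handling capacity of server-2's NIC.

Method: the sending process runs on server-1 and the receiving process on server-2. Each packet carries 1460 bytes of payload over UDP, so the actual size of each packet is 1460 + 8 (UDP header) + 20 (IP header) = 1488 bytes.

Result: the 1G NIC handles large packets at up to about 900 Mb/s (112 MB/s).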

| Sender threads | rxKpck/s | rxMB/s |
| --- | --- | --- |
| 1 | 80 | 112 |
| 2 | 80 | 112 |
| 3 | 80 | 112 |
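The small-packet and large-packet results can be compared with the theoretical packet rate of a 1 Gb/s link. The sketch below assumes standard untagged Ethernet framing (no VLAN tag) on top of the IP packet sizes given in the two test methods. The framing overhead and the function name are additions for illustration and do not come from the report.

```python
# Per-frame Ethernet overhead beyond the IP packet, in bytes:
# 14 (MAC header) + 4 (FCS) + 8 (preamble and SFD) + 12 (inter-frame gap).
ETHERNET_OVERHEAD = 14 + 4 + 8 + 12


def max_packets_per_second(ip_packet_bytes, link_bits_per_s=1_000_000_000):
    """Theoretical packet rate for back-to-back frames on the link."""
    wire_bits = (ip_packet_bytes + ETHERNET_OVERHEAD) * 8
    return link_bits_per_s / wire_bits


# (IP packet size in bytes, best measured rate in Kpck/s) from sections 3.2 and 3.3
for ip_bytes, measured_kpps in [(92, 560), (1488, 80)]:
    limit_kpps = max_packets_per_second(ip_bytes) / 1000
    print(f"{ip_bytes} B: limit {limit_kpps:.0f} Kpck/s, "
          f"measured {measured_kpps} Kpck/s "
          f"({measured_kpps / limit_kpps:.0%} of limit)")
```

Under these assumptions the large-packet result sits close to the link limit, while the small-packet result is well below it. That suggests the small-packet ceiling comes from per-packet processing cost rather than from bandwidth.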

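3.4 NIC bidirectional test

Purpose: the NIC is rated at 1G. Does that figure apply to each direction, or to transmit and receive combined?

Method:

Sending process A runs on server-1 and sends packets to port 8000 on server-2.

Sending process B runs on server-2 and sends packets to port 9000 on server-1.

Receiving process C runs on server-2 and receives packets on port 8000.

Receiving process D runs on server-1 and receives packets on port 9000.

Each process has a single thread, and the payload size is 1460 bytes. With UDP, the actual size of each packet is 1460 + 8 + 20 = 1488 bytes.

Run sar on server-2: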

[root@nqadb ~]# sar -n DEV 10 50

04:04:01 PM IFACE rxpck/s txpck/s rxkB/s txkB/s rxcmp/s txcmp/s rxmcst/s

04:04:11 PM eth0 83772.62 84014.56 123204.50 123560.38 0.00 0.00 0.00

Result: the NIC's rated bandwidth is per direction. The sar output shows that receive and transmit can each reach about 960 Mb/s at the same time.
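The per-direction figures can be derived from the sar row with a short script. The sketch below parses the header and data row shown above. The conversion (multiply by 8, divide by 1024) is an assumption that reproduces the roughly 960 Mb/s figure; the report does not state how that number was computed, and the function names are illustrative.

```python
SAR_HEADER = "04:04:01 PM IFACE rxpck/s txpck/s rxkB/s txkB/s rxcmp/s txcmp/s rxmcst/s"
SAR_ROW = "04:04:11 PM eth0 83772.62 84014.56 123204.50 123560.38 0.00 0.00 0.00"


def parse_sar_row(header, row):
    """Map column names to values; the first three fields are time, AM/PM, IFACE."""
    names = header.split()[3:]
    values = [float(v) for v in row.split()[3:]]
    return dict(zip(names, values))


def kb_to_mbit(kb_per_s, kb_per_mb=1024):
    """Convert kB/s to Mb/s as value * 8 / kb_per_mb (assumed conversion)."""
    return kb_per_s * 8 / kb_per_mb


stats = parse_sar_row(SAR_HEADER, SAR_ROW)
print(f"rx: {kb_to_mbit(stats['rxkB/s']):.0f} Mb/s")
print(f"tx: {kb_to_mbit(stats['txkB/s']):.0f} Mb/s")
```

Both directions come out in the mid-960s Mb/s with this conversion, which is consistent with the conclusion that the rated 1G applies to each direction separately.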