2. Scraping data with requests and related libraries

Let's write a web scraper.

Contents

- 2. Complete code

- 3. Results

- 4. Summary

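Two other series by the author are also recommended:

- Quick Start with Tableau series: Quick Start with Tableau

- Quick Start with Web Scraping series: Quick Start with Web Scraping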

Next, we use each country's ID to request that country's historical-data URL, and collect the historical data for every country.

start = time.time()
for country_id in country_dict:  # iterate over every country's ID
    try:
        # Request this country's data URL by ID and parse the JSON response
        url = 'https://c.m.163.com/ug/api/wuhan/app/data/list-by-area-code?areaCode=' + country_id
        r = requests.get(url, headers=headers)
        json_data = json.loads(r.text)
        # Build this country's DataFrame
        country_data = get_data(json_data['data']['list'], ['date'])
        country_data['name'] = country_dict[country_id]
        # Stack the data; '9577772' is treated as the first ID in country_dict
        if country_id == '9577772':
            alltime_world = country_data
        else:
            alltime_world = pd.concat([alltime_world, country_data])
        print('-'*20, country_dict[country_id], 'success', country_data.shape, alltime_world.shape,
              ', elapsed (s):', round(time.time()-start), '-'*20)
        time.sleep(10)  # pause between requests
    except Exception as e:
        # Report the failure and move on to the next country
        print('-'*20, country_dict[country_id], 'wrong', e, '-'*20)
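The loop above only works if the ID '9577772' is the first one visited, because that is where `alltime_world` is created. A minimal sketch of an alternative is to gather every result in a list and record failures separately. The names `collect_history`, `fetch`, and `fake_fetch` are hypothetical and not part of the original code; `fetch` stands in for the request-and-parse step so the sketch runs without network access.

```python
from typing import Callable, Dict, List, Tuple


def collect_history(
    id_to_name: Dict[str, str],
    fetch: Callable[[str], List[dict]],
) -> Tuple[List[dict], List[str]]:
    """Gather rows for every ID and return (rows, failed_ids)."""
    rows: List[dict] = []
    failed: List[str] = []
    for area_id, name in id_to_name.items():
        try:
            records = fetch(area_id)
        except Exception:
            # Remember the failed ID instead of silently skipping it
            failed.append(area_id)
            continue
        for record in records:
            # Tag each row with the area name, as the original loop does
            rows.append({**record, "name": name})
    return rows, failed


# Stand-in for the real request (hypothetical, made-up data)
def fake_fetch(area_id: str) -> List[dict]:
    if area_id == "bad":
        raise ValueError("simulated request failure")
    return [{"date": "2020-03-26"}, {"date": "2020-03-27"}]


rows, failed = collect_history({"1": "A", "bad": "B"}, fake_fetch)
print(len(rows), failed)
```

With this shape no ID needs special treatment, and the list of failed IDs can be retried later.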

2. Complete code

- Part 1

# =============================================
# -*- coding: utf-8 -*-
# @Time : 2020-03-27
# @Author : 不温卜火
# @CSDN : https://blog.csdn.net/qq_16146103
# @FileName: All collection.py
# @Software: PyCharm
# =============================================
import requests
import pandas as pd
import json
import time

headers = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:73.0) Gecko/20100101 Firefox/73.0'}
url = 'https://c.m.163.com/ug/api/wuhan/app/data/list-total'  # URL to request
r = requests.get(url, headers=headers)  # send the request with requests
data_json = json.loads(r.text)
data = data_json['data']
data_province = data['areaTree'][2]['children']
areaTree = data['areaTree']


# Wrap the data-extraction steps in a function
def get_data(data, info_list):
    info = pd.DataFrame(data)[info_list]  # main information columns
    today_data = pd.DataFrame([i['today'] for i in data])  # extract the 'today' data
    today_data.columns = ['today_' + i for i in today_data.columns]
    total_data = pd.DataFrame([i['total'] for i in data])  # extract the 'total' data
    total_data.columns = ['total_' + i for i in total_data.columns]
    return pd.concat([info, total_data, today_data], axis=1)


today_province = get_data(data_province, ['id', 'lastUpdateTime', 'name'])
province_dict = {num: name for num, name in zip(today_province['id'], today_province['name'])}

start = time.time()
for province_id in province_dict:  # iterate over every province ID
    try:
        # Request this province's data URL by ID and parse the JSON response
        url = 'https://c.m.163.com/ug/api/wuhan/app/data/list-by-area-code?areaCode=' + province_id
        r = requests.get(url, headers=headers)
        data_json = json.loads(r.text)
        # Extract the province's data, then add the province name
        province_data = get_data(data_json['data']['list'], ['date'])
        province_data['name'] = province_dict[province_id]
        # Merge the data; '420000' is treated as the first ID in province_dict
        if province_id == '420000':
            alltime_province = province_data
        else:
            alltime_province = pd.concat([alltime_province, province_data])
        print('-' * 20, province_dict[province_id], 'success',
              province_data.shape, alltime_province.shape,
              ', elapsed (s):', round(time.time() - start), '-' * 20)
        # Wait between requests
        time.sleep(10)
    except Exception as e:
        # Report the failure and move on to the next province
        print('-' * 20, province_dict[province_id], 'wrong', e, '-' * 20)


def save_data(data, name):
    # File name is the given name plus today's date, e.g. name_2020_03_27.csv
    file_name = name + '_' + time.strftime('%Y_%m_%d', time.localtime(time.time())) + '.csv'
    data.to_csv(file_name, index=False, encoding='utf_8_sig')
    print(file_name + ' saved successfully!')


if __name__ == '__main__':
    save_data(alltime_province, 'alltime_province')
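To make the role of `get_data` easier to see, here is a minimal pure-Python sketch of the same flattening idea: the nested `today` and `total` dictionaries of one record become prefixed top-level keys. The function name `flatten_record` and the sample record (including the field names `confirm` and `dead` and all values) are made up for illustration; the real API response may look different.

```python
from typing import Dict, List


def flatten_record(record: Dict, info_keys: List[str]) -> Dict:
    """Flatten one record the way get_data flattens a whole list."""
    # Keep the plain information fields as they are
    flat = {key: record[key] for key in info_keys}
    # Prefix nested fields with the name of the section they came from
    for section in ("total", "today"):
        for key, value in record.get(section, {}).items():
            flat[f"{section}_{key}"] = value
    return flat


# Made-up sample record, for illustration only
sample = {
    "date": "2020-03-27",
    "today": {"confirm": 5, "dead": 0},
    "total": {"confirm": 100, "dead": 3},
}
flat = flatten_record(sample, ["date"])
print(flat)
```

In the article's code, pandas does the same thing for all records at once and joins the three pieces column-wise with `pd.concat(..., axis=1)`.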

- Part 2

# =============================================
# -*- coding: utf-8 -*-
# @Time : 2020-03-27
# @Author : 不温卜火
# @CSDN : https://blog.csdn.net/qq_16146103
# @FileName: All collection.py
# @Software: PyCharm
# =============================================
import requests
import pandas as pd
import json
import time

pd.set_option('display.max_rows', 500)

headers = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:73.0) Gecko/20100101 Firefox/73.0'}
url = 'https://c.m.163.com/ug/api/wuhan/app/data/list-total'  # URL to request
r = requests.get(url, headers=headers)  # send the request with requests
data_json = json.loads(r.text)
data = data_json['data']
data_province = data['areaTree'][2]['children']
areaTree = data['areaTree']


# Wrap the data-extraction steps in a function
def get_data(data, info_list):
    info = pd.DataFrame(data)[info_list]  # main information columns
    today_data = pd.DataFrame([i['today'] for i in data])  # extract the 'today' data
    today_data.columns = ['today_' + i for i in today_data.columns]
    total_data = pd.DataFrame([i['total'] for i in data])  # extract the 'total' data
    total_data.columns = ['total_' + i for i in total_data.columns]
    return pd.concat([info, total_data, today_data], axis=1)


today_world = get_data(areaTree, ['id', 'lastUpdateTime', 'name'])
country_dict = {key: value for key, value in zip(today_world['id'], today_world['name'])}

start = time.time()
for country_id in country_dict:  # iterate over every country's ID
    try:
        # Request this country's data URL by ID and parse the JSON response
        url = 'https://c.m.163.com/ug/api/wuhan/app/data/list-by-area-code?areaCode=' + country_id
        r = requests.get(url, headers=headers)
        json_data = json.loads(r.text)
        # Build this country's DataFrame
        country_data = get_data(json_data['data']['list'], ['date'])
        country_data['name'] = country_dict[country_id]
        # Stack the data; '9577772' is treated as the first ID in country_dict
        if country_id == '9577772':
            alltime_world = country_data
        else:
            alltime_world = pd.concat([alltime_world, country_data])
        print('-' * 20, country_dict[country_id], 'success', country_data.shape, alltime_world.shape,
              ', elapsed (s):', round(time.time() - start), '-' * 20)
        time.sleep(10)  # pause between requests
    except Exception as e:
        # Report the failure and move on to the next country
        print('-' * 20, country_dict[country_id], 'wrong', e, '-' * 20)


def save_data(data, name):
    # File name is the given name plus today's date, e.g. name_2020_03_27.csv
    file_name = name + '_' + time.strftime('%Y_%m_%d', time.localtime(time.time())) + '.csv'
    data.to_csv(file_name, index=False, encoding='utf_8_sig')
    print(file_name + ' saved successfully!')


if __name__ == '__main__':
    save_data(alltime_world, 'alltime_world')

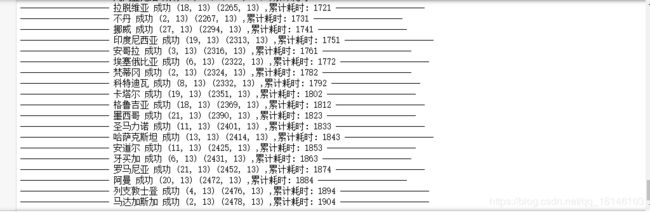

三、结果

四、总结

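- There may be more efficient ways to write this code.

- This is my first post of this kind, so the logical structure may be a little weak.

- No proxy IP pool is used; the only measure against anti-scraping is a delay between requests, so the crawl is somewhat slow. A proxy IP pool combined with highly concurrent, multithreaded requests could be used to speed it up.

- If you would like the scraped data, or cannot get the scraper to work yourself, feel free to message the author privately.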
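The multithreading idea from the list above could look roughly like the sketch below. It covers only the concurrency part, not a proxy pool, and it is not the author's code: `fetch_all`, `fetch`, and `fake_fetch` are hypothetical names, and `fetch` stands in for whatever function performs one request. Any real use would still need to respect the target site's rate limits.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, List


def fetch_all(
    area_ids: Iterable[str],
    fetch: Callable[[str], List[dict]],
    max_workers: int = 4,
) -> Dict[str, List[dict]]:
    """Run fetch for every ID in a small thread pool."""
    ids = list(area_ids)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map keeps results in the same order as the input IDs;
        # an exception raised inside fetch is re-raised here
        results = list(pool.map(fetch, ids))
    return dict(zip(ids, results))


# Stand-in for a real request (hypothetical, made-up data)
def fake_fetch(area_id: str) -> List[dict]:
    return [{"id": area_id, "date": "2020-03-27"}]


history = fetch_all(["420000", "110000"], fake_fetch, max_workers=2)
print(sorted(history))
```

Because the work is dominated by waiting on the network, threads are usually enough here; `max_workers` is the knob that trades speed against load on the server.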

That's all for this post.

If you read it, like it; make it a habit! ^_^ ❤️ ❤️ ❤️

Writing takes effort, and your support is what keeps me going. After liking, don't forget to follow me!