Study Notes on 《机器学习Python实践》 (Machine Learning in Practice with Python), Part 1

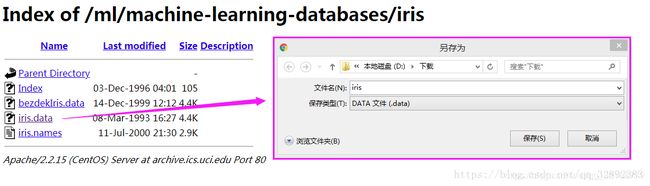

UCI Machine Learning Repository datasets

http://archive.ics.uci.edu/ml/datasets.html

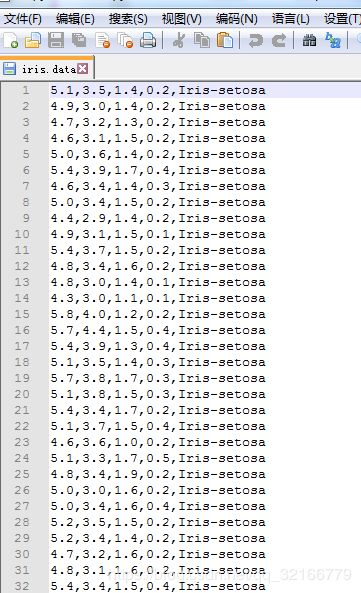

The first step is to analyze the data, starting with its dimensions.

from pandas import read_csv

filename = 'E:/python_code/python_ml/ml_data/iris.data'

names = ['seper-length','separ-width','petal-length','petal-width','class']

dataset = read_csv(filename,names=names)

# Show the data dimensions (dataset.shape is a (rows, columns) tuple)

print('Data dimensions: %s rows, %s columns' % dataset.shape)
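The print call works because dataset.shape is a two-element tuple, and the % operator fills the placeholders from a tuple in order. A minimal sketch of that behavior, assuming a hard-coded stand-in tuple instead of the real DataFrame (the variable names shape and message are illustrative only):

```python
# Stand-in for dataset.shape: the iris file has 150 rows and 5 columns.
shape = (150, 5)

# A tuple on the right-hand side of % fills the placeholders in order.
message = 'rows %s, columns %s' % shape
print(message)

# Equivalent f-string form, unpacking the tuple first.
rows, cols = shape
print(f'rows {rows}, columns {cols}')
```

Both print calls produce the same text; the f-string form is simply the more common style in newer code.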

You can also save iris.data as iris.data.csv; the result is the same. The book works with the CSV version of the file.

# View the first 10 rows

print(dataset.head(10))

Descriptive statistics

print(dataset.describe())

output:

seper-length separ-width petal-length petal-width

count 150.000000 150.000000 150.000000 150.000000

mean 5.843333 3.054000 3.758667 1.198667

std 0.828066 0.433594 1.764420 0.763161

min 4.300000 2.000000 1.000000 0.100000

25% 5.100000 2.800000 1.600000 0.300000

50% 5.800000 3.000000 4.350000 1.300000

75% 6.400000 3.300000 5.100000 1.800000

max 7.900000 4.400000 6.900000 2.500000

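Class distribution

The column names were defined at the start as names = ['seper-length','separ-width','petal-length','petal-width','class'],

so grouping by 'class' gives the number of rows in each class.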

print(dataset.groupby('class').size())

output:

class

Iris-setosa 50

Iris-versicolor 50

Iris-virginica 50

dtype: int64
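Besides absolute counts, it can help to look at each class's share of the data, since a heavily imbalanced dataset would call for different evaluation choices. A minimal sketch, assuming a tiny hand-made DataFrame (toy is a hypothetical stand-in, not the real iris file):

```python
import pandas as pd

# Hypothetical four-row stand-in for the 'class' column of the iris data.
toy = pd.DataFrame({
    'class': ['Iris-setosa', 'Iris-setosa', 'Iris-versicolor', 'Iris-virginica']
})

# Absolute count per class, the same call used on the real dataset.
counts = toy.groupby('class').size()

# Fraction of rows per class; the fractions sum to 1.
shares = toy['class'].value_counts(normalize=True)

print(counts)
print(shares)
```

On the real iris data every class has 50 of the 150 rows, so each share would be one third.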

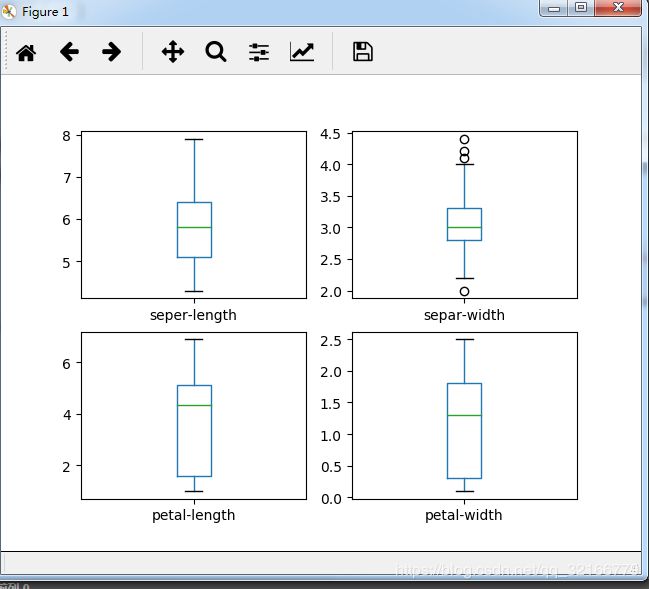

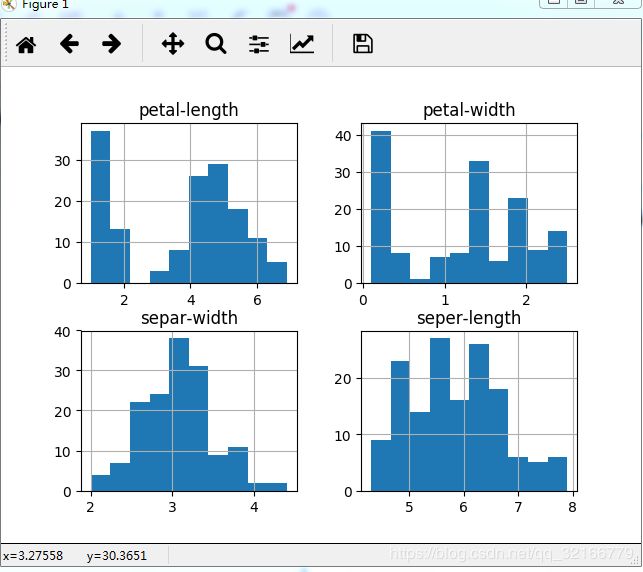

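Data visualization

Univariate plots help in understanding each feature on its own.

Multivariate plots help in understanding the relationships between features.

Box plot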

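from matplotlib import pyplot

# One box plot per feature, arranged in a 2x2 grid with independent axes

dataset.plot(kind='box', subplots=True, layout=(2, 2), sharex=False, sharey=False)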

pyplot.show()

Histogram

dataset.hist()

pyplot.show()

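Scatter matrix

from pandas.plotting import scatter_matrix

scatter_matrix(dataset)

pyplot.show()

The scatter_matrix import given in the book did not work for me. Which import works depends on the pandas version: older releases expose the function as pandas.scatter_matrix, while pandas 0.20 and later provide it as pandas.plotting.scatter_matrix, which is the form used here.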

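Evaluating algorithms

(1) Split off a validation dataset

(2) Use 10-fold cross-validation to evaluate the models

(3) Build 6 different models to predict new data

(4) Select the best model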
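To make step (2) concrete, the following is a hand-rolled sketch of how unshuffled 10-fold splitting partitions the training indices. It is an illustration only, not scikit-learn's implementation; the helper kfold_indices is a hypothetical name, and the 120 samples assume the 80/20 split of the 150 iris rows used in these notes.

```python
def kfold_indices(n_samples, n_splits):
    """Yield (train_idx, test_idx) index lists for unshuffled k-fold splitting."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so fold sizes differ by at most 1.
    base, extra = divmod(n_samples, n_splits)
    start = 0
    for fold in range(n_splits):
        size = base + (1 if fold < extra else 0)
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


# 150 rows with a 20% hold-out leave 120 training samples.
folds = list(kfold_indices(120, 10))
for i, (train_idx, test_idx) in enumerate(folds):
    print(i, len(train_idx), len(test_idx))
```

Each sample lands in the held-out fold exactly once, so every model is scored on all 120 training samples across the 10 rounds, and the mean and standard deviation of those 10 scores are what get reported.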

from pandas import read_csv

from matplotlib import pyplot

from pandas.plotting import scatter_matrix

from sklearn.model_selection import train_test_split

from sklearn.model_selection import KFold

from sklearn.model_selection import cross_val_score

from sklearn.metrics import classification_report

from sklearn.metrics import confusion_matrix

from sklearn.metrics import accuracy_score

from sklearn.linear_model import LogisticRegression

from sklearn.tree import DecisionTreeClassifier

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

from sklearn.neighbors import KNeighborsClassifier

from sklearn.naive_bayes import GaussianNB

from sklearn.svm import SVC

filename = 'E:/python_code/python_ml/ml_data/iris.data'

names = ['seper-length','separ-width','petal-length','petal-width','class']

dataset = read_csv(filename,names=names)

array = dataset.values

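# The first four columns are the features; the last column is the class label

X = array[:, 0:4]

Y = array[:, 4]

# Hold out 20% of the data as a validation set

validation_size = 0.2

seed = 7

X_train, X_validation, Y_train, Y_validation = train_test_split(X, Y, test_size=validation_size, random_state=seed)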

models = {}

models['LR'] = LogisticRegression()

models['LDA'] = LinearDiscriminantAnalysis()

models['KNN'] = KNeighborsClassifier()

models['KART'] = DecisionTreeClassifier()

models['NB'] = GaussianNB()

models['SVM']= SVC()

results = []

for key in models:

    # No shuffling, so random_state is omitted (newer scikit-learn versions reject random_state without shuffle=True)

    kfold = KFold(n_splits=10)

    cv_results = cross_val_score(models[key], X_train, Y_train, cv=kfold, scoring='accuracy')

    results.append(cv_results)

    print('%s: %f(%f)' % (key, cv_results.mean(), cv_results.std()))

output:

LR: 0.966667(0.040825)

LDA: 0.975000(0.038188)

KNN: 0.983333(0.033333)

KART: 0.983333(0.033333)

NB: 0.975000(0.053359)

SVM: 0.991667(0.025000)
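SVM has the highest mean accuracy in the results above, so step (4) would pick it. One possible way to finish the evaluation is to fit that model on the training split and score it on the held-out validation split, using the metrics functions that were imported but not yet used. This is a sketch under assumptions: it loads the copy of iris bundled with scikit-learn (load_iris) instead of the local iris.data file, so the class labels are integers 0 to 2 rather than strings, and the exact scores depend on the scikit-learn version.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Assumption: use scikit-learn's bundled iris data rather than the local file.
X, Y = load_iris(return_X_y=True)

# Same 80/20 split and seed as in the notes above.
X_train, X_validation, Y_train, Y_validation = train_test_split(
    X, Y, test_size=0.2, random_state=7)

# Fit the selected model on the training data only.
svm = SVC()
svm.fit(X_train, Y_train)

# Score it on data the model has never seen.
predictions = svm.predict(X_validation)
accuracy = accuracy_score(Y_validation, predictions)
matrix = confusion_matrix(Y_validation, predictions)

print(accuracy)
print(matrix)
print(classification_report(Y_validation, predictions))
```

The confusion matrix shows which classes get mixed up, and the classification report breaks the result down into precision, recall, and F1 per class.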