Python crawler: scraping Daomu Biji (盗墓笔记) in parallel

Libraries used:

```python
import time

import requests
from lxml import etree
from multiprocessing.pool import Pool
```

Parsing is done with XPath.

The crawler extracts the book title, the chapter headings, and the chapter text of Daomu Biji.
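To see what the XPath expressions in this crawler select, here is a minimal sketch that runs them against a hand-written HTML snippet. The markup, the chapter names, and the example.com URLs are assumptions modeled on the class names the code queries (`focusbox-title`, `excerpt excerpt-c3`); the live site's HTML may differ.

```python
from lxml import etree

# Hypothetical listing-page markup, shaped after the selectors used in get_books()
SAMPLE_LISTING = """
<html><body>
  <h1 class="focusbox-title">Book One</h1>
  <article class="excerpt excerpt-c3"><a href="http://example.com/ch1">Chapter 1</a></article>
  <article class="excerpt excerpt-c3"><a href="http://example.com/ch2">Chapter 2</a></article>
</body></html>
"""

selector = etree.HTML(SAMPLE_LISTING)

# xpath() always returns a list, hence [0] when a single value is expected
title = selector.xpath('//h1[@class="focusbox-title"]/text()')[0]

# @class="..." compares the whole attribute value, not a single class name
items = selector.xpath('//article[@class="excerpt excerpt-c3"]')
sections = [item.xpath('./a/text()')[0] for item in items]  # chapter headings
hrefs = [item.xpath('./a/@href')[0] for item in items]       # chapter page URLs

print(title)  # Book One
```

Because the class test is an exact string match, a page that adds or reorders classes on those `<article>` tags would make `items` come back empty.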

Straight to the code:

Fetching a page:

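```python
def get_info(url):
    """Download a page and return its HTML as text."""
    headers = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
        # Browser-like User-Agent so the request looks like it comes from a normal browser
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3722.400 QQBrowser/10.5.3738.400',
    }
    # timeout keeps a stalled connection from hanging the worker forever
    response = requests.get(url=url, headers=headers, timeout=10)
    response.raise_for_status()  # fail on 4xx/5xx instead of parsing an error page
    response.encoding = response.apparent_encoding  # guess the charset so Chinese text decodes correctly
    return response.text
```

Extracting and parsing the chapter text: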

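```python
def paser_conten(contents):
    """Extract the chapter text from a chapter page's HTML."""
    selectors = etree.HTML(contents)
    # Text nodes of every <p> inside the article body
    pages = selectors.xpath('//article[@class="article-content"]//p/text()')
    # Concatenate into one string (no separator between paragraphs)
    pages = ''.join(pages)
    return pages
```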
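In `paser_conten()`, `//p/text()` returns only the text nodes that are direct children of each `<p>`, so text inside a nested tag (for example a `<span>`) is skipped, and `''.join()` runs the paragraphs together. A minimal alternative, sketched below on made-up markup, takes each paragraph's full text with lxml's `itertext()` and puts one paragraph per line. The function name `parse_paragraphs` and the sample HTML are hypothetical; whether the real chapter pages contain nested tags is not known.

```python
from lxml import etree

# Hypothetical chapter-page markup; the second paragraph contains a nested tag
SAMPLE_CHAPTER = """
<html><body>
  <article class="article-content">
    <p>First paragraph.</p>
    <p>Second <span>paragraph</span> here.</p>
  </article>
</body></html>
"""

def parse_paragraphs(html):
    """Return the chapter text with one paragraph per line."""
    selector = etree.HTML(html)
    paragraphs = selector.xpath('//article[@class="article-content"]//p')
    # itertext() yields every text node inside the <p>, including nested tags
    lines = [''.join(p.itertext()).strip() for p in paragraphs]
    return '\n'.join(line for line in lines if line)

# The original expression, for comparison: the nested word is lost
# and the two paragraphs are glued together.
selector = etree.HTML(SAMPLE_CHAPTER)
print(''.join(selector.xpath('//article[@class="article-content"]//p/text()')))
# First paragraph.Second  here.
print(parse_paragraphs(SAMPLE_CHAPTER))
# First paragraph.
# Second paragraph here.
```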

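Saving to a file:

```python
def save(content):
    # Append mode: each call adds to the end of the file.
    # Despite the .doc extension, the output is plain UTF-8 text.
    with open('daomubiji1.doc', 'a', encoding='utf-8') as f:
        f.write(content + '\n')
```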
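`save()` reopens the file in append mode on every call, so if several workers write to it at the same time, long chapters can end up interleaved. If the crawl is run on threads instead of processes (for example with `multiprocessing.pool.ThreadPool`), a `threading.Lock` is a simple guard. The sketch below assumes that setup; the `path` parameter and the `_write_lock` name are hypothetical additions (the original hard-codes `daomubiji1.doc`).

```python
import threading

# One lock shared by every thread in this process
_write_lock = threading.Lock()

def save(content, path='daomubiji1.doc'):
    """Append content plus a newline to path, one writer at a time."""
    with _write_lock:
        with open(path, 'a', encoding='utf-8') as f:
            f.write(content + '\n')
```

Because the lock lives in memory, it only coordinates threads inside one process; the separate worker processes created by `Pool()` each get their own copy and are not serialized by it.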

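Extracting the information:

```python
def get_books(url):
    """Scrape one book: its title, then each chapter heading and chapter text."""
    selector = etree.HTML(get_info(url))
    # Book title
    title = selector.xpath('//h1[@class="focusbox-title"]/text()')[0]
    print(title)
    save(title)
    # One <article> per chapter link on the book's index page
    items = selector.xpath('//article[@class="excerpt excerpt-c3"]')
    for item in items:
        section = item.xpath('./a/text()')[0]  # chapter heading
        print(section)
        save(section)
        detail_href = item.xpath('./a/@href')[0]  # chapter page URL
        contents = get_info(detail_href)
        detail_content = paser_conten(contents)
        print(detail_content)
        save(detail_content)
```

Creating a process pool and running it: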

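```python
if __name__ == "__main__":
    print('*' * 20 + ' Start crawling ' + '*' * 20)
    # range(1, 2) yields a single URL (dao-mu-bi-ji-1); widen it if more books follow the same pattern
    urls = ['http://www.daomubiji.com/dao-mu-bi-ji-%s' % i for i in range(1, 2)]
    # Pool() starts worker processes (one per CPU core by default), not threads
    pool = Pool()
    pool.map(get_books, urls)  # each worker runs get_books() on one URL
    pool.close()  # accept no more tasks
    pool.join()   # wait for every worker to finish
    time.sleep(1)
```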
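`multiprocessing.pool.Pool` runs its workers as separate processes. For I/O-bound work such as downloading pages, `multiprocessing.pool.ThreadPool` offers the same `map()` interface backed by threads, which matches the "multithreaded" idea more literally. The sketch below uses a hypothetical stand-in scraper, `fake_get_book`, that sleeps instead of making network requests, so it runs as-is; the three URLs only illustrate the naming pattern and are never requested. Since `pool.map()` returns results in input order, workers can return their text and leave all file writing to the main thread.

```python
import time
from multiprocessing.pool import ThreadPool

def fake_get_book(url):
    """Hypothetical stand-in for a scraper that returns lines instead of saving them."""
    time.sleep(0.1)  # simulate network latency; threads overlap these waits
    return ['title for %s' % url, 'chapter 1', 'chapter text']

def crawl(urls, scrape=fake_get_book, workers=4):
    """Run scrape on every URL using a thread pool; results keep the order of urls."""
    with ThreadPool(workers) as pool:
        return pool.map(scrape, urls)

if __name__ == '__main__':
    urls = ['http://www.daomubiji.com/dao-mu-bi-ji-%s' % i for i in range(1, 4)]
    start = time.perf_counter()
    books = crawl(urls)
    print('%d books in %.2fs' % (len(books), time.perf_counter() - start))
    # Only the main thread handles the output, so no lock is needed
    for lines in books:
        print('\n'.join(lines))
```

For real use, `scrape` would be a variant of `get_books()` that returns its title, heading, and chapter strings instead of calling `save()`, and the main thread would write `books` to `daomubiji1.doc` in one loop.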

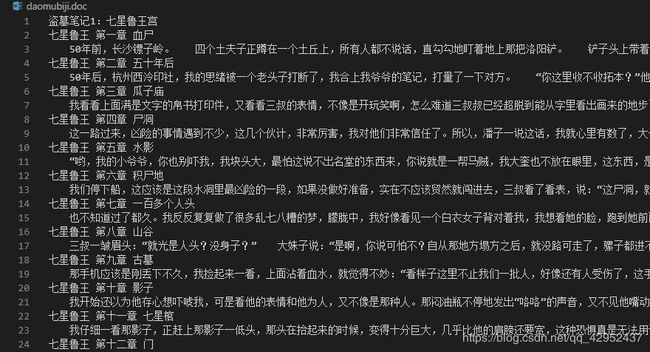

爬取结果(内容截屏不全):