【天池入门笔记】【算法入门】sklearn入门系列二:聚类算法与特征选择

聚类算法主要有三种:层次聚类,划分聚类(sklearn),密度聚类(DBSCAN)

1、聚类

#层次聚类

from sklearn.cluster import Agglomerative Clustering

import pandas as pd

from sklearn.preprocessing import StandardScaler

data = pd.read_csv('data.csv').fillna(0)

label = data.label

feature = data.drop('label',axis=1)

feature = StandardScaler().fit_transform(feature)

cluster = AgglomerativeClustering(n_clusters=2)

cluster.fit(feature)

pred = cluster.fit_predict(feature)

from sklearn.metrics import accuracy_score

print(accuracy_score(label,pred))

#划分聚类(kmeans)

cluster = KMeans(n_clusters=2,n_jobs=-1,init='k_means++')

#密度聚类(DBSCAN)

from sklearn import DBSCAN

cluster = DBSCAN(n_jobs=-1,eps=0.01)

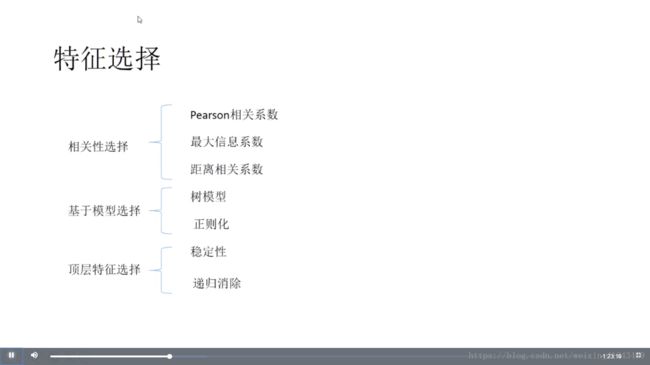

pred = cluster.fit_predict(feature)2、特征选择

#采用pearsonr相关系数选特征

import numpy as np

import pandas as pd

data.label.replace(-1,0,inplace = True)

data = data.fillna(0)

y = data.label

x = data.drop('label',axis=1)

from sklearn.model_selection import train_test_split

X_train,X_test,y_train,y_test = train_test_split(x,y,test_size=0.1,random_state=0)10%的数据作为测试集

from scipy.stats import pearsonr

columns = X_train.columns

pearsonr()

feature_importance = [(column,pearsonr(X_train[column],y_train)[0]) for column in columns]

#pearsonr()函数返回pearsonr值和p值,我们只需要pearsonr值,故取[0]

feature_importance.sort(key = lambda x:x[1])

#lambda函数是取其pearsonr值进行排序,丢弃column

#采用xgboost检验一下特征选择效果

import xgboost as xgb

dtrain = xbg.DMatrix(X_train,label=y_train)

dtest = xgb.DMatrix(X_test,label=y_test)

params = {

'booster':'gbtree',

'objective':'rank:pairwise',

'eval_metric':'auc',

'gamma':0.1,

'min_child_weight':2,

'max_depth':5,

'lambda':10,

'subsample':0.7,

'colsample bytree':0.7,

'eta':0.01,

'tree_method':'exact',

'seed':0,

'nthead':7

}

watchlist = [(dtrain,'train'),(dtest,'test')]

model = xgb.train(params,dtrain,num_boost_round=100,evals=watchlist)

#再看一下删除相关系数小的特征之后的结果

#查看feature_importance,发现['merchant_max_distance']的pearsonr值较小

delete_feature = ['merchant_max_distance']

X_train = X_train[[for i in columns if i not in delete_feature]]

X_test = X_test[[for i in columns if i not in delete_feature]]

dtrain = xbg.DMatrix(X_train,label=y_train)

dtest = xgb.DMatrix(X_test,label=y_test)

watchlist = [(dtrain,'train'),(dtest,'test')]

model = xgb.train(params,dtrain,num_boost_round=100,evals=watchlist)

#运行之后比较出来的结果的auc值

#使用模型进行特征选择

#LogisticRegression

from sklearn.metrics import roc_auc_score

from sklearn.linear_model import LogisticRegression

X_train,X_test,y_train,y_test = train_test_split(x,y,test_size=0.1,random_state=0)

lr = LogisticRegression(penalty='l2',random_state=0,n_jobs=-1).fit(X_train,y_train)

pred = lr.predict_proba(X_test)[:,1]

print(roc_auc_score(y_test,pred))

#Lasso

from sklearn.linear_model import RandomizedLasso

from sklearn.datasets import load_boston

boston = load_boston()

X = boston['data']

Y = boston['target']

names = boston['feature_names']

rlasso = RandomizedLasso(alpha=0.025).fit(X,Y)

feature_importance = sorted(zip(names,rlasso.scores_))

#RFE

from sklearn.feature selection import RFE

X_train,X_test,y_train,y_test = train_test_split(x,y,test_size=0.1,random_state=0)

rf = RandomForestClassifier

rfe =fit(X_train,y_train)

feature_importance = sorted(zip(

map(lambda x:round(x,4),rfe.ranking_),columns),reverse=True)

#SVC

cls = SVC(probability = True,kernel = 'rbf',c=0.1,max_iter=10)

cls.fit(X_train,y_train)

y_pred = cls.predict_proba(X_test)[:,1]

metrics.roc_auc_score(y_test)

#MLPRegresson

from sklearn.neural_network import MLPClassifier,MLPRegression

reg = MLPRegression(hidden_layer_sizes = (10,10,10),learning_rate = 0.1)

#DecisionTreeClassifier

from sklearn.tree import DecisionTreeClassifier

cls = DecisionTreeClassifier(max_depth=6,min_samples_split=10,

min_samples_leaf=5,max_features=0.7)

cls.fit(X_train,y_train)

y_pred = cls.predict_proba(X_test)[:,1]

metrics.roc_auc_score(y_test)

#RandomForestClassifier

cls = RandomForestClassifier(max_depth=6,min_samples_split=10,

min_samples_leaf=5,max_features=0.7)

cls.fit(X_train,y_train)

y_pred = cls.predict_proba(X_test)[:,1]

metrics.roc_auc_score(y_test)

#ExtraTreesClassifier