Scraping Douban Reviews of Avengers: Endgame with selenium + scrapy


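References:

- Itheima (黑马程序员) crawler tutorial

- Jingmi (静觅) crawler tutorial

- Installing selenium + PhantomJS with Anaconda on macOS

- Scraping national air-quality monitoring data with a Scrapy downloader middleware and selenium

- Locating elements with XPath axes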

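The following content is for learning purposes only; please do not use it commercially.

Over the May Day holiday I watched Avengers: Endgame (《复仇者联盟4》) and did not fully follow the plot, so I wrote a crawler to collect reviews and deepen my understanding of the film.

At first I planned to fetch the data with a simple Python library. My machine runs macOS with Anaconda and Python 3.7. My earlier crawler notes all used urllib2, which exists only in Python 2, while Anaconda ships urllib3 by default, so I looked into urllib3 first.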

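Learning urllib3

urllib3 is a powerful, well-organized HTTP client library for Python, and much of the Python ecosystem already uses it.

urllib3 provides many important features that are missing from the Python standard library:

- Thread safety

- Connection pooling

- Client-side SSL/TLS verification

- File uploads with multipart encoding

- Helpers for retrying requests and handling HTTP redirects

- Support for compressed encodings

- Proxy support for HTTP and SOCKS

- 100% test coverage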

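import urllib3


def askURL(url, fields):
    '''
    Fetch the full content of a page with urllib3.
    :param url: URL to request
    :param fields: request parameters
    :return: page content as bytes
    '''
    # Create a PoolManager to issue requests; it handles connection pooling
    # and thread safety, so no manual management is needed
    http = urllib3.PoolManager()
    # request() sends the request and returns an HTTPResponse object.
    # fields is passed by keyword because its positional slot differs
    # between urllib3 1.x and 2.x
    response = http.request('GET', url, fields=fields)
    # print(response.status)  # print the status code
    html = response.data  # raw page content (bytes)
    # print(html)
    return html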
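For a GET request, urllib3 encodes `fields` into the URL query string. The standard-library sketch below shows roughly which URL a call such as `askURL(url, {'start': 20})` ends up requesting. The helper name `build_url` is hypothetical and only illustrates the encoding step; it is not part of urllib3.

```python
from urllib.parse import urlencode


def build_url(url, fields=None):
    """Append fields to url as a query string (hypothetical helper)."""
    if not fields:
        return url
    return url + "?" + urlencode(fields)


reviews_url = "https://movie.douban.com/subject/26100958/reviews"
second_page = build_url(reviews_url, {"start": 20})
print(second_page)
```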

After studying urllib3, I used it to crawl the reviews of Avengers: Endgame and found that:

- Short comments require login once `start` reaches 220 (page 12 at 20 comments per page);

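- Long reviews are collapsed by default, and you have to click to expand each one before the full text is visible.

For these reasons, I had to combine selenium with the Scrapy framework to implement the review crawler.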

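Installing selenium + PhantomJS with Anaconda on macOS

Search for selenium in Anaconda and click install.

Download the archive from the PhantomJS website, extract it, and copy bin/phantomjs into anaconda3/bin, the directory where Anaconda installs Python 3.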

scrapy框架学习

Scrapy是一个为了爬取网站数据,提取结构性数据而编写的应用框架。 可以应用在包括数据挖掘,信息处理或存储历史数据等一系列的程序中。

其最初是为了页面抓取 (更确切来说, 网络抓取 )所设计的, 也可以应用在获取API所返回的数据(例如 Amazon Associates Web Services ) 或者通用的网络爬虫。Scrapy用途广泛,可以用于数据挖掘、监测和自动化测试。

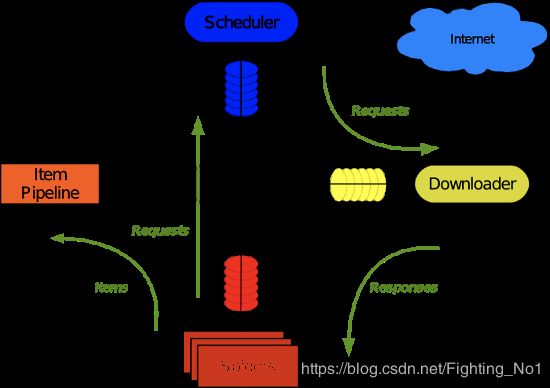

Scrapy 使用了 Twisted 异步网络库来处理网络通讯。整体架构大致如下

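Scrapy mainly consists of the following components:

- Engine (Scrapy Engine): handles the data flow of the whole system and triggers events (the core of the framework).

- Scheduler: accepts requests from the engine, pushes them onto a queue, and returns them when the engine asks again. Think of it as a priority queue of URLs (the addresses of the pages to crawl): it decides which URL to crawl next and removes duplicate URLs.

- Downloader: downloads page content and returns it to the spiders (the Scrapy downloader is built on Twisted, an efficient asynchronous model).

- Spiders: do the main work, extracting the information you need from specific pages, i.e. the items. They can also extract links so that Scrapy goes on to crawl the next page.

- Item Pipeline: processes the items that spiders extract from pages. Its main jobs are persisting items, validating them, and removing unwanted information. After a spider parses a page, the items are sent to the pipeline and processed through several stages in a fixed order.

- Downloader Middlewares: a layer between the engine and the downloader that processes the requests and responses passing between them.

- Spider Middlewares: a layer between the engine and the spiders that processes the spiders' response input and request output.

- Scheduler Middlewares: a layer between the engine and the scheduler that processes the requests and responses sent from the engine to the scheduler.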

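The Scrapy run flow is roughly as follows:

- First, the engine takes a URL from the scheduler for the next fetch.

- The engine wraps the URL in a Request and passes it to the downloader, which downloads the resource and wraps it in a Response.

- Then the spider parses the Response.

- If parsing yields an Item, it is handed to the item pipeline for further processing.

- If parsing yields a link (URL), the URL is handed to the scheduler to wait for crawling.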
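The flow above can be sketched as a toy loop in plain Python. This is only a simplified model under stated assumptions, not how Scrapy is implemented: the real engine is asynchronous and built on Twisted, and the names `crawl`, `fake_download`, `fake_parse`, and `SITE` are made up for illustration.

```python
from collections import deque


def crawl(start_urls, download, parse):
    """Toy, synchronous model of the engine/scheduler/spider data flow."""
    queue = deque(start_urls)  # scheduler queue
    seen = set(start_urls)     # scheduler de-duplication
    items = []                 # what the item pipeline would receive
    while queue:
        url = queue.popleft()                # engine takes the next URL
        response = download(url)             # downloader returns a response
        for result in parse(url, response):  # spider parses the response
            if isinstance(result, dict):
                items.append(result)         # item goes to the pipeline
            elif result not in seen:
                seen.add(result)             # new URL goes back to the scheduler
                queue.append(result)
    return items


# A fake two-page site: each page has one title and a link to the other page
SITE = {"/p1": ("/p2", "first"), "/p2": ("/p1", "second")}


def fake_download(url):
    return SITE[url]


def fake_parse(url, response):
    next_url, title = response
    yield {"title": title}
    yield next_url


items = crawl(["/p1"], fake_download, fake_parse)
print(items)
```

Because the scheduler remembers which URLs it has seen, the link from `/p2` back to `/p1` does not cause an endless loop.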

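Installation

Search for scrapy in Anaconda and install it.

Implementation

Create a new Scrapy project

scrapy startproject doubanscrapy

Enter the project directory and generate a spider class

cd doubanscrapy

scrapy genspider doubanmovie movie.douban.com

Start the spider with the command

scrapy crawl doubanmovie

Now let's write the code that crawls the review data for Avengers: Endgame.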

Simulating the login form with selenium

First, enable cookies in settings.py

COOKIES_ENABLED = True

Next, enable the downloader middleware

DOWNLOADER_MIDDLEWARES = {
    'doubanscrapy.middlewares.DoubanscrapyDownloaderMiddleware': 543,
}

Then, in middlewares.py, modify the process_request method of the DoubanscrapyDownloaderMiddleware class.

def process_request(self, request, spider):
    if request.url == 'https://accounts.douban.com/passport/login?source=movie':
        # Simulate the login form with selenium.
        # webdriver.PhantomJS and find_element_by_* require Selenium 3.x;
        # both were removed during the Selenium 4 series.
        self.driver = webdriver.PhantomJS()
        self.driver.get(request.url)
        # Switch to account/password login
        self.driver.find_element_by_class_name('account-tab-account').click()
        # Type the account name and password (placeholders)
        self.driver.find_element_by_id('username').send_keys('username')
        self.driver.find_element_by_id('password').send_keys('password')
        # Click the login button
        self.driver.find_element_by_class_name('btn-account').click()
        # Wait 3 seconds
        time.sleep(3)
        # Grab the page source after the request
        html = self.driver.page_source
        # spider.logger.info(html)
        # Close the browser
        self.driver.quit()
        # Wrap what the browser fetched in a response and return it to the engine
        response = http.HtmlResponse(url=request.url, body=html, request=request, encoding='utf-8')
        return response

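Note that the login steps run only when the URL is the login URL; other URLs do not need them.

Douban's login page defaults to SMS verification-code login. So after loading the login page we first use selenium to switch to account/password login, then type the username and password into the form, click the login button, and finally wait 3 seconds to get the content of the page shown after a successful login.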
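A fixed 3-second sleep either wastes time or is too short on a slow connection. Selenium ships `WebDriverWait` for polling until a condition holds; the sketch below shows the same idea with only the standard library, so it runs without a browser. `wait_until` and `page_ready` are hypothetical names, and the stand-in condition simply becomes true on the third check.

```python
import time


def wait_until(predicate, timeout=3.0, interval=0.1):
    """Poll predicate() until it is truthy or the timeout elapses."""
    deadline = time.monotonic() + timeout
    while True:
        result = predicate()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


# Stand-in for "the logged-in page has finished loading"
calls = {"n": 0}


def page_ready():
    calls["n"] += 1
    return calls["n"] >= 3


ready = wait_until(page_ready, timeout=1.0, interval=0.01)
print(ready, calls["n"])
```

In the middleware, the predicate would be a check against the driver, for example whether some element of the logged-in page is present; which element to look for is left open here.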

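Finally, in the DoubanmovieSpider class we set start_urls to the login URL, so the first URL the spider crawls is the login URL. The middleware simulates the login and returns the post-login response, and the parse callback then issues a Request for the review URL.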

class DoubanmovieSpider(scrapy.Spider):
    name = 'doubanmovie'
    allowed_domains = ['movie.douban.com']
    start_urls = ['https://accounts.douban.com/passport/login?source=movie']
    base_url = "https://movie.douban.com/subject/26100958/reviews"
    # Pagination state used by parse_movie
    offset = 0
    page = 1

    # Handle the response for the login URL in start_urls
    def parse(self, response):
        # Crawl the review data for Avengers: Endgame
        yield scrapy.Request(self.base_url, callback=self.parse_movie)

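Crawling the review data

First, Douban reviews may be folded away or only partially expanded. In the process_request method of DoubanscrapyDownloaderMiddleware we therefore use selenium to simulate a user clicking to reveal the folded reviews and clicking each expand button, so that we get the HTML with the complete review text.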

def process_request(self, request, spider):
    if request.url == 'https://accounts.douban.com/passport/login?source=movie':
        ...
    elif request.url.startswith('https://movie.douban.com/subject/26100958/reviews'):
        # Simulate a user viewing the reviews with selenium.
        # A fresh PhantomJS instance is started here, so it does not share
        # cookies with the login browser above.
        self.driver = webdriver.PhantomJS()
        self.driver.get(request.url)
        # Check whether any reviews are folded away
        fold_hd = self.driver.find_elements_by_class_name('fold-hd')
        if len(fold_hd) != 0:
            # Unfold the hidden part of the review list
            self.driver.find_element_by_class_name('btn-unfold').click()
            time.sleep(1)
        # Expand the full text of every review
        reviews = self.driver.find_elements_by_class_name('unfold')
        for review in reviews:
            review.click()
        # Wait 5 seconds
        time.sleep(5)
        # Grab the page source after the request
        html = self.driver.page_source
        # spider.logger.info(html)
        # Close the browser
        self.driver.quit()
        # Wrap what the browser fetched in a response and return it to the engine
        response = http.HtmlResponse(url=request.url, body=html, request=request, encoding='utf-8')
        return response
    else:
        return None

Note that if folded reviews exist, we sleep for 1 second so the browser can react before expanding the review contents.
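The URL checks in process_request can be isolated into a small helper, which makes the dispatch easy to test without a browser. This is a sketch; `classify`, `LOGIN_URL`, and `REVIEWS_PREFIX` are hypothetical names. The login URL is compared exactly, while review pages are matched by prefix so that paginated URLs such as `...reviews?start=20` take the same branch.

```python
LOGIN_URL = 'https://accounts.douban.com/passport/login?source=movie'
REVIEWS_PREFIX = 'https://movie.douban.com/subject/26100958/reviews'


def classify(url):
    """Return which selenium routine a request URL needs, or None."""
    if url == LOGIN_URL:
        return 'login'
    if url.startswith(REVIEWS_PREFIX):
        return 'reviews'
    return None  # let Scrapy download the URL normally


print(classify(REVIEWS_PREFIX + '?start=20'))
```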

Next, we write an item class to hold the review fields we need.

class DoubanMovieReviewItem(scrapy.Item):
    # Title
    title = scrapy.Field()
    # Author
    author = scrapy.Field()
    # Rating (recommendation level)
    rating = scrapy.Field()
    # Number of upvotes
    vote = scrapy.Field()
    # Number of replies
    reply = scrapy.Field()
    # Review content
    content = scrapy.Field()
    # Review date
    reviewTime = scrapy.Field()

Then modify the pipeline to save the collected reviews to a JSON file.

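import json


class DoubanscrapyPipeline(object):
    def __init__(self):
        self.file = open('movie.json', 'w', encoding='utf-8')
        self.file.write("[\n")
        self.first_item = True

    def process_item(self, item, spider):
        # Write the separator before every item except the first, so the
        # array has no trailing comma and the file is valid JSON
        if not self.first_item:
            self.file.write(",\n")
        self.first_item = False
        self.file.write(json.dumps(dict(item), ensure_ascii=False))
        return item

    def close_spider(self, spider):
        self.file.write("\n]\n")
        self.file.close()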
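When a JSON array is written one item at a time, the comma has to go before every item except the first; a comma after the last item makes the file unparseable. The sketch below shows the pattern on an in-memory stream so the result can be checked with `json.loads`. `JsonArrayWriter` is a hypothetical helper and the two sample items are invented for illustration.

```python
import io
import json


class JsonArrayWriter:
    """Write dicts to a stream as one valid JSON array, item by item."""

    def __init__(self, stream):
        self.stream = stream
        self.first = True
        self.stream.write("[\n")

    def write_item(self, item):
        if not self.first:
            self.stream.write(",\n")  # separator goes before later items
        self.first = False
        self.stream.write(json.dumps(item, ensure_ascii=False))

    def close(self):
        self.stream.write("\n]\n")


buffer = io.StringIO()
writer = JsonArrayWriter(buffer)
writer.write_item({"title": "影评一", "vote": "12"})
writer.write_item({"title": "影评二", "vote": "3"})
writer.close()

reviews = json.loads(buffer.getvalue())
print(len(reviews))
```

Scrapy's built-in feed export (for example `scrapy crawl doubanmovie -o movie.json`) is another way to get a JSON file without a custom pipeline.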

Finally, we write the parse_movie method of DoubanmovieSpider to parse the review pages and extract the information we want.

# Handle the response content
def parse_movie(self, response):
    # self.log(response)
    contents = response.xpath("//div[@class='main review-item']")
    # Iterate over all reviews
    for content in contents:
        item = DoubanMovieReviewItem()
        main_hd = content.xpath("./header")
        # Review author
        item['author'] = main_hd.xpath("./a[@class='name']/text()").extract()[0]
        # Review rating (not every review has one)
        rating = main_hd.xpath("./span[@title]/@title").extract()
        if len(rating) != 0:
            item['rating'] = rating[0]
        # Review time
        item['reviewTime'] = main_hd.xpath("./span[@class='main-meta']/text()").extract()[0]
        main_bd = content.xpath("./div[@class='main-bd']")
        # Review title
        item['title'] = main_bd.xpath("./h2/a/text()").extract()[0]
        # Review content
        item['content'] = main_bd.xpath("./descendant::div[@id='review-content']/*").extract()
        action = main_bd.xpath("./div[3]")
        # Number of upvotes
        item['vote'] = action.xpath("./a[1]/span/text()").extract()[0].strip()
        # Number of replies
        reply = action.xpath("./a[3]/text()").extract()[0]
        if reply:
            item['reply'] = reply.replace("回应", "")
        # self.log(item)
        yield item
    # Crawl the remaining pages
    if self.offset == 0:
        # Read the total number of pages
        total_page = response.xpath(
            "//div[@class='paginator']/span[@class='thispage']/@data-total-page").extract()[0]
        # self.log(total_page)
        total_page = int(total_page)
        # Set the number of pages to crawl
        self.page = total_page
        # Only crawl the first 60 reviews
        if self.page > 3:
            self.page = 3
        if total_page > 1:
            self.offset = self.offset + 20
            url = self.base_url + '?start=' + str(self.offset)
            # self.log(url)
            yield scrapy.Request(url, callback=self.parse_movie)
    else:
        if self.offset // 20 != self.page - 1:
            self.offset = self.offset + 20
            url = self.base_url + '?start=' + str(self.offset)
            # self.log(url)
            yield scrapy.Request(url, callback=self.parse_movie)
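The reply count is obtained above by deleting the word 回应 from the link text. A slightly more defensive variant pulls the digits out with a regular expression and returns an integer. This is a sketch that assumes the link text looks like `12回应`; `parse_count` is a hypothetical helper.

```python
import re


def parse_count(text):
    """Return the first integer found in text, or 0 if there is none."""
    match = re.search(r"\d+", text or "")
    return int(match.group()) if match else 0


print(parse_count("12回应"))
```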

Note: possibly because of my network, the crawl kept stalling partway through, so the code above caps page at 3 and crawls only the first 60 reviews.
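The page cap can also be expressed as a pure function that lists the URLs to visit, which is easier to reason about than offset state stored on the spider. This is a sketch under the same assumptions as the code above (20 reviews per page, a `start` query parameter); `review_page_urls` is a hypothetical helper.

```python
def review_page_urls(base_url, total_pages, max_pages=3, page_size=20):
    """List the review page URLs to crawl, capped at max_pages."""
    pages = min(total_pages, max_pages)
    urls = []
    for page in range(pages):
        offset = page * page_size
        # The first page is the bare URL; later pages add ?start=<offset>
        urls.append(base_url if offset == 0 else f"{base_url}?start={offset}")
    return urls


base_url = "https://movie.douban.com/subject/26100958/reviews"
urls = review_page_urls(base_url, total_pages=10)
print(urls)
```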

The crawl results are shown in the figure below:

Complete code

settings.py

# -*- coding: utf-8 -*-

# Scrapy settings for doubanscrapy project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://doc.scrapy.org/en/latest/topics/settings.html

# https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

# https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'doubanscrapy'

SPIDER_MODULES = ['doubanscrapy.spiders']

NEWSPIDER_MODULE = 'doubanscrapy.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

# USER_AGENT = 'doubanscrapy (+http://www.yourdomain.com)'

# Obey robots.txt rules

ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)

# CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

# DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

# CONCURRENT_REQUESTS_PER_DOMAIN = 16

# CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

COOKIES_ENABLED = True

# Disable Telnet Console (enabled by default)

# TELNETCONSOLE_ENABLED = False

# Override the default request headers:

# DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

# }

# Enable or disable spider middlewares

# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html

# SPIDER_MIDDLEWARES = {

# 'doubanscrapy.middlewares.DoubanscrapySpiderMiddleware': 543,

# }

# Enable or disable downloader middlewares

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

DOWNLOADER_MIDDLEWARES = {
    'doubanscrapy.middlewares.RandomUserAgent': 100,
    # 'doubanscrapy.middlewares.RandomProxy': 200,
    'doubanscrapy.middlewares.DoubanscrapyDownloaderMiddleware': 543,
}

USER_AGENTS = [
    'Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.0)',
    'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.2)',
    'Opera/9.27 (Windows NT 5.2; U; zh-cn)',
    'Opera/8.0 (Macintosh; PPC Mac OS X; U; en)',
    'Mozilla/5.0 (Macintosh; PPC Mac OS X; U; en) Opera 8.0',
    'Mozilla/5.0 (Linux; U; Android 4.0.3; zh-cn; M032 Build/IML74K) AppleWebKit/534.30 (KHTML, like Gecko) Version/4.0 Mobile Safari/534.30',
    'Mozilla/5.0 (Windows; U; Windows NT 5.2) AppleWebKit/525.13 (KHTML, like Gecko) Chrome/0.2.149.27 Safari/525.13'
]

# Every entry uses the same "ip_port" key, which RandomProxy reads
PROXIES = [
    {"ip_port": "121.42.140.113:16816", "user_passwd": ""},
    {"ip_port": "58.246.96.211:8080", "user_passwd": ""},
    {"ip_port": "58.253.238.242:80", "user_passwd": ""}
]

# Enable or disable extensions

# See https://doc.scrapy.org/en/latest/topics/extensions.html

# EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

# }

# Configure item pipelines

# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

'doubanscrapy.pipelines.DoubanscrapyPipeline': 300,

}

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/autothrottle.html

# AUTOTHROTTLE_ENABLED = True

# The initial download delay

# AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

# AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

# AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

# AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

# HTTPCACHE_ENABLED = True

# HTTPCACHE_EXPIRATION_SECS = 0

# HTTPCACHE_DIR = 'httpcache'

# HTTPCACHE_IGNORE_HTTP_CODES = []

# HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

middlewares.py

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# Define here the models for your spider middleware

#

# See documentation in:

# https://doc.scrapy.org/en/latest/topics/spider-middleware.html

from scrapy import signals, http

from selenium import webdriver

import time

import random

import base64

from doubanscrapy.settings import USER_AGENTS

from doubanscrapy.settings import PROXIES

class DoubanscrapySpiderMiddleware(object):
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the spider middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_spider_input(self, response, spider):
        # Called for each response that goes through the spider
        # middleware and into the spider.
        # Should return None or raise an exception.
        return None

    def process_spider_output(self, response, result, spider):
        # Called with the results returned from the Spider, after
        # it has processed the response.
        # Must return an iterable of Request, dict or Item objects.
        for i in result:
            yield i

    def process_spider_exception(self, response, exception, spider):
        # Called when a spider or process_spider_input() method
        # (from other spider middleware) raises an exception.
        # Should return either None or an iterable of Response, dict
        # or Item objects.
        pass

    def process_start_requests(self, start_requests, spider):
        # Called with the start requests of the spider, and works
        # similarly to the process_spider_output() method, except
        # that it doesn't have a response associated.
        # Must return only requests (not items).
        for r in start_requests:
            yield r

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)

class DoubanscrapyDownloaderMiddleware(object):
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the downloader middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_request(self, request, spider):
        # Called for each request that goes through the downloader
        # middleware.
        # Must either:
        # - return None: continue processing this request
        # - or return a Response object
        # - or return a Request object
        # - or raise IgnoreRequest: process_exception() methods of
        #   installed downloader middleware will be called
        if request.url == 'https://accounts.douban.com/passport/login?source=movie':
            # Simulate the login form with selenium.
            # webdriver.PhantomJS and find_element_by_* require Selenium 3.x;
            # both were removed during the Selenium 4 series.
            self.driver = webdriver.PhantomJS()
            self.driver.get(request.url)
            # Switch to account/password login
            self.driver.find_element_by_class_name('account-tab-account').click()
            # Type the account name and password (placeholders; never
            # publish real credentials)
            self.driver.find_element_by_id('username').send_keys('username')
            self.driver.find_element_by_id('password').send_keys('password')
            # Click the login button
            self.driver.find_element_by_class_name('btn-account').click()
            # Wait 3 seconds
            time.sleep(3)
            # Grab the page source after the request
            html = self.driver.page_source
            # spider.logger.info(html)
            # Close the browser
            self.driver.quit()
            # Wrap what the browser fetched in a response and return it to the engine
            response = http.HtmlResponse(url=request.url, body=html, request=request, encoding='utf-8')
            return response
        elif request.url.startswith('https://movie.douban.com/subject/26100958/reviews'):
            # Simulate a user viewing the reviews with selenium.
            # A fresh PhantomJS instance is started here, so it does not
            # share cookies with the login browser above.
            self.driver = webdriver.PhantomJS()
            self.driver.get(request.url)
            # Check whether any reviews are folded away
            fold_hd = self.driver.find_elements_by_class_name('fold-hd')
            if len(fold_hd) != 0:
                # Unfold the hidden part of the review list
                self.driver.find_element_by_class_name('btn-unfold').click()
                time.sleep(1)
            # Expand the full text of every review
            reviews = self.driver.find_elements_by_class_name('unfold')
            for review in reviews:
                review.click()
            # Wait 5 seconds
            time.sleep(5)
            # Grab the page source after the request
            html = self.driver.page_source
            # spider.logger.info(html)
            # Close the browser
            self.driver.quit()
            # Wrap what the browser fetched in a response and return it to the engine
            response = http.HtmlResponse(url=request.url, body=html, request=request, encoding='utf-8')
            return response
        else:
            return None

    def process_response(self, request, response, spider):
        # Called with the response returned from the downloader.
        # Must either;
        # - return a Response object
        # - return a Request object
        # - or raise IgnoreRequest
        return response

    def process_exception(self, request, exception, spider):
        # Called when a download handler or a process_request()
        # (from other downloader middleware) raises an exception.
        # Must either:
        # - return None: continue processing this exception
        # - return a Response object: stops process_exception() chain
        # - return a Request object: stops process_exception() chain
        pass

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)

# Random User-Agent
class RandomUserAgent(object):
    def process_request(self, request, spider):
        useragent = random.choice(USER_AGENTS)
        # print(useragent)
        request.headers.setdefault("User-Agent", useragent)


# class RandomProxy(object):
#     def process_request(self, request, spider):
#         proxy = random.choice(PROXIES)
#         # How to use a proxy that needs no account authentication
#         request.meta['proxy'] = "http://" + proxy['ip_port']

items.py

# -*- coding: utf-8 -*-

# Define here the models for your scraped items

#

# See documentation in:

# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy

class DoubanMovieReviewItem(scrapy.Item):
    # Title
    title = scrapy.Field()
    # Author
    author = scrapy.Field()
    # Rating (recommendation level)
    rating = scrapy.Field()
    # Number of upvotes
    vote = scrapy.Field()
    # Number of replies
    reply = scrapy.Field()
    # Review content
    content = scrapy.Field()
    # Review date
    reviewTime = scrapy.Field()

pipelines.py

# -*- coding: utf-8 -*-

import json

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

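class DoubanscrapyPipeline(object):
    def __init__(self):
        self.file = open('movie.json', 'w', encoding='utf-8')
        self.file.write("[\n")
        self.first_item = True

    def process_item(self, item, spider):
        # Write the separator before every item except the first, so the
        # array has no trailing comma and the file is valid JSON
        if not self.first_item:
            self.file.write(",\n")
        self.first_item = False
        self.file.write(json.dumps(dict(item), ensure_ascii=False))
        return item

    def close_spider(self, spider):
        self.file.write("\n]\n")
        self.file.close()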

doubanmovie.py

# -*- coding: utf-8 -*-

import scrapy

from doubanscrapy.items import DoubanMovieReviewItem

class DoubanmovieSpider(scrapy.Spider):
    name = 'doubanmovie'
    allowed_domains = ['movie.douban.com']
    start_urls = ['https://accounts.douban.com/passport/login?source=movie']
    base_url = "https://movie.douban.com/subject/26100958/reviews"
    offset = 0
    page = 1

    # Handle the response for the login URL in start_urls
    def parse(self, response):
        # Crawl the review data for Avengers: Endgame
        yield scrapy.Request(self.base_url, callback=self.parse_movie)

    # Handle the response content
    def parse_movie(self, response):
        # self.log(response)
        contents = response.xpath("//div[@class='main review-item']")
        # Iterate over all reviews
        for content in contents:
            item = DoubanMovieReviewItem()
            main_hd = content.xpath("./header")
            # Review author
            item['author'] = main_hd.xpath("./a[@class='name']/text()").extract()[0]
            # Review rating (not every review has one)
            rating = main_hd.xpath("./span[@title]/@title").extract()
            if len(rating) != 0:
                item['rating'] = rating[0]
            # Review time
            item['reviewTime'] = main_hd.xpath("./span[@class='main-meta']/text()").extract()[0]
            main_bd = content.xpath("./div[@class='main-bd']")
            # Review title
            item['title'] = main_bd.xpath("./h2/a/text()").extract()[0]
            # Review content
            item['content'] = main_bd.xpath("./descendant::div[@id='review-content']/*").extract()
            action = main_bd.xpath("./div[3]")
            # Number of upvotes
            item['vote'] = action.xpath("./a[1]/span/text()").extract()[0].strip()
            # Number of replies
            reply = action.xpath("./a[3]/text()").extract()[0]
            if reply:
                item['reply'] = reply.replace("回应", "")
            # self.log(item)
            yield item
        # Crawl the remaining pages
        if self.offset == 0:
            # Read the total number of pages
            total_page = response.xpath(
                "//div[@class='paginator']/span[@class='thispage']/@data-total-page").extract()[0]
            # self.log(total_page)
            total_page = int(total_page)
            # Set the number of pages to crawl
            self.page = total_page
            # Only crawl the first 60 reviews
            if self.page > 3:
                self.page = 3
            if total_page > 1:
                self.offset = self.offset + 20
                url = self.base_url + '?start=' + str(self.offset)
                # self.log(url)
                yield scrapy.Request(url, callback=self.parse_movie)
        else:
            if self.offset // 20 != self.page - 1:
                self.offset = self.offset + 20
                url = self.base_url + '?start=' + str(self.offset)
                # self.log(url)
                yield scrapy.Request(url, callback=self.parse_movie)