iOS Development: Editing and Trimming Videos from the Photo Library

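There are two ways to edit a video from the photo library on iOS. The first is the system-provided UIVideoEditorController. That class offers only basic editing, its API is very limited, and its UI cannot be restyled; the screenshot below shows what it looks like.

The video screen of UIVideoEditorController looks almost the same as that of UIImagePickerController; the difference is that the former can edit the video and the latter cannot.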
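For comparison with the custom approach that follows, presenting the system editor takes only a few lines. The sketch below is a minimal illustration, not code from the demo: the class name `SystemEditorDemoViewController`, the 10-second limit, and the medium quality setting are assumptions made up for this example.

```swift
import UIKit

// Hypothetical view controller, used only to illustrate the system editor.
final class SystemEditorDemoViewController: UIViewController,
    UINavigationControllerDelegate, UIVideoEditorControllerDelegate {

    /// Presents the system trimming UI for a local video file, if it can be edited.
    func presentSystemEditor(forVideoAt path: String) {
        guard UIVideoEditorController.canEditVideo(atPath: path) else {
            print("This video cannot be edited on this device")
            return
        }
        let editor = UIVideoEditorController()
        editor.videoPath = path
        editor.videoMaximumDuration = 10      // seconds; arbitrary value for this sketch
        editor.videoQuality = .typeMedium     // arbitrary choice for this sketch
        editor.delegate = self
        present(editor, animated: true)
    }

    // Called when the user saves the trimmed video.
    func videoEditorController(_ editor: UIVideoEditorController,
                               didSaveEditedVideoToPath editedVideoPath: String) {
        print("Edited video saved to \(editedVideoPath)")
        editor.dismiss(animated: true)
    }

    // Called when the editor fails to load or save the video.
    func videoEditorController(_ editor: UIVideoEditorController,
                               didFailWithError error: Error) {
        print("Editing failed: \(error.localizedDescription)")
        editor.dismiss(animated: true)
    }

    // Called when the user cancels editing.
    func videoEditorControllerDidCancel(_ editor: UIVideoEditorController) {
        editor.dismiss(animated: true)
    }
}
```

Everything beyond these few properties (layout, handle style, minimum length) is fixed by the system, which is the limitation described above.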

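The second way is to build the editor yourself on top of the powerful AVFoundation framework.

The implementation breaks down into 5 steps:

- 1. Loop the video with AVPlayer

To loop the whole video there are two options. The first is to observe AVPlayer's timeControlStatus property with KVO: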

```objc
typedef NS_ENUM(NSInteger, AVPlayerTimeControlStatus) {
    AVPlayerTimeControlStatusPaused,
    AVPlayerTimeControlStatusWaitingToPlayAtSpecifiedRate,
    AVPlayerTimeControlStatusPlaying
} NS_ENUM_AVAILABLE(10_12, 10_0);
```

When the status becomes AVPlayerTimeControlStatusPaused, send the player back to the start and resume playback:

```objc
[self.player seekToTime:CMTimeMake(0, 1)];
[self.player play];
```

The second option is to use a timer whose interval equals the length to be played, and have the timer method fire repeatedly:

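```objc
- (void)repeatPlay {
    [self.player play];
    // Jump back to the start of the selected segment; zero tolerance requests an exact seek
    CMTime start = CMTimeMakeWithSeconds(self.startTime, self.player.currentTime.timescale);
    [self.player seekToTime:start toleranceBefore:kCMTimeZero toleranceAfter:kCMTimeZero];
}
```

Of the two, only the timer approach can loop a segment of the video, which is what editing needs.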
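For reference, AVPlayer also offers boundary time observers, which could stand in for the timer when looping a segment. The sketch below is an alternative technique, not what the demo does; the function name `loopSegment` and the fixed timescale of 600 are assumptions for this example, and it has not been checked against the edge case where the segment ends exactly at the end of the video.

```swift
import AVFoundation

/// Loops playback of `player` between `start` and `end` (both in seconds).
/// Returns the observer token; pass it to `player.removeTimeObserver(_:)` to stop looping.
func loopSegment(of player: AVPlayer, from start: Double, to end: Double) -> Any {
    // Assumption: a fixed timescale of 600 units per second, fine enough for sub-second times.
    let timescale: CMTimeScale = 600
    let startTime = CMTime(seconds: start, preferredTimescale: timescale)
    let endTime = CMTime(seconds: end, preferredTimescale: timescale)

    // The closure runs on the main queue whenever playback crosses `endTime`.
    let token = player.addBoundaryTimeObserver(forTimes: [NSValue(time: endTime)],
                                               queue: .main) { [weak player] in
        player?.seek(to: startTime, toleranceBefore: .zero, toleranceAfter: .zero)
    }

    // Start playing from the beginning of the segment.
    player.seek(to: startTime, toleranceBefore: .zero, toleranceAfter: .zero)
    player.play()
    return token
}
```

When the selected range changes, the old token would be removed with `removeTimeObserver(_:)` and the function called again with the new start and end times.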

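- 2. Grab one video frame per second

The editing area shows thumbnails of the video frames. They come from AVAssetImageGenerator, whose frame-generation method takes the list of times at which to capture frames:

```objc
- (void)generateCGImagesAsynchronouslyForTimes:(NSArray<NSValue *> *)requestedTimes completionHandler:(AVAssetImageGeneratorCompletionHandler)handler;
```

Video editors usually sample at 1-second intervals. The completion handler is called asynchronously, once per requested time, so each image is displayed directly from inside the handler. The full implementation follows.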

```objc
#pragma mark - Read and decode the video frames
- (void)analysisVideoFrames {
    // Create the asset
    AVURLAsset *videoAsset = [[AVURLAsset alloc] initWithURL:self.videoUrl options:nil];
    // Total length in whole seconds = duration.value / duration.timescale
    long videoSumTime = videoAsset.duration.value / videoAsset.duration.timescale;
    // Create the AVAssetImageGenerator
    AVAssetImageGenerator *generator = [[AVAssetImageGenerator alloc] initWithAsset:videoAsset];
    generator.maximumSize = bottomView.frame.size;
    generator.appliesPreferredTrackTransform = YES;
    generator.requestedTimeToleranceBefore = kCMTimeZero;
    generator.requestedTimeToleranceAfter = kCMTimeZero;
    // Build the list of times to sample: one per second
    self.framesArray = [NSMutableArray array];
    for (int i = 0; i < videoSumTime; i++) {
        CMTime time = CMTimeMake(i * videoAsset.duration.timescale, videoAsset.duration.timescale);
        NSValue *value = [NSValue valueWithCMTime:time];
        [self.framesArray addObject:value];
    }
    __block long count = 0;
    [generator generateCGImagesAsynchronouslyForTimes:self.framesArray completionHandler:^(CMTime requestedTime, CGImageRef img, CMTime actualTime, AVAssetImageGeneratorResult result, NSError *error) {
        if (result == AVAssetImageGeneratorSucceeded) {
            // Wrap the CGImage now, and snapshot the counter before hopping to the main queue
            UIImage *image = [UIImage imageWithCGImage:img];
            long index = count++;
            NSLog(@"%ld", index);
            // UIKit views must be created and modified on the main thread
            dispatch_async(dispatch_get_main_queue(), ^{
                UIImageView *thumImgView = [[UIImageView alloc] initWithFrame:CGRectMake(50 + index * self.IMG_Width, 0, self.IMG_Width, 70)];
                thumImgView.image = image;
                [editScrollView addSubview:thumImgView];
                // 50pt of padding on each side plus the thumbnails added so far
                editScrollView.contentSize = CGSizeMake(100 + (index + 1) * self.IMG_Width, 0);
            });
        }
        if (result == AVAssetImageGeneratorFailed) {
            NSLog(@"Failed with error: %@", [error localizedDescription]);
        }
        if (result == AVAssetImageGeneratorCancelled) {
            NSLog(@"AVAssetImageGeneratorCancelled");
        }
    }];
    [editScrollView setContentOffset:CGPointMake(50, 0)];
}
```

- 3. Add the editing overlay and make AVPlayer loop the selected time range

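For the editing frame, my approach is to add one handle view on the left and one on the right, and attach a pan gesture to their parent view. If the touch lands on a handle, the handle is moved by the distance the pan travels in the parent view, and the start or end time of the looped segment is updated to match.

- 4. Track the handles and the thumbnail strip, and adjust the segment AVPlayer loops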

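Moving the handles was covered in step 3. The thumbnails are placed in a UIScrollView (a UICollectionView would also work). Tracking the scroll position and adjusting the playback range as the handles move is somewhat more involved; the demo linked at the end of this post has the full code, and only the gesture-handling part is reproduced here.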
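Before the full handler, the core of steps 3 and 4 can be looked at in isolation: converting a handle's x position plus the scroll offset into a time, and rejecting selections shorter than the minimum. The sketch below is a simplified model under stated assumptions, not code from the demo. The type `TrimTimeline` and all of its property names are hypothetical, and the optional `leadingInset` is an addition (set it to 0 to mirror the `(x + contentOffset) / IMG_Width` formula used in the handler).

```swift
/// Geometry of the thumbnail strip. All names are illustrative, not taken from the demo.
struct TrimTimeline {
    let pointsPerSecond: Double   // width of one 1-second thumbnail
    let leadingInset: Double      // empty space before the first thumbnail (assumption)
    let minimumDuration: Double   // shortest selectable clip, in seconds
    let videoDuration: Double     // total length of the video, in seconds

    /// Converts a handle's x position in the visible strip to a time in seconds,
    /// clamped to the bounds of the video.
    func time(atX x: Double, contentOffset: Double) -> Double {
        let seconds = (x + contentOffset - leadingInset) / pointsPerSecond
        return min(max(seconds, 0), videoDuration)
    }

    /// Returns the selected range, or nil if it is shorter than the minimum duration.
    func range(leftX: Double, rightX: Double, contentOffset: Double) -> ClosedRange<Double>? {
        let start = time(atX: leftX, contentOffset: contentOffset)
        let end = time(atX: rightX, contentOffset: contentOffset)
        guard end - start >= minimumDuration else { return nil }
        return start...end
    }
}

// Usage: 30 points per second, 2-second minimum, 20-second video.
let timeline = TrimTimeline(pointsPerSecond: 30, leadingInset: 0,
                            minimumDuration: 2, videoDuration: 20)
print(timeline.time(atX: 60, contentOffset: 30))                        // 3.0
print(timeline.range(leftX: 0, rightX: 30, contentOffset: 0) == nil)    // true
```

Keeping this conversion in one place means the handle drag and the strip scroll both go through the same formula, which is what the handler does inline.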

```objc
#pragma mark - Pan gesture on the editing area
- (void)moveOverlayView:(UIPanGestureRecognizer *)gesture {
    switch (gesture.state) {
        case UIGestureRecognizerStateBegan: {
            [self stopTimer];
            BOOL isRight = [rightDragView pointInsideImgView:[gesture locationInView:rightDragView]];
            BOOL isLeft = [leftDragView pointInsideImgView:[gesture locationInView:leftDragView]];
            _isDraggingRightOverlayView = NO;
            _isDraggingLeftOverlayView = NO;
            self.touchPointX = [gesture locationInView:bottomView].x;
            if (isRight) {
                self.rightStartPoint = [gesture locationInView:bottomView];
                _isDraggingRightOverlayView = YES;
            } else if (isLeft) {
                self.leftStartPoint = [gesture locationInView:bottomView];
                _isDraggingLeftOverlayView = YES;
            }
            break;
        }
        case UIGestureRecognizerStateChanged: {
            CGPoint point = [gesture locationInView:bottomView];
            if (_isDraggingLeftOverlayView) { // Left handle
                CGFloat deltaX = point.x - self.leftStartPoint.x;
                CGPoint center = leftDragView.center;
                center.x += deltaX;
                CGFloat durationTime = (SCREEN_WIDTH - 100) * 2 / 10; // minimum range: 2 seconds
                BOOL flag = (self.endPointX - point.x) > durationTime;
                if (center.x >= (50 - SCREEN_WIDTH / 2) && flag) {
                    leftDragView.center = center;
                    self.leftStartPoint = point;
                    self.startTime = (point.x + editScrollView.contentOffset.x) / self.IMG_Width;
                    // Move the left edge of both borders by the same amount
                    self.boderX += deltaX;
                    self.boderWidth -= deltaX;
                    topBorder.frame = CGRectMake(self.boderX, 0, self.boderWidth, 2);
                    bottomBorder.frame = CGRectMake(self.boderX, 50 - 2, self.boderWidth, 2);
                    self.startPointX = point.x;
                }
                CMTime seekTime = CMTimeMakeWithSeconds((point.x + editScrollView.contentOffset.x) / self.IMG_Width, self.player.currentTime.timescale);
                // Seeking by less than 1 second was only observed to work while the video is playing
                [self.player seekToTime:seekTime toleranceBefore:kCMTimeZero toleranceAfter:kCMTimeZero];
            } else if (_isDraggingRightOverlayView) { // Right handle
                CGFloat deltaX = point.x - self.rightStartPoint.x;
                CGPoint center = rightDragView.center;
                center.x += deltaX;
                CGFloat durationTime = (SCREEN_WIDTH - 100) * 2 / 10; // minimum range: 2 seconds
                BOOL flag = (point.x - self.startPointX) > durationTime;
                if (center.x <= (SCREEN_WIDTH - 50 + SCREEN_WIDTH / 2) && flag) {
                    rightDragView.center = center;
                    self.rightStartPoint = point;
                    self.endTime = (point.x + editScrollView.contentOffset.x) / self.IMG_Width;
                    // Only the width changes when the right handle moves
                    self.boderWidth += deltaX;
                    topBorder.frame = CGRectMake(self.boderX, 0, self.boderWidth, 2);
                    bottomBorder.frame = CGRectMake(self.boderX, 50 - 2, self.boderWidth, 2);
                    self.endPointX = point.x;
                }
                CMTime seekTime = CMTimeMakeWithSeconds((point.x + editScrollView.contentOffset.x) / self.IMG_Width, self.player.currentTime.timescale);
                // Seeking by less than 1 second was only observed to work while the video is playing
                [self.player seekToTime:seekTime toleranceBefore:kCMTimeZero toleranceAfter:kCMTimeZero];
            } else { // Neither handle was hit: scroll the thumbnail strip
                CGFloat deltaX = point.x - self.touchPointX;
                CGFloat newOffset = editScrollView.contentOffset.x + deltaX;
                CGPoint currentOffSet = CGPointMake(newOffset, 0);
                if (currentOffSet.x >= 0 && currentOffSet.x <= (editScrollView.contentSize.width - SCREEN_WIDTH)) {
                    editScrollView.contentOffset = CGPointMake(newOffset, 0);
                    self.touchPointX = point.x;
                }
            }
            break;
        }
        case UIGestureRecognizerStateEnded: {
            [self startTimer];
            break;
        }
        default:
            break;
    }
}
```

# A few extra details

只有在视频播放时,调用AVPlayer的

- (void)seekToTime:(CMTime)time toleranceBefore:(CMTime)toleranceBefore toleranceAfter:(CMTime)toleranceAfter;这个接口才能实现小于1秒以内的快进和快退,如果是暂停状态,不管如何传时间值,都是以1秒为单位快进和快退。在开发过程中倒是发现了一个在暂停时可以以小于1秒的单位快进和快退的接口,是AVPlayerItem这个类的这个接口,

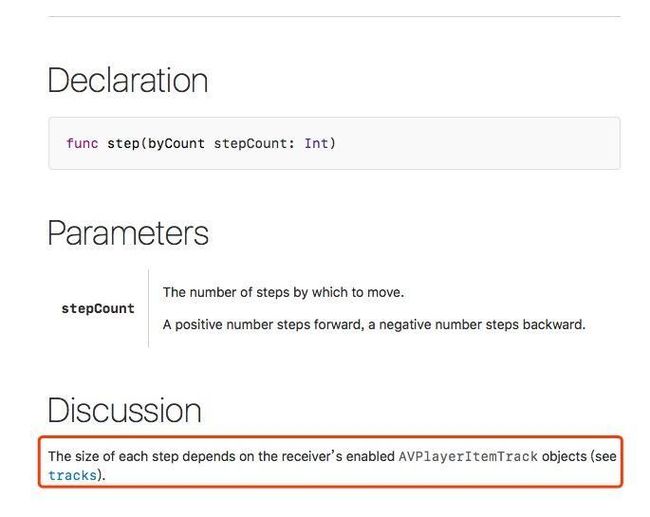

- (void)stepByCount:(NSInteger)stepCount;但是这个stepCount值官方文档说的很含糊,见截图

文档解释:每一步的大小取决于接收机的功能avplayeritemtrack对象(参考[tracks

]),然而我打印了tracks,只有一个音频轨迹一个视频轨迹,实在没找到有价值的东西,暂时放弃了这条路,好在视频播放时时可以以小于1秒单位快进和快退,微信朋友圈也是一直循环播放可能也有这个原因在里面。
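Two things that could be tried for fine-grained movement while paused are sketched below. Neither has been verified against the behavior described above, so treat this as an experiment to run rather than a confirmed fix. The function names `nudge` and `step` and the timescale of 600 are assumptions for this example; `canStepForward`, `canStepBackward`, and `step(byCount:)` are existing AVPlayerItem members.

```swift
import AVFoundation

/// Moves a player by `seconds` (may be negative), building the offset with a fixed,
/// fine-grained timescale instead of reusing the player's current timescale.
func nudge(_ player: AVPlayer, by seconds: Double) {
    let timescale: CMTimeScale = 600   // assumption: 600 units per second
    let delta = CMTime(seconds: seconds, preferredTimescale: timescale)
    let target = CMTimeAdd(player.currentTime(), delta)
    player.seek(to: target, toleranceBefore: .zero, toleranceAfter: .zero)
}

/// Steps a player item by `count` steps (negative steps backward),
/// but only if the item reports that it can step in that direction.
func step(_ item: AVPlayerItem, by count: Int) {
    let allowed = count >= 0 ? item.canStepForward : item.canStepBackward
    if allowed {
        item.step(byCount: count)
    }
}
```

If the fixed timescale turns out to make paused seeking precise, the same constant could replace `self.player.currentTime.timescale` wherever the article's code builds a CMTime.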

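- 5. On completion, export the selected segment, save it to the photo library, and use the resulting URL

The final trim is done with AVAssetExportSession, which takes the source file plus the start and end times. The code is as follows.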

```objc
#pragma mark - Trim the video
- (void)notifyDelegateOfDidChange {
    self.tempVideoPath = [NSTemporaryDirectory() stringByAppendingPathComponent:@"tmpMov.mov"];
    // The export fails if the output file already exists, so remove any previous result first
    [self deleteTempFile];
    AVAsset *asset = [AVAsset assetWithURL:self.videoUrl];
    AVAssetExportSession *exportSession = [[AVAssetExportSession alloc] initWithAsset:asset presetName:AVAssetExportPresetPassthrough];
    NSURL *furl = [NSURL fileURLWithPath:self.tempVideoPath];
    exportSession.outputURL = furl;
    exportSession.outputFileType = AVFileTypeQuickTimeMovie;
    // Export only the selected range
    CMTime start = CMTimeMakeWithSeconds(self.startTime, self.player.currentTime.timescale);
    CMTime duration = CMTimeMakeWithSeconds(self.endTime - self.startTime, self.player.currentTime.timescale);
    CMTimeRange range = CMTimeRangeMake(start, duration);
    exportSession.timeRange = range;
    [exportSession exportAsynchronouslyWithCompletionHandler:^{
        switch ([exportSession status]) {
            case AVAssetExportSessionStatusFailed:
                NSLog(@"Export failed: %@", [[exportSession error] localizedDescription]);
                break;
            case AVAssetExportSessionStatusCancelled:
                NSLog(@"Export canceled");
                break;
            case AVAssetExportSessionStatusCompleted: {
                NSURL *movieUrl = [NSURL fileURLWithPath:self.tempVideoPath];
                // Saving to the album and updating the UI both happen on the main thread
                dispatch_async(dispatch_get_main_queue(), ^{
                    UISaveVideoAtPathToSavedPhotosAlbum(movieUrl.path, self, @selector(video:didFinishSavingWithError:contextInfo:), nil);
                    NSLog(@"Path of the edited video: %@", self.tempVideoPath);
                    self.isEdited = YES;
                    [self invalidatePlayer];
                    [self initPlayerWithVideoUrl:movieUrl];
                    bottomView.hidden = YES;
                });
                break;
            }
            default:
                break;
        }
    }];
}

- (void)video:(NSString *)videoPath didFinishSavingWithError:(NSError *)error contextInfo:(void *)contextInfo {
    if (error) {
        NSLog(@"Failed to save to the photo library");
    } else {
        NSLog(@"Saved to the photo library");
    }
}

- (void)deleteTempFile {
    NSURL *url = [NSURL fileURLWithPath:self.tempVideoPath];
    NSFileManager *fm = [NSFileManager defaultManager];
    if ([fm fileExistsAtPath:url.path]) {
        NSError *err = nil;
        // Check the return value rather than the error pointer to detect failure
        if ([fm removeItemAtURL:url error:&err]) {
            NSLog(@"file deleted");
        } else {
            NSLog(@"file remove error, %@", err.localizedDescription);
        }
    } else {
        NSLog(@"no file by that name");
    }
}
```

The finished result is shown below (this is a screen recording of the phone mirrored to a computer, so the gestures are not visible; download the demo to try it yourself):

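MARK: I have since written a Swift version and ran into a few issues along the way, shared here

- Swift is strict about numeric types in arithmetic, and values of different types have to be converted explicitly. For example, when calling

```swift
public func CMTimeMakeWithSeconds(_ seconds: Float64, _ preferredTimescale: Int32) -> CMTime
```

any argument that is not already a Float64 must be converted explicitly:

```swift
let startTim = CMTimeMakeWithSeconds(Float64(second), player.currentTime().timescale)
```

- The syntax for calling a throwing function in Swift

Without binding the error:

```swift
do { try session.setActive(true) }
catch {}
```

Binding the error to a name:

```swift
do { try filem.removeItem(at: url) }
catch let err as NSError {
    // `error` is a variable declared outside this snippet
    error = err
}
```

Whatever problem comes up, reading the relevant official documentation generally resolves it. One gripe: Swift compilation and code completion really are slow; Xcode 9 is said to improve this.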
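As a compact recap of the error-handling forms mentioned in the Swift notes, the sketch below wraps a throwing Foundation call in `do`/`try`/`catch` and also shows `try?`, which discards the error. The function name `removeFileIfPresent` and the file name `tmpMov-demo.mov` are made up for this example.

```swift
import Foundation

/// Deletes the file at `url` if it exists.
/// Returns the thrown error instead of rethrowing it, or nil on success or if no file exists.
func removeFileIfPresent(at url: URL) -> Error? {
    guard FileManager.default.fileExists(atPath: url.path) else { return nil }
    do {
        try FileManager.default.removeItem(at: url)
        return nil
    } catch {
        // Inside a bare `catch`, the thrown value is available as `error`.
        return error
    }
}

// Usage: create an empty temporary file, then delete it.
let demoURL = FileManager.default.temporaryDirectory.appendingPathComponent("tmpMov-demo.mov")
_ = FileManager.default.createFile(atPath: demoURL.path, contents: Data())
if let failure = removeFileIfPresent(at: demoURL) {
    print("Delete failed: \(failure.localizedDescription)")
} else {
    print("Temporary file removed (or it was not there)")
}

// `try?` turns a thrown error into nil when the failure details are not needed.
let attributes = try? FileManager.default.attributesOfItem(atPath: demoURL.path)
print(attributes == nil)   // true, because the file was just deleted
```

A bare `catch` is usually enough; binding with `catch let err as NSError` is mainly useful when Cocoa-specific fields such as `code` or `domain` are needed.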

Objective-C demo link

Swift demo link (a Star on GitHub would be appreciated!)


Suggestions for improvement are welcome; let's get better together!