Kubernetes: Binary Cluster Deployment (Single Master Node)

I. Lab Environment

This deployment continues from the environment built in the previous article.

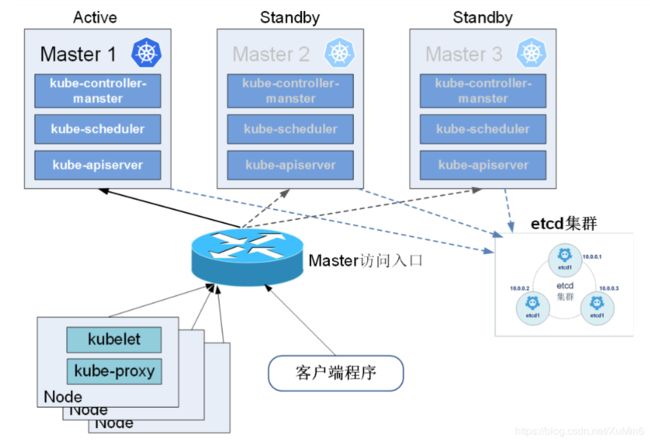

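The master runs three core components:

kube-apiserver: the unified entry point of the cluster and the coordinator for all other components. Every create, read, update, delete, and watch operation on resource objects goes through the API server, which then persists the result to etcd.

kube-controller-manager: handles the routine background tasks of the cluster. Each resource has a corresponding controller, and controller-manager is responsible for managing these controllers.

kube-scheduler: selects a node for each newly created Pod according to its scheduling algorithm. It can be deployed freely, either on the same node as the other master components or on a different node.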

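Each node also runs three core components:

kubelet: the master's agent on the node. It manages the lifecycle of the containers running on the local machine, for example creating containers, mounting Pod volumes, downloading secrets, and reporting container and node status. The kubelet turns each Pod into a group of containers.

kube-proxy: implements the Pod network proxy on the node, maintaining network rules and layer-4 load balancing.

docker: the container runtime.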

II. Lab Steps

(On the master)

1. Generate certificates on the master node

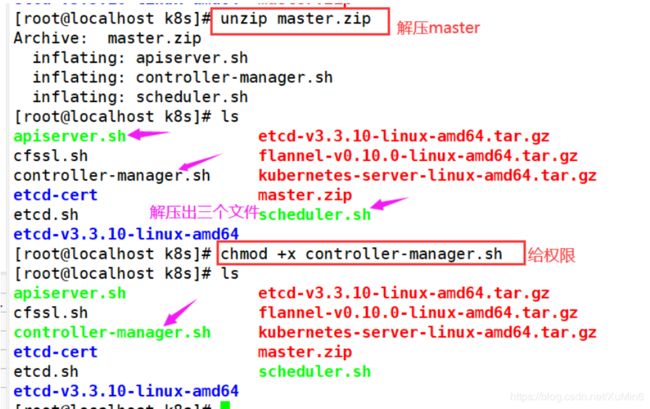

[root@localhost k8s]# unzip master.zip   # extract the master archive

[root@localhost k8s]# chmod +x controller-manager.sh   # make the controller-manager script executable

[root@localhost k8s]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@localhost k8s]# mkdir k8s-cert   # directory for the self-signed apiserver certificates

[root@localhost k8s]# cd k8s-cert/

2. Write the certificate generation script

[root@localhost k8s-cert]# vim k8s-cert.sh

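#Write the CA certificate configuration files

#The script writes ca-config.json, ca-csr.json, server-csr.json, admin-csr.json, and kube-proxy-csr.json with heredocs (cat > FILE <<EOF ... EOF); the bodies of these files are missing from this copy of the article.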

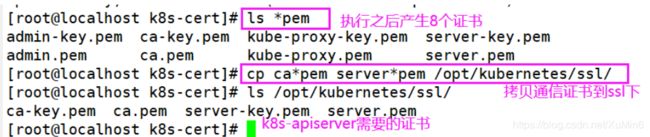

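3. Run the script to generate the k8s certificates, then copy the communication certificates to /opt/kubernetes/ssl

[root@localhost k8s-cert]# bash k8s-cert.sh

[root@localhost k8s-cert]# ls *pem   # list the certificates; there should be 8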

admin-key.pem ca-key.pem kube-proxy-key.pem server-key.pem

admin.pem ca.pem kube-proxy.pem server.pem

[root@localhost k8s-cert]# cp ca*pem server*pem /opt/kubernetes/ssl/

[root@localhost k8s-cert]# ls /opt/kubernetes/ssl/
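Before copying, it can help to confirm that all eight PEM files really exist. The following is only a minimal sketch and not part of the original scripts: the function name `check_certs` is hypothetical, and the demo runs against a scratch directory with empty placeholder files instead of the real k8s-cert directory.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if every expected PEM file exists in DIR.
check_certs() {
    dir=$1
    missing=0
    for name in ca admin kube-proxy server; do
        for f in "$dir/$name.pem" "$dir/$name-key.pem"; do
            if [ ! -f "$f" ]; then
                echo "missing: $f" >&2
                missing=1
            fi
        done
    done
    return "$missing"
}

# Demo with placeholder files in a scratch directory.
# Assumption: real use would pass /root/k8s/k8s-cert instead.
demo_dir=$(mktemp -d)
for name in ca admin kube-proxy server; do
    : > "$demo_dir/$name.pem"
    : > "$demo_dir/$name-key.pem"
done
check_certs "$demo_dir" && echo "all 8 certificate files present"
rm -rf "$demo_dir"
```

On the master this could be called as `check_certs /root/k8s/k8s-cert` right after running k8s-cert.sh.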

4. Extract the k8s binary package and copy the key binaries to /opt/kubernetes/bin/

[root@localhost k8s]# tar zxvf kubernetes-server-linux-amd64.tar.gz

[root@localhost ~]# cd /root/k8s/kubernetes/server/bin/

[root@localhost bin]# cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

[root@localhost bin]# cd /root/k8s/

5. Create the token, using head to generate a random string

[root@localhost k8s]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

b8e73230e624f7dfd19589f3e96761ef

Copy the token into token.csv:

[root@localhost k8s]# vim /opt/kubernetes/cfg/token.csv

Format: token, user name, UID, group

b8e73230e624f7dfd19589f3e96761ef,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
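The two manual steps above (generate a token, paste it into token.csv) can be combined. This is a rough sketch rather than the article's own method: `make_token_line` is a hypothetical name, and the demo writes to a scratch file instead of /opt/kubernetes/cfg/token.csv.

```shell
#!/bin/sh
# Hypothetical helper: generate a 32-character hex token with the same
# head/od/tr pipeline as above and print a complete token.csv line for it.
make_token_line() {
    token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}

# Assumption: a scratch file stands in for /opt/kubernetes/cfg/token.csv.
out_file=$(mktemp)
make_token_line > "$out_file"
cat "$out_file"
rm -f "$out_file"
```

Every run produces a different token, so any file that embeds it (such as the bootstrap kubeconfig later on) has to be regenerated from the same token.csv.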

6. Configure the apiserver script, which writes the configuration and starts kube-apiserver

[root@localhost k8s]# cat apiserver.sh

#!/bin/bash

#The master node IP address and the etcd cluster endpoints are passed in as arguments

MASTER_ADDRESS=$1

ETCD_SERVERS=$2

cat <<EOF >/opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \\

--v=4 \\

--etcd-servers=${ETCD_SERVERS} \\

--bind-address=${MASTER_ADDRESS} \\

--secure-port=6443 \\

--advertise-address=${MASTER_ADDRESS} \\

--allow-privileged=true \\

--service-cluster-ip-range=10.0.0.0/24 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--kubelet-https=true \\

--enable-bootstrap-token-auth \\

--token-auth-file=/opt/kubernetes/cfg/token.csv \\

--service-node-port-range=30000-50000 \\

--tls-cert-file=/opt/kubernetes/ssl/server.pem \\

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\

--client-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--etcd-cafile=/opt/etcd/ssl/ca.pem \\

--etcd-certfile=/opt/etcd/ssl/server.pem \\

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

#Generate the systemd unit file that starts the API server

cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl restart kube-apiserver
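The `\\` and `\$` sequences in the script above rely on how an unquoted heredoc is processed: `${VAR}` is expanded when the file is written, `\\` comes out as a single backslash, and `\$VAR` stays literal so that systemd can expand it later. A small sketch of that behaviour, using a hypothetical variable `DEMO_ADDRESS` and a scratch file rather than the real paths:

```shell
#!/bin/sh
# Hypothetical value standing in for the MASTER_ADDRESS argument.
DEMO_ADDRESS=192.168.48.152
demo_file=$(mktemp)

# Unquoted EOF delimiter: ${DEMO_ADDRESS} is expanded now,
# "\\" is written as "\", and "\$DEMO_OPTS" is written as "$DEMO_OPTS".
cat <<EOF >"$demo_file"
DEMO_OPTS="--bind-address=${DEMO_ADDRESS} \\
--secure-port=6443"
ExecStart=/bin/demo \$DEMO_OPTS
EOF

cat "$demo_file"
```

The file ends up with `--bind-address=192.168.48.152 \` on its first line and `ExecStart=/bin/demo $DEMO_OPTS` on its last, which is the shape the generated kube-apiserver files need.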

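7. Run the script with the master IP address and the etcd endpoints, then check that the process started and which ports it listens on

[root@localhost k8s]# bash apiserver.sh 192.168.48.152 https://192.168.48.152:2379,https://192.168.48.148:2379,https://192.168.48.138:2379

[root@localhost k8s]# ps aux | grep kube

[root@localhost k8s]# netstat -natp | grep 6443   # secure HTTPS port

[root@localhost k8s]# netstat -natp | grep 8080   # local insecure HTTP port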

8. Configure the scheduler.sh script

[root@localhost k8s]# vim scheduler.sh

#!/bin/bash

MASTER_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-scheduler

KUBE_SCHEDULER_OPTS="--logtostderr=true \\

--v=4 \\

--master=${MASTER_ADDRESS}:8080 \\

--leader-elect"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler

ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl restart kube-scheduler

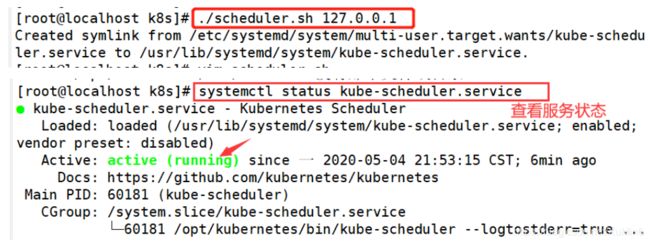

9. Start the scheduler and controller-manager services

[root@localhost k8s]# ./scheduler.sh 127.0.0.1   # start the scheduler service

[root@localhost k8s]# systemctl status kube-scheduler.service   # check the service status

[root@localhost k8s]# ./controller-manager.sh 127.0.0.1   # start the controller-manager service

[root@localhost k8s]# /opt/kubernetes/bin/kubectl get cs   # check the health of the cluster components

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}
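The health check above is read by eye; in a script it may be more convenient to turn it into an exit status. A minimal sketch under stated assumptions: `all_healthy` is a hypothetical helper, and it is fed the sample text from the output above rather than a live `kubectl get cs`.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get cs` style output on stdin and
# fail if any component's STATUS column is not "Healthy".
all_healthy() {
    awk 'NR > 1 && NF > 0 && $2 != "Healthy" { bad = 1 } END { exit bad + 0 }'
}

# Sample text copied from the output above. On a live master this would be:
#   kubectl get cs | all_healthy
sample='NAME STATUS MESSAGE ERROR
scheduler Healthy ok
controller-manager Healthy ok
etcd-2 Healthy {"health":"true"}
etcd-1 Healthy {"health":"true"}
etcd-0 Healthy {"health":"true"}'

if printf '%s\n' "$sample" | all_healthy; then
    echo "all components healthy"
else
    echo "at least one component is unhealthy" >&2
fi
```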

Deploy kubeconfig

(On the master node)

1. Copy kubelet and kube-proxy from the master node to the nodes

[root@localhost k8s]# cd kubernetes/server/bin/

[root@localhost bin]# scp kubelet kube-proxy root@192.168.48.138:/opt/kubernetes/bin/

[root@localhost bin]# scp kubelet kube-proxy root@192.168.48.148:/opt/kubernetes/bin/

(On the node)

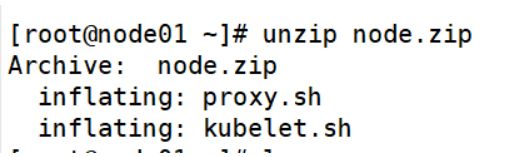

2. Upload the node archive and extract it

[root@node01 ~]# unzip node.zip

(On the master node)

3. Write the kubeconfig script

[root@localhost k8s]# mkdir kubeconfig

[root@localhost k8s]# cd kubeconfig/

[root@localhost kubeconfig]# cat /opt/kubernetes/cfg/token.csv   # look up the token

b8e73230e624f7dfd19589f3e96761ef,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

[root@localhost kubeconfig]# vim kubeconfig

#IP address of the master node

APISERVER=$1

#Path to the k8s certificates

SSL_DIR=$2

# Create the kubelet bootstrapping kubeconfig

export KUBE_APISERVER="https://$APISERVER:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=$SSL_DIR/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters

# Replace the token below with the one from your own token.csv

kubectl config set-credentials kubelet-bootstrap \

--token=b8e73230e624f7dfd19589f3e96761ef \

--kubeconfig=bootstrap.kubeconfig

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

# Create the kube-proxy kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=$SSL_DIR/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=$SSL_DIR/kube-proxy.pem \

--client-key=$SSL_DIR/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
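The script above reads the API server address and the certificate directory as two separate positional arguments and carries a hand-pasted token. A guard at the top and a token lookup could make both less error-prone. This is only a sketch: `check_args` and `read_token` are hypothetical helpers, and the demo uses scratch files in place of /root/k8s/k8s-cert and /opt/kubernetes/cfg/token.csv.

```shell
#!/bin/sh
# Hypothetical guard: require exactly two arguments and the certificate
# files that the kubeconfig script reads from SSL_DIR.
check_args() {
    if [ "$#" -ne 2 ]; then
        echo "usage: kubeconfig <apiserver-ip> <ssl-dir>" >&2
        return 1
    fi
    for f in ca.pem kube-proxy.pem kube-proxy-key.pem; do
        if [ ! -f "$2/$f" ]; then
            echo "missing certificate: $2/$f" >&2
            return 1
        fi
    done
}

# Hypothetical helper: take the token from the first field of token.csv
# instead of pasting it into the script by hand.
read_token() {
    cut -d, -f1 "$1" | head -n 1
}

# Demo with scratch files (assumption: placeholders for the real paths).
demo_dir=$(mktemp -d)
: > "$demo_dir/ca.pem"
: > "$demo_dir/kube-proxy.pem"
: > "$demo_dir/kube-proxy-key.pem"
echo 'b8e73230e624f7dfd19589f3e96761ef,kubelet-bootstrap,10001,"system:kubelet-bootstrap"' > "$demo_dir/token.csv"

check_args 192.168.48.152 "$demo_dir" && echo "token: $(read_token "$demo_dir/token.csv")"
```

Inside the real script, `check_args "$@" || exit 1` as the first line would stop the run when the two arguments are accidentally fused into one.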

4. Add the binaries to PATH and check the cluster health

[root@localhost kubeconfig]# export PATH=$PATH:/opt/kubernetes/bin/

[root@localhost kubeconfig]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

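5. Run the kubeconfig script to generate the configuration files, passing the master IP address and the certificate directory as two separate arguments

[root@localhost kubeconfig]# bash kubeconfig 192.168.48.152 /root/k8s/k8s-cert/

[root@localhost kubeconfig]# ls

bootstrap.kubeconfig kubeconfig kube-proxy.kubeconfig   # two configuration files are generated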

6. Copy the two generated configuration files to the nodes

[root@localhost kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.48.148:/opt/kubernetes/cfg/

[root@localhost kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.48.138:/opt/kubernetes/cfg/

7. Create the bootstrap cluster role binding to grant the kubelet-bootstrap user permission to request certificates

[root@localhost kubeconfig]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

(On node01)

1. Write the kubelet.sh script

[root@node01 ~]# vim kubelet.sh

#!/bin/bash

NODE_ADDRESS=$1

DNS_SERVER_IP=${2:-"10.0.0.2"}

cat <<EOF >/opt/kubernetes/cfg/kubelet

KUBELET_OPTS="--logtostderr=true \\

--v=4 \\

--hostname-override=${NODE_ADDRESS} \\

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\

--config=/opt/kubernetes/cfg/kubelet.config \\

--cert-dir=/opt/kubernetes/ssl \\

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

EOF

cat <<EOF >/opt/kubernetes/cfg/kubelet.config

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: ${NODE_ADDRESS}

port: 10250

readOnlyPort: 10255

cgroupDriver: cgroupfs

clusterDNS:

- ${DNS_SERVER_IP}

clusterDomain: cluster.local.

failSwapOn: false

authentication:

  anonymous:

    enabled: true

EOF

cat <<EOF >/usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet

ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet

[root@node01 ~]# chmod +x /root/kubelet.sh   # make the script executable
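The `DNS_SERVER_IP=${2:-"10.0.0.2"}` line in kubelet.sh uses a default-value expansion: the second argument is used when it is given and non-empty, otherwise 10.0.0.2, which lies inside the 10.0.0.0/24 service range configured for the API server. A short sketch of that behaviour, where `pick_dns` is a hypothetical wrapper and 10.0.0.10 is only an illustrative alternative address:

```shell
#!/bin/sh
# Mirrors the DNS_SERVER_IP line of kubelet.sh: fall back to 10.0.0.2
# when no second argument is supplied.
pick_dns() {
    dns=${2:-"10.0.0.2"}
    echo "$dns"
}

pick_dns 192.168.48.148             # prints 10.0.0.2
pick_dns 192.168.48.148 10.0.0.10   # prints 10.0.0.10
```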

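2. Run the script with the local address of node01 to start kubelet, which sends a certificate request to the master

[root@node01 ~]# bash kubelet.sh 192.168.48.148

The request from node01 can then be checked on the master: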

[root@localhost kubeconfig]# kubectl get csr

3. Approve the request so that the certificate is issued

[root@localhost kubeconfig]# kubectl certificate approve node-csr-M9Iv_6cKuEvLCzSvoKJGeMhHOaK1S9FnRs6SGIX6hY8

[root@localhost kubeconfig]# kubectl get csr   # check again; the request is now approved
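Copying the long CSR name by hand is easy to get wrong. The pending names can instead be pulled out of the `kubectl get csr` listing. This is a sketch only: `pending_csrs` is a hypothetical helper, and the sample listing is illustrative (the article does not show this output); the first name is the one from the step above and the second row is made up.

```shell
#!/bin/sh
# Hypothetical helper: print the NAME of every request whose last column
# (CONDITION) is exactly "Pending".
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Illustrative sample, not captured from the article's cluster.
sample_csr='NAME AGE REQUESTOR CONDITION
node-csr-M9Iv_6cKuEvLCzSvoKJGeMhHOaK1S9FnRs6SGIX6hY8 10s kubelet-bootstrap Pending
node-csr-example-already-done 5m kubelet-bootstrap Approved,Issued'

# On a live master the input would come from: kubectl get csr | pending_csrs
printf '%s\n' "$sample_csr" | pending_csrs
```

Each printed name can then be passed to `kubectl certificate approve` after reviewing that the request really comes from an expected node.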

4. List the cluster nodes

[root@localhost kubeconfig]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.48.148 Ready <none> 20s v1.12.3

5. Configure the proxy.sh script

[root@node01 ~]# vim proxy.sh

#!/bin/bash

NODE_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-proxy

KUBE_PROXY_OPTS="--logtostderr=true \\

--v=4 \\

--hostname-override=${NODE_ADDRESS} \\

--cluster-cidr=10.0.0.0/24 \\

--proxy-mode=ipvs \\

--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy

ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-proxy

systemctl restart kube-proxy

[root@node01 ~]# chmod +x /root/proxy.sh

6. Start the proxy service with the local address of node01 and check that its status is normal

[root@node01 ~]# bash proxy.sh 192.168.48.148

[root@node01 ~]# systemctl status kube-proxy.service

(Configure node02)

1. Copy the /opt/kubernetes directory from node01 to node02, then copy over the kubelet and kube-proxy service files

[root@node01 ~]# scp -r /opt/kubernetes/ root@192.168.48.138:/opt/

[root@node01 ~]# scp /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.48.138:/usr/lib/systemd/system/

2. Update the IP address in the kubelet, kubelet.config, and kube-proxy configuration files

[root@node02 ~]# cd /opt/kubernetes/ssl/

[root@node02 ssl]# ls

kubelet-client-2020-04-30-11-48-13.pem kubelet.crt

kubelet-client-current.pem kubelet.key

[root@node02 ssl]# rm -rf *   # delete all copied certificate files first; node02 will request its own certificates

[root@node02 ssl]# cd /opt/kubernetes/cfg/

[root@node02 cfg]# vim kubelet

--v=4 \

--hostname-override=192.168.48.138 \ 'change this to the local address of node02'

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \

3. Edit the kubelet.config configuration file

[root@node02 cfg]# vim kubelet.config

apiVersion: kubelet.config.k8s.io/v1beta1

address: 192.168.48.138   # change this to the local address of node02

port: 10250

4. Edit the kube-proxy configuration file

[root@node02 cfg]# vim kube-proxy

--v=4 \

--hostname-override=192.168.48.138 \ 'change this to the local address of node02'

--cluster-cidr=10.0.0.0/24 \

--proxy-mode=ipvs \
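The three manual edits above all swap the address of node01 (192.168.48.148) for that of node02 (192.168.48.138), so they could also be scripted. A minimal sketch, not the article's method: `replace_ip` is a hypothetical helper, and the demo works on scratch copies instead of the files under /opt/kubernetes/cfg.

```shell
#!/bin/sh
# Hypothetical helper: replace OLD_IP with NEW_IP in each listed file.
# It writes a temporary copy and moves it into place, so it does not
# depend on the GNU-only `sed -i` option.
replace_ip() {
    old=$1
    new=$2
    shift 2
    # Escape the dots so they match literally in the sed pattern.
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    for f in "$@"; do
        sed "s/$old_re/$new/g" "$f" > "$f.tmp" && mv "$f.tmp" "$f"
    done
}

# Demo on scratch files that imitate two of the copied configs.
demo_dir=$(mktemp -d)
printf '%s\n' '--hostname-override=192.168.48.148 \' > "$demo_dir/kubelet"
printf '%s\n' 'address: 192.168.48.148' > "$demo_dir/kubelet.config"

replace_ip 192.168.48.148 192.168.48.138 "$demo_dir/kubelet" "$demo_dir/kubelet.config"
cat "$demo_dir/kubelet" "$demo_dir/kubelet.config"
```

On node02 the same call would list kubelet, kubelet.config, and kube-proxy in /opt/kubernetes/cfg; note that moving a new file into place may reset the ownership and permissions of the original.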

5. Start the kubelet and kube-proxy services on node02 and enable them at boot

[root@node02 cfg]# systemctl start kubelet.service

[root@node02 cfg]# systemctl enable kubelet.service

[root@node02 cfg]# systemctl start kube-proxy.service

[root@node02 cfg]# systemctl enable kube-proxy.service

6. On the master, view the request from node02 and approve it so the node joins the cluster

[root@localhost kubeconfig]# kubectl get csr

[root@localhost kubeconfig]# kubectl certificate approve node-csr-DcOTtu2KEty9zNGCxLq4Hw8E0EQCq7gdLHvph41lqC0x

7. Check the cluster status

[root@localhost kubeconfig]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.48.148 Ready <none> 9m56s v1.12.3

192.168.48.138 Ready <none> 22s v1.12.3

The single-master deployment is now complete.

![image 6](http://img.e-com-net.com/image/info8/22bee56f610741459a964b2164d95c92.jpg)