Underactuated Robotics - MIT OpenCourseWare - MIT 6.832: Underactuated Robotics (Translation)

Course link: http://underactuated.csail.mit.edu/Spring2019/

- http://underactuated.csail.mit.edu/underactuated.html

Underactuated Robotics

Algorithms for Walking, Running, Swimming, Flying, and Manipulation

Spring 2019

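Course Description

Robots today move far too conservatively, using control systems that attempt to maintain full control authority at all times. Humans and animals move much more aggressively by routinely executing motions that involve a loss of instantaneous control authority. Controlling nonlinear systems without complete control authority requires methods that can reason about and exploit the natural dynamics of our machines.

This course introduces nonlinear dynamics and control of underactuated mechanical systems, with an emphasis on computational methods. Topics include the nonlinear dynamics of robotic manipulators, applied optimal and robust control, and motion planning. Discussions include examples from biology and applications to legged locomotion, compliant manipulation, underwater robots, and flying machines.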

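Textbook, Assignments, and Videos

The textbook, assignments, and lecture videos will all be open and available online:

Textbook (link)

The textbook will be updated throughout the term. Please see the schedule for how the textbook sections correspond to the lectures.

Assignments (will be posted as they are assigned)

- Problem Set 1

- Part A: Installing the software

- Part B: Written component

- Problem Set 2

- Complete assignment and instructions

- Problem Set 3

- Complete assignment and instructions

- Problem Set 4

- Complete assignment and instructions

- Problem Set 5

- Complete assignment and instructions

Links to the assignments and their due dates will be posted on the schedule.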

Drake (Environment Setup)

The Drake website is the main source for information and documentation. The goal of this chapter is to provide a few specific pointers and recommended workflows to get you up and running quickly with the examples provided in these notes.

NOTE: If you are taking the MIT class, we have provided a Docker workflow for you to make sure you are kept up to date week by week. Do NOT use the instructions below. Follow these instructions instead.

First, pick your platform (click on your OS):

Ubuntu Linux (Bionic) | Mac Homebrew

Download the textbook supplement

git clone https://github.com/RussTedrake/underactuated.git

and install the prerequisites using the platform-specific installation script provided:

sudo underactuated/scripts/setup/ubuntu/18.04/install_prereqs

export PYTHONPATH=`pwd`/underactuated/src:${PYTHONPATH}

Install Drake

Although I hope that power users will build Drake from source (and contribute back), I recommend using the precompiled binaries to get started. The links below indicate the specific distributions that the examples in this text have been tested against.

Download the binaries

curl -o drake.tar.gz https://drake-packages.csail.mit.edu/drake/continuous/drake-latest-bionic.tar.gz

Unpack and set your PYTHONPATH and Test

sudo tar -xvzf drake.tar.gz -C /opt

sudo /opt/drake/share/drake/setup/install_prereqs

export PYTHONPATH=/opt/drake/lib/python3.6/site-packages:${PYTHONPATH}

python3 -c 'import pydrake; print(pydrake.__file__)'

See Also

Further tips and instructions are available at the python documentation page on the drake website.
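If the one-line test above fails, a slightly more defensive check can help show whether the problem is simply that Python cannot find the package. The following is a minimal sketch, not part of the course materials; the helper name `pydrake_available` is hypothetical, and it only tests whether a top-level package named `pydrake` is discoverable on the current path.

```python
import importlib.util


def pydrake_available():
    """Return True if Python can locate a top-level package named pydrake."""
    return importlib.util.find_spec("pydrake") is not None


if pydrake_available():
    import pydrake
    print("pydrake found at", pydrake.__file__)
else:
    # Most likely PYTHONPATH does not include Drake's site-packages directory.
    print("pydrake not found; check your PYTHONPATH")
```

Run it with the same `python3` interpreter and the same shell (so the same `PYTHONPATH`) that you intend to use for the examples.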

Run an example

Note: All of the examples in the textbook supplement currently assume that the working directory of your python interpreter is the underactuated/src directory.

python double_pendulum/simulate.py

Consider Jupyter Notebook

The examples you'll find throughout the text are provided so that they can be run in any python interpreter. However, many people love the Jupyter Notebook workflow, and the code should work for you there, as well.

Here is an example that shows you how to tell matplotlib to perform well in the notebook (including with animations).

jupyter notebook src/double_pendulum/simulate.ipynb

The next chapter in the Appendix provides a tutorial for working with dynamical systems in Drake.

Listing of Drake examples throughout this text

The best overview we have for the remaining capabilities in Drake are the main chapters of these notes. For convenience, here is a list of software examples that are sprinkled throughout the text:

- Chapter 1: Fully-actuated vs Underactuated Systems

- Robot Manipulators

- Feedback-Cancellation Double Pendulum

- Chapter 2: The Simple Pendulum

- Nonlinear dynamics with a constant torque

- Simple Pendulum in Python

- Chapter 3: Acrobots, Cart-Poles, and Quadrotors

- The Acrobot in Python

- The Cart-Pole System in Python

- LQR for the Acrobot and Cart-Pole

- LQR for Quadrotors

- Energy Shaping for the Pendulum

- Differential flatness for the Planar Quadrotor

- Chapter 4: Simple Models of Walking and Running

- Numerical simulation of the rimless wheel

- Numerical simulation of the compass gait

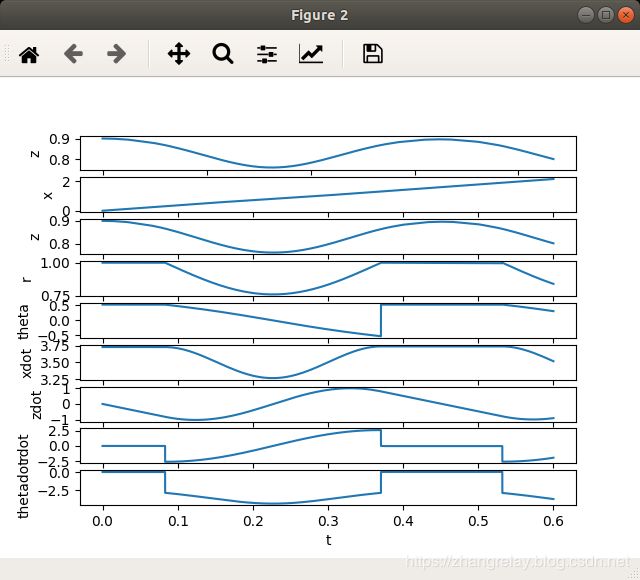

- Simulation of the SLIP model

- Chapter 8: Dynamic Programming

- Grid World

- Dynamic Programming for the Double Integrator

- Dynamic Programming for the Simple Pendulum

- Chapter 10: Lyapunov Analysis

- Common Lyapunov analysis for linear systems

- Global minimization via SOS

- Verifying a Lyapunov candidate via SOS

- Searching for a Lyapunov function via SOS

- Region of attraction for the one-dimensional cubic system

- Searching for the region of attraction

- Region of attraction for the time-reversed Van der Pol oscillator

- Global stability of the simple pendulum via SOS

- Chapter 11: Trajectory Optimization

- Direct Collocation for the Pendulum, Acrobot, and Cart-Pole

- Chapter 12: Motion Planning as Search

- Planning with a Random Tree

- Chapter 16: Algorithms for Limit Cycles

- Finding the limit cycle of the Van der Pol oscillator

- Appendix B: Modeling Input-Output Dynamical Systems in Drake

- A simple continuous-time system

- A simple discrete-time system

- Simulating and plotting the results

- DiagramBuilder

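Frequently Asked Questions

I have no robotics experience. Is it OK if I take the class?

Yes. The course is designed so that it does not assume any prior robotics experience. If you do have extensive robotics experience, that is great too.

How will the course compare to previous versions (e.g., 2009, 2015, 2018)?

The 2019 version of the course will cover an expanded selection of topics, including more material on robot arms, reinforcement learning, and sensing (output feedback).

The assignments for the class will also be almost entirely overhauled: the programming assignments will now use Python, and will use Drake through its pydrake interface.

What will the assignments be like?

Weekly assignments will consist of a mix of handwritten math and programming.

The programming assignments will use Python and Drake, a toolbox for robot planning, control, and analysis. Drake was developed by the Robot Locomotion Group, and its development is now led by the Toyota Research Institute.

All assignments (both handwritten and programming components) will be graded using Gradescope.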

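I'm not sure whether I meet the prerequisites. What should I do?

The best way to determine whether you are ready for the class is to look at some of the lectures from previous years (e.g., 2009, 2015, 2018). If you are excited and think you can handle it, you probably can. If you would like feedback on your preparation, you can always contact the course TAs ([email protected]). Also, see the next question.

What can I do to best prepare myself for success in the class?

The course takes a rigorous mathematical approach to robotics. The only required prerequisites are basic familiarity with linear algebra and differential equations. If you work hard, we can teach you the rest.

However, if you want to be as prepared as possible, here is what the TAs recommend: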

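- Linear algebra:

- A strong, intuitive understanding of linear algebra will help you greatly in this course. If you don't remember what the rank of a matrix is, or how to compute a singular value decomposition, we recommend that you review your linear algebra. The whole world of robotics is rich with linear algebra - you will not regret investing the time to master the basics!

- To learn linear algebra, the content and video lectures of Gilbert Strang's classic course MIT 18.06 have helped many students in the past.

- Another great resource is Sheldon Axler's textbook Linear Algebra Done Right.

- Differential equations (ODEs)

- While a deep understanding of differential equations will only help you, if you have not taken a full differential equations course before, you will probably be fine with just an understanding of the basics.

- The differential equations content on Khan Academy should be enough to get you going in the class; you can learn the rest as you go.

- Nonlinear dynamics

- Although it is not a prerequisite, an excellent textbook on nonlinear dynamics is Steven Strogatz's Nonlinear Dynamics and Chaos.

- Programming in Python

- If you are somewhat familiar with Python but would like a refresher on the syntax, this tutorial from Stanford CS231n provides a good overview.

- If you are an absolute beginner in Python, Codecademy offers a friendly introduction.

- Although you do not need to know C++ to get an A in the class, the underlying software used for some of the assignments is written in C++.

- Mathematical optimization

- We will teach you what you need to know about optimization, but having background in the topic will help deepen your understanding. If you know the following, you are all set: LP, QP, QCQP, MI-variants, SDP.

- We recommend Stephen Boyd's Convex Optimization video lectures, as well as the reference textbook Convex Optimization by Boyd and Vandenberghe.

- Reading up on SOS (sums-of-squares) optimization will also help (but we will teach you this).

- Machine learning

- A basic background in machine learning can only help. There are many great introductory courses online, Andrew Ng's being one of them.

- Robotics

- You do not need any prior exposure to robotics to succeed in the class. If you would like to start absorbing the basics (frame transformations, manipulator equations, etc.), then John Craig's Introduction to Robotics: Mechanics and Control is a good reference.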

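Underactuated Robotics

Preface

This book is about building robots that move with speed, efficiency, and grace. I believe that this can only be achieved through a tight coupling between mechanical design, passive dynamics, and nonlinear control synthesis. Therefore, these notes contain selected material from dynamical systems theory, as well as linear and nonlinear control.

These notes also reflect a deep belief in computational algorithms playing an essential role in finding and optimizing solutions to complex dynamics and control problems. Algorithms play an increasingly central role in modern control theory; these days even rigorous mathematicians consider finding convexity in a problem (therefore making it amenable to an efficient computational solution) almost tantamount to an analytical result. Therefore, the notes necessarily also cover selected material from optimization theory, motion planning, and machine learning.

Although the material in the book comes from many sources, the presentation is targeted very specifically at a handful of robotics problems. Concepts are introduced only when and if they can help progress the capabilities we are trying to develop. Many of the disciplines that I am drawing from are traditionally very rigorous, to the point where the basic ideas can be hard to penetrate for someone who is new to the field. I've made a conscious effort in these notes to keep a very informal, conversational tone even when introducing these rigorous topics, and to reference the most powerful theorems but only to prove them when that proof would add particular insights without distracting from the mainstream presentation.

Organization

The material in these notes is organized into a few main parts. "Model Systems" introduces a series of increasingly complex dynamical systems and overviews some of the relevant results from the literature for each system. "Nonlinear Planning and Control" introduces quite general computational algorithms for reasoning about those dynamical systems, with optimization theory playing a central role. Many of these algorithms treat the dynamical system as known and deterministic until the last chapters in this part, which introduce stochasticity and robustness. "Estimation and Learning" follows this up with techniques from statistics and machine learning which capitalize on this viewpoint to introduce additional algorithms that can operate with fewer assumptions about knowing the model or having perfect sensors. The book closes with an "Appendix" that provides slightly more introduction (and references) for the main topics used in the course.

The order of the chapters was chosen to make the book valuable as a reference. When teaching the course, however, I take a spiral trajectory through the material, introducing robot dynamics and control problems one at a time, and introducing only the techniques that are required to solve that particular problem.

Software

All of the examples and algorithms in this book, plus many more, are now available as a part of our open-source software project: Drake. Drake is a C++ project, but in this text we will use Drake's Python bindings. I encourage super-users or readers who want to dig deeper to explore the C++ code as well (and to contribute back).

Please see the appendix for specific instructions for using Drake along with these notes.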

Table of Contents

Model Systems

Nonlinear Planning and Control

Estimation and Learning

Appendix

- Preface

- Chapter 1: Fully-actuated vs Underactuated Systems

- Motivation

- Honda's ASIMO vs. passive dynamic walkers

- Birds vs. modern aircraft

- Manipulation

- The common theme

- Definitions

- Feedback Linearization

- Input and State Constraints

- Nonholonomic constraints

- Underactuated robotics

- Goals for the course

- Chapter 2: The Simple Pendulum

- Introduction

- Nonlinear dynamics with a constant torque

- The overdamped pendulum

- The undamped pendulum with zero torque

- The undamped pendulum with a constant torque

- The damped pendulum

- The torque-limited simple pendulum

- Chapter 3: Acrobots, Cart-Poles, and Quadrotors

- The Acrobot

- Equations of motion

- The Cart-Pole system

- Equations of motion

- Quadrotors

- The Planar Quadrotor

- The Full 3D Quadrotor

- Balancing

- Linearizing the manipulator equations

- Controllability of linear systems

- LQR feedback

- Partial feedback linearization

- PFL for the Cart-Pole System

- General form

- Swing-up control

- Energy shaping

- Simple pendulum

- Cart-Pole

- Acrobot

- Discussion

- Differential Flatness

- Other model systems

- Chapter 4: Simple Models of Walking and Running

- Limit Cycles

- Poincaré Maps

- Simple Models of Walking

- The Ballistic Walker

- The Rimless Wheel

- The Compass Gait

- The Kneed Walker

- Simple Models of Running

- The Spring-Loaded Inverted Pendulum

- The Planar Monopod Hopper

- A Simple Model That Can Walk and Run

- Chapter 5: Highly-articulated Legged Robots

- Center of Mass Dynamics

- A hovercraft model

- Robots with (massless) legs

- Capturing the full robot dynamics

- Impact dynamics

- The special case of flat terrain

- An aside: the zero-moment point derivation

- ZMP planning

- From a CoM plan to a whole-body plan

- Whole-Body Control

- Footstep planning and push recovery

- Beyond ZMP planning

- Chapter 6: Manipulation

- Chapter 7: Model Systems with Stochasticity

- The Master Equation

- Stationary Distributions

- Extended Example: The Rimless Wheel on Rough Terrain

- Noise models for real robots/systems.

- Analysis (a preview)

- Control Design (a preview)

- Chapter 8: Dynamic Programming

- Formulating control design as an optimization

- Additive cost

- Optimal control as graph search

- Continuous dynamic programming

- The Hamilton-Jacobi-Bellman Equation

- Solving for the minimizing control

- Numerical solutions for $J^*$

- Stochastic Optimal Control

- Graph search -- stochastic shortest path problems

- Stochastic interpretation of deterministic, continuous-state value iteration

- Extensions

- Linear Programming Approach

- Chapter 9: Linear Quadratic Regulators

- Basic Derivation

- Local stabilization of nonlinear systems

- Finite-horizon formulations

- Finite-horizon LQR

- Time-varying LQR

- Linear Quadratic Optimal Tracking

- Linear Final Boundary Value Problems

- Chapter 10: Lyapunov Analysis

- Lyapunov Functions

- Global Stability

- LaSalle's Invariance Principle

- Relationship to the Hamilton-Jacobi-Bellman equations

- Lyapunov analysis with convex optimization

- Lyapunov analysis for linear systems

- Lyapunov analysis as a semi-definite program (SDP)

- Sums-of-squares optimization

- Lyapunov analysis for polynomial systems

- Lyapunov functions for estimating regions of attraction

- Robustness analysis using "common Lyapunov functions"

- Region of attraction estimation for polynomial systems

- Rigid-body dynamics are polynomial

- Chapter 11: Trajectory Optimization

- Problem Formulation

- Computational Tools for Nonlinear Optimization

- Trajectory optimization as a nonlinear program

- Direct Transcription

- Direct Collocation

- Shooting Methods

- Discussion

- Pontryagin's Minimum Principle

- Constrained optimization with Lagrange multipliers

- Lagrange multiplier derivation of the adjoint equations

- Necessary conditions for optimality in continuous time

- Trajectory optimization as a convex optimization

- Linear systems with convex linear constraints

- Differential Flatness

- Mixed-integer convex optimization for non-convex constraints

- Local Trajectory Feedback Design

- Model-predictive control

- Time-varying LQR

- Iterative LQR and Differential Dynamic Programming

- Case Study: A glider that can land on a perch like a bird

- Chapter 12: Motion Planning as Search

- Artificial Intelligence as Search

- Randomized motion planning

- Rapidly-Exploring Random Trees (RRTs)

- RRTs for robots with dynamics

- Variations and extensions

- Discussion

- Decomposition methods

- Chapter 13: Feedback Motion Planning

- Chapter 14: Policy Search

- Problem formulation

- Controller parameterizations

- Trajectory-based policy search

- Lyapunov-based approaches to policy search.

- Approximate Dynamic Programming

- Chapter 15: Output Feedback (aka Pixels-to-Torques)

- Static Output Feedback for Linear Systems

- Linear Quadratic Gaussian (LQG)

- Chapter 16: Algorithms for Limit Cycles

- Trajectory optimization

- Lyapunov analysis

- Transverse coordinates

- Transverse linearization

- Region of attraction estimation using sums-of-squares

- Feedback design

- Transverse LQR

- Orbital stabilization for non-periodic trajectories

- Chapter 17: Planning and Control through Contact

- Modeling and Simulating through Contact

- Trajectory Optimization

- Randomized Motion Planning

- Stabilizing a Fixed-Point

- Stabilizing a Trajectory or Limit Cycle

- Chapter 18: Robust and Stochastic Control

- Chapter 19: Planning Under Uncertainty

- Chapter 20: Verification and Validation

- Chapter 21: System Identification

- Chapter 22: State Estimation

- Chapter 23: Model-Free Policy Search

- Policy Gradient Methods

- The Policy Gradient "Trick" (aka REINFORCE)

- Sample efficiency

- Stochastic Gradient Descent

- The Weight Perturbation Algorithm

- Weight Perturbation with an Estimated Baseline

- REINFORCE w/ additive Gaussian noise

- Summary

- Sample performance via the signal-to-noise ratio.

- Performance of Weight Perturbation

- Chapter 24: Model-Free Value Function Methods

- Chapter 25: Actor-Critic Methods

- Bibliography

- Appendix A: Drake

- Download the textbook supplement

- Install Drake

- Download the binaries

- Unpack and set your PYTHONPATH and Test

- See Also

- Run an example

- Consider Jupyter Notebook

- Listing of Drake examples throughout this text

- Appendix B: Modeling Input-Output Dynamical Systems in Drake

- Writing your own dynamics

- Simulation

- The System "Context"

- Combinations of Systems: Diagram and DiagramBuilder

- Appendix C: Rigid-Body Dynamics

- Deriving the equations of motion (an example)

- The Manipulator Equations

- Bilateral Position Constraints

- Bilateral Velocity Constraints

- Contact Models

- Compliant Contact Models

- Rigid Contact with Event Detection

- Time-stepping Approximations for Rigid Contact

- Recursive Dynamics Algorithms

- Parameter Estimation

- Appendix D: Optimization and Mathematical Programming

- Optimization software

- Nonlinear optimization

- General formulation

- Solution techniques

- Example: Inverse Kinematics

- Convex optimization

- Linear Programs/Quadratic Programs/Second-Order Cones

- Semidefinite Programming and Linear Matrix Inequalities

- Sums of squares / polynomial optimization

- Solution techniques

- Mixed-integer convex optimization

- Combinatorial optimization

- Appendix E: An Optimization Playbook

Chapter 1

Fully-actuated vs Underactuated Systems

Robots today move far too conservatively, and accomplish only a fraction of the tasks and achieve a fraction of the performance that they are mechanically capable of. In many cases, we are still fundamentally limited by control technology which matured on rigid robotic arms in structured factory environments. The study of underactuated robotics focuses on building control systems which use the natural dynamics of the machines in an attempt to achieve extraordinary performance in terms of speed, efficiency, or robustness.

Motivation

Let's start with some examples, and some videos.

Honda's ASIMO vs. passive dynamic walkers

The world of robotics changed when, in late 1996, Honda Motor Co. announced that they had been working for nearly 15 years (behind closed doors) on walking robot technology. Their designs have continued to evolve, resulting in a humanoid robot they call ASIMO (Advanced Step in Innovative MObility). For nearly 20 years, Honda's robots were widely considered to represent the state of the art in walking robots, although there are now many robots with designs and performance very similar to ASIMO's. We will dedicate effort to understanding a few of the details of ASIMO when we discuss algorithms for walking... for now I just want you to become familiar with the look and feel of ASIMO's movements [watch the asimo video below now].

I hope that your first reaction is to be incredibly impressed with the quality and versatility of ASIMO's movements. Now take a second look. Although the motions are very smooth, there is something a little unnatural about ASIMO's gait. It feels a little like an astronaut encumbered by a heavy space suit. In fact this is a reasonable analogy... ASIMO is walking a bit like somebody that is unfamiliar with his/her dynamics. Its control system is using high-gain feedback, and therefore considerable joint torque, to cancel out the natural dynamics of the machine and strictly follow a desired trajectory. This control approach comes with a stiff penalty. ASIMO uses roughly 20 times the energy (scaled) that a human uses to walk on the flat (measured by cost of transport)[1]. Also, control stabilization in this approach only works in a relatively small portion of the state space (when the stance foot is flat on the ground), so ASIMO can't move nearly as quickly as a human, and cannot walk on unmodelled or uneven terrain.

For contrast, let's now consider a very different type of walking robot, called a passive dynamic walker (PDW). This "robot" has no motors, no controllers, no computer, but is still capable of walking stably down a small ramp, powered only by gravity [watch videos above now]. Most people will agree that the passive gait of this machine is more natural than ASIMO's; it is certainly more efficient. Passive walking machines have a long history - there are patents for passively walking toys dating back to the mid 1800's. We will discuss, in detail, what people know about the dynamics of these machines and what has been accomplished experimentally. The most impressive passive dynamic walker to date was built by Steve Collins in Andy Ruina's lab at Cornell[2].

Passive walkers demonstrate that the high-gain, dynamics-cancelling feedback approach taken on ASIMO is not a necessary one. In fact, the dynamics of walking is beautiful, and should be exploited - not cancelled out.

The world is just starting to see what this vision could look like. This video from Boston Dynamics is one of my favorites of all time:

This result is a marvel of engineering (the mechanical design alone is amazing...). In this class, we'll teach you the computational tools required to make robots perform this way. We'll also try to reason about how robust these types of maneuvers are and can be. Don't worry, if you do not have a super lightweight, super capable, and super durable humanoid, then a simulation will be provided for you.

Birds vs. modern aircraft

The story is surprisingly similar in a very different type of machine. Modern airplanes are extremely effective for steady-level flight in still air. Propellers produce thrust very efficiently, and today's cambered airfoils are highly optimized for speed and/or efficiency. It would be easy to convince yourself that we have nothing left to learn from birds. But, like ASIMO, these machines are mostly confined to a very conservative, low angle-of-attack flight regime where the aerodynamics on the wing are well understood. Birds routinely execute maneuvers outside of this flight envelope (for instance, when they are landing on a perch), and are considerably more effective than our best aircraft at exploiting energy (e.g., wind) in the air.

As a consequence, birds are extremely efficient flying machines; some are capable of migrating thousands of kilometers with incredibly small fuel supplies. The wandering albatross can fly for hours, or even days, without flapping its wings - these birds exploit the shear layer formed by the wind over the ocean surface in a technique called dynamic soaring. Remarkably, the metabolic cost of flying for these birds is indistinguishable from the baseline metabolic cost[3], suggesting that they can travel incredible distances (upwind or downwind) powered almost completely by gradients in the wind. Other birds achieve efficiency through similarly rich interactions with the air - including formation flying, thermal soaring, and ridge soaring. Small birds and large insects, such as butterflies and locusts, use "gust soaring" to migrate hundreds or even thousands of kilometers carried primarily by the wind.

Birds are also incredibly maneuverable. The roll rate of a highly acrobatic aircraft (e.g., the A-4 Skyhawk) is approximately 720 deg/sec[4]; a barn swallow has a roll rate in excess of 5000 deg/sec[4]. Bats can be flying at full-speed in one direction, and completely reverse direction while maintaining forward speed, all in just over 2 wing-beats and in a distance less than half the wingspan[5]. Although quantitative flow visualization data from maneuvering flight is scarce, a dominant theory is that the ability of these animals to produce sudden, large forces for maneuverability can be attributed to unsteady aerodynamics, e.g., the animal creates a large suction vortex to rapidly change direction[6]. These astonishing capabilities are called upon routinely in maneuvers like flared perching, prey-catching, and high speed flying through forests and caves. Even at high speeds and high turn rates, these animals are capable of incredible agility - bats sometimes capture prey on their wings, Peregrine falcons can pull 25 G's out of a 240 mph dive to catch a sparrow in mid-flight[7], and even the small birds outside our building can be seen diving through a chain-link fence to grab a bite of food.

Although many impressive statistics about avian flight have been recorded, our understanding is partially limited by experimental accessibility - it is quite difficult to carefully measure birds (and the surrounding airflow) during their most impressive maneuvers without disturbing them. The dynamics of a swimming fish are closely related, and can be more convenient to study. Dolphins have been known to swim gracefully through the waves alongside ships moving at 20 knots[6]. Smaller fish, such as the bluegill sunfish, are known to possess an escape response in which they propel themselves to full speed from rest in less than a body length; flow visualizations indeed confirm that this is accomplished by creating a large suction vortex along the side of the body[8] - similar to how bats change direction in less than a body length. There are even observations of a dead fish swimming upstream by pulling energy out of the wake of a cylinder; this passive propulsion is presumably part of the technique used by rainbow trout to swim upstream at mating season[9].

Manipulation

Despite a long history of success in industrial applications, and the huge potential for consumer applications, we still don't have robot arms that can perform any meaningful tasks in the home. Admittedly, the perception problem (using sensors to detect/localize objects and understand the scene) for home robotics is incredibly difficult. But even if we were given a perfect perception system, our robots are still a long way from performing basic object manipulation tasks with the dexterity and versatility of a human.

Most robots that perform object manipulation today use a stereotypical pipeline. First, we enumerate a handful of contact locations on the hand (these points, and only these points, are allowed to contact the world). Then, given a localized object in the environment, we plan a collision-free trajectory for the arm that will move the hand into a "pre-grasp" location. At this point the robot closes its eyes (figuratively) and closes the hand, hoping that the pre-grasp location was good enough that the object will be successfully grasped using, e.g., only current feedback in the fingers to know when to stop closing. "Underactuated hands" make this approach more successful, but the entire approach really only works well for enveloping grasps.

The enveloping grasps approach may actually be sufficient for a number of simple pick-and-place tasks, but it is a very poor representation of how humans do manipulation. When humans manipulate objects, the contact interactions with the object and the world are very rich -- we often use pieces of the environment as fixtures to reduce uncertainty, we commonly exploit slipping behaviors (e.g. for picking things up, or reorienting it in the hand), and our brains don't throw NaNs if we use the entire surface of our arms to e.g. manipulate a large object.

By the way, in most cases, if the robots fail to make contact at the anticipated contact times/locations, bad things can happen. The results are hilarious and depressing at the same time. (Let's fix that!)

The common theme

Classical control techniques for robotics are based on the idea that feedback can be used to override the dynamics of our machines. These examples suggest that to achieve outstanding dynamic performance (efficiency, agility, and robustness) from our robots, we need to understand how to design control systems which take advantage of the dynamics, not cancel them out. That is the topic of this course.

Surprisingly, many formal control ideas do not support the idea of "exploiting" the dynamics. Optimal control formulations (which we will study in depth) allow it in principle, but optimal control of nonlinear systems is still a relatively ad hoc discipline. Sometimes I joke that in order to convince a control theorist to consider the dynamics, you have to do something drastic, like taking away her control authority - remove a motor, or enforce a torque-limit. These issues have created a formal class of systems, the underactuated systems, for which people have begun to more carefully consider the dynamics of their machines in the context of control.

Definitions

According to Newton, the dynamics of mechanical systems are second order ($F = ma$). Their state is given by a vector of positions, $\mathbf{q}$ (also known as the configuration vector), and a vector of velocities, $\dot{\mathbf{q}}$, and (possibly) time. The general form for a second-order controllable dynamical system is:

$$\ddot{\mathbf{q}} = \mathbf{f}(\mathbf{q}, \dot{\mathbf{q}}, \mathbf{u}, t),$$

where $\mathbf{u}$ is the control vector. As we will see, the dynamics for many of the robots that we care about turn out to be affine in commanded torque, so let's consider a slightly constrained form:

$$\ddot{\mathbf{q}} = \mathbf{f}_1(\mathbf{q}, \dot{\mathbf{q}}, t) + \mathbf{f}_2(\mathbf{q}, \dot{\mathbf{q}}, t)\,\mathbf{u}. \tag{1}$$

Fully-Actuated

A control system described by equation 1 is fully-actuated in state $(\mathbf{q}, \dot{\mathbf{q}})$ at time $t$ if it is able to command any instantaneous acceleration in $\mathbf{q}$:

$$\text{rank}\left[\mathbf{f}_2(\mathbf{q}, \dot{\mathbf{q}}, t)\right] = \dim\left[\mathbf{q}\right]. \tag{2}$$

Underactuated

A control system described by equation 1 is underactuated in state $(\mathbf{q}, \dot{\mathbf{q}})$ at time $t$ if it is not able to command an arbitrary instantaneous acceleration in $\mathbf{q}$:

$$\text{rank}\left[\mathbf{f}_2(\mathbf{q}, \dot{\mathbf{q}}, t)\right] < \dim\left[\mathbf{q}\right]. \tag{3}$$

Notice that whether or not a control system is underactuated may depend on the state of the system or even on time, although for most systems (including all of the systems in this book) underactuation is a global property of the system. We will refer to a system as underactuated if it is underactuated in all states and times. In practice, we often refer informally to systems as fully actuated as long as they are fully actuated in most states (e.g., a "fully-actuated" system might still have joint limits or lose rank at a kinematic singularity). Admittedly, this permits the existence of a gray area, where it might feel awkward to describe the system as either fully- or underactuated (we should instead only describe its states); we'll see examples, for instance, of hybrid systems like walking robots that are fully-actuated in some modes but underactuated in others. I will still informally refer to these systems as being underactuated whenever reasoning about the underactuation is useful/necessary for developing a control strategy.

Simple double pendulum

Consider the simple robot manipulator illustrated above. As described in the Appendix, the equations of motion for this system are quite simple to derive, and take the form of the standard "manipulator equations":

$$\mathbf{M}(\mathbf{q})\ddot{\mathbf{q}} + \mathbf{C}(\mathbf{q}, \dot{\mathbf{q}})\dot{\mathbf{q}} = \boldsymbol{\tau}_g(\mathbf{q}) + \mathbf{B}\mathbf{u}.$$

It is well known that the inertia matrix, $\mathbf{M}(\mathbf{q})$, is (always) uniformly symmetric and positive definite, and is therefore invertible. Putting the system into the form of equation 1 yields:

$$\ddot{\mathbf{q}} = \mathbf{M}^{-1}(\mathbf{q})\left[\boldsymbol{\tau}_g(\mathbf{q}) + \mathbf{B}\mathbf{u} - \mathbf{C}(\mathbf{q}, \dot{\mathbf{q}})\dot{\mathbf{q}}\right].$$

Because $\mathbf{M}^{-1}(\mathbf{q})$ is always full rank, we find that a system described by the manipulator equations is fully-actuated if and only if $\mathbf{B}$ is full row rank. For this particular example, $\mathbf{q} = [\theta_1, \theta_2]^T$ and $\mathbf{u} = [\tau_1, \tau_2]^T$ (motor torques at the joints), and $\mathbf{B} = \mathbf{I}_{2 \times 2}$. The system is fully actuated.

I personally learn best when I can experiment and get some physical intuition.
The companion software for the course should make it easy for you to see this system in action. To try it, make sure you've followed the installation instructions in the Appendix, then open Python and try the following lines in your Python console:

It's worth taking a peek at the file that describes the robot. URDF and SDF are two of the standard formats, and they can be used to describe even very complicated robots (like the Boston Dynamics humanoid).

We can also use Drake to evaluate the manipulator equations. Drake actually has a symbolic dynamics engine, but for now we will just evaluate the manipulator equations for a particular robot (with numerical values assigned for mass, link lengths, etc.) and for a particular state of the robot:

While the basic double pendulum is fully actuated, imagine the somewhat bizarre case that we have a motor to provide torque at the elbow, but no motor at the shoulder. In this case, we have $\mathbf{u} = \tau_2$, and $\mathbf{B}(\mathbf{q}) = [0, 1]^T$. This system is clearly underactuated. While it may sound like a contrived example, it turns out that it is almost exactly the dynamics we will use to study as our simplest model of walking later in the class.

The matrix $\mathbf{f}_2$ in equation 1 always has $\dim[\mathbf{q}]$ rows and $\dim[\mathbf{u}]$ columns. Therefore, as in the example, one of the most common cases for underactuation, which trivially implies that $\mathbf{f}_2$ is not full row rank, is $\dim[\mathbf{u}] < \dim[\mathbf{q}]$. This is the case when a robot has joints with no motors. But this is not the only case. The human body, for instance, has an incredible number of actuators (muscles), and in many cases has multiple muscles per joint; despite having more actuators than position variables, when I jump through the air, there is no combination of muscle inputs that can change the ballistic trajectory of my center of mass (barring aerodynamic effects). My control system is underactuated.
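The rank conditions in equations 2 and 3 are easy to check numerically. The sketch below is an illustration only and does not use Drake: it assumes a point-mass double pendulum with unit masses and link lengths for the inertia matrix (the function `inertia_matrix` and its parameters are assumptions made for this example), forms $\mathbf{f}_2 = \mathbf{M}^{-1}\mathbf{B}$, and compares its rank with $\dim[\mathbf{q}]$ for the two choices of $\mathbf{B}$ discussed above.

```python
import numpy as np


def inertia_matrix(q2, m1=1.0, m2=1.0, l1=1.0, l2=1.0):
    """Inertia matrix M(q) of a point-mass double pendulum (assumed model)."""
    c2 = np.cos(q2)
    m12 = m2 * l2**2 + m2 * l1 * l2 * c2
    return np.array([
        [(m1 + m2) * l1**2 + m2 * l2**2 + 2 * m2 * l1 * l2 * c2, m12],
        [m12, m2 * l2**2],
    ])


def is_fully_actuated(f2):
    """Check rank[f2] == dim[q]; f2 has dim[q] rows and dim[u] columns."""
    f2 = np.atleast_2d(f2)
    return bool(np.linalg.matrix_rank(f2) == f2.shape[0])


M = inertia_matrix(q2=0.4)
B_both_motors = np.eye(2)                # torques at the shoulder and the elbow
B_elbow_only = np.array([[0.0], [1.0]])  # torque at the elbow only

# For the manipulator equations, f2 = M^{-1} B.
f2_both = np.linalg.solve(M, B_both_motors)
f2_elbow = np.linalg.solve(M, B_elbow_only)

print(is_fully_actuated(f2_both))   # both joints have motors
print(is_fully_actuated(f2_elbow))  # the shoulder has no motor
```

Because $\mathbf{M}$ is invertible, the rank of $\mathbf{f}_2$ equals the rank of $\mathbf{B}$, so the same check could be applied to $\mathbf{B}$ directly. The check is per state: for a system whose $\mathbf{f}_2$ loses rank only at some configurations, it would need to be repeated at each state of interest.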
For completeness, let's generalize the definition of underactuation to systems beyond the second-order control affine systems. An $n$th-order control differential equation (with $n \ge 2$) described by the equations

$$\frac{d^n \mathbf{q}}{dt^n} = \mathbf{f}\left(\mathbf{q}, \ldots, \frac{d^{n-1}\mathbf{q}}{dt^{n-1}}, t, \mathbf{u}\right) \tag{4}$$

is fully actuated in state $\mathbf{x} = \left(\mathbf{q}, \ldots, \frac{d^{n-1}\mathbf{q}}{dt^{n-1}}\right)$ and time $t$ if the resulting map $\mathbf{f}$ is surjective: for every $\frac{d^n \mathbf{q}}{dt^n}$ there exists a $\mathbf{u}$ which produces the desired response. Otherwise it is underactuated. It is easy to see that equation 3 is a sufficient condition for underactuation. This definition can also be extended to discrete-time systems and/or differential inclusions.

A quick note about notation. When describing the dynamics of rigid-body systems in this class, I will use $\mathbf{q}$ for configurations (positions), $\dot{\mathbf{q}}$ for velocities, and use $\mathbf{x}$ for the full state ($\mathbf{x} = [\mathbf{q}^T, \dot{\mathbf{q}}^T]^T$). There is an important limitation to this convention (3D angular velocity should not be represented as the derivative of 3D pose) described in the Appendix, but it will keep the notes cleaner. Unless otherwise noted, vectors are always treated as column vectors. Vectors and matrices are bold (scalars are not).

Feedback Linearization

Fully-actuated systems are dramatically easier to control than underactuated systems. The key observation is that, for fully-actuated systems with known dynamics (e.g., $\mathbf{f}_1$ and $\mathbf{f}_2$ are known for a second-order control-affine system), it is possible to use feedback to effectively change a nonlinear control problem into a linear control problem. The field of linear control is incredibly advanced, and there are many well-known solutions for controlling linear systems.

The trick is called feedback linearization. When $\mathbf{f}_2$ is full row rank, it is invertible. (If $\mathbf{f}_2$ is not square, for instance because you have multiple actuators per joint, then this inverse may not be unique.)
Consider the nonlinear feedback control:
$$\mathbf{u} = \pi(\mathbf{q}, \dot{\mathbf{q}}, t) = \mathbf{f}_2^{-1}(\mathbf{q}, \dot{\mathbf{q}}, t)\left[\mathbf{u}' - \mathbf{f}_1(\mathbf{q}, \dot{\mathbf{q}}, t)\right],$$
where $\mathbf{u}'$ is the new control input (an input to your controller). Applying this feedback controller to equation 1 results in the linear, decoupled, second-order system:
$$\ddot{\mathbf{q}} = \mathbf{u}'.$$
In other words, if $\mathbf{f}_1$ and $\mathbf{f}_2$ are known and $\mathbf{f}_2$ is invertible, then we say that the system is "feedback equivalent" to $\ddot{\mathbf{q}} = \mathbf{u}'$. There are a number of strong results which generalize this idea to the case where $\mathbf{f}_1$ and $\mathbf{f}_2$ are estimated, rather than known (e.g., [10]).

Let's say that we would like our simple double pendulum to act like a simple single pendulum (with damping), whose dynamics are given by:
$$\ddot\theta_1 = -\frac{g}{l}\sin\theta_1 - b\dot\theta_1, \qquad \ddot\theta_2 = 0.$$
This is easily achieved using
$$\mathbf{u} = \mathbf{B}^{-1}\left[\mathbf{C}\dot{\mathbf{q}} - \tau_g + \mathbf{M}\begin{bmatrix} -\frac{g}{l}s_1 - b\dot{q}_1 \\ 0 \end{bmatrix}\right].$$
(Note that our chosen dynamics do not actually stabilize $\theta_2$; this detail was left out for clarity, but would be necessary for any real implementation.)

Since we are embedding a nonlinear dynamics (not a linear one), we refer to this as "feedback cancellation", or "dynamic inversion". This idea can, and does, make control look easy, but only for the special case of a fully-actuated deterministic system with known dynamics. For example, it would have been just as easy for me to invert gravity. Observe that the control derivations here would not have been any more difficult if the robot had 100 joints.

You can run these examples using the Colab scratchpad. As always, make sure you take a moment to read through the source code (linked above).

Underactuated systems are not feedback linearizable. Therefore, unlike fully-actuated systems, the control designer has no choice but to reason about the nonlinear dynamics of the plant in the control design. This dramatically complicates feedback controller design.
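The feedback-linearization step above can be checked numerically. The sketch below is only a toy: `f1` and `f2` are invented functions for a two-degree-of-freedom control-affine system (they do not describe the double pendulum or any real robot), chosen so that `f2` is square and invertible everywhere. It applies $\mathbf{u} = \mathbf{f}_2^{-1}[\mathbf{u}' - \mathbf{f}_1]$ and confirms that the closed-loop acceleration equals $\mathbf{u}'$.

```python
import numpy as np

def f1(q, qd):
    """Assumed drift term of a toy system (not a real robot model)."""
    return np.array([-np.sin(q[0]) - 0.1 * qd[0],
                     q[0] * qd[1]])

def f2(q, qd):
    """Assumed input matrix; its determinant is at least 0.75 for every q."""
    return np.array([[2.0 + np.cos(q[1]), 0.5],
                     [0.5, 1.0]])

def feedback_linearizing_control(q, qd, u_prime):
    """u = f2^{-1} (u' - f1), computed with a linear solve."""
    return np.linalg.solve(f2(q, qd), u_prime - f1(q, qd))

q = np.array([0.3, -1.2])
qd = np.array([0.7, 0.4])
u_prime = np.array([1.0, -2.0])         # commanded acceleration

u = feedback_linearizing_control(q, qd, u_prime)
qdd = f1(q, qd) + f2(q, qd) @ u         # closed-loop acceleration: f1 + f2 u
print("closed-loop acceleration:", qdd)
print("matches u'?", np.allclose(qdd, u_prime))
```

The cancellation is exact only because the controller uses the same `f1` and `f2` as the plant; with model error, or with an `f2` that is not full row rank, this construction no longer yields $\ddot{\mathbf{q}} = \mathbf{u}'$.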
Although the dynamic constraints due to missing actuators certainly embody the spirit of this course, many of the systems we care about could be subject to other dynamic constraints as well. For example, the actuators on our machines may only be mechanically capable of producing some limited amount of torque, or there may be a physical obstacle in the free space with which we cannot permit our robot to come into contact.

A dynamical system described by $\dot{\mathbf{x}} = \mathbf{f}(\mathbf{x}, \mathbf{u}, t)$ may be subject to one or more constraints described by $\phi(\mathbf{x}, \mathbf{u}, t) \ge 0$.

In practice it can be useful to separate out constraints which depend only on the input, e.g. $\phi(\mathbf{u}) \ge 0$, such as actuator limits, as they can often be easier to handle than state constraints. An obstacle in the environment might manifest itself as one or more constraints that depend only on position, e.g. $\phi(\mathbf{q}) \ge 0$.

By our generalized definition of underactuation, we can see that input constraints can certainly cause a system to be underactuated. State (only) constraints are more subtle -- in general these actually reduce the dimensionality of the state space, therefore requiring fewer dimensions of actuation to achieve "full" control, but we only reap the benefits if we are able to perform the control design in the "minimal coordinates" (which is often difficult).

Consider the constrained second-order linear system
$$\ddot{x} = u, \qquad |u| \le 1.$$
By our definition, this system is underactuated. For example, there is no $u$ which can produce the acceleration $\ddot{x} = 2$.

Input and state constraints can complicate control design in similar ways to having an insufficient number of actuators (i.e., further limiting the set of feasible trajectories), and often require similar tools to find a control solution.

You might have heard of the term "nonholonomic system" (see e.g. [11]), and be thinking about how nonholonomy relates to underactuation.
Briefly, a nonholonomic constraint is a constraint of the form $\phi(\mathbf{q}, \dot{\mathbf{q}}, t) = 0$, which cannot be integrated into a constraint of the form $\phi(\mathbf{q}, t) = 0$ (a holonomic constraint). A nonholonomic constraint does not restrain the possible configurations of the system, but rather the manner in which those configurations can be reached. While a holonomic constraint reduces the number of degrees of freedom of a system by one, a nonholonomic constraint does not. An automobile or traditional wheeled robot provides a canonical example:

Consider a simple model of a wheeled robot whose configuration is described by its Cartesian position $x, y$ and its orientation, $\theta$, so $\mathbf{q} = [x, y, \theta]^T$. The system is subject to a differential constraint that prevents side-slip,
$$\dot{x} = v\cos\theta, \qquad \dot{y} = v\sin\theta, \qquad v = \sqrt{\dot{x}^2 + \dot{y}^2},$$
or equivalently,
$$\dot{y}\cos\theta - \dot{x}\sin\theta = 0.$$
This constraint cannot be integrated into a constraint on configuration (the car can get to any configuration $(x, y, \theta)$, it just can't move directly sideways), so this is a nonholonomic constraint.

Contrast the wheeled robot example with a robot on train tracks. The train tracks correspond to a holonomic constraint: the track constraint can be written directly in terms of the configuration $\mathbf{q}$ of the system, without using the velocity $\dot{\mathbf{q}}$. Even though the track constraint could also be written as a differential constraint on the velocity, it would be possible to integrate this constraint to obtain a constraint on configuration. The track restrains the possible configurations of the system.

A nonholonomic constraint like the no-side-slip constraint on the wheeled vehicle certainly results in an underactuated system. The converse is not necessarily true: the double pendulum system which is missing an actuator is underactuated but would not typically be called a nonholonomic system.
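To make the wheeled-robot constraint concrete, here is a minimal sketch that integrates the model $\dot{x} = v\cos\theta$, $\dot{y} = v\sin\theta$ with forward Euler and evaluates $\dot{y}\cos\theta - \dot{x}\sin\theta$ at every step. The turn-rate input `omega`, the specific input signals, the step size, and the function name `unicycle_velocity` are all assumptions made for illustration; the text above specifies only the no-side-slip constraint.

```python
import math

def unicycle_velocity(theta, v, omega):
    """Velocity of q = [x, y, theta] for forward speed v and turn rate omega."""
    return v * math.cos(theta), v * math.sin(theta), omega

x, y, theta = 0.0, 0.0, 0.0
dt = 0.001
max_residual = 0.0
for k in range(4000):                    # 4 seconds of simulated time
    t = k * dt
    v, omega = 1.0, math.cos(2.0 * t)    # arbitrary (assumed) input signals
    xd, yd, thetad = unicycle_velocity(theta, v, omega)
    # No-side-slip constraint: ydot*cos(theta) - xdot*sin(theta) = 0.
    residual = yd * math.cos(theta) - xd * math.sin(theta)
    max_residual = max(max_residual, abs(residual))
    # Forward-Euler integration step.
    x, y, theta = x + dt * xd, y + dt * yd, theta + dt * thetad

print("largest constraint violation:", max_residual)
print("final configuration:", (x, y, theta))
```

The velocity constraint holds at every instant, yet the robot still ends up displaced in $y$: the constraint limits how configurations are reached, not which ones are reachable.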
Note that the Lagrangian equations of motion are a constraint of the form $\phi(\mathbf{q}, \dot{\mathbf{q}}, \ddot{\mathbf{q}}, t) = 0$, so they do not qualify as a nonholonomic constraint.

The control of underactuated systems is an open and interesting problem in controls. Although there are a number of special cases where underactuated systems have been controlled, there are relatively few general principles. Now here's the rub... most of the interesting problems in robotics are underactuated.

Even control systems for fully-actuated and otherwise unconstrained systems can be improved using the lessons from underactuated systems, particularly if there is a need to increase the efficiency of their motions or reduce the complexity of their designs.

This course is based on the observation that there are new computational tools from optimization theory, control theory, motion planning, and even machine learning which can be used to design feedback control for underactuated systems. The goal of this class is to develop these tools in order to design robots that are more dynamic and more agile than the current state-of-the-art.

The target audience for the class includes both computer science and mechanical/aero students pursuing research in robotics. Although I assume a comfort with linear algebra, ODEs, and Python, the course notes aim to provide most of the material and references required for the course.

I have a confession: I actually think that the material we'll cover in these notes is valuable far beyond robotics. I think that systems theory provides a powerful language for organizing computation in exceedingly complex systems -- especially when one is trying to program and/or analyze systems with continuous variables in a feedback loop (which happens throughout computer science and engineering, by the way). I hope you find these tools to be broadly useful, even if you don't have a humanoid robot capable of performing a backflip at your immediate disposal.
Chapter 2

Our goals for this chapter are modest: we'd like to understand the dynamics of a pendulum. Why a pendulum? In part, because the dynamics of a majority of our multi-link robotics manipulators are simply the dynamics of a large number of coupled pendula. Also, the dynamics of a single pendulum are rich enough to introduce most of the concepts from nonlinear dynamics that we will use in this text, but tractable enough for us to (mostly) understand in the next few pages.

[Figure: The simple pendulum]

The Lagrangian derivation of the equations of motion (as described in the appendix) of the simple pendulum yields:
$$ml^2\ddot\theta(t) + mgl\sin\theta(t) = Q.$$
We'll consider the case where the generalized force, $Q$, models a damping torque (from friction) plus a control torque input, $u(t)$:
$$Q = -b\dot\theta(t) + u(t).$$

Let us first consider the dynamics of the pendulum if it is driven in a particular simple way: a torque which does not vary with time:
$$ml^2\ddot\theta + b\dot\theta + mgl\sin\theta = u_0. \tag{1}$$
You can experiment with this system in Drake using the Colab scratchpad (use the slider at the top to adjust the torque input).

These are relatively simple differential equations, so if I give you $\theta(0)$ and $\dot\theta(0)$, then you should be able to integrate them to obtain $\theta(t)$... right? Although it is possible, integrating even the simplest case ($b = u = 0$) involves elliptic integrals of the first kind; there is relatively little intuition to be gained here.

This is in stark contrast to the case of linear systems, where much of our understanding comes from being able to explicitly integrate the equations. For instance, for a simple linear system we have
$$\dot{q} = aq \quad \rightarrow \quad q(t) = q(0)e^{at},$$
and we can immediately understand that the long-term behavior of the system is a (stable) decaying exponential if $a < 0$, an (unstable) growing exponential if $a > 0$, and that the system does nothing if $a = 0$.
Here we are with certainly one of the simplest nonlinear systems we can imagine, and we can't even solve this system?

All is not lost. If what we care about is the long-term behavior of the system, then there are a number of techniques we can apply. In this chapter, we will start by investigating graphical solution methods. These methods are described beautifully in a book by Steve Strogatz [12].

Let's start by studying a special case -- intuitively when $b\dot\theta \gg ml^2\ddot\theta$ -- which via dimensional analysis (using the natural frequency $\sqrt{g/l}$ to match units) occurs when $b\sqrt{l/g} \gg ml^2$. This is the case of heavy damping, for instance if the pendulum was moving in molasses. In this case, the damping term dominates the acceleration term, and we have:
$$ml^2\ddot\theta + b\dot\theta \approx b\dot\theta = u_0 - mgl\sin\theta.$$
In other words, in the case of heavy damping, the system looks approximately first order. This is a general property of heavily damped systems, such as fluids at very low Reynolds number.

I'd like to ignore one detail for a moment: the fact that $\theta$ wraps around on itself every $2\pi$. To be clear, let's write the system without the wrap-around as:
$$b\dot{x} = u_0 - mgl\sin x. \tag{2}$$
Our goal is to understand the long-term behavior of this system: to find $x(\infty)$ given $x(0)$. Let's start by plotting $\dot{x}$ vs $x$ for the case when $u_0 = 0$.

The first thing to notice is that the system has a number of fixed points or steady states, which occur whenever $\dot{x} = 0$. In this simple example, the zero-crossings are $x^* = \{\ldots, -\pi, 0, \pi, 2\pi, \ldots\}$. When the system is in one of these states, it will never leave that state. If the initial conditions are at a fixed point, we know that $x(\infty)$ will be at the same fixed point.

Next let's investigate the behavior of the system in the local vicinity of the fixed points.
Examining the fixed point at $x^* = \pi$, if the system starts just to the right of the fixed point, then $\dot{x}$ is positive, so the system will move away from the fixed point. If it starts to the left, then $\dot{x}$ is negative, and the system will move away in the opposite direction. We'll call fixed points which have this property unstable. If we look at the fixed point at $x^* = 0$, then the story is different: trajectories starting to the right or to the left will move back towards the fixed point. We will call this fixed point locally stable. More specifically, we'll distinguish between three types of local stability: stability in the sense of Lyapunov (i.s.L.), asymptotic stability, and exponential stability.

An initial condition near a fixed point that is stable in the sense of Lyapunov may never reach the fixed point (but it won't diverge), near an asymptotically stable fixed point will reach the fixed point as $t \rightarrow \infty$, and near an exponentially stable fixed point will reach the fixed point with a bounded rate. An exponentially stable fixed point is also an asymptotically stable fixed point, but the converse is not true. Asymptotic stability and Lyapunov stability, however, are distinct notions -- it is actually possible to have a system that is asymptotically stable but not stable i.s.L. (We can't see that in one dimension, so will have to hold that example for a moment.) Systems which are stable i.s.L. but not asymptotically stable are easy to construct (e.g. $\dot{x} = 0$). Interestingly, it is also possible to have nonlinear systems that converge (or diverge) in finite time, a so-called finite-time stability; we will see examples of this later in the book, but it is a difficult topic to penetrate with graphical analysis. Rigorous nonlinear system analysis is rich with subtleties and surprises. Moreover, these differences actually matter -- the code that we will write to stabilize the systems will be subtly different depending on what type of stability we want, and it can make or break the success of our methods.

Our graph of $\dot{x}$ vs.
$x$ can be used to convince ourselves of i.s.L. and asymptotic stability by visually inspecting $\dot{x}$ in the vicinity of a fixed point. Even exponential stability can be inferred if the function can be bounded away from the origin by a negatively-sloped line through the fixed point, since it implies that the nonlinear system will converge at least as fast as the linear system represented by the straight line. I will graphically illustrate unstable fixed points with open circles and stable fixed points (i.s.L.) with filled circles.

Next, we need to consider what happens to initial conditions which begin farther from the fixed points. If we think of the dynamics of the system as a flow on the $x$-axis, then we know that anytime $\dot{x} > 0$, the flow is moving to the right, and anytime $\dot{x} < 0$, the flow is moving to the left. If we further annotate our graph with arrows indicating the direction of the flow, then the entire (long-term) system behavior becomes clear.

For instance, we can see that any initial condition $x(0) \in (-\pi, \pi)$ will result in $\lim_{t \rightarrow \infty} x(t) = 0$. This region is called the basin of attraction of the fixed point at $x^* = 0$. Basins of attraction of two fixed points cannot overlap, and the manifold separating two basins of attraction is called the separatrix. Here the unstable fixed points, at $x^* = \{\ldots, -\pi, \pi, 3\pi, \ldots\}$, form the separatrix between the basins of attraction of the stable fixed points.

As these plots demonstrate, the behavior of a first-order, one-dimensional system on a line is relatively constrained. The system will either monotonically approach a fixed point or monotonically move toward $\pm\infty$. There are no other possibilities. Oscillations, for example, are impossible. Graphical analysis is a fantastic analysis tool for many first-order nonlinear systems (not just pendula), as illustrated by the following example:

Consider the following system:
$$\dot{x} + x = \tanh(wx), \tag{3}$$
which is plotted below for two values of $w$.
It's convenient to note that $\tanh(z) \approx z$ for small $z$. For $w \le 1$ the system has only a single fixed point. For $w > 1$ the system has three fixed points: two stable and one unstable.

These equations are not arbitrary -- they are actually a model for one of the simplest neural networks, and one of the simplest models of persistent memory [13]. In the equation, $x$ models the firing rate of a single neuron, which has a feedback connection to itself. $\tanh$ is the activation (sigmoidal) function of the neuron, and $w$ is the weight of the synaptic feedback.

One last piece of terminology. In the neuron example, and in many dynamical systems, the dynamics were parameterized; in this case by a single parameter, $w$. As we varied $w$, the fixed points of the system moved around. In fact, if we increase $w$ through $w = 1$, something dramatic happens: the system goes from having one fixed point to having three fixed points. This is called a bifurcation. This particular bifurcation is called a pitchfork bifurcation. We often draw bifurcation diagrams which plot the fixed points of the system as a function of the parameters, with solid lines indicating stable fixed points and dashed lines indicating unstable fixed points.

[Figure: Bifurcation diagram of the nonlinear autapse.]

Our pendulum equations also have a (saddle-node) bifurcation when we change the constant torque input, $u_0$. Finally, let's return to the original equations in $\theta$, instead of in $x$. Only one point to make: because of the wrap-around, this system will appear to have oscillations. In fact, the graphical analysis reveals that the pendulum will turn forever whenever $|u_0| > mgl$, but now you understand that this is not an oscillation, but an instability with $\theta \rightarrow \pm\infty$.

Consider again the system
$$ml^2\ddot\theta = u_0 - mgl\sin\theta - b\dot\theta,$$
this time with $b = 0$. This time the system dynamics are truly second-order.
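The saddle-node bifurcation of the overdamped pendulum (equation 2, $b\dot{x} = u_0 - mgl\sin x$) mentioned above can be sketched in a few lines. The function name `overdamped_fixed_points` and the default parameter values are assumptions for illustration. Stability is read off the sign of the slope of $\dot{x}$ at each fixed point, which is the graphical test used earlier in this chapter.

```python
import math

def overdamped_fixed_points(u0, m=1.0, g=9.81, l=1.0, b=1.0):
    """Fixed points of b*xdot = u0 - m*g*l*sin(x), one pair per 2*pi period.

    Returns a list of (x_star, is_stable) pairs, or an empty list when
    |u0| > m*g*l. The degenerate case |u0| == m*g*l (where the two fixed
    points merge and the slope test is inconclusive) is not handled specially.
    """
    mgl = m * g * l
    if abs(u0) > mgl:
        return []                        # no fixed points: the pendulum keeps turning
    x_a = math.asin(u0 / mgl)            # root where cos(x) >= 0
    x_b = math.pi - x_a                  # root where cos(x) <= 0
    points = []
    for x_star in (x_a, x_b):
        slope = -mgl * math.cos(x_star) / b   # d(xdot)/dx at the fixed point
        points.append((x_star, slope < 0))    # negative slope: locally stable
    return points

for u0 in (0.0, 5.0, 12.0):              # assumed torque values, in N*m
    print(u0, overdamped_fixed_points(u0))
```

With these assumed parameters $mgl = 9.81$, so the first two torques give one stable and one unstable fixed point that move toward each other as $u_0$ grows, and the third gives none.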
We can always think of any second-order system as a (coupled) first-order system with twice as many variables. Consider a general, autonomous (not dependent on time), second-order system,
$$\ddot{q} = f(q, \dot{q}, u).$$
This system is equivalent to the two-dimensional first-order system
$$\dot{x}_1 = x_2, \qquad \dot{x}_2 = f(x_1, x_2, u),$$
where $x_1 = q$ and $x_2 = \dot{q}$. Therefore, the graphical depiction of this system is not a line, but a vector field where the vectors $[\dot{x}_1, \dot{x}_2]^T$ are plotted over the domain $(x_1, x_2)$. This vector field is known as the phase portrait of the system.

In this section we restrict ourselves to the simplest case when $u_0 = 0$. Let's sketch the phase portrait. First sketch along the $\theta$-axis. The $x$-component of the vector field here is zero, and the $y$-component is $\ddot\theta = -\frac{g}{l}\sin\theta$. As expected, we have fixed points at $\pm\pi, \ldots$ Now sketch the rest of the vector field. Can you tell me which fixed points are stable? Some of them are stable i.s.L., none are asymptotically stable.

You might wonder how we drew the black contour lines in the figure above. We could have obtained them by simulating the system numerically, but those lines can be easily obtained in closed form. Directly integrating the equations of motion is difficult, but at least for the case when $u_0 = 0$, we have some additional physical insight for this problem that we can take advantage of. The kinetic energy, $T$, and potential energy, $U$, of the pendulum are given by
$$T = \frac{1}{2}I\dot\theta^2, \qquad U = -mgl\cos(\theta),$$
where $I = ml^2$, and the total energy is $E(\theta, \dot\theta) = T(\dot\theta) + U(\theta)$. The undamped pendulum is a conservative system: total energy is a constant over system trajectories.
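The claim that total energy is constant along trajectories of the undamped, unforced pendulum is easy to check numerically. The following is a minimal sketch using a hand-written fourth-order Runge-Kutta integrator; the parameter values, step size, initial condition, and function names are assumptions chosen for illustration and are not part of the course software.

```python
import math

def pendulum_energy(theta, thetadot, m=1.0, g=9.81, l=1.0):
    """Total energy E = T + U with T = 0.5*m*l^2*thetadot^2, U = -m*g*l*cos(theta)."""
    return 0.5 * m * l**2 * thetadot**2 - m * g * l * math.cos(theta)

def undamped_dynamics(theta, thetadot, g=9.81, l=1.0):
    """First-order form of m*l^2*thetaddot = -m*g*l*sin(theta) (b = 0, u0 = 0)."""
    return thetadot, -(g / l) * math.sin(theta)

def rk4_step(theta, thetadot, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = undamped_dynamics(theta, thetadot)
    k2 = undamped_dynamics(theta + 0.5 * dt * k1[0], thetadot + 0.5 * dt * k1[1])
    k3 = undamped_dynamics(theta + 0.5 * dt * k2[0], thetadot + 0.5 * dt * k2[1])
    k4 = undamped_dynamics(theta + dt * k3[0], thetadot + dt * k3[1])
    theta += dt * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6.0
    thetadot += dt * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6.0
    return theta, thetadot

theta, thetadot = 1.0, 0.0               # released from rest at 1 rad
E0 = pendulum_energy(theta, thetadot)
max_drift = 0.0
for _ in range(10000):                   # 10 seconds with dt = 1 ms
    theta, thetadot = rk4_step(theta, thetadot, 1e-3)
    max_drift = max(max_drift, abs(pendulum_energy(theta, thetadot) - E0))

print("initial energy:", E0)
print("largest energy drift over 10 s:", max_drift)
```

Any drift that remains comes from the integrator rather than from the dynamics. Adding a damping term $-b\dot\theta$ to `undamped_dynamics` would make the energy decrease monotonically instead.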
Using conservation of energy, we have:
$$E(\theta(t), \dot\theta(t)) = E(\theta(0), \dot\theta(0)) = E_0$$
$$\frac{1}{2}I\dot\theta^2(t) - mgl\cos(\theta(t)) = E_0$$
$$\dot\theta(t) = \pm\sqrt{\frac{2}{I}\left[E_0 + mgl\cos(\theta(t))\right]}$$
Using this, if you tell me $\theta$ I can determine one of two possible values for $\dot\theta$, and the solution has all of the richness of the black contour lines from the plot. This equation has a real solution when $\cos(\theta) \ge \cos(\theta_{max})$, where
$$\theta_{max} = \begin{cases} \cos^{-1}\left(-\frac{E_0}{mgl}\right), & E_0 < mgl \\ \pi, & \text{otherwise.} \end{cases}$$
Of course this is just the intuitive notion that the pendulum will not swing above the height where the total energy equals the potential energy. As an exercise, you can verify that differentiating this equation with respect to time indeed results in the equations of motion.

Now what happens if we add a constant torque? If you visualize the bifurcation diagram, you'll see that the fixed points come together, towards $q = \frac{\pi}{2}, \frac{5\pi}{2}, \ldots$, until they disappear. One fixed point is unstable, and one is stable.

Before we continue, let me now give you the promised example of a system that is asymptotically stable, but not stable i.s.L. We can accomplish this with a very pendulum-like example (written here in polar coordinates):

The system
$$\dot{r} = r(1 - r), \qquad \dot\theta = \sin^2\left(\frac{\theta}{2}\right)$$
is asymptotically stable to $x^* = [1, 0]^T$, but is not stable i.s.L.

Take a minute to draw the vector field of this (you can draw each coordinate independently, if it helps) to make sure you understand. Note that the wrap-around rotation is convenient but not essential -- we could have written the same dynamical system in Cartesian coordinates without wrapping. And if this feels too arbitrary, we will see it happen in practice when we introduce the energy-shaping swing-up controller for the pendulum in the next chapter.

Now let's add damping back. You can still add torque to move the fixed points (in the same way).
[Figure: Phase diagram for the damped pendulum]

With damping, the downward fixed point of the pendulum now becomes asymptotically stable (in addition to stable i.s.L.). Is it also exponentially stable? How can we tell? One technique is to linearize the system at the fixed point. A smooth, time-invariant, nonlinear system that is locally exponentially stable must have a stable linearization; we'll discuss linearization more in the next chapter.

Here's a thought exercise. If $u$ is no longer a constant, but a function $\pi(\theta, \dot\theta)$, then how would you choose $\pi$ to stabilize the vertical position? Feedback linearization is the trivial solution, for example:
$$u = \pi(\theta, \dot\theta) = 2mgl\sin\theta.$$
But these plots we've been making tell a different story. How would you shape the natural dynamics -- at each point pick a $u$ from the stack of phase plots -- to stabilize the vertical fixed point with minimal torque effort? This is exactly the way that I would like you to think about control system design. And we'll give you your first solution techniques -- using dynamic programming -- in the next lecture.

The simple pendulum is fully actuated. Given enough torque, we can produce any number of control solutions to stabilize the originally unstable fixed point at the top (such as designing a feedback controller to effectively invert gravity).

The problem begins to get interesting (a.k.a. becomes underactuated) if we impose a torque-limit constraint, $|u| \le u_{max}$. Looking at the phase portraits again, you can now visualize the control problem. Via feedback, you are allowed to change the direction of the vector field at each point, but only by a fixed amount. Clearly, if the maximum torque is small (smaller than $mgl$), then there are some states which cannot be driven directly to the goal, but must pump up energy to reach the goal. Furthermore, if the torque limit is too severe and the system has damping, then it may be impossible to swing up to the top.
The existence of a solution, and the number of pumps required to reach the top, is a non-trivial function of the initial conditions and the torque limits.

Although this system is very simple, its solution requires much of the same reasoning necessary for controlling much more complex underactuated systems; this problem will be a work-horse for us as we introduce new algorithms throughout this book.

Robot Manipulators

Python Example

from pydrake.all import (AddMultibodyPlantSceneGraph,
                         DiagramBuilder,
                         Parser,
                         Simulator)
from underactuated import FindResource, PlanarSceneGraphVisualizer

# Set up a block diagram with the robot (dynamics) and a visualization block.
builder = DiagramBuilder()
plant, scene_graph = AddMultibodyPlantSceneGraph(builder)

# Load the double pendulum from Universal Robot Description Format
parser = Parser(plant, scene_graph)
parser.AddModelFromFile(FindResource("double_pendulum/double_pendulum.urdf"))
plant.Finalize()

builder.ExportInput(plant.get_actuation_input_port())
visualizer = builder.AddSystem(PlanarSceneGraphVisualizer(scene_graph,
                                                          xlim=[-2.8, 2.8],
                                                          ylim=[-2.8, 2.8]))
builder.Connect(scene_graph.get_pose_bundle_output_port(),
                visualizer.get_input_port(0))
diagram = builder.Build()

# Set up a simulator to run this diagram
simulator = Simulator(diagram)
simulator.set_target_realtime_rate(1.0)

# Set the initial conditions
context = simulator.get_mutable_context()
# state is (theta1, theta2, theta1dot, theta2dot)
context.SetContinuousState([1., 1., 0., 0.])
context.FixInputPort(0, [0., 0.])  # Zero input torques

# Simulate for 10 seconds
simulator.StepTo(10)

from pydrake.all import MultibodyPlant, Parser
from underactuated import FindResource, ManipulatorDynamics

plant = MultibodyPlant()
parser = Parser(plant)
parser.AddModelFromFile(FindResource("double_pendulum/double_pendulum.urdf"))
plant.Finalize()

q = [0.1, 0.1]
v = [1, 1]
(M, Cv, tauG, B, tauExt) = ManipulatorDynamics(plant, q, v)

print("M = \n" + str(M))
print("Cv = " + str(Cv))
print("tau_G = " + str(tauG))
print("B = " + str(B))
print("tau_ext = " + str(tauExt))

# TODO(russt): add symbolic version pending resolution of drake #11240

Underactuated Control Differential Equations

Feedback Linearization

Feedback-Cancellation Double Pendulum

Python Example

python double_pendulum/as_single_pendulum.py --gravity=9.8

python double_pendulum/as_single_pendulum.py --gravity=-9.8

Input and State Constraints

Input limits

Nonholonomic constraints

Wheeled robot

Underactuated robotics

Goals for the course

The Simple Pendulum

Introduction

Nonlinear dynamics with a constant torque

Simple Pendulum in Python

python pendulum/torque_slider_demo.py

The overdamped pendulum

Nonlinear autapse

The undamped pendulum with zero torque

Orbit calculations

The undamped pendulum with a constant torque

Asymptotically stable, but not Lyapunov stable

The damped pendulum

The torque-limited simple pendulum