GAN Models: Theory and PyTorch Implementations (continuously updated)

A brief analysis of GAN-related models, each implemented in PyTorch.

keywords: GAN, PyTorch, GANs in PyTorch

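This series starts from the papers themselves, so it moves from the crudest models gradually toward the mature ones. Along the way I study GANs and other deep learning topics, and try to understand each author's line of improvement, the starting point of each innovation, and the mature techniques borrowed from other fields. The goal is to improve my own deep learning skills, and I expect it to be a unique experience.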

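I publish everything on my WeChat official account first, and this blog post will keep being updated as well. For now the plan is daily updates on the official account and weekly updates to this post, depending on how things go later.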

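So I still recommend reading on the official account (clicking an ad in a post or sharing the post already counts as a tip for me; donate only if you have something to spare beyond that).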


Contents

- GAN Models: Theory and PyTorch Implementations (continuously updated)

- GAN

- DCGAN

- CGAN

- ImprovedGAN

- CatGAN

- LS-GAN

- CLS-GAN

- ACGAN

- InfoGAN

- Coupled-GAN

- Triple-GAN

- WGAN

- WGAN-gp

- WGAN-div

- DualGAN

- CT-GAN

- DRAGAN

- pix2pix

- ALI

- BiGAN

- CartoonGAN

GAN

We propose a new framework for estimating generative models via an adversarial process, in which we simultaneously train two models: a generative model G that captures the data distribution, and a discriminative model D that estimates the probability that a sample came from the training data rather than G. The training procedure for G is to maximize the probability of D making a mistake. This framework corresponds to a minimax two-player game. In the space of arbitrary functions G and D, a unique solution exists, with G recovering the training data distribution and D equal to 1/2 everywhere. In the case where G and D are defined by multilayer perceptrons, the entire system can be trained with backpropagation. There is no need for any Markov chains or unrolled approximate inference networks during either training or generation of samples. Experiments demonstrate the potential of the framework through qualitative and quantitative evaluation of the generated samples.
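To make the two-player game concrete, here is a minimal sketch of one alternating update in PyTorch. The layer sizes, learning rate, and the random stand-in for real data are my own assumptions, and the generator step uses the common non-saturating form (G tries to get its fakes labelled as real) rather than the literal minimax objective.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

latent_dim, data_dim = 16, 32  # illustrative sizes, not taken from the paper

# G maps noise z to a fake sample; D outputs the probability that its input is real.
G = nn.Sequential(nn.Linear(latent_dim, 64), nn.ReLU(), nn.Linear(64, data_dim))
D = nn.Sequential(nn.Linear(data_dim, 64), nn.LeakyReLU(0.2), nn.Linear(64, 1), nn.Sigmoid())

opt_g = torch.optim.Adam(G.parameters(), lr=2e-4)
opt_d = torch.optim.Adam(D.parameters(), lr=2e-4)
bce = nn.BCELoss()


def train_step(real):
    """One alternating update of D and then G; returns (d_loss, g_loss) as floats."""
    batch = real.size(0)
    ones, zeros = torch.ones(batch, 1), torch.zeros(batch, 1)

    # D step: push D(real) toward 1 and D(G(z)) toward 0.
    fake = G(torch.randn(batch, latent_dim)).detach()  # no gradient flows into G here
    d_loss = bce(D(real), ones) + bce(D(fake), zeros)
    opt_d.zero_grad()
    d_loss.backward()
    opt_d.step()

    # G step: try to make D label freshly generated samples as real.
    g_loss = bce(D(G(torch.randn(batch, latent_dim))), ones)
    opt_g.zero_grad()
    g_loss.backward()
    opt_g.step()
    return d_loss.item(), g_loss.item()


real_batch = torch.randn(8, data_dim)  # stand-in for real data, e.g. flattened images
d_loss, g_loss = train_step(real_batch)
```

In a real run `train_step` would be called once per mini-batch from a data loader; both networks here are multilayer perceptrons, matching the setting the abstract describes.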

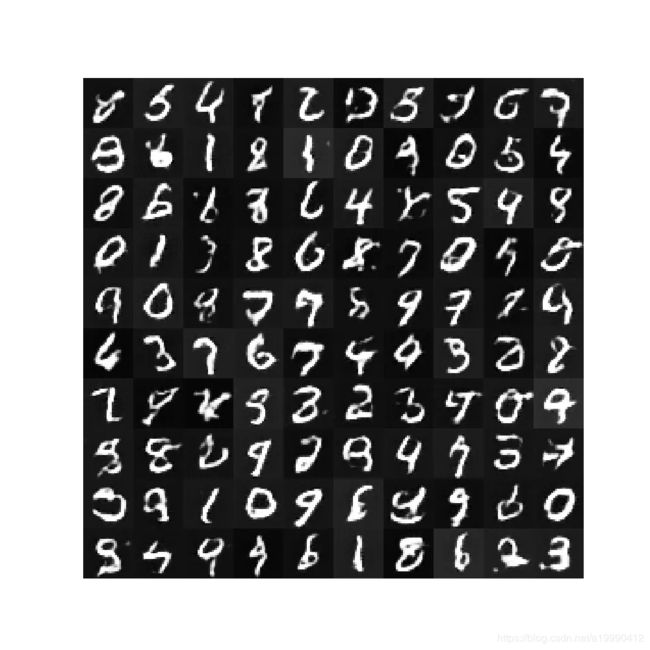

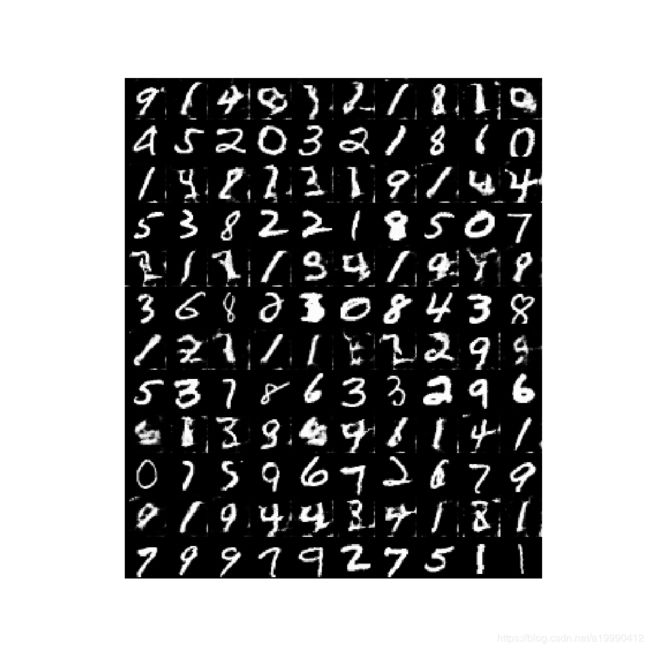

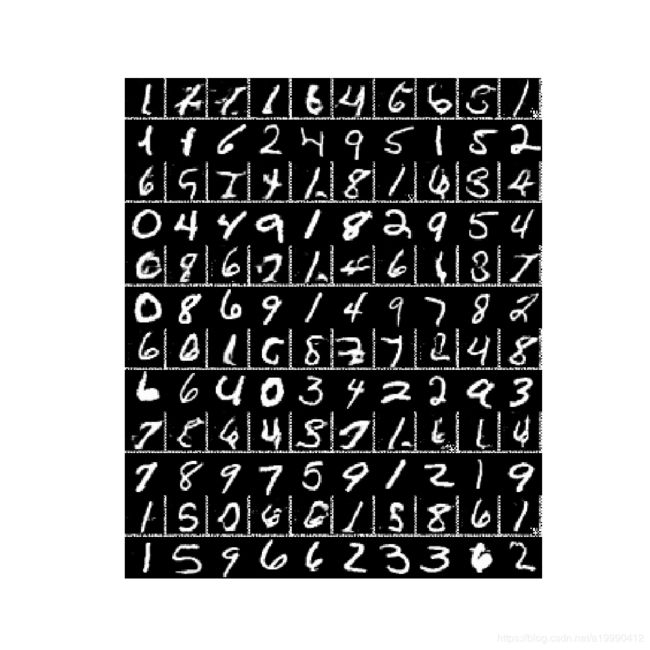

GAN: theory and PyTorch implementation

GAN experiments on the MNIST dataset: PyTorch implementation

DCGAN

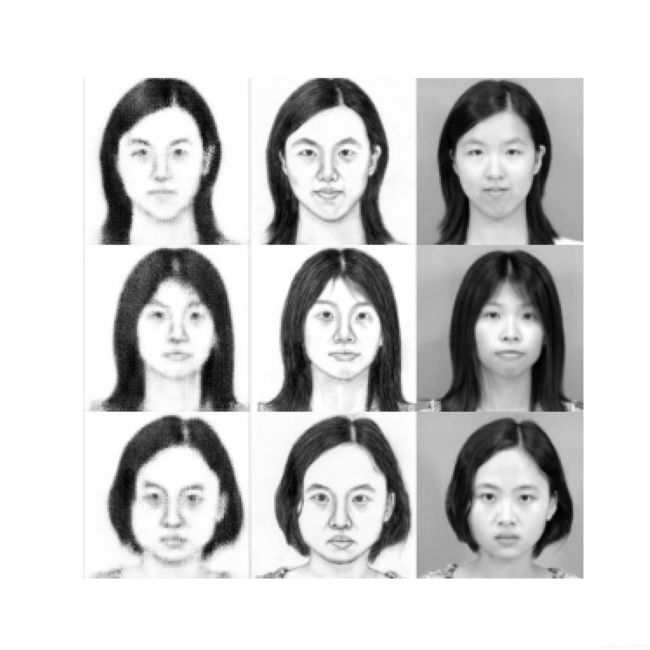

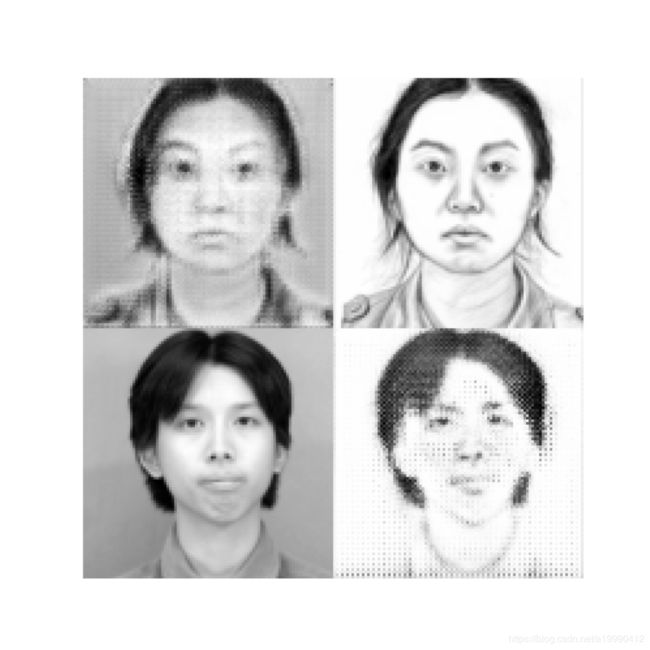

In recent years, supervised learning with convolutional networks (CNNs) has seen huge adoption in computer vision applications. Comparatively, unsupervised learning with CNNs has received less attention. In this work we hope to help bridge the gap between the success of CNNs for supervised learning and unsupervised learning. We introduce a class of CNNs called deep convolutional generative adversarial networks (DCGANs), that have certain architectural constraints, and demonstrate that they are a strong candidate for unsupervised learning. Training on various image datasets, we show convincing evidence that our deep convolutional adversarial pair learns a hierarchy of representations from object parts to scenes in both the generator and discriminator. Additionally, we use the learned features for novel tasks - demonstrating their applicability as general image representations.

DCGAN: theory and PyTorch implementation

DCGAN for anime avatar generation: PyTorch implementation

CGAN

In this work we introduce the conditional version of generative adversarial nets, which can be constructed by simply feeding the data, y, we wish to condition on to both the generator and discriminator. We show that this model can generate MNIST digits conditioned on class labels. We also illustrate how this model could be used to learn a multi-modal model, and provide preliminary examples of an application to image tagging in which we demonstrate how this approach can generate descriptive tags which are not part of training labels.
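The phrase "feeding the data, y, ... to both the generator and discriminator" can be sketched as a simple concatenation. This is only one way to inject the condition; the one-hot encoding, the MLP layers, and the MNIST-like sizes below are assumptions for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

num_classes, latent_dim, data_dim = 10, 16, 784  # assumed MNIST-like sizes


class CondGenerator(nn.Module):
    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(latent_dim + num_classes, 128), nn.ReLU(),
            nn.Linear(128, data_dim), nn.Tanh(),
        )

    def forward(self, z, y):
        # y holds integer class labels of shape (batch,); append them to the noise.
        y_onehot = F.one_hot(y, num_classes).float()
        return self.net(torch.cat([z, y_onehot], dim=1))


class CondDiscriminator(nn.Module):
    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(data_dim + num_classes, 128), nn.LeakyReLU(0.2),
            nn.Linear(128, 1), nn.Sigmoid(),
        )

    def forward(self, x, y):
        # D sees the sample together with the label it is supposed to match.
        y_onehot = F.one_hot(y, num_classes).float()
        return self.net(torch.cat([x, y_onehot], dim=1))


torch.manual_seed(0)
y = torch.arange(10)              # ask for one sample of each digit class
z = torch.randn(10, latent_dim)
fake = CondGenerator()(z, y)
score = CondDiscriminator()(fake, y)
```

Training then proceeds as in an ordinary GAN, except that every call to the generator and the discriminator also receives the label.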

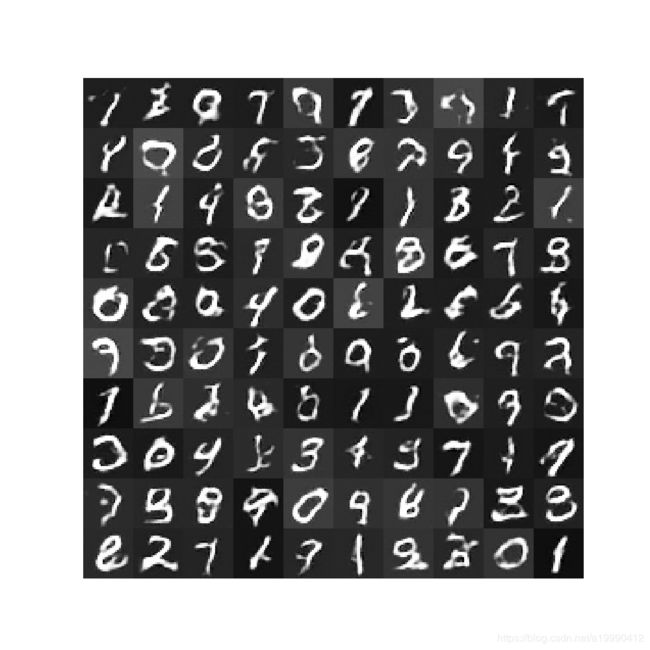

CGAN: theory and PyTorch implementation

ImprovedGAN

We present a variety of new architectural features and training procedures that we apply to the generative adversarial networks (GANs) framework. Using our new techniques, we achieve state-of-the-art results in semi-supervised classification on MNIST, CIFAR-10 and SVHN. The generated images are of high quality as confirmed by a visual Turing test: our model generates MNIST samples that humans cannot distinguish from real data, and CIFAR-10 samples that yield a human error rate of 21.3%. We also present ImageNet samples with unprecedented resolution and show that our methods enable the model to learn recognizable features of ImageNet classes.

ImprovedGAN: theory and PyTorch implementation

CatGAN

In this paper we present a method for learning a discriminative classifier from unlabeled or partially labeled data. Our approach is based on an objective function that trades-off mutual information between observed examples and their predicted categorical class distribution, against robustness of the classifier to an adversarial generative model. The resulting algorithm can either be interpreted as a natural generalization of the generative adversarial networks (GAN) framework or as an extension of the regularized information maximization (RIM) framework to robust classification against an optimal adversary. We empirically evaluate our method – which we dub categorical generative adversarial networks (or CatGAN) – on synthetic data as well as on challenging image classification tasks, demonstrating the robustness of the learned classifiers. We further qualitatively assess the fidelity of samples generated by the adversarial generator that is learned alongside the discriminative classifier, and identify links between the CatGAN objective and discriminative clustering algorithms (such as RIM).

CatGAN: theory and PyTorch implementation

LS-GAN

Unsupervised learning with generative adversarial networks (GANs) has proven hugely successful. Regular GANs hypothesize the discriminator as a classifier with the sigmoid cross entropy loss function. However, we found that this loss function may lead to the vanishing gradients problem during the learning process. To overcome such a problem, we propose in this paper the Least Squares Generative Adversarial Networks (LSGANs) which adopt the least squares loss function for the discriminator. We show that minimizing the objective function of LSGAN yields minimizing the Pearson χ² divergence. There are two benefits of LSGANs over regular GANs. First, LSGANs are able to generate higher quality images than regular GANs. Second, LSGANs perform more stable during the learning process. We evaluate LSGANs on five scene datasets and the experimental results show that the images generated by LSGANs are of better quality than the ones generated by regular GANs. We also conduct two comparison experiments between LSGANs and regular GANs to illustrate the stability of LSGANs.
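Swapping the sigmoid cross entropy for a least squares loss is a small change in code. The sketch below assumes the 0-1 target coding (fake target a = 0, real target b = 1, generator target c = 1) and a discriminator that outputs raw scores without a final sigmoid; the function names are my own.

```python
import torch
import torch.nn.functional as F


def lsgan_d_loss(d_real, d_fake, a=0.0, b=1.0):
    """Discriminator loss: pull scores of real samples toward b, of fakes toward a."""
    real_term = F.mse_loss(d_real, torch.full_like(d_real, b))
    fake_term = F.mse_loss(d_fake, torch.full_like(d_fake, a))
    return 0.5 * real_term + 0.5 * fake_term


def lsgan_g_loss(d_fake, c=1.0):
    """Generator loss: pull scores of generated samples toward c."""
    return 0.5 * F.mse_loss(d_fake, torch.full_like(d_fake, c))


# Hand-picked discriminator scores, just to show the arithmetic.
d_real = torch.tensor([[0.9], [0.8]])
d_fake = torch.tensor([[0.1], [0.3]])
d_loss = lsgan_d_loss(d_real, d_fake)  # 0.5 * 0.025 + 0.5 * 0.05 = 0.0375
g_loss = lsgan_g_loss(d_fake)          # 0.5 * 0.65 = 0.325
```

Unlike the log loss, the squared error keeps growing for samples that sit far from the target value even when they are on the correct side of the decision boundary, which is the intuition behind the vanishing-gradient argument in the abstract.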

LSGAN: theory and PyTorch implementation

CLS-GAN

A combination of CGAN and LS-GAN, implemented in PyTorch.

CLS-GAN: theory and PyTorch implementation

ACGAN

In this paper we introduce new methods for the improved training of generative adversarial networks (GANs) for image synthesis. We construct a variant of GANs employing label conditioning that results in 128 × 128 resolution image samples exhibiting global coherence. We expand on previous work for image quality assessment to provide two new analyses for assessing the discriminability and diversity of samples from class-conditional image synthesis models. These analyses demonstrate that high resolution samples provide class information not present in low resolution samples. Across 1000 ImageNet classes, 128 × 128 samples are more than twice as discriminable as artificially resized 32 × 32 samples. In addition, 84.7% of the classes have samples exhibiting diversity comparable to real ImageNet data.

ACGAN: theory and PyTorch implementation

InfoGAN

This paper describes InfoGAN, an information-theoretic extension to the Generative Adversarial Network that is able to learn disentangled representations in a completely unsupervised manner. InfoGAN is a generative adversarial network that also maximizes the mutual information between a small subset of the latent variables and the observation. We derive a lower bound of the mutual information objective that can be optimized efficiently. Specifically, InfoGAN successfully disentangles writing styles from digit shapes on the MNIST dataset, pose from lighting of 3D rendered images, and background digits from the central digit on the SVHN dataset. It also discovers visual concepts that include hair styles, presence/absence of eyeglasses, and emotions on the CelebA face dataset. Experiments show that InfoGAN learns interpretable representations that are competitive with representations learned by existing supervised methods.

InfoGAN: theory and PyTorch implementation

Coupled-GAN

We propose coupled generative adversarial network (CoGAN) for learning a joint distribution of multi-domain images. In contrast to the existing approaches, which require tuples of corresponding images in different domains in the training set, CoGAN can learn a joint distribution without any tuple of corresponding images. It can learn a joint distribution with just samples drawn from the marginal distributions. This is achieved by enforcing a weight-sharing constraint that limits the network capacity and favors a joint distribution solution over a product of marginal distributions one. We apply CoGAN to several joint distribution learning tasks, including learning a joint distribution of color and depth images, and learning a joint distribution of face images with different attributes. For each task it successfully learns the joint distribution without any tuple of corresponding images. We also demonstrate its applications to domain adaptation and image transformation.
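The weight-sharing constraint can be sketched as two generators that reuse the same early layers and differ only in their final layers. The toy MLP below is an assumption for illustration (class name, sizes, and depth are made up); it shows only the generator side, and the discriminators are omitted.

```python
import torch
import torch.nn as nn


class CoupledGenerators(nn.Module):
    """Two generators that share their first layers (toy MLP version)."""

    def __init__(self, latent_dim=16, hidden=64, data_dim=32):
        super().__init__()
        # Shared layers: decode the content that both domains have in common.
        self.shared = nn.Sequential(nn.Linear(latent_dim, hidden), nn.ReLU())
        # Domain-specific heads: render that content in each domain.
        self.head_a = nn.Linear(hidden, data_dim)
        self.head_b = nn.Linear(hidden, data_dim)

    def forward(self, z):
        h = self.shared(z)  # the same z and the same features feed both heads
        return self.head_a(h), self.head_b(h)


torch.manual_seed(0)
gen = CoupledGenerators()
x_a, x_b = gen(torch.randn(4, 16))
n_shared = sum(p.numel() for p in gen.shared.parameters())  # 16 * 64 + 64 = 1088
```

Because one latent vector drives both outputs through the shared layers, the pair `(x_a, x_b)` is encouraged to describe the same underlying content even though no paired training images are used.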

Coupled-GAN: theory and PyTorch implementation

Triple-GAN

Generative Adversarial Nets (GANs) have shown promise in image generation and semi-supervised learning (SSL). However, existing GANs in SSL have two problems: (1) the generator and the discriminator (i.e. the classifier) may not be optimal at the same time; and (2) the generator cannot control the semantics of the generated samples. The problems essentially arise from the two-player formulation, where a single discriminator shares incompatible roles of identifying fake samples and predicting labels and it only estimates the data without considering the labels. To address the problems, we present triple generative adversarial net (Triple-GAN), which consists of three players: a generator, a discriminator and a classifier. The generator and the classifier characterize the conditional distributions between images and labels, and the discriminator solely focuses on identifying fake image-label pairs. We design compatible utilities to ensure that the distributions characterized by the classifier and the generator both converge to the data distribution. Our results on various datasets demonstrate that Triple-GAN as a unified model can simultaneously (1) achieve the state-of-the-art classification results among deep generative models, and (2) disentangle the classes and styles of the input and transfer smoothly in the data space via interpolation in the latent space class-conditionally.

Triple-GAN: theory and PyTorch implementation

WGAN

Wasserstein GAN

WGAN: theory and PyTorch implementation

Comparing how a linear model, Sigmoid, and BN behave in WGAN

WGAN-gp

Generative Adversarial Networks (GANs) are powerful generative models, but suffer from training instability. The recently proposed Wasserstein GAN (WGAN) makes progress toward stable training of GANs, but sometimes can still generate only poor samples or fail to converge. We find that these problems are often due to the use of weight clipping in WGAN to enforce a Lipschitz constraint on the critic, which can lead to undesired behavior. We propose an alternative to clipping weights: penalize the norm of gradient of the critic with respect to its input. Our proposed method performs better than standard WGAN and enables stable training of a wide variety of GAN architectures with almost no hyperparameter tuning, including 101-layer ResNets and language models with continuous generators. We also achieve high quality generations on CIFAR-10 and LSUN bedrooms.
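Penalizing "the norm of gradient of the critic with respect to its input" can be sketched with `torch.autograd.grad`. The version below assumes the usual recipe of evaluating the gradient at random interpolates between real and fake samples and pushing its norm toward 1; the function name and the toy linear critic used for the check are my own.

```python
import torch
import torch.nn as nn


def gradient_penalty(critic, real, fake):
    """Mean of (||grad_x critic(x_hat)||_2 - 1)^2 over random interpolates x_hat."""
    batch = real.size(0)
    # One mixing coefficient per sample, broadcast over the remaining dimensions.
    eps = torch.rand(batch, *([1] * (real.dim() - 1)), device=real.device)
    x_hat = (eps * real + (1 - eps) * fake).detach().requires_grad_(True)
    scores = critic(x_hat)
    grads, = torch.autograd.grad(
        outputs=scores,
        inputs=x_hat,
        grad_outputs=torch.ones_like(scores),
        create_graph=True,  # keep the graph so the penalty itself can be backpropagated
    )
    grad_norm = grads.reshape(batch, -1).norm(2, dim=1)
    return ((grad_norm - 1) ** 2).mean()


# Sanity check on a linear critic f(x) = w . x, whose input gradient is w everywhere.
critic = nn.Linear(2, 1, bias=False)
with torch.no_grad():
    critic.weight.copy_(torch.tensor([[3.0, 4.0]]))  # ||w|| = 5
gp = gradient_penalty(critic, torch.randn(8, 2), torch.randn(8, 2))  # (5 - 1)^2 = 16
```

In training, the critic loss would then look like `critic(fake).mean() - critic(real).mean() + lam * gp`, where `lam` is a penalty weight (the paper uses 10), and no weight clipping is applied.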

WGAN-gp: theory and PyTorch implementation

Comparing how a linear model, BN, and Sigmoid behave in WGAN-GP

WGAN-div

In many domains of computer vision, generative adversarial networks (GANs) have achieved great success, among which the family of Wasserstein GANs (WGANs) is considered to be state-of-the-art due to the theoretical contributions and competitive qualitative performance. However, it is very challenging to approximate the k-Lipschitz constraint required by the Wasserstein-1 metric (W-met). In this paper, we propose a novel Wasserstein divergence (W-div), which is a relaxed version of W-met and does not require the k-Lipschitz constraint. As a concrete application, we introduce a Wasserstein divergence objective for GANs (WGAN-div), which can faithfully approximate W-div through optimization. Under various settings, including progressive growing training, we demonstrate the stability of the proposed WGAN-div owing to its theoretical and practical advantages over WGANs. Also, we study the quantitative and visual performance of WGAN-div on standard image synthesis benchmarks, showing the superior performance of WGAN-div compared to the state-of-the-art methods.

WGAN-div: theory and PyTorch implementation

DualGAN

Conditional Generative Adversarial Networks (GANs) for cross-domain image-to-image translation have made much progress recently [7, 8, 21, 12, 4, 18]. Depending on the task complexity, thousands to millions of labeled image pairs are needed to train a conditional GAN. However, human labeling is expensive, even impractical, and large quantities of data may not always be available. Inspired by dual learning from natural language translation [23], we develop a novel dual-GAN mechanism, which enables image translators to be trained from two sets of unlabeled images from two domains. In our architecture, the primal GAN learns to translate images from domain U to those in domain V, while the dual GAN learns to invert the task. The closed loop made by the primal and dual tasks allows images from either domain to be translated and then reconstructed. Hence a loss function that accounts for the reconstruction error of images can be used to train the translators. Experiments on multiple image translation tasks with unlabeled data show considerable performance gain of DualGAN over a single GAN. For some tasks, DualGAN can even achieve comparable or slightly better results than conditional GAN trained on fully labeled data.
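The closed loop of translating and then reconstructing can be written as a short loss function. This sketch assumes an L1 reconstruction error and uses single linear layers as stand-ins for the two translators; the names `g_uv` and `g_vu` are hypothetical, and the adversarial terms are left out.

```python
import torch
import torch.nn as nn


def reconstruction_loss(g_uv, g_vu, u, v):
    """L1 error after a full round trip U -> V -> U and V -> U -> V."""
    u_rec = g_vu(g_uv(u))  # translate to domain V, then back to U
    v_rec = g_uv(g_vu(v))  # translate to domain U, then back to V
    return (u - u_rec).abs().mean() + (v - v_rec).abs().mean()


torch.manual_seed(0)
dim = 32  # stand-in for a flattened image
g_uv, g_vu = nn.Linear(dim, dim), nn.Linear(dim, dim)  # toy translators
u, v = torch.randn(4, dim), torch.randn(4, dim)        # unpaired batches from U and V

loss = reconstruction_loss(g_uv, g_vu, u, v)
loss.backward()  # gradients reach both translators

# Perfect round trips (here: identity translators) give zero reconstruction error.
identity_loss = reconstruction_loss(nn.Identity(), nn.Identity(), u, v)
```

Note that `u` and `v` never need to correspond to each other: each batch is only compared with its own reconstruction, which is why unlabeled, unpaired image sets suffice.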

DualGAN: theory and PyTorch implementation

CT-GAN

Despite being impactful on a variety of problems and applications, the generative adversarial nets (GANs) are remarkably difficult to train. This issue is formally analyzed by Arjovsky & Bottou (2017), who also propose an alternative direction to avoid the caveats in the minmax two-player training of GANs. The corresponding algorithm, called Wasserstein GAN (WGAN), hinges on the 1-Lipschitz continuity of the discriminator. In this paper, we propose a novel approach to enforcing the Lipschitz continuity in the training procedure of WGANs. Our approach seamlessly connects WGAN with one of the recent semi-supervised learning methods. As a result, it gives rise to not only better photo-realistic samples than the previous methods but also state-of-the-art semi-supervised learning results. In particular, our approach gives rise to the inception score of more than 5.0 with only 1,000 CIFAR-10 images and is the first that exceeds the accuracy of 90% on the CIFAR-10 dataset using only 4,000 labeled images, to the best of our knowledge.

CT-GAN: theory and PyTorch implementation

DRAGAN

We propose studying GAN training dynamics as regret minimization, which is in contrast to the popular view that there is consistent minimization of a divergence between real and generated distributions. We analyze the convergence of GAN training from this new point of view to understand why mode collapse happens. We hypothesize the existence of undesirable local equilibria in this non-convex game to be responsible for mode collapse. We observe that these local equilibria often exhibit sharp gradients of the discriminator function around some real data points. We demonstrate that these degenerate local equilibria can be avoided with a gradient penalty scheme called DRAGAN. We show that DRAGAN enables faster training, achieves improved stability with fewer mode collapses, and leads to generator networks with better modeling performance across a variety of architectures and objective functions.

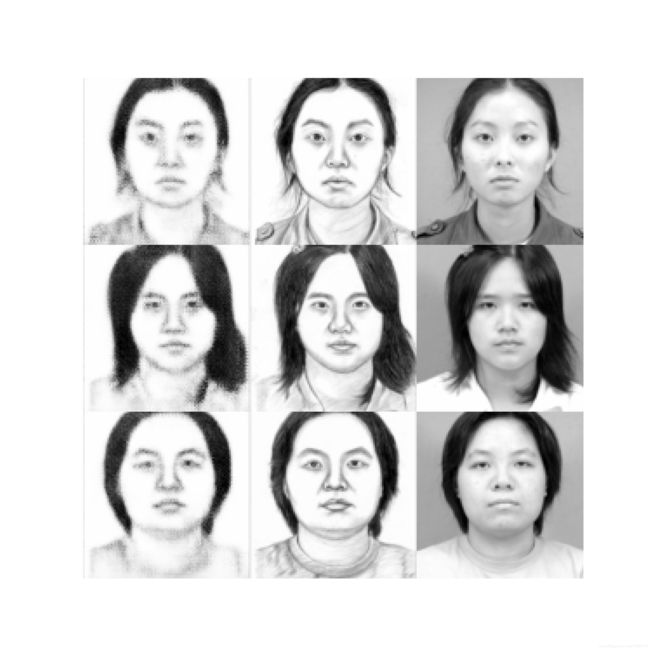

pix2pix

We investigate conditional adversarial networks as a general-purpose solution to image-to-image translation problems. These networks not only learn the mapping from input image to output image, but also learn a loss function to train this mapping. This makes it possible to apply the same generic approach to problems that traditionally would require very different loss formulations. We demonstrate that this approach is effective at synthesizing photos from label maps, reconstructing objects from edge maps, and colorizing images, among other tasks. Moreover, since the release of the pix2pix software associated with this paper, hundreds of Twitter users have posted their own artistic experiments using our system. As a community, we no longer hand-engineer our mapping functions, and this work suggests we can achieve reasonable results without hand-engineering our loss functions either.

pix2pix: theory and PyTorch implementation

ALI

We introduce the adversarially learned inference (ALI) model, which jointly learns a generation network and an inference network using an adversarial process. The generation network maps samples from stochastic latent variables to the data space while the inference network maps training examples in data space to the space of latent variables. An adversarial game is cast between these two networks and a discriminative network is trained to distinguish between joint latent/data-space samples from the generative network and joint samples from the inference network. We illustrate the ability of the model to learn mutually coherent inference and generation networks through the inspections of model samples and reconstructions and confirm the usefulness of the learned representations by obtaining a performance competitive with state-of-the-art on the semi-supervised SVHN and CIFAR10 tasks.
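The key difference from a plain GAN is that the discriminator judges (data, latent) pairs instead of data alone. Here is a rough sketch of that joint discriminator loss; the single linear layers, the sizes, and the deterministic encoder are simplifying assumptions on my part (the inference network can also be stochastic).

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
latent_dim, data_dim = 8, 32  # illustrative sizes

encoder = nn.Linear(data_dim, latent_dim)  # inference network: x -> z (toy stand-in)
decoder = nn.Linear(latent_dim, data_dim)  # generation network: z -> x (toy stand-in)
disc = nn.Sequential(
    nn.Linear(data_dim + latent_dim, 64), nn.LeakyReLU(0.2), nn.Linear(64, 1)
)


def joint_logits(x, z):
    # The discriminator always sees a data point together with a latent code.
    return disc(torch.cat([x, z], dim=1))


x_real = torch.randn(4, data_dim)     # stand-in for training examples
z_prior = torch.randn(4, latent_dim)  # samples from the latent prior

enc_pair = joint_logits(x_real, encoder(x_real))    # (x, inferred z)
dec_pair = joint_logits(decoder(z_prior), z_prior)  # (generated x, z)

bce = nn.BCEWithLogitsLoss()
# D learns to tell the two kinds of pairs apart; encoder and decoder are trained to fool it.
d_loss = bce(enc_pair, torch.ones_like(enc_pair)) + bce(dec_pair, torch.zeros_like(dec_pair))
```

The same pairing idea applies to the BiGAN entry that follows, which also feeds joint (data, latent) pairs to its discriminator.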

BiGAN

The ability of the Generative Adversarial Networks (GANs) framework to learn generative models mapping from simple latent distributions to arbitrarily complex data distributions has been demonstrated empirically, with compelling results showing that the latent space of such generators captures semantic variation in the data distribution. Intuitively, models trained to predict these semantic latent representations given data may serve as useful feature representations for auxiliary problems where semantics are relevant. However, in their existing form, GANs have no means of learning the inverse mapping – projecting data back into the latent space. We propose Bidirectional Generative Adversarial Networks (BiGANs) as a means of learning this inverse mapping, and demonstrate that the resulting learned feature representation is useful for auxiliary supervised discrimination tasks, competitive with contemporary approaches to unsupervised and self-supervised feature learning.

BiGAN: theory and PyTorch implementation

CartoonGAN

In this paper, we propose a solution to transforming photos of real-world scenes into cartoon style images, which is valuable and challenging in computer vision and computer graphics. Our solution belongs to learning based methods, which have recently become popular to stylize images in artistic forms such as painting. However, existing methods do not produce satisfactory results for cartoonization, due to the fact that (1) cartoon styles have unique characteristics with high level simplification and abstraction, and (2) cartoon images tend to have clear edges, smooth color shading and relatively simple textures, which exhibit significant challenges for texture-descriptor-based loss functions used in existing methods. In this paper, we propose CartoonGAN, a generative adversarial network (GAN) framework for cartoon stylization. Our method takes unpaired photos and cartoon images for training, which is easy to use. Two novel losses suitable for cartoonization are proposed: (1) a semantic content loss, which is formulated as a sparse regularization in the high-level feature maps of the VGG network to cope with substantial style variation between photos and cartoons, and (2) an edge-promoting adversarial loss for preserving clear edges. We further introduce an initialization phase, to improve the convergence of the network to the target manifold. Our method is also much more efficient to train than existing methods. Experimental results show that our method is able to generate high-quality cartoon images from real-world photos (i.e., following specific artists’ styles and with clear edges and smooth shading) and outperforms state-of-the-art methods.

CartoonGAN: theory and PyTorch implementation (partial)

More content is available on my WeChat official account.