paddlepaddle-加载预训练模型

参考GitHub:

https://github.com/yangninghua/deeplearning_backbone

首先上官方例子

参考:

https://github.com/PaddlePaddle/models/blob/develop/PaddleCV/image_classification/train.py

place = fluid.CUDAPlace(0) if args.use_gpu else fluid.CPUPlace()

exe = fluid.Executor(place)

exe.run(startup_prog)

if checkpoint is not None:

fluid.io.load_persistables(exe, checkpoint, main_program=train_prog)

add_arg('pretrained_model', str, None, "Whether to use pretrained model.")

pretrained_model = args.pretrained_model

if pretrained_model:

def if_exist(var):

return os.path.exists(os.path.join(pretrained_model, var.name))

fluid.io.load_vars(exe, pretrained_model, main_program=train_prog, predicate=if_exist)模型加载分成两种:

第一种是预训练模型

预训练的图像模式是可以借来使用的,即所谓迁移学习,将预训练模型的底层特征拿过来使用,接在新的图像模型结构上,对新的数据进行训练,这样就大大减少了训练数据量。

第二种是训练快照

if save_dirname is not None: fluid.io.save_inference_model( save_dirname, ["img"], [prediction], exe, model_filename=model_filename, params_filename=params_filename)

paddlepaddle最新的Fluid中,模型由一个或多个program来表示,program包含了block,block中包含了op和variable。在保存模型时,program–block–{op, variable}这一系列的拓扑结构会被保存成一个文件,variable具体的值会被保存在其他文件里。

https://blog.csdn.net/baidu_40840693/article/details/93396495

首先在上一个例子中的保存方式:

if save_dirname is not None:

fluid.io.save_inference_model(

save_dirname, ["img"], [prediction],

exe,

model_filename=model_filename,

params_filename=params_filename)https://github.com/PaddlePaddle/Paddle/issues/8973

paddlepaddle中调用fluid.io.save_inference_mode保存模型会保存模型的结构图,和模型Conv,BN,FC等层的权重

并不会保存快照,即

通过fluid.io.save_inference_mode 保存的模型,我们把它视为caffe、pytorch中的预训练模型

因为:

save_inference_model 存下来的,已经进行了图的剪枝,只能获取存储时设置的 fetch_list 中的变量,中间变量不可取我们看一下这个函数干了什么:

def save_inference_model(dirname,

feeded_var_names,

target_vars,

executor,

main_program=None,

model_filename=None,

params_filename=None,

export_for_deployment=True):

"""

Prune the given `main_program` to build a new program especially for inference,

and then save it and all related parameters to given `dirname` by the `executor`.

Args:

dirname(str): The directory path to save the inference model.

feeded_var_names(list[str]): Names of variables that need to be feeded data

during inference.

target_vars(list[Variable]): Variables from which we can get inference

results.

executor(Executor): The executor that saves the inference model.

main_program(Program|None): The original program, which will be pruned to

build the inference model. If is setted None,

the default main program will be used.

Default: None.

model_filename(str|None): The name of file to save the inference program

itself. If is setted None, a default filename

`__model__` will be used.

params_filename(str|None): The name of file to save all related parameters.

If it is setted None, parameters will be saved

in separate files .

export_for_deployment(bool): If True, programs are modified to only support

direct inference deployment. Otherwise,

more information will be stored for flexible

optimization and re-training. Currently, only

True is supported.

Returns:

target_var_name_list(list): The fetch variables' name list

Raises:

ValueError: If `feed_var_names` is not a list of basestring.

ValueError: If `target_vars` is not a list of Variable.

Examples:

.. code-block:: python

exe = fluid.Executor(fluid.CPUPlace())

path = "./infer_model"

fluid.io.save_inference_model(dirname=path, feeded_var_names=['img'],

target_vars=[predict_var], executor=exe)

# In this exsample, the function will prune the default main program

# to make it suitable for infering the `predict_var`. The pruned

# inference program is going to be saved in the "./infer_model/__model__"

# and parameters are going to be saved in separate files under folder

# "./infer_model".

"""

if isinstance(feeded_var_names, six.string_types):

feeded_var_names = [feeded_var_names]

elif export_for_deployment:

if len(feeded_var_names) > 0:

# TODO(paddle-dev): polish these code blocks

if not (bool(feeded_var_names) and all(

isinstance(name, six.string_types)

for name in feeded_var_names)):

raise ValueError("'feed_var_names' should be a list of str.")

if isinstance(target_vars, Variable):

target_vars = [target_vars]

elif export_for_deployment:

if not (bool(target_vars) and

all(isinstance(var, Variable) for var in target_vars)):

raise ValueError("'target_vars' should be a list of Variable.")

if main_program is None:

main_program = default_main_program()

if main_program._is_mem_optimized:

warnings.warn(

"save_inference_model must put before you call memory_optimize. \

the memory_optimize will modify the original program, \

is not suitable for saving inference model \

we save the original program as inference model.",

RuntimeWarning)

# fix the bug that the activation op's output as target will be pruned.

# will affect the inference performance.

# TODO(Superjomn) add an IR pass to remove 1-scale op.

with program_guard(main_program):

uniq_target_vars = []

for i, var in enumerate(target_vars):

if isinstance(var, Variable):

var = layers.scale(

var, 1., name="save_infer_model/scale_{}".format(i))

uniq_target_vars.append(var)

target_vars = uniq_target_vars

target_var_name_list = [var.name for var in target_vars]

# when a pserver and a trainer running on the same machine, mkdir may conflict

try:

os.makedirs(dirname)

except OSError as e:

if e.errno != errno.EEXIST:

raise

if model_filename is not None:

model_basename = os.path.basename(model_filename)

else:

model_basename = "__model__"

model_basename = os.path.join(dirname, model_basename)

# When export_for_deployment is true, we modify the program online so that

# it can only be loaded for inference directly. If it's false, the whole

# original program and related meta are saved so that future usage can be

# more flexible.

origin_program = main_program.clone()

if export_for_deployment:

main_program = main_program.clone()

global_block = main_program.global_block()

need_to_remove_op_index = []

for i, op in enumerate(global_block.ops):

op.desc.set_is_target(False)

if op.type == "feed" or op.type == "fetch":

need_to_remove_op_index.append(i)

for index in need_to_remove_op_index[::-1]:

global_block._remove_op(index)

main_program.desc.flush()

main_program = main_program._prune(targets=target_vars)

main_program = main_program._inference_optimize(prune_read_op=True)

fetch_var_names = [v.name for v in target_vars]

prepend_feed_ops(main_program, feeded_var_names)

append_fetch_ops(main_program, fetch_var_names)

with open(model_basename, "wb") as f:

f.write(main_program.desc.serialize_to_string())

else:

# TODO(panyx0718): Save more information so that it can also be used

# for training and more flexible post-processing.

with open(model_basename + ".main_program", "wb") as f:

f.write(main_program.desc.serialize_to_string())

main_program._copy_dist_param_info_from(origin_program)

if params_filename is not None:

params_filename = os.path.basename(params_filename)

save_persistables(executor, dirname, main_program, params_filename)

return target_var_name_list

但是如果我们想保存快照呢,就是训练突然中断,下一次接着上一次的学习率,权重,loss,梯度等接着训练

这种情况就是我们前面说的第二种,训练快照

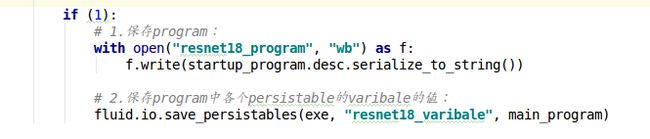

保存快照分为两步:

#1.保存program:

with open(filename, "wb") as f:

f.write(program.desc.serialize_to_string())

#2.保存program中各个persistable的varibale的值:

fluid.io.save_persistables(executor, dirname, main_program)

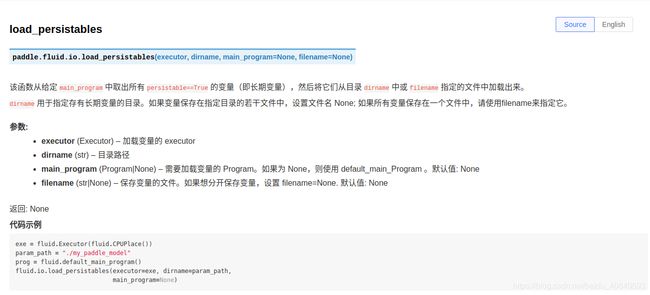

那么同样的,加载快照,也分为两步:

#1.恢复program:

with open(filename, "rb") as f:

program_desc_str = f.read()

program = Program.parse_from_string(program_desc_str)

#2.恢复persistable的variable:

fluid.io.load_persistables(executor, dirname, main_program)

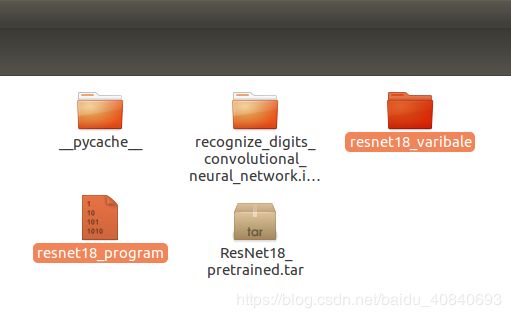

我们进行测试

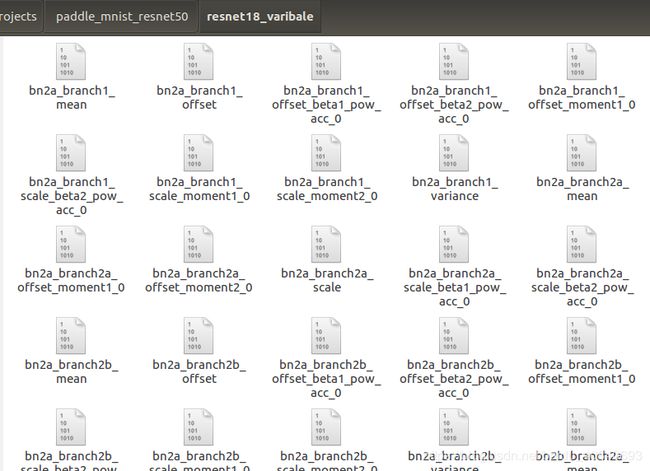

产生了两个文件:

resnet18_varibale中有300多项