confusion matrix

https://scikit-learn.org/stable/modules/model_evaluation.html

confusion [kən'fjuːʒ(ə)n]: n. mix-up, disorder, bewilderment

The confusion_matrix function evaluates classification accuracy by computing the confusion matrix, in which each row corresponds to the true class and each column to the predicted class. (Wikipedia and other references may use a different convention for the axes.)

By definition, entry $i, j$ in a confusion matrix is the number of observations actually in group $i$ but predicted to be in group $j$. Here is an example:

>>> from sklearn.metrics import confusion_matrix

>>> y_true = [2, 0, 2, 2, 0, 1]

>>> y_pred = [0, 0, 2, 2, 0, 2]

>>> confusion_matrix(y_true, y_pred)

array([[2, 0, 0],

[0, 0, 1],

[1, 0, 2]])
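The definition above can be checked by hand. The following is a minimal sketch, not scikit-learn's implementation: the helper name `confusion_counts` is hypothetical, and it assumes the class labels are ordered by sorting every label seen in either list. It counts each (true, predicted) pair and lays the counts out with true classes as rows, then transposes the result to show what the other axis convention would look like.

```python
from collections import Counter


def confusion_counts(y_true, y_pred):
    """Hypothetical helper: rows are true classes, columns are predicted classes."""
    # Assumption: labels are ordered by sorting all values seen in either list.
    labels = sorted(set(y_true) | set(y_pred))
    # Count how often each (true, predicted) pair occurs.
    pair_counts = Counter(zip(y_true, y_pred))
    return [[pair_counts[(t, p)] for p in labels] for t in labels]


y_true = [2, 0, 2, 2, 0, 1]
y_pred = [0, 0, 2, 2, 0, 2]

matrix = confusion_counts(y_true, y_pred)
for row in matrix:
    print(row)
# [2, 0, 0]
# [0, 0, 1]
# [1, 0, 2]

# The other axis convention (rows = predicted class) is just the transpose.
transposed = [list(col) for col in zip(*matrix)]
print(transposed)
# [[2, 0, 1], [0, 0, 0], [0, 1, 2]]
```

Reading row 2 of `matrix`: three observations truly belong to class 2, of which one was predicted as class 0 and two as class 2.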

#!/usr/bin/env python

# -*- coding: utf-8 -*-

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

from sklearn.metrics import confusion_matrix

y_true = [2, 0, 2, 2, 0, 1]

y_pred = [0, 0, 2, 2, 0, 2]

print(confusion_matrix(y_true, y_pred))

strong@foreverstrong:~/git_workspace/MonoGRNet$ python yongqiang.py

[[2 0 0]

[0 0 1]

[1 0 2]]

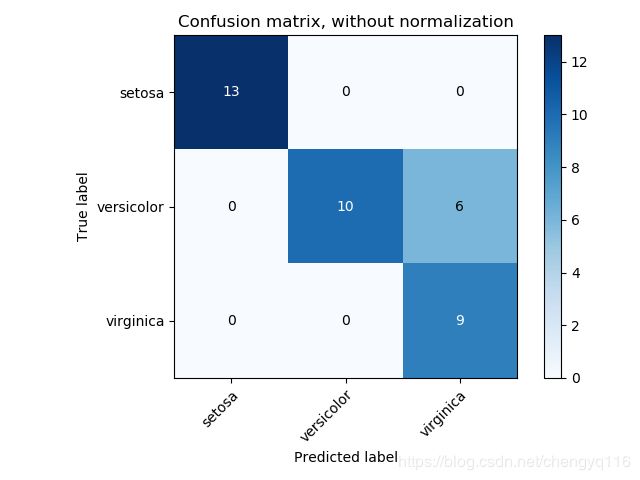

Here is a visual representation of such a confusion matrix (this figure comes from the Confusion matrix example):

For binary problems, we can get counts of true negatives, false positives, false negatives and true positives as follows:

>>> y_true = [0, 0, 0, 1, 1, 1, 1, 1]

>>> y_pred = [0, 1, 0, 1, 0, 1, 0, 1]

>>> tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

>>> tn, fp, fn, tp

(2, 1, 2, 3)
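The unpacking order works because flattening a 2x2 matrix row by row yields the top row (true class 0) followed by the bottom row (true class 1). As a rough sketch, assuming label 0 is the negative class and label 1 the positive class, the same four counts can be tallied directly from the pairs; the accuracy line is added here only for illustration:

```python
y_true = [0, 0, 0, 1, 1, 1, 1, 1]
y_pred = [0, 1, 0, 1, 0, 1, 0, 1]

# Assumption: 0 is the negative class, 1 is the positive class.
pairs = list(zip(y_true, y_pred))
tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives

print(tn, fp, fn, tp)
# 2 1 2 3

# Accuracy is the share of pairs on the diagonal of the matrix.
accuracy = (tn + tp) / len(pairs)
print(accuracy)
# 0.625
```

Laid out as a matrix with true classes as rows, these counts are `[[tn, fp], [fn, tp]]`, which is `[[2, 1], [2, 3]]` for this data.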

References

https://en.wikipedia.org/wiki/Confusion_matrix