List-wise Ranking

Background

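Ranking is a prediction task on a list of objects. Point-wise and pair-wise methods therefore train on a task that differs from the actual serving scenario, so list-wise methods should in principle do better.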

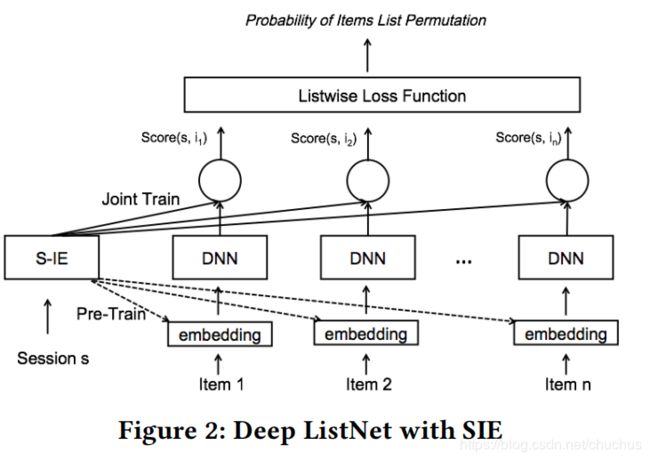

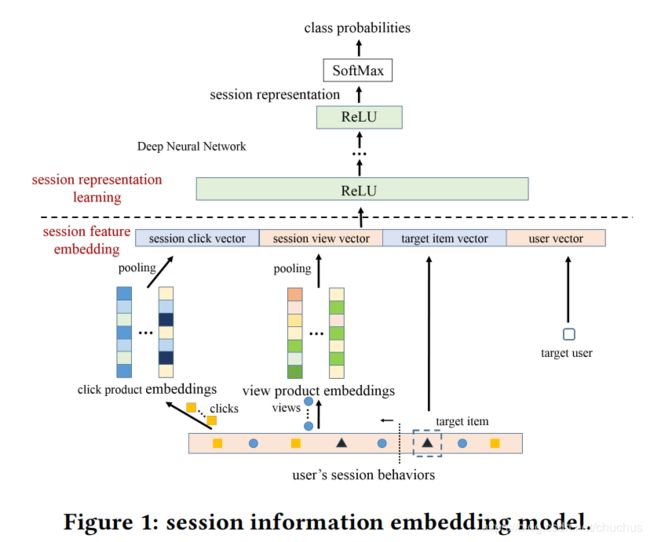

list-wise ranking with S-IE

For this paper, see reference [1].

Session Information Embedding (S-IE)

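This is essentially a pre-training stage: the task is binary classification of positive vs. negative samples, in preparation for the list-wise stage that follows.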

Figure: clicked items and impressed items are pooled separately, then concatenated with the target and user representations.

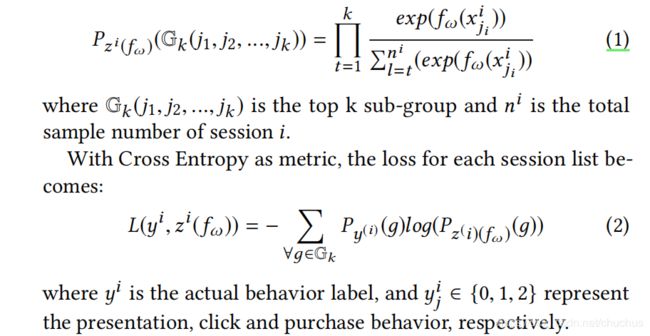
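The pooling-and-concatenation step in the figure can be sketched roughly as follows. This is only an illustration: mean pooling, the 2-dimensional embeddings, and the names `mean_pool` and `build_sie_input` are all assumptions of this sketch, not details taken from the paper.

```python
def mean_pool(vectors):
    """Average a non-empty list of equal-length vectors element-wise."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def build_sie_input(clicked, impressed, target, user):
    """Pool clicked and impressed item embeddings separately, then concatenate
    the two pooled vectors with the target and user vectors."""
    return mean_pool(clicked) + mean_pool(impressed) + target + user


# Hypothetical toy embeddings (dimension 2) for one session.
clicked = [[1.0, 3.0], [3.0, 5.0]]
impressed = [[0.0, 2.0], [2.0, 0.0], [4.0, 4.0]]
target = [0.5, 0.5]
user = [1.0, 0.0]

features = build_sie_input(clicked, impressed, target, user)
print(features)  # one flat vector that would feed the binary classifier
```

The resulting vector has a fixed length regardless of how many items were clicked or impressed, which is the point of pooling before concatenation.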

list-wise ranking

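Figure: the formulas in the paper are poorly typeset and contain errors. In Eq. (1), the subscript $i$ in the numerator should probably be $t$, and the subscript $i$ in the denominator should probably be $l$; in Eq. (2), the $i$ and the closing parenthesis should be in superscript position.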

Experiments

- Dataset: CIKM Cup 2016, which consists of search-engine logs from an e-commerce site.

- Metric: nDCG. CTR prediction is usually framed as binary classification and evaluated with AUC/GAUC; since the setting here is list-wise, nDCG is used instead.
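For reference, nDCG can be computed along these lines. This is a minimal sketch using the common $2^{r}-1$ gain and $\log_2$ position discount; whether paper [1] uses exactly this variant is an assumption here.

```python
import math


def dcg(relevances, k=None):
    """Discounted cumulative gain of relevance labels given in ranked order."""
    rels = relevances[:k] if k is not None else relevances
    return sum((2 ** r - 1) / math.log2(i + 2) for i, r in enumerate(rels))


def ndcg(relevances_in_ranked_order, k=None):
    """DCG of the predicted order divided by the DCG of the ideal order."""
    ideal = sorted(relevances_in_ranked_order, reverse=True)
    ideal_dcg = dcg(ideal, k)
    if ideal_dcg == 0:
        return 0.0  # no relevant item in the list at all
    return dcg(relevances_in_ranked_order, k) / ideal_dcg


# Labels of the items, listed in the order the model ranked them.
print(ndcg([3, 2, 1]))  # already in ideal order
print(ndcg([0, 1]))     # the only relevant item was ranked second
```

Unlike AUC, the score depends on where in the list each relevant item lands, so mistakes near the top cost more than mistakes near the bottom.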

My questions

- The representation of session $s$ is derived from the target, i.e. $rep(session)=f(target, other)$. So how does the target relate to the $n$ items in Figure 2? The paper says "each session with the contained item behaviors is treated as a list-wise training sample", but this is still not clear to me.

- Why use search-engine logs? Wouldn't a recommendation dataset be more direct?

Paper 2: ListNet

Loss definition

The list-wise part of paper 1 borrows from reference [2], an ICML 2007 paper from Microsoft.

The list-wise loss function is defined as:

$$\sum_{i=1}^{m} L\left(y^{(i)}, z^{(i)}\right) \tag{1}$$

where $m=|trainset|$, and $y^{(i)}=(y^{(i)}_1,y^{(i)}_2,...,y^{(i)}_{n^{(i)}})$ is a list holding the relevance scores of the $n^{(i)}$ documents associated with query $q^{(i)}$. Similarly, $z^{(i)}=(f(x^{(i)}_1),f(x^{(i)}_2),...,f(x^{(i)}_{n^{(i)}}))$ is the list of predicted relevance scores computed by the ranking function $f(\cdot)$.

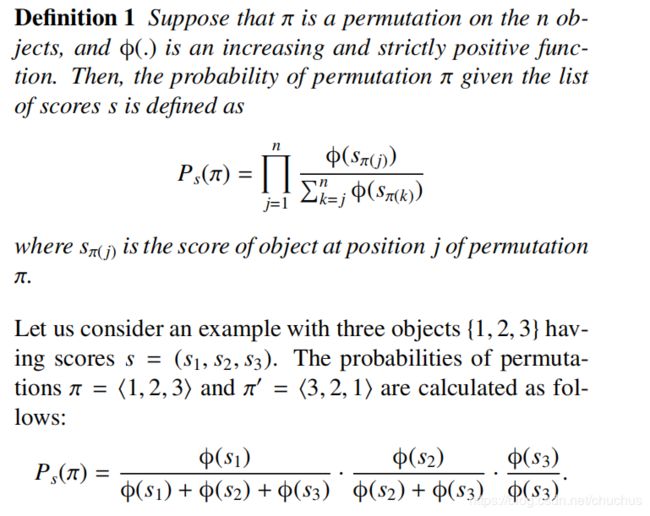

probability model

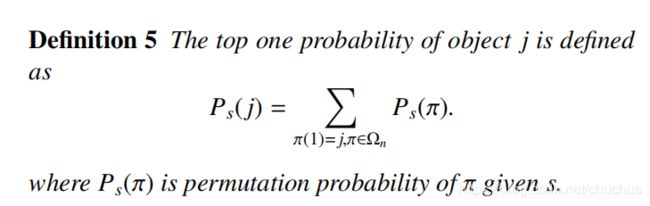

Figure: permutation probability.

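A list of size $n$ has $n!$ permutations, so computing the full permutation distribution is computationally infeasible (is this what NP-hard means here?). The paper therefore proposes the top one probability.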
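To make the $n!$ cost concrete, here is a small sketch that enumerates every permutation of a toy score list. It assumes the permutation probability of reference [2], $P_s(\pi)=\prod_{j=1}^{n}\frac{\phi(s_{\pi(j)})}{\sum_{k=j}^{n}\phi(s_{\pi(k)})}$, with $\phi=\exp$; the scores and function name are made up for illustration.

```python
import math
from itertools import permutations


def permutation_probability(scores, perm, phi=math.exp):
    """Probability of one ordering: at each position, the chosen item's phi(score)
    divided by the sum of phi(score) over the items not yet placed."""
    prob = 1.0
    for j in range(len(perm)):
        numerator = phi(scores[perm[j]])
        denominator = sum(phi(scores[perm[k]]) for k in range(j, len(perm)))
        prob *= numerator / denominator
    return prob


scores = [2.0, 1.0, 0.5]  # hypothetical scores for 3 documents
probs = {
    perm: permutation_probability(scores, perm)
    for perm in permutations(range(len(scores)))
}
total = sum(probs.values())
best = max(probs, key=probs.get)

print(len(probs), total, best)
for n in (5, 10, 20):
    print(n, math.factorial(n))  # number of terms a full distribution would need
```

With only 3 documents there are 6 terms; at 20 documents the count is already beyond anything that could be enumerated per training sample.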

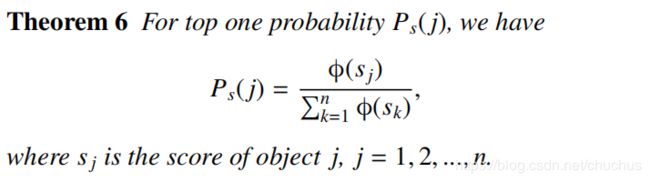

Figure: top one probability.

Figure: Theorem 6, which gives the probability that document $j$ is ranked first.

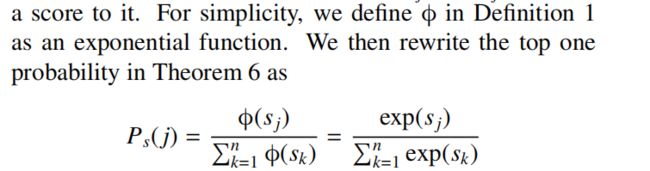

Figure: once $\phi(\cdot)$ is defined as the exponential function, Theorem 6 can be rewritten, and it becomes a softmax.
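The claim that the top one probability collapses to a softmax can be checked numerically on a small list. The sketch below (toy scores, hypothetical function names) sums the permutation probabilities of all orderings that start with document $j$ and compares the result with a plain softmax over the scores, assuming $\phi=\exp$.

```python
import math
from itertools import permutations


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def top_one_by_enumeration(scores):
    """Top one probability computed the slow way: marginalize over all n! orderings."""
    n = len(scores)
    top = [0.0] * n
    for perm in permutations(range(n)):
        prob = 1.0
        for j in range(n):
            remaining = sum(math.exp(scores[perm[k]]) for k in range(j, n))
            prob *= math.exp(scores[perm[j]]) / remaining
        top[perm[0]] += prob  # credit the document that is ranked first
    return top


scores = [2.0, 1.0, 0.5, -1.0]
fast = softmax(scores)                 # O(n)
slow = top_one_by_enumeration(scores)  # O(n * n!)
print(fast)
print(slow)
```

Both lists agree up to floating-point error, which is why the loss only ever needs the $O(n)$ softmax.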

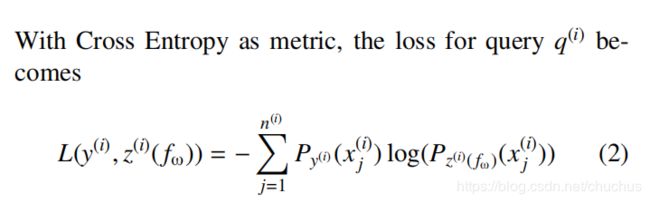

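Figure: after softmax turns the label list and the prediction list into probability distributions, cross entropy between the two is used as the loss function, which gives the final loss.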
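Put together, the per-query loss is $L(y,z)=-\sum_{j}P_{y}(j)\log P_{z}(j)$, where $P_y$ and $P_z$ are the softmax of the labels and of the predicted scores. A minimal sketch in plain Python, with made-up labels and scores:

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def listnet_loss(labels, predictions):
    """Cross entropy between the top one distributions of labels and predictions.
    Note: math.log would fail if a predicted probability underflowed to 0,
    which does not happen for the moderate scores used here."""
    p_true = softmax(labels)
    p_pred = softmax(predictions)
    return -sum(p * math.log(q) for p, q in zip(p_true, p_pred))


labels = [4.0, 2.0, 0.0]                        # graded relevance of 3 documents
good = listnet_loss(labels, [3.0, 1.0, -1.0])   # same order, same score gaps
bad = listnet_loss(labels, [-1.0, 1.0, 3.0])    # order reversed
print(good, bad)
```

Because softmax is shift-invariant, the first prediction yields exactly the label distribution and hits the minimum of the loss (the entropy of $P_y$); the reversed prediction is penalized heavily. Summing this quantity over all queries gives Eq. (1).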

Experiments

- Datasets: one of them is CSearch, which comes from a commercial search engine. This data set provides five levels of relevance judgments, ranging from 4 ("perfect match") to 0 ("bad match").

- Metrics: nDCG@5 and MAP (mean average precision).
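MAP works on binary relevance, so graded labels such as the 0 to 4 levels of CSearch have to be binarized first; the threshold used for that is not stated in these notes and is left out here. A rough sketch of the metric itself, assuming every relevant document of a query appears somewhere in its ranked list:

```python
def average_precision(relevant_flags):
    """Average of precision@rank taken at each rank that holds a relevant document.
    `relevant_flags` lists 1/0 relevance in the order the model ranked the documents."""
    hits = 0
    precision_sum = 0.0
    for rank, is_relevant in enumerate(relevant_flags, start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def mean_average_precision(queries):
    """Mean of the per-query average precision."""
    return sum(average_precision(q) for q in queries) / len(queries)


# Two hypothetical queries with their ranked binary relevance flags.
queries = [[1, 0, 1], [0, 1]]
print([average_precision(q) for q in queries])
print(mean_average_precision(queries))
```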

Discussion

- What "list-wise" really means

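"List-wise" only concerns the loss function. At prediction time the model still scores each item point-wise and sorts by score, and that sorted order is the ranking.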

Google's Seq2Slate paper has a clear description of this:

In listwise approaches the loss depends on the full permutation of items. Although these losses consider inter-item dependencies, the ranking function itself is pointwise, so at inference time the model still assigns a score to each item which does not depend on scores of other items (i.e., an item’s score will not change if it is placed in a different set).

- How the loss compares with ordinary multi-class classification

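They are already very similar: in recommender systems, a retrieval task can be designed as a multi-class classification in the style of a transformer. The difference is that the usual label is one-hot (possibly with label smoothing), whereas here the target is a less peaked distribution.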
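The comparison can be made concrete with one cross-entropy function and two different targets. In the sketch below (toy numbers, hypothetical names), the one-hot target reproduces the usual multi-class loss $-\log P_z(c)$, while the list-wise target is the softmax of graded relevance labels and spreads its mass over all items.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def cross_entropy(target_dist, scores):
    """Cross entropy between a target distribution and softmax(scores)."""
    pred = softmax(scores)
    return -sum(t * math.log(p) for t, p in zip(target_dist, pred) if t > 0)


scores = [2.0, 0.5, -1.0]                    # model scores for 3 items
one_hot = [1.0, 0.0, 0.0]                    # ordinary multi-class label
listwise_target = softmax([4.0, 2.0, 0.0])   # graded labels turned into a soft target

multiclass_loss = cross_entropy(one_hot, scores)
listwise_loss = cross_entropy(listwise_target, scores)
print(one_hot, multiclass_loss)
print(listwise_target, listwise_loss)
```

The code path is identical in both cases; only the shape of the target distribution changes, which is the sense in which the two losses are "already very similar".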

References

- [1] CIKM 2017, Alibaba, Session-aware Information Embedding for E-commerce Product Recommendation

- [2] ICML 2007, Learning to Rank: From Pairwise Approach to Listwise Approach