Goal: deploy a highly available httpd service (backed by NFS) with corosync v1 + pacemaker.

This lab uses CentOS 6.8 with three hosts: a FileSystem (NFS) server, NA1 (node 1) and NA2 (node 2). The VIP is 192.168.94.222.

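Contents:

1. corosync v1 + pacemaker basic installation

2. Installing crmsh, the pacemaker management tool

3. Resource management configuration

4. Creating resources: the basics

5. Creating a VIP resource

6. Creating an httpd resource

7. Resource constraints

8. Simulating failures and failover

9. httpd high-availability test

10. Creating an NFS filesystem resource

11. High-availability cluster test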

1. corosync v1 + pacemaker basic installation

See the earlier post: corosync v1 + pacemaker HA cluster deployment (Part 1): basic installation.

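2. Installing crmsh, the pacemaker management tool

Starting with pacemaker 1.1.8, crmsh became an independent project and is no longer shipped with pacemaker.

This means that after installing pacemaker we do not get the crm command-line resource manager. Several other management tools exist; in this lab we use crmsh.

This lab uses crmsh-3.0.0-6.1.noarch.rpm.

Install crmsh on both NA1 and NA2.

The first installation attempt reports the missing dependencies below. Find the corresponding rpm packages online and install them.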

    [root@na1 ~]# rpm -ivh crmsh-3.0.0-6.1.noarch.rpm
    warning: crmsh-3.0.0-6.1.noarch.rpm: Header V3 RSA/SHA256 Signature, key ID 17280ddf: NOKEY
    error: Failed dependencies:
        crmsh-scripts >= 3.0.0-6.1 is needed by crmsh-3.0.0-6.1.noarch
        python-dateutil is needed by crmsh-3.0.0-6.1.noarch
        python-parallax is needed by crmsh-3.0.0-6.1.noarch
        redhat-rpm-config is needed by crmsh-3.0.0-6.1.noarch
    [root@na1 ~]#

Install these two from rpm packages:

    rpm -ivh crmsh-scripts-3.0.0-6.1.noarch.rpm
    rpm -ivh python-parallax-1.0.1-28.1.noarch.rpm

These two can be installed with yum:

    yum install python-dateutil* -y
    yum install redhat-rpm-config* -y

Once they are installed, install crmsh itself:

    rpm -ivh crmsh-3.0.0-6.1.noarch.rpm
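A small script can capture the dependency order above, so the same steps run identically on NA1 and NA2. This is a minimal sketch and not part of the original procedure. The package file names are the ones used above, and the yum packages are named exactly instead of with globs. DRY_RUN, run and install_crmsh are hypothetical names chosen for illustration. By default the script only prints the commands.

```bash
#!/usr/bin/env bash
# Sketch: install crmsh and its dependencies in the order used above.
# Run on NA1 and NA2 from the directory that holds the downloaded .rpm files.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 executes them.
set -euo pipefail

DRY_RUN=${DRY_RUN:-1}

# Print or execute a command depending on DRY_RUN.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

install_crmsh() {
    # Dependencies downloaded as rpm files
    run rpm -ivh crmsh-scripts-3.0.0-6.1.noarch.rpm
    run rpm -ivh python-parallax-1.0.1-28.1.noarch.rpm
    # Dependencies available from the yum repositories
    run yum install -y python-dateutil redhat-rpm-config
    # crmsh itself, once its dependencies are in place
    run rpm -ivh crmsh-3.0.0-6.1.noarch.rpm
}

# Run the function named on the command line, e.g. "./install-crmsh.sh install_crmsh".
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    "$@"
fi
```

For example, on each node: DRY_RUN=0 bash install-crmsh.sh install_crmsh (the file name is an assumption).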

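3. Resource management configuration

crm synchronizes the resource configuration across the cluster nodes automatically. You only need to configure it on one node; there is no need to copy anything by hand.

On NA1:

Configuration modes

crm can be used in two ways: batch mode and interactive mode.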

Batch mode (type the command directly on the shell command line):

    [root@na1 ~]# crm ls
    cibstatus      help           site
    cd             cluster        quit
    end            script         verify
    exit           ra             maintenance
    bye            ?              ls
    node           configure      back
    report         cib            resource
    up             status         corosync
    options        history

Interactive mode (enter the crm(live)# prompt and type commands there; basic navigation such as ls, cd and cd .. works):

    [root@na1 ~]# crm
    crm(live)# ls
    cibstatus      help           site
    cd             cluster        quit
    end            script         verify
    exit           ra             maintenance
    bye            ?              ls
    node           configure      back
    report         cib            resource
    up             status         corosync
    options        history
    crm(live)#

Initial configuration check

After configuring resources in crm interactive mode, check the configuration for errors first and only then commit it.

Run a check before configuring anything:

    [root@na1 ~]# crm
    crm(live)# configure
    crm(live)configure# verify
    ERROR: error: unpack_resources: Resource start-up disabled since no STONITH resources have been defined
       error: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option
       error: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity
    Errors found during check: config not valid
    crm(live)configure#

The error appears because there is no STONITH device yet, so we disable STONITH for now:

    crm(live)configure# property stonith-enabled=false

Commit the change:

    crm(live)configure# commit
    crm(live)configure#
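You can also make the same STONITH change in batch mode, with one crm call per command. This sketch assumes that a batch-mode configure command is committed without an explicit commit. That matches the crm configure property no-quorum-policy=ignore call later in this post. DRY_RUN, run and disable_stonith are hypothetical helper names. By default the commands are only printed.

```bash
#!/usr/bin/env bash
# Sketch: disable STONITH in crm batch mode instead of the interactive session.
# Assumes batch-mode "crm configure ..." commits on its own (as with the
# no-quorum-policy change later in this post).
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 executes them.
set -euo pipefail

DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

disable_stonith() {
    run crm configure property stonith-enabled=false
    # verify should no longer print the unpack_resources STONITH errors
    run crm configure verify
    run crm configure show
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    "$@"
fi
```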

Show the current configuration:

    crm(live)configure# show
    node na1.server.com
    node na2.server.com
    property cib-bootstrap-options: \
        have-watchdog=false \
        dc-version=1.1.18-3.el6-bfe4e80420 \
        cluster-infrastructure="classic openais (with plugin)" \
        expected-quorum-votes=2 \
        stonith-enabled=false
    crm(live)configure#

And the cluster status:

    crm(live)# status
    Stack: classic openais (with plugin)
    Current DC: na1.server.com (version 1.1.18-3.el6-bfe4e80420) - partition with quorum
    Last updated: Sun May 24 21:38:15 2020
    Last change: Sun May 24 21:37:10 2020 by root via cibadmin on na1.server.com

    2 nodes configured (2 expected votes)
    0 resources configured

    Online: [ na1.server.com na2.server.com ]

    No resources

    crm(live)#

4. Creating resources: the basics

The basic resource type is primitive; the help command shows its documentation.

Syntax

    primitive <rsc> {[<class>:[<provider>:]]<type>|@<template>}
      [description=<description>]
      [[params] attr_list]
      [meta attr_list]
      [utilization attr_list]
      [operations id_spec]
      [op op_type [<attribute>=<value>...] ...]

    attr_list :: [$id=<id>] [<score>:] [rule...]
                 <attr>=<val> [<attr>=<val>...]] | $id-ref=<id>
    id_spec :: $id=<id> | $id-ref=<id>
    op_type :: start | stop | monitor

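A quick explanation

    primitive <resource name> <class>:<provider>:<agent name>

Class: the resource agent class, one of lsb, ocf, stonith, service.

Provider: who ships the resource agent, e.g. heartbeat, pacemaker.

Agent name: the resource agent itself, e.g. IPaddr2, httpd, mysql.

params: sets the instance attributes, i.e. the arguments passed to the agent (not the official term, but close enough).

meta: meta attributes. These are options you can add to a resource, and they tell the CRM how to handle that resource.

The remaining options are explained briefly when we need them.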
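The sketch below makes the structure of a primitive line explicit by assembling one from its parts: resource name, class, optional provider, agent type and params. primitive_line is a hypothetical helper for illustration and is not part of crmsh. It only covers the params part of the full syntax. It uses "-" for classes that have no provider, such as lsb, service and stonith.

```bash
#!/usr/bin/env bash
# Sketch: build a crmsh "primitive" line from its parts to show the
# <rsc> <class>:[<provider>:]<type> [params ...] structure.
# primitive_line is a hypothetical helper, not part of crmsh.
set -euo pipefail

# Usage: primitive_line <rsc> <class> <provider|-> <type> [attr=value ...]
# Pass "-" as the provider for classes without one (lsb, service, stonith).
primitive_line() {
    local rsc=$1 class=$2 provider=$3 type=$4
    shift 4
    local agent line
    if [ "$provider" = "-" ]; then
        agent="$class:$type"
    else
        agent="$class:$provider:$type"
    fi
    line="primitive $rsc $agent"
    # Any remaining arguments become instance attributes (params)
    if [ $# -gt 0 ]; then
        line="$line params $*"
    fi
    echo "$line"
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    "$@"
fi
```

For example, primitive_line VIP ocf heartbeat IPaddr ip=192.168.94.222 prints the same line that section 5 types at the configure prompt.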

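Before creating a resource, first find out which class it belongs to:

    # list the supported classes
    crm(live)ra# classes
    lsb
    ocf / .isolation heartbeat pacemaker
    service
    stonith
    # find out which provider ships an agent
    crm(live)ra# providers IPaddr
    heartbeat
    # list all resource agents of a class
    crm(live)ra# list service
    # show the IPaddr agent's information (its help), i.e. how to create this resource
    crm(live)ra# info ocf:heartbeat:IPaddr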

5. Creating a VIP resource

Look at the help for IPaddr. Parameters marked with an asterisk are required; set the others as needed.

    crm(live)ra# info ocf:heartbeat:IPaddr

    Parameters (*: required, []: default):

    ip* (string): IPv4 or IPv6 address
        The IPv4 (dotted quad notation) or IPv6 address (colon hexadecimal notation)
        example IPv4 "192.168.1.1".
        example IPv6 "2001:db8:DC28:0:0:FC57:D4C8:1FFF".

    nic (string): Network interface
        The base network interface on which the IP address will be brought online.
        If left empty, the script will try and determine this from the routing table.

Create the VIP resource at the configure level:

    crm(live)configure# primitive VIP ocf:heartbeat:IPaddr params ip=192.168.94.222
    crm(live)configure# verify
    crm(live)configure# commit
    crm(live)configure#

Check the resource status (the VIP started on na1):

    crm(live)# status
    Stack: classic openais (with plugin)
    Current DC: na1.server.com (version 1.1.18-3.el6-bfe4e80420) - partition with quorum
    Last updated: Sun May 24 22:12:05 2020
    Last change: Sun May 24 22:11:15 2020 by root via cibadmin on na1.server.com

    2 nodes configured (2 expected votes)
    1 resource configured

    Online: [ na1.server.com na2.server.com ]

    Full list of resources:

     VIP (ocf::heartbeat:IPaddr): Started na1.server.com

    crm(live)#
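Scripted checks often need the node a resource is running on. This sketch extracts it from resource lines in the status format shown above: name, agent, then Started and the node name. where_is is a hypothetical helper. Other pacemaker or crmsh versions may format these lines differently. The helper prints nothing for a stopped resource.

```bash
#!/usr/bin/env bash
# Sketch: print the node a resource is started on, parsed from lines such as
#   VIP (ocf::heartbeat:IPaddr): Started na1.server.com
# in the "Full list of resources" part of crm status (format as seen in this post).
set -euo pipefail

# Usage: crm status | where_is VIP
# Prints nothing if the resource is stopped or not listed.
where_is() {
    awk -v rsc="$1" '$1 == rsc && $3 == "Started" { print $4 }'
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    "$@"
fi
```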

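6. Creating an httpd resource

Prepare httpd on both nodes. Stop it and keep it from starting at boot, because the cluster will manage it. Each node gets its own index.html, so we can tell which node is serving the page.

    NA1
    [root@na1 ~]# chkconfig httpd off
    [root@na1 ~]# service httpd stop
    Stopping httpd:                                            [  OK  ]
    [root@na1 ~]# echo "na1.server.com" >> /var/www/html/index.html
    [root@na1 ~]#

    NA2
    [root@na2 ~]# chkconfig httpd off
    [root@na2 ~]# service httpd stop
    Stopping httpd:                                            [  OK  ]
    [root@na2 ~]# echo "na2.server.com" >> /var/www/html/index.html
    [root@na2 ~]#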

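Create the httpd resource:

    crm(live)configure# primitive httpd service:httpd httpd
    crm(live)configure# verify
    crm(live)configure# commit
    crm(live)configure#

The trailing httpd after service:httpd is not needed. crmsh stores it as a stray "params httpd" line, which is visible in the crm configure show output in section 11. primitive httpd service:httpd is enough.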

Check the resource status:

    crm(live)# status
    Stack: classic openais (with plugin)
    Current DC: na1.server.com (version 1.1.18-3.el6-bfe4e80420) - partition with quorum
    Last updated: Sun May 24 22:22:47 2020
    Last change: Sun May 24 22:17:35 2020 by root via cibadmin on na1.server.com

    2 nodes configured (2 expected votes)
    2 resources configured

    Online: [ na1.server.com na2.server.com ]

    Full list of resources:

     VIP (ocf::heartbeat:IPaddr): Started na1.server.com
     httpd (service:httpd): Started na2.server.com

    crm(live)#

VIP is on na1 and httpd is on na2, which won't work. By default pacemaker spreads resources evenly across the nodes, so we need constraints to keep these two together.

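7. Resource constraints

Resource constraints specify three things: which cluster nodes a resource may run on, the order in which resources are started, and which other resources a given resource depends on.

Pacemaker provides three kinds of resource constraints:

1) Resource Location: defines the nodes on which a resource can, cannot, or preferably should run;

2) Resource Colocation: defines whether cluster resources may or may not run on the same node at the same time;

3) Resource Order: defines the order in which cluster resources are started on a node.

In short: 1. which node a resource prefers to run on; 2. whether two resources must or must not run together; 3. the start order, i.e. which resource starts first and which one follows.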

Colocation

Here we use a colocation constraint to bind VIP and httpd together, so that they must run on the same node.

    # check the help and follow its examples
    crm(live)configure# help collocation
    Example:
    colocation never_put_apache_with_dummy -inf: apache dummy
    colocation c1 inf: A ( B C )

The score -inf means the resources must never run together, and inf means they must always run together. never_put_apache_with_dummy is just the constraint's name, chosen to be easy to remember. Add the constraint (crmsh accepts collocation as well as colocation; the saved configuration shows colocation):

    crm(live)configure# collocation vip_with_httpd inf: VIP httpd
    crm(live)configure# verify
    crm(live)configure# commit
    crm(live)configure#

Check the resource status. Both resources now run on na2. We want them to stay on na1, so we constrain them further:

    crm(live)# status
    Stack: classic openais (with plugin)
    Current DC: na1.server.com (version 1.1.18-3.el6-bfe4e80420) - partition with quorum
    Last updated: Sun May 24 22:38:55 2020
    Last change: Sun May 24 22:37:44 2020 by root via cibadmin on na1.server.com

    2 nodes configured (2 expected votes)
    2 resources configured

    Online: [ na1.server.com na2.server.com ]

    Full list of resources:

     VIP (ocf::heartbeat:IPaddr): Started na2.server.com
     httpd (service:httpd): Started na2.server.com

    crm(live)#

Location constraints

Pin the resources to na1 with a Location constraint.

Look at the help. The number in the examples is a score: the higher the score, the more preferred the node. The cluster runs the resource on the node with the highest score, and the default score is 0.

    Examples:
    location conn_1 internal_www 100: node1

    location conn_1 internal_www \
      rule 50: #uname eq node1 \
      rule pingd: defined pingd

    location conn_2 dummy_float \
      rule -inf: not_defined pingd or pingd number:lte 0

    # never probe for rsc1 on node1
    location no-probe rsc1 resource-discovery=never -inf: node1

Add the constraint. Constraining VIP alone is enough, because VIP and httpd are already colocated: together, together, together.

    crm(live)configure# location vip_httpd_prefer_na1 VIP 100: na1.server.com
    crm(live)configure# verify
    crm(live)configure# commit

Check the resource status.

Both resources now run on NA1:

    crm(live)# status
    Stack: classic openais (with plugin)
    Current DC: na1.server.com (version 1.1.18-3.el6-bfe4e80420) - partition with quorum
    Last updated: Sun May 24 22:48:22 2020
    Last change: Sun May 24 22:48:10 2020 by root via cibadmin on na1.server.com

    2 nodes configured (2 expected votes)
    2 resources configured

    Online: [ na1.server.com na2.server.com ]

    Full list of resources:

     VIP (ocf::heartbeat:IPaddr): Started na1.server.com
     httpd (service:httpd): Started na1.server.com

    crm(live)#
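A few lines are enough to model the scoring rule above. Every node starts at 0, each location constraint adds its score, and the highest total wins. This is a deliberately simplified sketch. Real pacemaker also weighs stickiness, colocation, infinite scores and its own load-balancing tie-breaking, which is why VIP and httpd first landed on different nodes. pick_node is a hypothetical helper that handles integer scores only. Ties go to the first listed node, and the script needs bash 4 for associative arrays.

```bash
#!/usr/bin/env bash
# Simplified model of location scoring (NOT pacemaker's full algorithm):
# every node starts at 0, each "node:score" constraint adds its score,
# and the node with the highest total wins. Ties go to the first listed node.
# Only integer scores are handled; inf/-inf, stickiness and colocation are ignored.
# Requires bash 4+ (associative arrays).
set -euo pipefail

# Usage: pick_node "<node> <node> ..." [<node>:<score> ...]
pick_node() {
    local nodes=$1
    shift
    local -A score
    local n c
    for n in $nodes; do
        score[$n]=0
    done
    # Add each constraint's score to its node
    for c in "$@"; do
        n=${c%%:*}
        score[$n]=$(( ${score[$n]:-0} + ${c##*:} ))
    done
    local best="" best_score=0
    for n in $nodes; do
        if [ -z "$best" ] || [ "${score[$n]}" -gt "$best_score" ]; then
            best=$n
            best_score=${score[$n]}
        fi
    done
    echo "$best"
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    "$@"
fi
```

With the constraint above, pick_node "na1.server.com na2.server.com" na1.server.com:100 selects na1.server.com.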

8. Simulating failures and failover

Switching between active and standby

The crm node level holds the per-node operations. standby puts a node into standby, and online brings it back online.

    crm(live)node# --help
    bye          exit         maintenance  show         utilization
    -h           cd           fence        online       standby
    ?            clearstate   help         quit         status
    attribute    delete       list         ready        status-attr
    back         end          ls           server       up
    crm(live)node#

Take na1 offline and bring it back online. Without a node name, node standby and node online act on the node you run them on, which is na1 here:

    # put na1 into standby
    crm(live)# node standby
    crm(live)# status
    Stack: classic openais (with plugin)
    Current DC: na1.server.com (version 1.1.18-3.el6-bfe4e80420) - partition with quorum
    Last updated: Sun May 24 22:56:18 2020
    Last change: Sun May 24 22:56:15 2020 by root via crm_attribute on na1.server.com

    2 nodes configured (2 expected votes)
    2 resources configured

    Node na1.server.com: standby
    Online: [ na2.server.com ]

    Full list of resources:

     VIP (ocf::heartbeat:IPaddr): Started na2.server.com
     httpd (service:httpd): Started na2.server.com

    # bring na1 back online
    crm(live)# node online
    crm(live)# status
    Stack: classic openais (with plugin)
    Current DC: na1.server.com (version 1.1.18-3.el6-bfe4e80420) - partition with quorum
    Last updated: Sun May 24 22:56:29 2020
    Last change: Sun May 24 22:56:27 2020 by root via crm_attribute on na1.server.com

    2 nodes configured (2 expected votes)
    2 resources configured

    Online: [ na1.server.com na2.server.com ]

    Full list of resources:

     VIP (ocf::heartbeat:IPaddr): Started na1.server.com
     httpd (service:httpd): Started na1.server.com

    crm(live)#

The resources move back to na1 automatically. The location constraint defined earlier gives na1 a score of 100, so they return as soon as na1 is available again.
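For repeated testing, the standby/online drill can be scripted. This is a sketch. NODE, the 10-second settle time (SETTLE) and the helper names are assumptions. crm node standby and crm node online receive an explicit node name, so the script can run from either node. DRY_RUN defaults to 1, which only prints the commands.

```bash
#!/usr/bin/env bash
# Sketch: the standby/online drill from this section as a repeatable script.
# NODE and the 10-second settle time are assumptions; adjust as needed.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 executes them.
set -euo pipefail

DRY_RUN=${DRY_RUN:-1}
NODE=${NODE:-na1.server.com}
SETTLE=${SETTLE:-10}

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

failover_drill() {
    # Resources should move to the other node
    run crm node standby "$NODE"
    run sleep "$SETTLE"
    run crm status
    # With the 100-point location score on na1, they should move back
    run crm node online "$NODE"
    run sleep "$SETTLE"
    run crm status
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    "$@"
fi
```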

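Physical failure

Stop the cluster service (corosync) on na1 and check the status from na2:

    [root@na2 ~]# crm status
    Stack: classic openais (with plugin)
    Current DC: na2.server.com (version 1.1.18-3.el6-bfe4e80420) - partition WITHOUT quorum
    Last updated: Sun May 24 23:20:29 2020
    Last change: Sun May 24 22:56:27 2020 by root via crm_attribute on na1.server.com

    2 nodes configured (2 expected votes)
    2 resources configured

    Online: [ na2.server.com ]
    OFFLINE: [ na1.server.com ]

    Full list of resources:

     VIP (ocf::heartbeat:IPaddr): Stopped
     httpd (service:httpd): Stopped

    [root@na2 ~]#

na2 is online, yet the resources are stopped.

About quorum

The cluster works normally only when the votes held by live nodes are greater than or equal to the quorum.

When the total number of votes is odd, quorum = (votes + 1) / 2.

When the total number of votes is even, quorum = votes / 2 + 1.

We have 2 nodes, so the quorum is 2 and at least 2 nodes must be alive. With one node stopped, na2's partition has no quorum. Under the default no-quorum-policy (stop), pacemaker stops every resource in that partition.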
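Both quorum rules above mean "more than half of the votes". Integer division writes that as votes / 2 + 1 for odd and even vote counts alike. For 3 votes, for example: 3 / 2 + 1 = 2 = (3 + 1) / 2. Below is a small sketch with the hypothetical helper names quorum and has_quorum:

```bash
#!/usr/bin/env bash
# Quorum as "more than half of the votes": for odd n, (n + 1) / 2 equals
# n / 2 + 1 under integer division, so one formula covers both cases.
set -euo pipefail

# Usage: quorum <expected_votes>
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Usage: has_quorum <live_votes> <expected_votes>  (exit status 0 = quorate)
has_quorum() {
    [ "$1" -ge "$(quorum "$2")" ]
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    "$@"
fi
```

has_quorum 1 2 fails, which is exactly na2's situation: 1 live vote out of 2 expected, while the quorum is 2.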

That is not very friendly for a 2-node cluster. We can tell the cluster to ignore the loss of quorum:

    crm configure property no-quorum-policy=ignore

Check the resource status (the resources now start normally):

    [root@na2 ~]# crm configure property no-quorum-policy=ignore
    [root@na2 ~]# crm status
    Stack: classic openais (with plugin)
    Current DC: na2.server.com (version 1.1.18-3.el6-bfe4e80420) - partition WITHOUT quorum
    Last updated: Sun May 24 23:27:02 2020
    Last change: Sun May 24 23:26:58 2020 by root via cibadmin on na2.server.com

    2 nodes configured (2 expected votes)
    2 resources configured

    Online: [ na2.server.com ]
    OFFLINE: [ na1.server.com ]

    Full list of resources:

     VIP (ocf::heartbeat:IPaddr): Started na2.server.com
     httpd (service:httpd): Started na2.server.com

    [root@na2 ~]#

9. httpd high-availability test

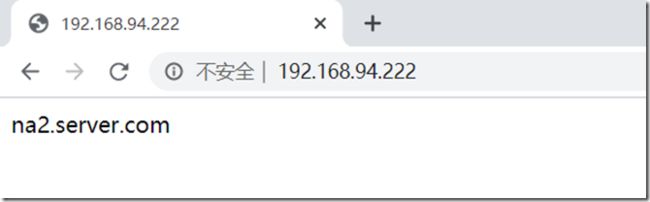

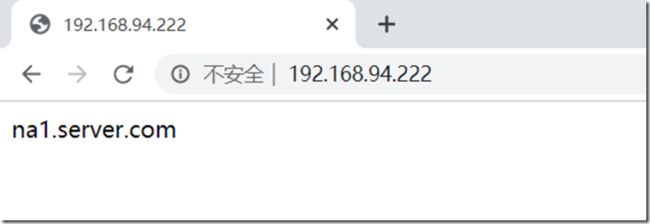

Now na2 is online, the resources run on na2 and NA1 is down. Access the VIP.

Start the cluster service on na1 again:

    [root@na1 ~]# service corosync start
    Starting Corosync Cluster Engine (corosync):               [  OK  ]
    [root@na1 ~]# crm status
    Stack: classic openais (with plugin)
    Current DC: na2.server.com (version 1.1.18-3.el6-bfe4e80420) - partition with quorum
    Last updated: Sun May 24 23:32:36 2020
    Last change: Sun May 24 23:26:58 2020 by root via cibadmin on na2.server.com

    2 nodes configured (2 expected votes)
    2 resources configured

    Online: [ na1.server.com na2.server.com ]

    Full list of resources:

     VIP (ocf::heartbeat:IPaddr): Started na1.server.com
     httpd (service:httpd): Started na1.server.com

    [root@na1 ~]#

Access the VIP again.

The httpd service is now highly available.

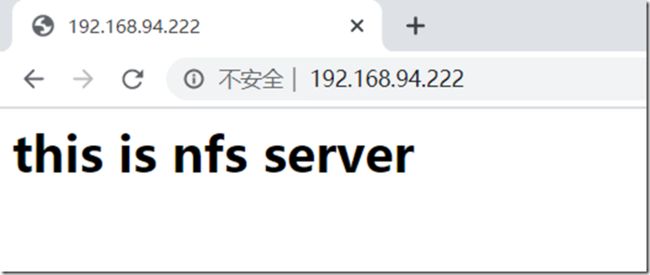
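You can watch the failover happen by polling the VIP once a second while corosync is stopped and started on NA1. This is a sketch that relies on each node's index.html holding that node's hostname (section 6). After the NFS resource in section 10 is added, both nodes serve the same page, so only availability is visible. VIP_URL, FETCH and poll_vip are assumptions. FETCH is any command that prints a page body for a URL, and it defaults to curl.

```bash
#!/usr/bin/env bash
# Sketch: poll the VIP once a second and print which page answered, to watch
# failover while corosync is stopped/started on NA1.
# VIP_URL and FETCH are assumptions; FETCH is any command that prints the
# body for a URL (curl by default).
set -euo pipefail

VIP_URL=${VIP_URL:-http://192.168.94.222/}
FETCH=${FETCH:-curl -s --max-time 2}

# Usage: poll_vip <count>
poll_vip() {
    local i body
    for (( i = 1; i <= $1; i++ )); do
        # A failed request is reported instead of aborting the loop
        body=$($FETCH "$VIP_URL" 2>/dev/null || true)
        printf '%s %s\n' "$(date +%T)" "${body:-<no answer>}"
        if [ "$i" -lt "$1" ]; then
            sleep 1
        fi
    done
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    "$@"
fi
```

For example, run ./poll-vip.sh poll_vip 60 on a client (the file name is an assumption) while stopping corosync on NA1.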

10. Creating an NFS filesystem resource

Testing the NFS service

The NFS server configuration is omitted here.

Disable SELinux on the NA1 and NA2 nodes.

NFS server: 192.168.94.131

    [root@filesystem ~]# exportfs
    /file/web       192.168.0.0/255.255.0.0
    [root@filesystem ~]#

Test mounting the export:

    [root@na1 ~]# mkdir /mnt/web
    [root@na1 ~]# mount -t nfs 192.168.94.131:/file/web /mnt/web
    [root@na1 ~]# cat /mnt/web/index.html
    <h1>this is nfs server</h1>
    [root@na1 ~]# umount /mnt/web/
    [root@na1 ~]#

Creating the NFS filesystem resource

Check the help (Filesystem has three required parameters):

    crm(live)ra# info ocf:heartbeat:Filesystem
    Parameters (*: required, []: default):

    device* (string): block device
        The name of block device for the filesystem, or -U, -L options for mount, or NFS mount specification.

    directory* (string): mount point
        The mount point for the filesystem.

    fstype* (string): filesystem type
        The type of filesystem to be mounted.

Create the nfs resource:

    crm(live)configure# primitive nfs ocf:heartbeat:Filesystem params device=192.168.94.131:/file/web directory=/var/www/html fstype=nfs
    crm(live)configure# verify
    crm(live)configure# commit

Check the status. nfs started on na2, but it has to run together with httpd, so we need more constraints:

    crm(live)# status
    Stack: classic openais (with plugin)
    Current DC: na2.server.com (version 1.1.18-3.el6-bfe4e80420) - partition with quorum
    Last updated: Sun May 24 23:48:09 2020
    Last change: Sun May 24 23:44:24 2020 by root via cibadmin on na1.server.com

    2 nodes configured (2 expected votes)
    3 resources configured

    Online: [ na1.server.com na2.server.com ]

    Full list of resources:

     VIP (ocf::heartbeat:IPaddr): Started na1.server.com
     httpd (service:httpd): Started na1.server.com
     nfs (ocf::heartbeat:Filesystem): Started na2.server.com

    crm(live)#
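On the active node, you can confirm that the Filesystem resource actually mounted the export by looking for the device and mount-point pair in /proc/mounts. This is a sketch, and is_mounted is a hypothetical helper. Its optional third argument exists only so it can be tried against a sample file.

```bash
#!/usr/bin/env bash
# Sketch: check that <device> is mounted on <mountpoint>, using the
# /proc/mounts format "<device> <mountpoint> <fstype> <options> 0 0".
# The optional third argument replaces /proc/mounts (for testing only).
set -euo pipefail

# Usage: is_mounted <device> <mountpoint> [mounts_file]  (exit status 0 = mounted)
is_mounted() {
    awk -v dev="$1" -v dir="$2" \
        '$1 == dev && $2 == dir { found = 1 } END { exit !found }' \
        "${3:-/proc/mounts}"
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    "$@"
fi
```

On the node running nfs: is_mounted 192.168.94.131:/file/web /var/www/html && echo mounted.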

Colocation and order constraints

Of the three kinds of constraints described earlier, one is order.

For httpd and nfs, nfs must start first and httpd after it.

So we add a colocation constraint and order constraints for httpd and nfs:

    crm(live)configure# colocation httpd_with_nfs inf: httpd nfs
    # start nfs first, then httpd
    crm(live)configure# order nfs_first Mandatory: nfs httpd
    # start httpd first, then VIP
    crm(live)configure# order httpd_first Mandatory: httpd VIP
    crm(live)configure# verify
    crm(live)configure# commit
    crm(live)configure#
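For reference, you can replay the whole configuration built in sections 3 to 10 in batch mode, for example to rebuild a lab cluster. This is only a sketch. The commands are the ones from this post, with httpd created without the redundant trailing argument. The helper names are hypothetical, and DRY_RUN defaults to 1. Each batch call is a separate change, so resources may briefly start on different nodes before the constraints exist. Entering everything in one interactive configure session and committing once avoids that.

```bash
#!/usr/bin/env bash
# Sketch: replay this post's cluster configuration in crm batch mode.
# Each "crm configure ..." call is a separate change (assumed to commit itself).
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 executes them.
set -euo pipefail

DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

configure_web_cluster() {
    # Cluster properties (sections 3 and 8)
    run crm configure property stonith-enabled=false
    run crm configure property no-quorum-policy=ignore
    # Resources (sections 5, 6 and 10)
    run crm configure primitive VIP ocf:heartbeat:IPaddr params ip=192.168.94.222
    run crm configure primitive httpd service:httpd
    run crm configure primitive nfs ocf:heartbeat:Filesystem \
        params device=192.168.94.131:/file/web directory=/var/www/html fstype=nfs
    # Constraints (sections 7 and 10)
    run crm configure colocation vip_with_httpd inf: VIP httpd
    run crm configure location vip_httpd_prefer_na1 VIP 100: na1.server.com
    run crm configure colocation httpd_with_nfs inf: httpd nfs
    run crm configure order nfs_first Mandatory: nfs httpd
    run crm configure order httpd_first Mandatory: httpd VIP
    run crm configure verify
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    "$@"
fi
```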

11. High-availability cluster test

Check the status:

    crm(live)# status
    Stack: classic openais (with plugin)
    Current DC: na2.server.com (version 1.1.18-3.el6-bfe4e80420) - partition with quorum
    Last updated: Mon May 25 00:00:23 2020
    Last change: Sun May 24 23:58:47 2020 by root via cibadmin on na1.server.com

    2 nodes configured (2 expected votes)
    3 resources configured

    Online: [ na1.server.com na2.server.com ]

    Full list of resources:

     VIP (ocf::heartbeat:IPaddr): Started na1.server.com
     httpd (service:httpd): Started na1.server.com
     nfs (ocf::heartbeat:Filesystem): Started na1.server.com

    crm(live)#

Access the VIP.

Stop the cluster service on NA1 and check the status on NA2:

    [root@na2 ~]# crm status
    Stack: classic openais (with plugin)
    Current DC: na2.server.com (version 1.1.18-3.el6-bfe4e80420) - partition WITHOUT quorum
    Last updated: Mon May 25 00:01:42 2020
    Last change: Sun May 24 23:58:47 2020 by root via cibadmin on na1.server.com

    2 nodes configured (2 expected votes)
    3 resources configured

    Online: [ na2.server.com ]
    OFFLINE: [ na1.server.com ]

    Full list of resources:

     VIP (ocf::heartbeat:IPaddr): Started na2.server.com
     httpd (service:httpd): Started na2.server.com
     nfs (ocf::heartbeat:Filesystem): Started na2.server.com

    [root@na2 ~]#

Access the VIP again.

Show the configuration

    [root@na2 ~]# crm configure show
    node na1.server.com \
        attributes standby=off
    node na2.server.com
    primitive VIP IPaddr \
        params ip=192.168.94.222
    primitive httpd service:httpd \
        params httpd
    primitive nfs Filesystem \
        params device="192.168.94.131:/file/web" directory="/var/www/html" fstype=nfs
    order httpd_first Mandatory: httpd VIP
    colocation httpd_with_nfs inf: httpd nfs
    order nfs_first Mandatory: nfs httpd
    location vip_httpd_prefer_na1 VIP 100: na1.server.com
    colocation vip_with_httpd inf: VIP httpd
    property cib-bootstrap-options: \
        have-watchdog=false \
        dc-version=1.1.18-3.el6-bfe4e80420 \
        cluster-infrastructure="classic openais (with plugin)" \
        expected-quorum-votes=2 \
        stonith-enabled=false \
        no-quorum-policy=ignore
    [root@na2 ~]#

Show the configuration as XML:

    crm configure show xml

And what if you make a mistake part-way through the configuration?

    [root@na2 ~]# crm
    crm(live)# configure
    # you can edit the configuration directly
    crm(live)configure# edit
    node na1.server.com \
        attributes standby=off
    node na2.server.com
    primitive VIP IPaddr \
        params ip=192.168.94.222
    primitive httpd service:httpd \

Reading or working out: one of them is always in progress.