spark分布式大数据计算7一spark和pyspark的安装和启动

本文参考自:https://blog.csdn.net/ouyangyanlan/article/details/52355350

原文中有Hadoop相关内容,我这边没有使用相关功能,于是忽略Hadoop的相关安装和配置操作。

前面我们已经学习了spark的基础知识了,那我们就来实际操练一下。我们的数据量并不大,所以本文搭建的是一个单机版的spark。服务端和客户端都是在同一个机器上。

首先,spark分为

- 服务端

- 客户端。

此处我们使用Python客户端(spark支持Scala、Java和Python这3种客户端)。

客户端比较简单,安装对应的工具包,导入项目即可。

服务端比较复杂,需要以下3个:

- Java8环境

- Scala环境

- spark

一、spark服务端安装

1.1 安装Java8

安装Java环境,参见我的另一篇博客:https://blog.csdn.net/gaitiangai/article/details/103009888

1.2 安装Scala

这个比较简单,下载、解压,配置环境变量即可。

1.2.1 Scala下载

进入Scala官网:https://www.scala-lang.org/download/

拉到底,点击下载我圈中的这个,然后移动到你的Linux系统中。

1.2.2 Scala安装配置

解压Scala

tar -zxf 你的tgz压缩包名称

配置环境变量(我是解压到/usr/local/share/scala目录下的)

vim /etc/profile

#在文件的末尾加入:

export PATH="$PATH:/usr/local/share/scala/bin"

#此处地址需要修改为你的Scala文件地址

输入 :wq! 保存退出。运行配置文件使之生效 :

source /etc/profile

测试安装结果:

[root@localhost ~]# scala

Welcome to Scala 2.13.0 (OpenJDK 64-Bit Server VM, Java 1.8.0_171).

Type in expressions for evaluation. Or try :help.

scala>

命令行输入Scala,输出入页面所示内容则安装成功,反之失败。

1.3 安装spark

1.3.1下载spark

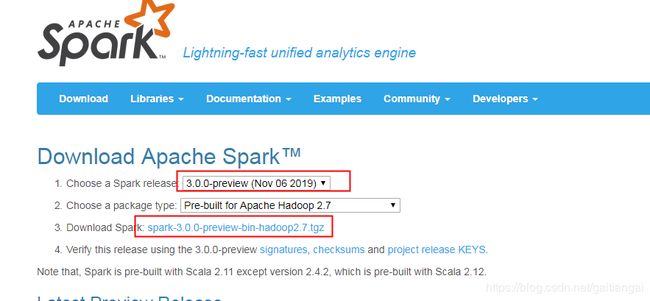

进入官方下载网站:http://spark.apache.org/downloads.html

第 1 个选项选择你想要安装的spark版本,第 2 个选项需要和你的Hadoop版本对应(我们不使用Hadoop,所以我没有安装,此处就随便选了),然后点击第 3 个选项里面的下载链接,进入如下页面:

点击我红色圈中的链接,开始下载。

1.3.2 安装配置spark

解压spark文件即可。无需配置。

tar -zxf 你的spark压缩文件名字

1.4 启动spark服务端

spark有4中启动模式,各模式介绍如下:

方式1:本地模式

Spark单机运行,一般用于开发测试。

方式2:Standalone模式

构建一个由Master+Slave构成的Spark集群,Spark运行在集群中。

方式3:Spark on Yarn模式

Spark客户端直接连接Yarn。不需要额外构建Spark集群。

方式4:Spark on Mesos模式

Spark客户端直接连接Mesos。不需要额外构建Spark集群。

启动方式: spark-shell.sh(Scala)

spark-shell通过不同的参数控制采用何种模式进行。主要涉及master 参数:

--master MASTER_URL

# MASTER_URL 此参数指导spark的启动模式,有以下取值可供选择:

# local.

# spark://host:port,

# yarn,

# mesos://host:port,

eg: 本地模式启动:

./spark-shell --master local

./spark-shell --master local[2] # 本地运行,两个worker线程,理想状态下为本地CPU core数

eg: Standalone模式启动:

./spark-shell --master spark://192.168.1.10:7077

OK,都安装好了,启动方式也都了解了,让我们来试一下, 好激动。

首先我们切换到spark的bin目录,

[root@localhost bin]# ls

beeline pyspark spark-class.cmd spark-sql

beeline.cmd pyspark2.cmd sparkR spark-sql2.cmd

docker-image-tool.sh pyspark.cmd sparkR2.cmd spark-sql.cmd

find-spark-home run-example sparkR.cmd spark-submit

find-spark-home.cmd run-example.cmd spark-shell spark-submit2.cmd

load-spark-env.cmd spark-class spark-shell2.cmd spark-submit.cmd

load-spark-env.sh spark-class2.cmd spark-shell.cmd

[root@localhost bin]# ./spark-shell --master local

Exception in thread "main" java.lang.ExceptionInInitializerError

at org.apache.spark.SparkConf$.<init>(SparkConf.scala:716)

at org.apache.spark.SparkConf$.<clinit>(SparkConf.scala)

at org.apache.spark.SparkConf$$anonfun$getOption$1.apply(SparkConf.scala:389)

at org.apache.spark.SparkConf$$anonfun$getOption$1.apply(SparkConf.scala:389)

at scala.Option.orElse(Option.scala:289)

at org.apache.spark.SparkConf.getOption(SparkConf.scala:389)

at org.apache.spark.SparkConf.get(SparkConf.scala:251)

at org.apache.spark.deploy.SparkHadoopUtil$.org$apache$spark$deploy$SparkHadoopUtil$$appendS3AndSparkHadoopConfigurations(SparkHadoopUtil.scala:463)

at org.apache.spark.deploy.SparkHadoopUtil$.newConfiguration(SparkHadoopUtil.scala:436)

at org.apache.spark.deploy.SparkSubmit$$anonfun$2.apply(SparkSubmit.scala:323)

at org.apache.spark.deploy.SparkSubmit$$anonfun$2.apply(SparkSubmit.scala:323)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.deploy.SparkSubmit.prepareSubmitEnvironment(SparkSubmit.scala:323)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:774)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:161)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:184)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:920)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:929)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.net.UnknownHostException: localhost.localdomain: localhost.localdomain: Name or service not known

at java.net.InetAddress.getLocalHost(InetAddress.java:1505)

at org.apache.spark.util.Utils$.findLocalInetAddress(Utils.scala:946)

at org.apache.spark.util.Utils$.org$apache$spark$util$Utils$$localIpAddress$lzycompute(Utils.scala:939)

at org.apache.spark.util.Utils$.org$apache$spark$util$Utils$$localIpAddress(Utils.scala:939)

at org.apache.spark.util.Utils$$anonfun$localCanonicalHostName$1.apply(Utils.scala:996)

at org.apache.spark.util.Utils$$anonfun$localCanonicalHostName$1.apply(Utils.scala:996)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.util.Utils$.localCanonicalHostName(Utils.scala:996)

at org.apache.spark.internal.config.package$.<init>(package.scala:302)

at org.apache.spark.internal.config.package$.<clinit>(package.scala)

... 20 more

Caused by: java.net.UnknownHostException: localhost.localdomain: Name or service not known

at java.net.Inet6AddressImpl.lookupAllHostAddr(Native Method)

at java.net.InetAddress$2.lookupAllHostAddr(InetAddress.java:928)

at java.net.InetAddress.getAddressesFromNameService(InetAddress.java:1323)

at java.net.InetAddress.getLocalHost(InetAddress.java:1500)

... 29 more

这么多错,慌的一批。没关系,百度一下我就知道。

错误信息这么多,但是仔细看就是这个:

Caused by: java.net.UnknownHostException: localhost.localdomain: Name or service not known

就是说localhost.localdomain这个域名解析失败。应为我们是在本机启动,这就是127.0.01啊,解析失败,咋办呢?既然它解析不出来,那我们就去给他写上:

vim /etc/hosts

添加这么一行:

127.0.0.1 localhost.localdomain localhost

然后:wq!保存退出。

再次启动就正常啦。输出如下,开心!

(py3.7) [root@localhost bin]# ./spark-shell --master local

19/11/20 14:21:21 WARN Utils: Your hostname, localhost.localdomain resolves to a loopback address: 127.0.0.1; using 172.17.20.145 instead (on interface eth0)

19/11/20 14:21:21 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

19/11/20 14:21:23 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://172.17.20.145:4040

Spark context available as 'sc' (master = local, app id = local-1574230900574).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.4.4

/_/

Using Scala version 2.11.12 (OpenJDK 64-Bit Server VM, Java 1.8.0_171)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

二、安装spark客户端

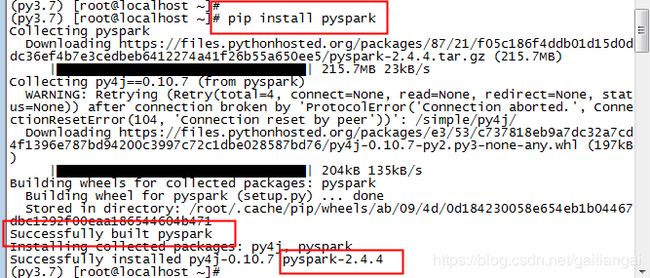

2.1 首先安装Python的spark工具包

pip install pyspark

我这里没有输入需要安装的版本,默认安装的是最新版本2.4.4,

你也可以写入你具体想要安装的版本:

pip install pyspark==2.4.4 #2.4.4就是你想要安装的具体版本号

200多兆呢,耐心等待吧,你也可以去泡一杯茶喝起来。

2.2、测试是否安装成功

编写test_spark.py 文件如下:

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local").setAppName("My App")

sc = SparkContext(conf = conf)

print("1111111111111111")

firstRDD = sc.parallelize([('sfs',27),('hk',26),('czp',25),('hdc',28),('wml',27)])

print("222222222")

t=firstRDD.take(3)

print(t)

执行该代码,观察输出结果。正常输出信息则环境OK,反之则环境不正常,需要排查。

我的执行结果:

(py3.7) [root@localhost 1119]# python test_spark.py

19/11/20 14:40:00 WARN Utils: Your hostname, localhost.localdomain resolves to a loopback address: 127.0.0.1; using 172.17.20.145 instead (on interface eth0)

19/11/20 14:40:00 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

19/11/20 14:40:01 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark""s default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

19/11/20 14:40:04 WARN Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

1111111111111111

222222222

[Stage 0:> ([('sfs', 27), ('hk', 26), ('czp', 25)]

OK, 一切正常。

注意:

但是很多人可能不知道,pyspark里面也有一个spark!你可以切换到pyspark的安装目录下去看。默认Python使用的是pyspark里面的spark,而不是刚刚我们安装的spark。想要系统使用我们刚刚安装的spark,需要将其配置在环境变量中:

vim /etc/profile

#在文件的末尾加入:

export SPARK_HOME="xxxx"

#此处xxx需要修改为你的spark安装目录