Supervised Learning Algorithms 2.3.2: k-Nearest Neighbors, Binary Classification, and Regression

The full code is as follows:

import matplotlib.pyplot as plt
import mglearn
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

x, y = mglearn.datasets.make_forge()  # two-class toy dataset with two features
x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=0)

clf = KNeighborsClassifier(n_neighbors=3)  # classify by the 3 nearest training points
clf.fit(x_train, y_train)
print("test set predictions:{}".format(clf.predict(x_test)))
print('test set accuracy:{:.3f}'.format(clf.score(x_test, y_test)))

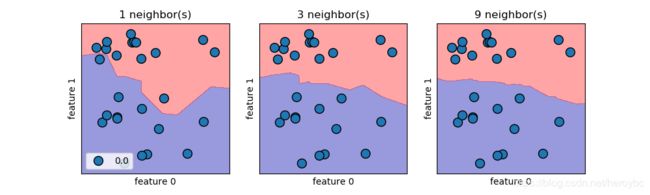
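To make clear what `clf.predict` and `clf.score` compute, here is a minimal pure-Python sketch of k-nearest-neighbors classification by majority vote, assuming Euclidean distance and equally weighted neighbors. It is not scikit-learn's implementation. The names `knn_predict`, `accuracy`, `toy_points`, and `toy_labels` are hypothetical, and the toy data is made up for illustration.

```python
from collections import Counter
import math


def knn_predict(train_points, train_labels, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest training points."""
    # Sort training indices by Euclidean distance to the query point.
    order = sorted(
        range(len(train_points)),
        key=lambda i: math.dist(train_points[i], query),
    )
    # Count the labels of the k closest points and return the most common one.
    votes = Counter(train_labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def accuracy(train_points, train_labels, test_points, test_labels, k=3):
    """Fraction of test points whose predicted label matches the true label."""
    hits = sum(
        knn_predict(train_points, train_labels, p, k) == label
        for p, label in zip(test_points, test_labels)
    )
    return hits / len(test_points)


# Hypothetical toy data: two well-separated clusters in 2D.
toy_points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]
toy_labels = [0, 0, 0, 1, 1, 1]

print(knn_predict(toy_points, toy_labels, (2.0, 2.0), k=3))  # prints 0
print(accuracy(toy_points, toy_labels, [(1.0, 2.0), (9.0, 9.0)], [0, 1], k=3))  # prints 1.0
```

In this sketch a tied vote goes to whichever label was counted first, which may differ from how a library resolves ties.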

fig, axes = plt.subplots(1, 3, figsize=(10, 3))
for n_neighbors, ax in zip([1, 3, 9], axes):
    clf = KNeighborsClassifier(n_neighbors=n_neighbors).fit(x, y)  # fit returns the estimator itself
    mglearn.plots.plot_2d_separator(clf, x, fill=True, eps=0.5, ax=ax, alpha=.4)
    mglearn.discrete_scatter(x[:, 0], x[:, 1], y, ax=ax)  # pass y so points are marked by class
    ax.set_title('{} neighbor(s)'.format(n_neighbors))
    ax.set_xlabel('feature 0')
    ax.set_ylabel('feature 1')
axes[0].legend(loc=3)
plt.show()

from sklearn.datasets import load_breast_cancer
cancer = load_breast_cancer()
x_train, x_test, y_train, y_test = train_test_split(
    cancer.data, cancer.target, stratify=cancer.target, random_state=66)

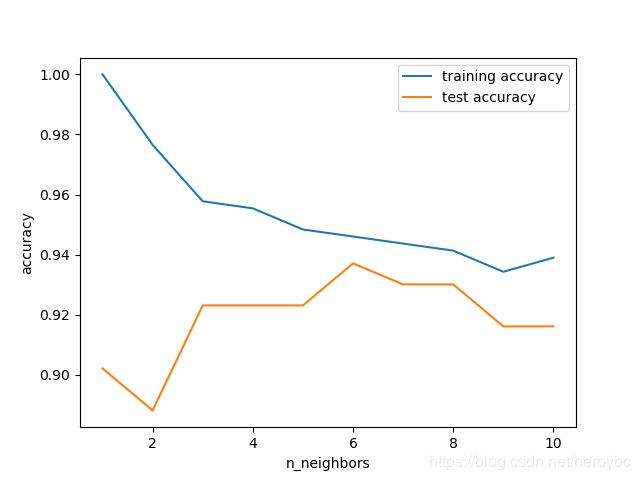
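The `stratify=cancer.target` argument asks `train_test_split` to keep the class proportions roughly the same in the training and test sets. The idea can be sketched in pure Python as below. This is a simplified illustration, not scikit-learn's algorithm. The function `stratified_split` and the label list are hypothetical, and the 0.25 test fraction mirrors the default `train_test_split` uses when no size is given.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.25, seed=0):
    """Return (train_indices, test_indices) with roughly equal class ratios in both."""
    rng = random.Random(seed)
    # Group sample indices by class label.
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train_indices, test_indices = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        # Take the same fraction of every class for the test set.
        n_test = round(len(indices) * test_fraction)
        test_indices.extend(indices[:n_test])
        train_indices.extend(indices[n_test:])
    return sorted(train_indices), sorted(test_indices)


# Hypothetical imbalanced labels: 12 samples of class 0, 4 of class 1.
toy_labels = [0] * 12 + [1] * 4
train_idx, test_idx = stratified_split(toy_labels, test_fraction=0.25, seed=0)
print([toy_labels[i] for i in test_idx])  # prints [0, 0, 0, 1]
```

A plain random split of so few samples could easily leave the test set without any class 1 examples. Splitting per class avoids that.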

training_accuracy = []
test_accuracy = []
neighbors_settings = range(1, 11)  # try n_neighbors from 1 to 10
for n_neighbors in neighbors_settings:
    clf = KNeighborsClassifier(n_neighbors=n_neighbors)
    clf.fit(x_train, y_train)
    training_accuracy.append(clf.score(x_train, y_train))  # accuracy on the training set
    test_accuracy.append(clf.score(x_test, y_test))  # accuracy on the held-out test set

plt.plot(neighbors_settings, training_accuracy, label='training accuracy')
plt.plot(neighbors_settings, test_accuracy, label='test accuracy')
plt.ylabel('accuracy')
plt.xlabel('n_neighbors')
plt.legend()
plt.show()

from sklearn.neighbors import KNeighborsRegressor

x, y = mglearn.datasets.make_wave(n_samples=40)  # one-feature regression toy dataset
x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=0)

reg = KNeighborsRegressor(n_neighbors=3)  # predict the mean target of the 3 nearest neighbors
reg.fit(x_train, y_train)
print('test set predictions:{}'.format(reg.predict(x_test)))
print('test set r^2 :{:.2f}'.format(reg.score(x_test, y_test)))

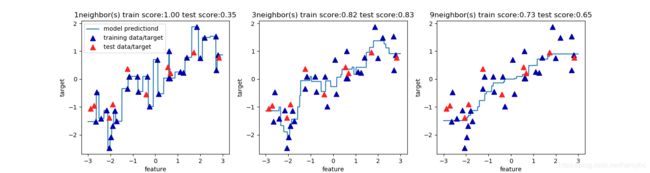
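For regression, the k-nearest-neighbors prediction is the mean target of the k closest training points, and `reg.score` reports the coefficient of determination R^2. The sketch below shows both in pure Python, assuming a single feature and equally weighted neighbors. It illustrates the idea and is not scikit-learn's code. The names `knn_regress`, `r2_score`, `toy_x`, and `toy_y` are hypothetical, and the data is made up.

```python
def knn_regress(train_x, train_y, query, k=3):
    """Predict a target as the mean target of the k nearest training points (1D feature)."""
    # Sort training indices by absolute distance to the query value.
    order = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - query))
    nearest = order[:k]
    return sum(train_y[i] for i in nearest) / len(nearest)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical toy data lying on y = 2x.
toy_x = [0.0, 1.0, 2.0, 3.0, 4.0]
toy_y = [0.0, 2.0, 4.0, 6.0, 8.0]

print(knn_regress(toy_x, toy_y, 2.0, k=3))         # mean of targets at x = 1, 2, 3 -> 4.0
print(r2_score([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))  # perfect predictions -> 1.0
print(r2_score([1.0, 2.0, 3.0], [2.0, 2.0, 2.0]))  # always predicting the mean -> 0.0
```

An R^2 of 1 means perfect predictions, 0 means the model does no better than always predicting the mean of the true targets, and negative values are possible for worse models.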

fig, axes = plt.subplots(1, 3, figsize=(15, 4))
line = np.linspace(-3, 3, 1000).reshape(-1, 1)  # 1,000 evenly spaced points between -3 and 3
for n_neighbors, ax in zip([1, 3, 9], axes):
    reg = KNeighborsRegressor(n_neighbors=n_neighbors)  # predict with 1, 3, or 9 neighbors
    reg.fit(x_train, y_train)
    ax.plot(line, reg.predict(line))
    ax.plot(x_train, y_train, '^', c=mglearn.cm2(0), markersize=8)
    ax.plot(x_test, y_test, '^', c=mglearn.cm2(1), markersize=8)
    ax.set_title('{} neighbor(s)\n train score:{:.2f} test score:{:.2f}'.format(
        n_neighbors, reg.score(x_train, y_train), reg.score(x_test, y_test)))
    ax.set_xlabel('feature')
    ax.set_ylabel('target')
axes[0].legend(['model predictions', 'training data/target', 'test data/target'], loc='best')
plt.show()