import jieba

from wordcloud import WordCloud

import imageio

mask = imageio.imread('china.jpg')

#读取文件

with open('novel/hongloumeng.txt','r',encoding='UTF-8') as f:

data = f.read()

#分词

word_list =jieba.lcut(data)

# print(word_list)

#创建一个无关词组的集合

exclude={"什么","一个","我们","你们","如今","说道","知道","姑娘","起来","这里","出来","众人","那里","自己","一面"

,"只见","两个","没有","怎么","不是","不知","这个","听见","这样","进来","咱们","就是","东西","告诉"

,"回来","只是","大家","只得","这些","他们","丫头","不敢","出去","所以","不过","不好","姐姐","姐姐"

,"的话","一时","鸳鸯","鸳鸯","心里","不能","过来","她们","如此","银子","今日","二人","答应","黛玉"

,"宝玉","凤姐儿","贾母"}#,"","","",

#创建一个字典,存储数据

count = {}

#筛选数据,名字小于2的删掉,大于等于二的存在字典

for i in(word_list):

if len(i) <= 1:

continue

else:

count[i] = count.get(i, 0) + 1

# print(count)

#把重复的人物合并成一个名字

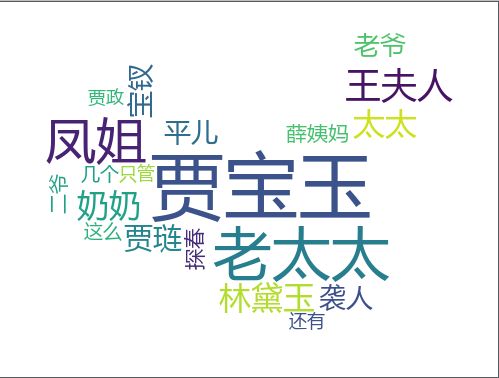

count['贾宝玉'] = count['宝玉'] +count['宝二爷']+count['贾宝玉']

count['林黛玉'] = count['黛玉']+count['无字'] +count['林黛玉']

count['凤姐']=count['凤姐儿']+count['凤姐']

count['老太太'] = count['老太太']+count['贾母']

#把多余的词组删除

for word in exclude:

del count[word]

#将字典转换成列表,并排序

items = list(count.items())

items.sort(key=lambda x: x[1], reverse=True)

#显示排行前10的人物

role_list = []

for i in range(20):

role, count = items[i]

print(role, count)

for _ in range (count):

role_list.append(role)

# print(role_list)

text = " ".join(role_list)

print(text)

WordCloud(

background_color='white',

mask=mask,

font_path='msyh.ttc',

collocations=False

).generate(text).to_file('红楼梦.png')